FORTRAN(ID:8/for047)

Backus et al high-level compiler

for FORmula TRANslating

Design begun in 1954, compiler released April 1957. Based on Backus's earlier work with Speedcoding, but involving other programmers from many institutions and organisations.

The first and still the most widely used language for numerical calculations.

Particularly good language for processing numerical data, but it does not lend itself very well to organizing large programs.

Nonrecursive, efficient.

from BRL 1960 manifest

"Fortran (Automatic Formula Translation).

This is a program which allows expression of scientific problems in terms of mathematical formulae, with the formulae completely acceptable to the system. There is flexibility in the program allowing for expansion of the language and provision for inclusion of a library of programs previously written."

Harlan Herrick invented GOTO

Roy Nutt invented FORMAT

Places

People:

- John Backus

- Sheldon Best

- Richard Goldberg

- Lois Haibt

- Harlan Herrick

- Robert Nelson

- Roy Nutt

- David Sayre

- Peter Sheridan

- Irving Ziller

Hardware:

- IBM 704 IBM

Related languages

| From | | To | Relationship |
|---|---|---|---|
| ALGAE | => | FORTRAN | Influence |
| George | => | FORTRAN | Influence |
| Laning and Zierler | => | FORTRAN | Influence |
| SPEEDCODING | => | FORTRAN | Evolution of |
| FORTRAN | => | COMTRAN | Equal portability Moderate Influence |
| FORTRAN | => | FORTRAN II | Evolution of |
| FORTRAN | => | FORTRANSIT | Subset |
| FORTRAN | => | PACT I | Moderate Influence |
| FORTRAN | => | SAKO | Influence |
| FORTRAN | => | UNICODE | Influence |

References:

Automatic coding is a means for reducing problem costs and is one of the answers to a programmer's prayer. Since every problem must be reduced to a series of elementary steps and transformed into computer instructions, any method which will speed up and reduce the cost of this process is of importance.

Each and every problem must go through the same stages:

Analysis,

Programming,

Coding,

Debugging,

Production Running,

Evaluation

The process of analysis cannot be assisted by the computer itself. For scientific problems, mathematical or engineering, the analysis includes selecting the method of approximation, setting up specifications for accuracy of sub-routines, determining the influence of roundoff errors, and finally presenting a list of equations supplemented by definition of tolerances and a diagram of the operations. For the commercial problem, again a detailed statement describing the procedure and covering every eventuality is required. This will usually be presented in English words and accompanied again by a flow diagram.

The analysis is the responsibility of the mathematician or engineer, the methods or systems man. It defines the problem and no attempt should be made to use a computer until such an analysis is complete.

The job of the programmer is that of adapting the problem definition to the abilities and idiosyncrasies of the particular computer. He will be vitally concerned with input and output and with the flow of operations through the computer. He must have a thorough knowledge of the computer components and their relative speeds and virtues.

Receiving diagrams and equations or statements from the analysis he will produce detailed flow charts for transmission to the coders. These will differ from the charts produced by the analysts in that they will be suited to a particular computer and will contain more detail. In some cases, the analyst and programmer will be the same person.

It is then the job of the coder to reduce the flow charts to the detailed list of computer instructions. At this point, an exact and comprehensive knowledge of the computer, its code, coding tricks, details of sentinels and of pulse code are required. The computer is an extremely fast moron. It will, at the speed of light, do exactly what it is told to do, no more, no less.

After the coder has completed the instructions, it must be "debugged". Few and far between and very rare are those coders, human beings, who can write programs, perhaps consisting of several hundred instructions, perfectly, the first time. The analyzers, automonitors, and other mistake hunting routines that have been developed and reported on bear witness to the need of assistance in this area. When the program has been finally debugged, it is ready for production running and thereafter for evaluation or for use of the results.

Automatic coding enters these operations at four points. First, it supplies to the analysts, information about existing chunks of program, subroutines already tested and debugged, which he may choose to use in his problem. Second, it supplies the programmer with similar facilities not only with respect to the mathematics or processing used, but also with respect to using the equipment. For example, a generator may be provided to make editing routines to prepare data for printing, or a generator may be supplied to produce sorting routines.

It is in the third phase that automatic coding comes into its own, for here it can release the coder from most of the routine and drudgery of producing the instruction code. It may, someday, replace the coder or release him to become a programmer. Master or executive routines can be designed which will withdraw subroutines and generators from a library of such routines and link them together to form a running program.

If a routine is produced by a master routine from library components, it does not require the fourth phase - debugging - from the point of view of the coding. Since the library routines will all have been checked and the compiler checked, no errors in coding can be introduced into the program (all of which presupposes a completely checked computer). The only bugs that can remain to be detected and exposed are those in the logic of the original statement of the problem.

Thus, one advantage of automatic coding appears, the reduction of the computer time required for debugging. A still greater advantage, however, is the replacement of the coder by the computer. It is here that the significant time reduction appears. The computer processes the units of coding as it does any other units of data --accurately and rapidly. The elapsed time from a programmer's flow chart to a running routine may be reduced from a matter of weeks to a matter of minutes. Thus, the need for some type of automatic coding is clear.

Actually, it has been evident ever since the first digital computers first ran. Anyone who has been coding for more than a month has found himself wanting to use pieces of one problem in another. Every programmer has detected like sequences of operations. There is a ten year history of attempts to meet these needs.

The subroutine, the piece of coding, required to calculate a particular function can be wired into the computer and an instruction added to the computer code. However, this construction in hardware is costly and only the most frequently used routines can be treated in this manner. Mark I at Harvard included several such routines: sin x, log10 x, 10^x. However, they had one fault, they were inflexible. Always, they delivered complete accuracy to twenty-two digits. Always, they treated the most general case. Other computers, Mark II and SEAC have included square roots and other subroutines partially or wholly built in. But such subroutines are costly and invariant and have come to be used only when speed without regard to cost is the primary consideration.

It was in the ENIAC that the first use of programmed subroutines appeared. When a certain series of operations was completed, a test could be made to see whether or not it was necessary to repeat them and sequencing control could be transferred on the basis of this test, either to repeat the operations or go on to another set.

At Harvard, Dr. Aiken had envisioned libraries of subroutines. At Pennsylvania, Dr. Mauchly had discussed the techniques of instructing the computer to program itself. At Princeton, Dr. von Neumann had pointed out that if the instructions were stored in the same fashion as the data, the computer could then operate on these instructions. However, it was not until 1951 that Wheeler, Wilkes, and Gill in England, preparing to run the EDSAC, first set up standards, created a library, and the required satellite routines and wrote a book about it, "The Preparation of Programs for Electronic Digital Computers". In this country, comprehensive automatic techniques first appeared at MIT where routines to facilitate the use of Whirlwind I by students of computers and programming were developed.

Many different automatic coding systems have been developed - Seesaw, Dual, Speed-Code, the Boeing Assembly, and others for the 701, the A-series of compilers for the UNIVAC, the Summer Session Computer for Whirlwind, MAGIC for the MIDAC and Transcode for the Ferranti Computer at Toronto. The list is long and rapidly growing longer. In the process of development are Fortran for the 704, BIOR and GP for the UNIVAC, a system for the 705, and many more. In fact, all manufacturers now seem to be including an announcement of the form, "a library of subroutines for standard mathematical analysis operations is available to users", "interpretive subroutines, easy program debugging - ... - automatic program assembly techniques can be used."

The automatic routines fall into three major classes. Though some may exhibit characteristics of one or more, the classes may be so defined as to distinguish them.

1) Interpretive routines which translate a machine-like pseudocode into machine code, refer to stored subroutines and execute them as the computation proceeds — the MIT Summer Session Computer, 701 Speed-Code, UNIVAC Short-Code are examples.

2) Compiling routines, which also read a pseudo-code, but which withdraw subroutines from a library and operate upon them, finally linking the pieces together to deliver, as output, a complete specific program for future running: UNIVAC A-compilers, BIOR, and the NYU Compiler System.

3) Generative routines may be called for by compilers, or may be independent routines. Thus, a compiler may call upon a generator to produce a specific input routine. Or, as in the sort-generator, the submission of the specifications, such as item-size and position of key, to produce a routine to perform the desired operation. The UNIVAC sort-generator, the work of Betty Holberton, was the first major automatic routine to be completed. It was finished in 1951 and has been in constant use ever since. At the University of California Radiation Laboratory, Livermore, an editing generator was developed by Merrit Ellmore; later a routine was added to even generate the pseudo-code.
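The generator idea in class 3 can be illustrated with a small modern sketch. The Python below is not the UNIVAC sort-generator: `make_sort_routine`, `item_size`, `key_pos` and `key_len` are hypothetical names chosen for this illustration. Given a specification (item size and key position), it produces a specialised routine that sorts a flat string of fixed-width items.

```python
def make_sort_routine(item_size, key_pos, key_len):
    """Generate a sort routine for fixed-width items (hypothetical sketch).

    item_size: number of characters in each item
    key_pos:   offset of the sort key inside an item
    key_len:   length of the sort key
    """
    if key_pos < 0 or key_len <= 0 or key_pos + key_len > item_size:
        raise ValueError("key must lie inside the item")

    def sort_routine(data):
        # Refuse input that is not a whole number of items.
        if len(data) % item_size != 0:
            raise ValueError("data length is not a multiple of item_size")
        # Cut the flat string into fixed-width items.
        items = [data[i:i + item_size] for i in range(0, len(data), item_size)]
        # Order the items by the key field named in the specification.
        items.sort(key=lambda item: item[key_pos:key_pos + key_len])
        return "".join(items)

    return sort_routine


# Usage: 6-character items whose 2-character key starts at offset 4.
sort_by_code = make_sort_routine(item_size=6, key_pos=4, key_len=2)
print(sort_by_code("BOLT30NUTS10GEAR20"))
```

The specification is supplied once, and the routine that comes back can be applied to any number of data sets with that layout, which is the division of labour the text describes.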

The type of automatic coding used for a particular computer is to some extent dependent upon the facilities of the computer itself. The early computers usually had but a single input-output device, sometimes even manually operated. It was customary to load the computer with program and data, permit it to "cook" on them, and when it signalled completion, the results were unloaded. This procedure led to the development of the interpretive type of routine. Subroutines were stored in closed form and a main program referred to them as they were required. Such a procedure conserved valuable internal storage space and speeded the problem solution.

With the production of computer systems, like the UNIVAC, having, for all practical purposes, infinite storage under the computer's own direction, new techniques became possible. A library of subroutines could be stored on tape, readily available to the computer. Instead of looking up a subroutine every time its operation was needed, it was possible to assemble the required subroutines into a program for a specific problem. Since most problems contain some repetitive elements, this was desirable in order to make the interpretive process a one-time operation.
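The difference between looking up a subroutine each time and assembling a program once can be sketched in a few lines. This is a modern illustration only, assuming a toy library of three operations; `LIBRARY`, `interpret` and `compile_program` are invented names and do not correspond to any of the historical systems mentioned.

```python
import math

# A tiny "library" of checked subroutines, keyed by pseudo-code name.
LIBRARY = {
    "sqrt": math.sqrt,
    "double": lambda x: 2.0 * x,
    "negate": lambda x: -x,
}


def interpret(pseudo_code, value):
    """Interpretive style: look up each subroutine every time it is needed."""
    for name in pseudo_code:
        value = LIBRARY[name](value)  # lookup happens on every execution
    return value


def compile_program(pseudo_code):
    """Compiling style: resolve the library references once, then link."""
    steps = [LIBRARY[name] for name in pseudo_code]  # one-time lookup

    def program(value):
        for step in steps:  # no library lookup while running
            value = step(value)
        return value

    return program


pseudo_code = ["sqrt", "double", "negate"]
run = compile_program(pseudo_code)
print(interpret(pseudo_code, 16.0), run(16.0))
```

Both paths give the same result; the compiled form simply pays the lookup cost once, which matters when the same program is run repeatedly.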

Among the earliest such routines were the A-series of compilers of which A-0 first ran in May 1952. The A-2 compiler, as it stands at the moment, commands a library of mathematical and logical subroutines of floating decimal operations. It has been successfully applied to many different mathematical problems. In some cases, it has produced finished, checked and debugged programs in three minutes. Some problems have taken as long as eighteen minutes to code. It is, however, limited by its library which is not as complete as it should be and by the fact that since it produces a program entirely in floating decimal, it is sometimes wasteful of computer time. However, mathematicians have been able rapidly to learn to use it. The elapsed time for problems - the programming time plus the running time - has been materially reduced. Improvements and techniques now known, derived from experience with the A-series, will make it possible to produce better compiling systems. Currently, under the direction of Dr. Herbert F. Mitchell, Jr., the BIOR compiler is being checked out. This is the pioneer - the first of the true data-processing compilers.

At present, the interpretive and compiling systems are as many and as different as were the computers five years ago. This is natural in the early stages of a development. It will be some time before anyone can say this is the way to produce automatic coding.

Even the pseudo-codes vary widely. For mathematical problems, Laning and Zierler at MIT have modified a Flexowriter and the pseudo-code in which they state problems clings very closely to the usual mathematical notation. Faced with the problem of coding for ENIAC, EDVAC and/or ORDVAC, Dr. Gorn at Aberdeen has been developing a "universal code". A problem stated in this universal pseudo-code can then be presented to an executive routine pertaining to the particular computer to be used to produce coding for that computer. Of the Bureau of Standards, Dr. Wegstein in Washington and Dr. Huskey on the West Coast have developed techniques and codes for describing a flow chart to a compiler.

In each case, the effort has been three-fold:

1) to expand the computer's vocabulary in the direction required by its users.

2) to simplify the preparation of programs both in order to reduce the amount of information about a computer a user needed to learn, and to reduce the amount he needed to write.

3) to make it easy, to avoid mistakes, to check for them, and to detect them.

The ultimate pseudo-code is not yet in sight. There probably will be at least two in common use; one for the scientific, mathematical and engineering problems using a pseudo-code closely approximating mathematical symbolism; and a second, for the data-processing, commercial, business and accounting problems. In all likelihood, the latter will approximate plain English.

The standardization of pseudo-code and corresponding subroutine is simple for mathematical problems. As a pseudo-code "sin x" is practical and suitable for "compute the sine of x", "PWT" is equally obvious for "compute Philadelphia Wage Tax", but very few commercial subroutines can be standardized in such a fashion. It seems likely that a pseudocode "gross-pay" will call for a different subroutine in every installation. In some cases, not even the vocabulary will be common since one computer will be producing pay checks and another maintaining an inventory.

Thus, future compiling routines must be independent of the type of program to be produced. Just as there are now general-purpose computers, there will have to be general-purpose compilers. Auxiliary to the compilers will be vocabularies of pseudo-codes and corresponding volumes of subroutines. These volumes may differ from one installation to another and even within an installation. Thus, a compiler of the future will have a volume of floating-decimal mathematical subroutines, a volume of inventory routines, and a volume of payroll routines. While gross-pay may appear in the payroll volume both at installation A and at installation B, the corresponding subroutine or standard input item may be completely different in the two volumes. Certain more general routines, such as input-output, editing, and sorting generators will remain common and therefore are the first that are being developed.

There is little doubt that the development of automatic coding will influence the design of computers. In fact, it is already happening. Instructions will be added to facilitate such coding. Instructions added only for the convenience of the programmer will be omitted since the computer, rather than the programmer, will write the detailed coding. However, all this will not be completed tomorrow. There is much to be learned. So far as each group has completed an interpreter or compiler, they have discovered in using it "what they really wanted to do". Each executive routine produced has led to the writing of specifications for a better routine.

1955 will mark the completion of several ambitious executive routines. It will also see the specifications prepared by each group for much better and more efficient routines since testing in use is necessary to discover these specifications. However, the routines now being completed will materially reduce the time required for problem preparation; that is, the programming, coding, and debugging time. One warning must be sounded, these routines cannot define a problem nor adapt it to a computer. They only eliminate the clerical part of the job.

Analysis, programming, definition of a problem required 85%, coding and debugging 15%, of the preparation time. Automatic coding materially reduces the latter time. It assists the programmer by defining standard procedures which can be frequently used. Please remember, however, that automatic programming does not imply that it is now possible to walk up to a computer, say "write my payroll checks", and push a button. Such efficiency is still in the science-fiction future.

in the High Speed Computer Conference, Louisiana State University, 16 Feb. 1955, Remington Rand, Inc. 1955

We asked Mr. Bemer about the multi-computer language referred to in the Times. He said that I.B.M. has already developed two synthetic languages for its computers: Fortran, which is strictly for scientific use, and Print I, which can handle both scientific and commercial information. By I.B.M.’s strict standards, both languages leave much to be desired (what strikes us as miraculous is mere irritating clumsiness to I.B.M.); for example, though the point has been reached where computers can translate scientific data from Russian into English at the rate of four or five sentences a minute, the data requires pre-editing and post-editing. "We’re out to develop a language that will let computers think pretty much as we do - make ready use of their stored memories and be capable of free association," Bemer said. "A computer has been designed that plays checkers and has beaten all comers so far. Chess is still beyond it, but won’t be for long. There’s no telling how many ticklish problems computers will someday be able to solve. I foresee the time when every major city in the country will have its community computer. Grocers, doctors, lawyers - they will all throw problems to the computer and will all have their problems solved. Some people fear that these machines will put them out of work. On the contrary, they permit the human mind to devote itself to what it can do best. We will always be able to outthink machines." Triumphantly, Bemer turned a sign on his desk in our direction. It read, "REFLEXIONE."

Extract: An advertisement for programmers

The International Business Machines people ran an ad in the Times a few weeks ago asking any "research programmers for digital computers" who might be interested in taking part in an "expanding research effort in the development and automatic translation of a multi-computer language" to apply to Mr. R. W. Bemer, assistant manager of the I.B.M. programming-research department.


in Rosen, Saul (ed) Programming Systems & Languages. McGraw Hill, New York, 1967.

in [JCC 11] Proceedings of the Western Joint Computer Conference, Los Angeles February 1957

Let me elaborate these points with examples. UNICODE is expected to require about fifteen man-years. Most modern assembly systems must take from six to ten man-years. SCAT expects to absorb twelve people for most of a year. The initial writing of the 704 FORTRAN required about twenty-five man-years. Split among many different machines, IBM's Applied Programming Department has over a hundred and twenty programmers. Sperry Rand probably has more than this, and for utility and automatic coding systems only! Add to these the number of customer programmers also engaged in writing similar systems, and you will see that the total is overwhelming.

Perhaps five to six man-years are being expended to write the Model 2 FORTRAN for the 704, trimming bugs and getting better documentation for incorporation into the even larger supervisory systems of various installations. If available, more could undoubtedly be expended to bring the original system up to the limit of what we can now conceive. Maintenance is a very sizable portion of the entire effort going into a system.

Certainly, all of us have a few skeletons in the closet when it comes to adapting old systems to new machines. Hardly anything more than the flow charts is reusable in writing 709 FORTRAN; changes in the characteristics of instructions, and tricky coding, have done for the rest. This is true of every effort I am familiar with, not just IBM's.

What am I leading up to? Simply that the day of diverse development of automatic coding systems is either out or, if not, should be. The list of systems collected here illustrates a vast amount of duplication and incomplete conception. A computer manufacturer should produce both the product and the means to use the product, but this should be done with the full co-operation of responsible users. There is a gratifying trend toward such unification in such organizations as SHARE, USE, GUIDE, DUO, etc. The PACT group was a shining example in its day. Many other coding systems, such as FLAIR, PRINT, FORTRAN, and USE, have been done as the result of partial co-operation. FORTRAN for the 705 seems to me to be an ideally balanced project, the burden being carried equally by IBM and its customers.

Finally, let me make a recommendation to all computer installations. There seems to be a reasonably sharp distinction between people who program and use computers as a tool and those who are programmers and live to make things easy for the other people. If you have the latter at your installation, do not waste them on production and do not waste them on a private effort in automatic coding in a day when that type of project is so complex. Offer them in a cooperative venture with your manufacturer (they still remain your employees) and give him the benefit of the practical experience in your problems. You will get your investment back many times over in ease of programming and the guarantee that your problems have been considered.

Extract: IT, FORTRANSIT, SAP, SOAP, SOHIO

The IT language is also showing up in future plans for many different computers. Case Institute, having just completed an intermediate symbolic assembly to accept IT output, is starting to write an IT processor for UNIVAC. This is expected to be working by late summer of 1958. One of the original programmers at Carnegie Tech spent the last summer at Ramo-Wooldridge to write IT for the 1103A. This project is complete except for input-output and may be expected to be operational by December, 1957. IT is also being done for the IBM 705-1, 2 by Standard Oil of Ohio, with no expected completion date known yet. It is interesting to note that Sohio is also participating in the 705 FORTRAN effort and will undoubtedly serve as the basic source of FORTRAN-to-IT-to-FORTRAN translational information. A graduate student at the University of Michigan is producing SAP output for IT (rather than SOAP) so that IT will run on the 704; this, however, is only for experience; it would be much more profitable to write a pre-processor from IT to FORTRAN (the reverse of FORTRANSIT) and utilize the power of FORTRAN for free.

in "Proceedings of the Fourth Annual Computer Applications Symposium", Armour Research Foundation, Illinois Institute of Technology, Chicago, Illinois 1957

Introduction

It is possible so to standardize programming and coding for general purpose, automatic, high-speed, digital computing machines that most of the process becomes mechanical and, to a great degree, independent of the machine. To the extent that the programming and coding process is mechanical a machine may be made to carry it out, for the procedure is just another data processing one.

If the machine has a common storage for its instructions along with any other data, it can even carry out each instruction immediately after having coded it. This mode of operation in automatic coding is known as 'interpretive'. There have been a number of interpretive automatic coding procedures on various machines, notably MIT's Summer Session and Comprehensive systems for Whirlwind, Michigan's Magic System for MIDAC, and IBM's Speedcode; in addition there have been some interpretive systems beginning essentially with mathematical formulae as the pseudocode, such as MIT's Algebraic Coding, one for the SEAC, and others.

We will be interested, however, in considering the coding of a routine as a separate problem, whose result is the final code. Automatic coding which imitates such a process is, in the main, non-interpretive. Notable examples are the A-2 and B-O compiler systems, and the G-P (general purpose) system, all for UNIVAC, and IBM's FORTRAN, of the algebraic coding type.

Although, unlike interpretive systems, compilers do not absolutely require their machines to possess common storage of instructions and the data they process, they are considerably simpler when their machines do have this property. Much more necessary for the purpose is that the machines possess a reasonable amount of internal erasable storage, and the ability to exercise discrimination among alternatives by simple comparison instructions. It will be assumed that the machines under discussion, whether we talk about standardized or about automatic coding, possess these three properties, namely, common storage, erasable storage, and discrimination. Such machines are said to possess "loop control".
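The three properties named above can be made concrete with a toy machine. The sketch below is a modern illustration, not a model of any machine discussed in the paper: the instruction set (`ADD`, `JLT`, `HALT`) and the memory layout are invented for the example. Instructions and data share one list (common storage), cells are overwritten as the program runs (erasable storage), and a comparison decides the next instruction (discrimination). Together these give a loop.

```python
def run(memory):
    """Run a toy machine whose instructions and data share one memory.

    Hypothetical instruction set, invented for this sketch:
      ("ADD", a, b, dst)   memory[dst] = memory[a] + memory[b]
      ("JLT", a, b, addr)  jump to addr if memory[a] < memory[b]
      ("HALT",)            stop
    """
    pc = 0
    while True:
        op = memory[pc]
        if op[0] == "HALT":
            return memory
        if op[0] == "ADD":
            _, a, b, dst = op
            memory[dst] = memory[a] + memory[b]  # erasable storage: overwrite
            pc += 1
        elif op[0] == "JLT":
            _, a, b, addr = op
            pc = addr if memory[a] < memory[b] else pc + 1  # discrimination
        else:
            raise ValueError(f"unknown instruction {op!r}")


# Sum the integers 1..5. Cells 0-3 hold instructions, cells 4-7 hold data.
memory = [
    ("ADD", 4, 7, 4),  # 0: counter = counter + 1
    ("ADD", 5, 4, 5),  # 1: total = total + counter
    ("JLT", 4, 6, 0),  # 2: repeat while counter < limit
    ("HALT",),         # 3: stop
    0,                 # 4: counter
    0,                 # 5: total
    5,                 # 6: limit
    1,                 # 7: constant one
]
print(run(memory)[5])
```

Removing any one of the three properties breaks the loop: without the comparison the program cannot stop repeating, and without overwritable cells the counter and total cannot advance.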

We will be interested in that part of the coding process which all machines having loop control and a sufficiently large storage can carry out in essentially the same manner; it is this part of coding that is universal and capable of standardization by a universal pseudo-code.

The choice of such a pseudo-code is, of course, a matter of convention, and is to that extent arbitrary, provided it is

(1) a language rich enough to permit the description of anything these machines can do, and

(2) a language whose basic vocabulary is not too microscopically detailed.

The first requirement is needed for universality of application; the second is necessary if we want to be sure that the job of hand coding with the pseudo-code is considerably less detailed than the job of hand coding directly in machine code. Automatic coding is pointless practically if this second condition is not fulfilled.

In connection with the first condition we should remark on what the class of machines can produce; in connection with the second we should give some analysis of the coding process. In either case we should say a few things about the logical concept of computability and the syntax of machine codes.

in [ACM] JACM 4(3) July 1957

GENERAL INTRODUCTION

Every type of electronic computer is designed to respond to a special code, called a "machine language, " which differs for different types of computers. A program, or set of instructions, telling a computer what steps to perform to solve a problem must ultimately be given to the computer in its own language. However, the FORTRAN System makes it unnecessary for the 704 programmer to learn the 704 machine language.

The FORTRAN System has been developed to enable the programmer to state in a relatively simple language, resembling familiar usage, the steps of a procedure to be carried out by the 704 computer, and to obtain automatically from the 704 an efficient machine language program for this procedure. The FORTRAN System has two parts: the FORTRAN language, and the FORTRAN translator or executive routine.

The FORTRAN language consists of 32 types of statements, which may be grouped into four classifications: arithmetic statements, control statements, input/output statements, and specification statements. The FORTRAN programmer uses this language to state the steps ultimately to be carried out by the 704. The FORTRAN translator is a large set of machine language instructions which causes the 704 to translate a FORTRAN program into an efficient (or "optimized") machine language program. The 704 does not respond directly to a FORTRAN program, but to the machine language program produced by means of the FORTRAN translator. The name FORTRAN comes from "FORmula TRANslation" and was chosen because many of the statements which this system translates look like algebraic formulas.

The purpose of the FORTRAN Primer is to introduce the reader to the FORTRAN language, which has been designed as a concise, convenient means of stating the steps to be carried out by the 704 in the solution of many types of problems, such as frequently occur in engineering, physics, and other scientific and technical fields. No prerequisite knowledge of computing technology or techniques on the part of the reader is assumed.

Once the 704 has been told how to solve a problem, it can take in data from punched cards at a rate exceeding 1000 numbers per minute, perform arithmetic steps at an approximate rate of 10,000 per second, and print results at a rate of about 750 per minute. Thus the 704 can do in minutes calculations which would require weeks or months to do manually.

Virtually any numerical procedure may be expressed in the FORTRAN language. The FORTRAN System is intended to reduce substantially the time required to produce an efficient machine language program for the numerical solution of a problem, and to relieve the programmer of a considerable amount of manual "clerical" work, minimizing the possibility of human error by relegating the mechanics of coding and optimization to the 704. In this primer, as an aid to efficient study, the FORTRAN language is approached cumulatively through three stages, Section I, Section II, and Section III. The division into three sections is convenient for the description of successively more complex problem-solving procedures.

By using only the types of statements presented in Section I, it is possible to direct the 704 to take individual numbers from a card reader; combine them according to formulas involving arithmetic operations and standard functions such as sine, square root, log, etc.; make tests and follow different directions depending on the outcome of the tests; and finally print the results. Section II presents additional types of statements which provide for the definition and use of functions peculiar to the problem to be solved; the iterative manipulation of subscripted variables (the elements of vectors or lists of numbers); the use of magnetic tape for input and output information; and greater flexibility in the format of input and output information. When magnetic tape is used for input and output information, the 704 can read or write more than 900 numbers per second, a much greater rate than is possible when the 704 reads cards and prints results directly. Since all information to be used or processed by the 704, other than magnetic tape output from a previous computer operation, must initially be recorded on punched cards, and since it is often desirable to maintain permanent records on punched cards or in printed form, the use of magnetic tape for input and output requires a means of transferring information from cards to tapes, tapes to cards, and tapes to printed form; this transfer may be effected by means of separate peripheral equipment.
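The gap between card and tape input can be put into numbers using only the rates quoted in this primer. The short Python sketch below is a back-of-envelope calculation, not a measurement: the data set size is hypothetical, and because the primer gives the rates as lower bounds ("exceeding", "more than"), the times it prints are upper bounds.

```python
CARD_NUMBERS_PER_MINUTE = 1000  # primer: "exceeding 1000 numbers per minute"
TAPE_NUMBERS_PER_SECOND = 900   # primer: "more than 900 numbers per second"


def card_read_minutes(count):
    """Minutes needed to read `count` numbers from punched cards."""
    return count / CARD_NUMBERS_PER_MINUTE


def tape_read_minutes(count):
    """Minutes needed to read `count` numbers from magnetic tape."""
    return count / (TAPE_NUMBERS_PER_SECOND * 60)


count = 54_000  # hypothetical data set size, chosen to give round figures
print(card_read_minutes(count), tape_read_minutes(count))
```

At these rates tape input is 54 times faster than card input, which is why the primer treats card-to-tape transfer on separate peripheral equipment as worthwhile.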

in [ACM] JACM 4(3) July 1957

permit computers to be programmed in a manner which is easy for beginners to grasp, the actual translation to machine language being performed by the machine itself. Several such "automatic coding" schemes accept programs in a form which is based on the conventional notation of mathematics, and which is particularly appropriate for scientific work. One such scheme is described by R. A. Brooker in the first issue of The Computer Journal. Another is FORTRAN, which can be used with the IBM 704 (and also, in a restricted form, with the 650).

When FORTRAN is used, the programmer prepares his program in the form of "statements" which are transcribed on to punched cards and fed into the computer. Instructions already in the machine cause it to start reading the FORTRAN statements from the cards. The computer then translates these statements into machine-language instructions, recording these onto more punched cards. In this translation one FORTRAN statement gives rise to an average of 9 machine-code orders. The new set of cards can then be fed into the computer any number of times, causing it to carry out the procedure specified in the original statements.

in [ACM] JACM 4(3) July 1957

GENERAL INTRODUCTION

The original FORTRAN language was designed as a concise, convenient means of stating the steps to be carried out by the IBM 704 Data Processing System in the solution of many types of problems, particularly in scientific and technical fields. As the language is simple and the 704, with the FORTRAN Translator program, performs most of the clerical work, FORTRAN has afforded a significant reduction in the time required to write programs.

The original FORTRAN language contained 32 types of statements. Virtually any numerical procedure may be expressed by combinations of these statements. Arithmetic formulas are expressed in a language close to that of mathematics. Iterative processes can be easily governed by control statements and arithmetic statements. Input and output data are flexibly handled in a variety of formats.

Extract: General introduction: FORTRAN II

GENERAL INTRODUCTION

The FORTRAN II language contains six new types of statements and incorporates all the statements in the original FORTRAN language. Thus, the FORTRAN II system and language are compatible with the original FORTRAN, and any program used with the earlier system can also be used with FORTRAN II. The 38 FORTRAN II statements are listed in Appendix A, page 59.

The additional facilities of FORTRAN II effectively enable the programmer to expand the language of the system indefinitely. This expansion is obtained by writing subprograms which define new statements or elements of the FORTRAN II language. All statements so defined will be of a single type, the CALL type. All elements so defined will be the symbolic names of single-valued functions. Each new statement or element, when used in a FORTRAN II program, will constitute a call for the defining subprogram, which may carry out a procedure of any length or complexity within the capacity of the computer.

The FORTRAN II subprogram facilities are completely general; subprograms can in turn use other subprograms to whatever degree is required. These subprograms may be written in source program language. For example, subprograms may be written in FORTRAN II language such that matrices may be processed as units by a main program. Also, for example, it is possible to write SAP (SHARE Assembly Program) subprograms which perform double precision arithmetic, logical operations, etc. Certain additional advantages flow from the above concept. Any program may be used as a subprogram (with appropriate minor changes) in FORTRAN II, thus making use, as a library, of programs previously written. A large program may be divided into sections and each section written, compiled, and tested separately. In the event it is desirable to change the method of performing a computation, proper sectioning of a program will allow this specific method to be changed without disturbing the rest of the program and with only a small amount of recompilation time.

There are two ways FORTRAN II links a main program to subprograms, and subprograms to lower level subprograms. The first way is by statements of the new CALL type. This type may be indefinitely expanded, by means of subprograms, to include particular statements specifying any procedures whatever within the power of the computer. The defining subprogram may be any FORTRAN II subprogram, SAP subprogram, or program written in any language which is reducible to machine language. Since a subprogram may call for other subprograms to any desired depth, a particular CALL statement may be defined by a pyramid of multilevel subprograms. A particular CALL statement consists of the word CALL, followed by the symbolic name of the highest level defining subprogram and a parenthesized list of arguments. A FORTRAN II subprogram to be linked by means of a CALL statement must have a SUBROUTINE statement as its first statement. SUBROUTINE is followed by the name of the subprogram and by a number of symbols in parentheses. The symbols in parentheses must agree in number, order, and mode with the arguments in the CALL statement used to call this subprogram. A subprogram headed by a SUBROUTINE statement has a RETURN statement at the point where control is to be returned to the calling program. A subprogram may, of course, contain more than one RETURN statement.
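The requirement that the CALL arguments and the SUBROUTINE symbols agree in number, order, and mode can be illustrated with a small check. The following is only a sketch in Python, not anything from the FORTRAN II system itself. It assumes simple variable names and the usual FORTRAN naming convention (a name beginning with I, J, K, L, M, or N is fixed point, any other is floating point), which the passage above does not state. The helper names `mode` and `agrees`, and the subprogram name MATMPY in the comments, are hypothetical.

```python
def mode(name: str) -> str:
    """Mode implied by a variable name (assumed convention: I-N means fixed point)."""
    return "fixed" if name[0].upper() in "IJKLMN" else "floating"


def agrees(call_args, dummy_args) -> bool:
    """True when both lists match in number and, position by position, in mode."""
    if len(call_args) != len(dummy_args):
        return False
    return all(mode(a) == mode(d) for a, d in zip(call_args, dummy_args))


# Hypothetical pairing:  CALL MATMPY (A, N, B)  against  SUBROUTINE MATMPY (X, K, Y)
print(agrees(["A", "N", "B"], ["X", "K", "Y"]))  # True: same count, same modes in order
print(agrees(["A", "N", "B"], ["X", "Y", "K"]))  # False: modes differ in positions 2 and 3
print(agrees(["A", "N"], ["X", "K", "Y"]))       # False: different number of arguments
```

Note that the names themselves need not match between the two lists; only count, position, and mode are compared.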

The second way in which FORTRAN II links programs together is by means of an arithmetic statement involving the name of a function with a parenthesized list of arguments. The function terminology in the FORTRAN II language may be indefinitely expanded to include as elements of the language any single-valued functions which can be evaluated by a process within the capacity of the computer. The power of function definition was available in the original FORTRAN but has been made much more flexible in FORTRAN II.

As in the original FORTRAN, library tape functions and built-in functions may be used in any FORTRAN II program. The library tape functions may be supplemented as desired. Two new built-in functions have been added in FORTRAN II, and provision has been made for the addition of up to ten by the individual installation. The most flexible and powerful means of function definition in FORTRAN II is, however, the subprogram headed by a FUNCTION statement. The FUNCTION statement specifies the function name, followed by a parenthesized list of arguments corresponding in number, order, and mode to the list following the function name in the calling program. This new facility enables the programmer to define functions in source language in a subprogram which can be compiled from alphanumeric cards or tape in the same way as a main program. Function subprograms may use other subprograms to any depth desired. A subprogram headed by a FUNCTION statement is logically terminated by a RETURN statement in the same manner as the SUBROUTINE subprogram. Subprograms of the function type may also be written in SAP code, or in any other language reducible to machine language. Subprograms of the function type may freely use subprograms of both the subroutine type and the function type without restriction. Similarly, the subroutine type may use subprograms of both the subroutine type and the function type without restriction. The names of variables listed in a subprogram in a SUBROUTINE or FUNCTION statement are dummy variables. These names are independent of the calling program and, therefore, need not be the same as the corresponding variable names in the calling program, and may even be the same as non-corresponding variable names in the calling program. This enables a subprogram or group of subprograms to be used with various independently written main programs.

There are many occasions when it is desirable for a subprogram to be able to refer to variables in the calling program without requiring that they be listed every time the subprogram is to be used. Such cross-referencing of the variables in a calling program and in various levels of subprograms is accomplished by means of the COMMON statement which defines the storage areas to be shared by several programs. This feature also gives the programmer more flexible control over the allocation of data in storage.

The END statement has been added to the FORTRAN II language for multiple program compilation, another new feature of FORTRAN II. This statement acts as an end-of-file for either cards or tape so that there may be many programs in the card reader or on a reel of tape at any one time. Five digits in parentheses follow the END statement. These digits refer to the first five Sense Switches on the 704 Console, allowing the programmer, if he wishes, to indicate to the Translator which of certain options it is to take, regardless of the arranging of these switches. In an early phase of the FORTRAN II Translator, a diagnostic program has been incorporated which finds many types of errors much earlier during the compilation process, provides more complete information on error print-outs, and reduces the number of stops. Thus, both programming time and machine compilation time are saved.

The object programs, both main programs and subprograms, are stored in 704 memory by the Binary Symbolic Subroutine Loader. The Loader interprets symbolic references between a main program and its subprograms and between various levels of subprograms and provides for the proper flow of control between the various programs during program execution.

Because of the function of the Loader, the programmer need know only the symbolic name of an available subprogram and the procedure which it carries out; he does not need to be concerned with the constitution of the machine language deck, nor with the location of the subprogram in storage. In machine language decks, symbolic references are retained in a set of names, or "Transfer List," at the beginning of each program which calls for subprograms.

The symbolic name of each subprogram is also retained on a special card, the "Program Card," at the front of each subprogram deck. At the beginning of loading, a call for a subprogram is a transfer to the appropriate symbolic name in the Transfer List. Before program execution commences, the Loader replaces the Transfer List names with transfers to the actual locations occupied in storage by the corresponding subprogram entry points.

The order in which the decks are loaded determines the actual locations occupied by the main program and subprograms in storage but does not affect the logical flow of control. The order in which decks are loaded is therefore arbitrary.
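The Loader's behaviour described above (locations fixed by load order, symbolic Transfer List names replaced by actual entry points) can be sketched roughly as follows. This is an illustrative Python model under stated assumptions, not the Binary Symbolic Subroutine Loader itself: each deck is reduced to a name, a length in words, and a Transfer List; the entry point is assumed to be the first word of the deck; and the deck names and sizes are invented for the example.

```python
from typing import Dict, List, Tuple

# Hypothetical stand-in for a machine language deck:
# (symbolic name from its Program Card, length in words, Transfer List of names it calls)
Deck = Tuple[str, int, List[str]]


def load(decks: List[Deck], origin: int = 0):
    """Assign storage in load order, then resolve every Transfer List."""
    entry_points: Dict[str, int] = {}
    location = origin
    # Pass 1: the load order alone decides where each deck sits in storage.
    for name, length, _ in decks:
        entry_points[name] = location  # assumption: entry point is the deck's first word
        location += length
    # Pass 2: replace each symbolic name with the callee's actual location.
    resolved: Dict[str, Dict[str, int]] = {}
    for name, _, transfer_list in decks:
        resolved[name] = {callee: entry_points[callee] for callee in transfer_list}
    return entry_points, resolved


def call_graph(resolved):
    """Who calls whom, ignoring addresses: the logical flow of control."""
    return {caller: sorted(callees) for caller, callees in resolved.items()}


main = ("MAIN", 100, ["MATMUL", "PRINT"])
matmul = ("MATMUL", 40, ["PRINT"])
printer = ("PRINT", 25, [])

addr_a, links_a = load([main, matmul, printer])
addr_b, links_b = load([printer, main, matmul])

print(addr_a != addr_b)                                # True: locations depend on load order
print(call_graph(links_a) == call_graph(links_b))      # True: logical flow does not
```

The two runs place the decks at different addresses, yet every call still reaches the same subprogram, which is why the load order can be arbitrary.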

in [ACM] JACM 4(3) July 1957

THE FORTRAN SYSTEM

The IBM Mathematical Formula Translating System FORTRAN is an automatic coding system for the IBM 704 Data Processing System. More precisely, it is a 704 program which accepts a source program written in the FORTRAN language, closely resembling the ordinary language of mathematics, and which produces an object program in 704 machine language, ready to be run on a 704. FORTRAN therefore in effect transforms the 704 into a machine with which communication can be made in a language more concise and more familiar than the 704 language itself. The result should be a considerable reduction in the training required to program, as well as in the time consumed in writing programs and eliminating their errors.

Among the features which characterize the FORTRAN system are the following.

Size of Machine Required

The system has been designed to operate on a "small" 704, but to write object programs for any 704. (For further details, see the section on Source and Object Machines in Chapter 7.) If an object program is produced which is too large for the machine on which it is to be run, the programmer must subdivide the program.

Efficiency of the Object Program

Object programs produced by FORTRAN will be nearly as efficient as those written by good programmers.

Scope of Applicability

The FORTRAN language is intended to be capable of expressing any problem of numerical computation. In particular, it deals easily with problems containing large sets of formulae and many variables, and it permits any variable to have up to three independent subscripts.

However, for problems in which machine words have a logical rather than a numerical meaning it is less satisfactory, and it may fail entirely to express some such problems. Nevertheless, many logical operations not directly expressible in the FORTRAN language can be obtained by making use of the provisions for incorporating library routines.

Inclusion of Pre-written Routines

Library routines to evaluate any single-valued functions of any number of arguments can be made available for incorporation into object programs by placing them on the master FORTRAN tape.

Provision for Input and Output

Certain statements in the FORTRAN language cause the object program to be equipped with its necessary input and output programs. Those which deal with decimal information include conversion to or from binary, and permit considerable freedom of format in the external medium.

Nature of Fortran Arithmetic

Arithmetic in the object program will generally be performed with single precision 704 floating point numbers. These numbers provide 27 binary digits (about 8 decimal digits) of precision, and may have magnitudes between approximately 10^-38 and 10^38, and zero. Fixed point arithmetic, but for integers only, is also provided.
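The statement that 27 binary digits amount to about 8 decimal digits can be verified with a line of arithmetic, since each binary digit carries log10(2) decimal digits. The Python sketch below is illustrative only; the function name `decimal_digits` is hypothetical and nothing here comes from the FORTRAN manual.

```python
import math


def decimal_digits(binary_digits: int) -> float:
    """Decimal digits of precision carried by a given number of binary digits."""
    return binary_digits * math.log10(2)


# 27 binary digits, as quoted for single precision 704 floating point numbers
print(f"{decimal_digits(27):.2f}")  # 8.13

# Relative spacing of neighbouring values with 27 binary digits
print(2.0 ** -27)
```

The relative spacing of 2^-27 is a little under one part in 10^8, which is consistent with the figure of about 8 decimal digits.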

in [ACM] JACM 4(3) July 1957

in Proceedings of the Symposium on the Mechanisation of Thought Processes. Teddington, Middlesex, England: The National Physical Laboratory, November 1958

in E. M. Crabbe, S. Ramo, and D. E. Wooldridge (eds.) "Handbook of Automation, Computation, and Control," John Wiley & Sons, Inc., New York, 1959.

in [ACM] CACM 2(02) February 1959

Assembly and Compiling Systems both obey the "pre-translation"7 principle. Pseudo instructions are interpreted and a running program is produced before the solution is initiated. Usually this makes possible a single set of references to the library rather than many repeated references.

In an assembly system the pseudo-code is ordinarily modified computer code. Each pseudo instruction refers to one machine instruction or to a relatively short subroutine. Under the control of the master routine, the assembly system sets up all controls for monitoring the flow of input and output data and instructions.

A compiler system operates in the same way as an assembly system, but does much more. In most compilers each pseudo instruction refers to a subroutine consisting of from a few to several hundred machine instructions.8 Thus it is frequently possible to perform all coding in pseudo-code only, without the use of any machine instructions.

From the viewpoint of the user, compilers are the more desirable type of automatic programming because of the comparative ease of coding with them. However, compilers are not available with all existing equipments. In order to develop a compiler, it is usually necessary to have a computer with a large supplementary storage such as a magnetic tape system or a large magnetic drum. This storage facilitates compilation by making possible as large a running program as the problem requires.

Examples of assembly systems are Symbolic Optimum Assembly Programming (S.O.A.P.) for the IBM 650 and REgional COding (RECO) for the UNIVAC SCIENTIFIC 1103 Computer. The X-1 Assembly System for the UNIVAC I and II Computers is not only an assembly system, but is also used as an internal part of at least two compiling systems.

Extract: MATHMATIC, FORTRAN and UNICODE

For scientific and mathematical calculations, three compilers which translate formulas from standard symbologies of algebra to computer code are available for use with three different computers. These are the MATH-MATIC (AT-3) System for the UNIVAC I and II Computers, FORTRAN (for FOR-mula TRANslation) as used for the IBM 704 and 709, and the UNICODE Automatic Coding System for the UNIVAC SCIENTIFIC 1103A Computer.

Extract: FLOW-MATIC and REPORT GENERATOR

Two advanced compilers have also been developed for use with business data processing. These are the FLOW-MATIC (B-ZERO) Compiler for the UNIVAC I and II Computers and REPORT GENERATOR for the new IBM 709.13 In these compilers, English words and sentences are used as pseudocode.

in [ACM] CACM 2(02) February 1959

Introduction

The present paper describes, in formal terms, the steps in translation employed by the Fortran arithmetic translator in converting Fortran formulas into 704 assembly code. The steps are described in about the order in which they are actually taken during translation. Although sections 2 and 3 give a formal description of the Fortran source language, insofar as arithmetic type statements are concerned, the reader is expected to be familiar with Fortran II, as well as with Sap II and the programming logic of the 704 computer.

in [ACM] CACM 2(02) February 1959

employ new methods in many areas of research. Performance of 1 million multiplications on a desk calculator is estimated to require about five years and to cost $25,000. On an early scientific computer, a million multiplications required eight minutes and cost (exclusive of programing and input preparation) about $10. With the recent LARC computer, 1 million multiplications require eight seconds and cost about 50 cents (Householder, 1956). Obviously it is imperative that researchers examine their methods in light of the abilities of the computer.

It should be noted that much of the information published on computers and their use has not appeared in educational or psychological literature but rather in publications specifically concerned with computers, mathematics, engineering, and business. The following selective survey is intended to guide the beginner into this broad and sometimes confusing area. It is not an exhaustive survey. It is presumed that the reader has access to the excellent Wrigley (1957) article; so the major purpose of this review is to note additions since 1957.

The following topics are discussed: equipment availability, knowledge needed to use computers, general references, programing the computer, numerical analysis, statistical techniques, operations research, and mechanization of thought processes.

Extract: Compiler Systems

Compiler Systems

A compiler is a translating program written for a particular computer which accepts a form of mathematical or logical statement as input and produces as output a machine-language program to obtain the results.

Since the translation must be made only once, the time required to repeatedly run a program is less for a compiler than for an interpretive system. And since the full power of the computer can be devoted to the translating process, the compiler can use a language that closely resembles mathematics or English, whereas the interpretive languages must resemble computer instructions. The first compiling program required about 20 man-years to create, but use of compilers is so widely accepted today that major computer manufacturers feel obligated to supply such a system with their new computers on installation.

Compilers, like the interpretive systems, reflect the needs of various types of users. For example, the IBM computers use "FORTRAN" for scientific programing and "9 PAC" and "ComTran" for commercial data processing; the Sperry Rand computers use "Math-Matic" for scientific programing and "Flow-Matic" for commercial data processing; Burroughs provides "FORTOCOM" for scientific programming and "BLESSED 220" for commercial data processing.

There is some interest in the use of "COBOL" as a translation system common to all computers.

in [ACM] CACM 2(02) February 1959

in [ACM] CACM 4(01) (Jan 1961)

Univac LARC is designed for large-scale business data processing as well as scientific computing. This includes any problems requiring large amounts of input/output and extremely fast computing, such as data retrieval, linear programming, language translation, atomic codes, equipment design, large-scale customer accounting and billing, etc.

University of California

Lawrence Radiation Laboratory

Located at Livermore, California, system is used for the solution of differential equations.

[?]

Outstanding features are ultra high computing speeds and the input-output control completely independent of computing. Due to the Univac LARC's unusual design features, it is possible to adapt any source of input/output to the Univac LARC. It combines the advantages of Solid State components, modular construction, overlapping operations, automatic error correction and a very fast and a very large memory system.

[?]

Outstanding features include a two computer system (arithmetic, input-output processor); decimal fixed or floating point with provisions for double precision arithmetic; single bit error detection of information in transmission and arithmetic operation; and balanced ratio of high speed auxiliary storage with core storage.

Unique system advantages include a two computer system, which allows versatility and flexibility for handling input-output equipment, and program interrupt on programmer contingency and machine error, which allows greater ease in programming.

in [ACM] CACM 4(01) (Jan 1961)

in Invited papers

The 701 used a rather unreliable electrostatic tube storage system. When Magnetic core storage became available there was some talk about a 701M computer that would be an advanced 701 with core storage. The idea of a 701M was soon dropped in favor of a completely new computer, the 704. The 704 was going to incorporate into hardware many of the features for which programming systems had been developed in the past. Automatic floating point hardware and index registers would make interpretive systems like Speedcode unnecessary.

Along with the development of the 704 hardware IBM set up a project headed by John Backus to develop a suitable compiler for the new computer. After the expenditure of about 25 man years of effort they produced the first Fortran compiler.19,20 Fortran is in many ways the most important and most impressive development in the early history of automatic programming.

Like most of the early hardware and software systems, Fortran was late in delivery, and didn't really work when it was delivered. At first people thought it would never be done. Then when it was in field test, with many bugs, and with some of the most important parts unfinished, many thought it would never work. It gradually got to the point where a program in Fortran had a reasonable expectancy of compiling all the way through and maybe even of running. This gradual change of status from an experiment to a working system was true of most compilers. It is stressed here in the case of Fortran only because Fortran is now almost taken for granted, as if it were built into the computer hardware.

In the early days of automatic programming, the most important criterion on which a compiler was judged was the efficiency of the object code. "You only compile once, you run the object program many times," was a statement often quoted to justify a compiler design philosophy that permitted the compiler to take as long as necessary, within reason, to produce good object code. The Fortran compiler on the 704 applied a number of difficult and ingenious techniques in an attempt to produce object coding that would be as good as that produced by a good programmer programming in machine code. For many types of programs the coding produced is very good. Of course there are some for which it is not so good. In order to make effective use of index registers a very complicated index register assignment algorithm was used that involved a complete analysis of the flow of the program and a simulation of the running of the program using information obtained from frequency statements and from the flow analysis. This was very time consuming, especially on the relatively small initial 704 configuration. Part of the index register optimization fell into disuse quite early but much of it was carried along into Fortran II and is still in use on the 704/9/90. In many programs it still contributes to the production of better code than can be achieved on the new Fortran IV compiler.

Experience led to a gradual change of philosophy with respect to compilers. During debugging, compiling is done over and over again. One of the major reasons for using a problem oriented language is to make it easy to modify programs frequently on the basis of experience gained in running the programs. In many cases the total compile time used by a project is much greater than the total time spent running object codes. More recent compilers on many computers have emphasized compiling time rather than run time efficiency. Some may have gone too far in that direction.

It was the development of Fortran II that made it possible to use Fortran for large problems without using excessive compiling time. Fortran II permitted a program to be broken down into subprograms which could be tested and debugged separately. With Fortran II in full operation, the use of Fortran spread very rapidly. Many 704 installations started to use nothing but Fortran. A revolution was taking place in the scientific computing field, but many of the spokesmen for the computer field were unaware of it. A number of major projects that were at crucial points in their development in 1957-1959 might have proceeded quite differently if there was more general awareness of the extent to which the use of Fortran had been accepted in many major 704 installations.

Extract: Algol vs Fortran

With the use of Fortran already well established in 1958, one may wonder why the American committee did not recommend that the international language be an extension of, or at least in some sense compatible with Fortran. There were a number of reasons. The most obvious has to do with the nature and the limitations of the Fortran language itself. A few features of the Fortran language are clumsy because of the very limited experience with compiler languages that existed when Fortran was designed. Most of Fortran's most serious limitations occur because Fortran was not designed to provide a completely computer independent language; it was designed as a compiler language for the 704. The handling of a number of statement types, in particular the Do and If statements, reflects the hardware constraints of the 704, and the design philosophy which kept these statements simple and therefore restricted in order to simplify optimization of object coding.

Another and perhaps more important reason for the fact that the ACM committee almost ignored the existence of Fortran has to do with the predominant position of IBM in the large scale computer field in 1957-1958 when the Algol development started. Much more so than now there were no serious competitors. In the data processing field the Univac II was much too late to give any serious competition to the IBM 705. RCA's Bizmac never really had a chance, and Honeywell's Datamatic 1000, with its 3 inch wide tapes, had only very few specialized customers. In the Scientific field there were those who felt that the Univac 1103/1103a/1105 series was as good as or better than the IBM 701/704/709. Univac's record of late delivery and poor service and support seemed calculated to discourage sales to the extent that the 704 had the field almost completely to itself. The first Algebraic compiler produced by the manufacturer for the Univac Scientific computer, the 1103a, was Unicode, a compiler with many interesting features that was not completed until after 1960, for computers that were already obsolete. There were no other large scale scientific computers. There was a feeling on the part of a number of persons highly placed in the ACM that Fortran represented part of the IBM empire, and that any enhancement of the status of Fortran by accepting it as the basis of an international standard would also enhance IBM's monopoly in the large scale scientific computer field.

The year 1958 in which the first Algol report was published, also marked the emergence of large scale high speed transistorized computers, competitive in price and superior in performance to the vacuum tube computers in general use. At the time I was in charge of Programming systems for the new model 2000 computers that Philco was preparing to market. An Algebraic compiler was an absolute necessity, and there was never really any serious doubt that the language had to be Fortran. The very first sales contracts for the 2000 specified that the computer had to be equipped with a compiler that would accept 704 Fortran source decks essentially without change. Other manufacturers, Honeywell, Control Data, Bendix, faced with the same problems, came to the same conclusion. Without any formal recognition, in spite of the attitude of the professional committees, Fortran became the standard scientific computing language. Incidentally, the emergence of Fortran as a standard helped rather than hindered the development of a competitive situation in the scientific computer field.

in [AFIPS JCC 25] Proceedings of the 1964 Spring Joint Computer Conference SJCC 1964

in Computers & Automation 16(6) June 1967

The family tree of programming languages, like those of humans, is quite different from the tree with leaves from which the name derives.

That is, branches grow together as well as divide, and can even join with branches from other trees. Similarly, the really vital requirements for mating are few. PL/I is an offspring of a type long awaited; that is, a deliberate result of the marriage between scientific and commercial languages.

The schism between these two facets of computing has been a persistent one. It has prevailed longer in software than in hardware, although even here the joining was difficult. For example, the CPC (card-programmed calculator) was provided either with a general purpose floating point arithmetic board or with a board wired specifically to do a (usually) commercial operation. The decimal 650 was partitioned to be either a scientific or commercial installation; very few were mixed. A machine at Lockheed Missiles and Space Company, number 3, was the first to be obtained for scientific work. Again, the methods of use for scientific work were then completely different from those for commercial work, as the proliferation of interpretive languages showed.

Some IBM personnel attempted to heal this breach in 1957. Dr. Charles DeCarlo set up opposing benchmark teams to champion the 704 and 705, possibly to find out whether a binary or decimal machine was more suited to mixed scientific and commercial work. The winner led to the 709, which was then touted for both fields in the advertisements, although the scales might have tipped the other way if personnel assigned to the data processing side had not exposed the file structure tricks which gave the 705 the first edge. Similarly fitted, the 704 pulled ahead.

It could be useful to delineate the gross structure of this family tree for programming languages, limited to those for compilers (as opposed to interpreters, for example).

On the scientific side, the major chronology for operational dates goes like this:

1951, 52 Rutishauser language for the Zuse Z4 computer

1952 A0 compiler for Univac I (not fully formula)

1953 A2 compiler to replace A0

1954 Release of Laning and Zierler algebraic compiler for Whirlwind

1957 Fortran I (704)

1957 Fortransit (650)

1957 AT3 compiler for Univac II (later called Math-Matic)

1958 Fortran II (704)

1959 Fortran II (709)

A fuller chronology is given in the Communications of the ACM, 1963 May, 94-99.

IBM personnel worked in two directions: one toward deriving Fortran II, with its ability to call previously compiled subroutines, the other toward Xtran, in order to generalize the structure and remove restrictions. This and other work led to Algol 58 and Algol 60. Algol X will probably metamorphose into Algol 68 in the early part of that year, and Algol Y stands in the wings. Meanwhile Fortran II turned into Fortran IV in 1962, with some regularizing of features and additions, such as Boolean arithmetic.

The corresponding chronology for the commercial side is:

1956 B-0, counterpart of A-0 and A-2, growing into

1958 Flowmatic

1960 AIMACO, Air Materiel Command version of Flowmatic

1960 Commercial Translator

1961 Fact

Originally, I wanted Commercial Translator to contain set operators as the primary verbs (match, delete, merge, copy, first occurrence of, etc.), but it was too much for that time. Bosak at SDC is now making a similar development. So we listened to Roy Goldfinger and settled for a language of the Flowmatic type. Dr. Hopper had introduced the concept of data division; we added environment division and logical multipliers, among other things, and also made an unsuccessful attempt to free the language of limitations due to the 80-column card.

As the computer world knows, this work led to the CODASYL committee and Cobol, the first version of which was supposed to be done by the Short Range Committee by 1959 September. There the matter stood, with two different and widely used languages, although they had many functions in common, such as arithmetic. Both underwent extensive standardization processes. Many arguments raged, and the proponents of "add A to B giving C" met head on with those favoring "C = A + B". Many on the Chautauqua computer circuit of that time made a good living out of just this, trivial though it is.

Many people predicted and hoped for a merger of such languages, but it seemed a long time in coming. PL/I was actually more an outgrowth of Fortran, via SHARE, the IBM user group historically aligned to scientific computing. The first name applied was in fact Fortran VI, following 10 major changes proposed for Fortran IV.

It started with a joint meeting on Programming Objectives on 1963 July 1, 2, attended by IBM and SHARE representatives. Datamation magazine has told the story very well. The first description was that of D. D. McCracken in the 1964 July issue, recounting how IBM and SHARE had agreed to a joint development at SHARE XXII in 1963 September. A so-called "3 x 3" committee (really the SHARE Advanced Language Development Committee) was formed of 3 users and 3 IBMers. McCracken claimed that, although not previously associated with language developments, they had many years of application and compiler-writing experience. I recall that one of them couldn't tell me from a Spanish-speaking citizen at the Tijuana bullring.

Developments were apparently kept under wraps. The first external report was released on 1964 March 1. The first mention occurs in the SHARE Secretary Distribution of 1964 April 15. Datamation reported for that month:

"That new programming language which came out of a six-man IBM/SHARE committee and announced at the recent SHARE meeting seems to have been less than a resounding success. Called variously 'Sundial' (changes every minute), Foalbol (combines Fortran, Algol and Cobol), Fortran VI, the new language is said to contain everything but the kitchen sink... is supposed to solve the problems of scientific, business, command and control users... you name it. It was probably developed as the language for IBM's new product line.

"One reviewer described it as 'a professional programmer's language developed by people who haven't seen an applied program for five years. I'd love to use it, but I run an open shop. Several hundred jobs a day keep me from being too academic.' The language was described as too far from Fortran IV to be teachable, too close to be new. Evidently sharing some of these doubts, SHARE reportedly sent the language back to IBM with the recommendation that it be implemented, tested... 'and then we'll see.'"

In the same issue, the editorial advised us "If IBM announces and implements a new language - for its whole family... one which is widely used by the IBM customer, a de facto standard is created." The Letters to the Editor for the May issue contained this one:

"Regarding your story on the IBM/SHARE committee - on March 6 the SHARE Executive Board by unanimous resolution advised IBM as follows:

"The Executive Board has reported to the SHARE body that we look forward to the early development of a language embodying the needs that SHARE members have requested over the past 3 1/2 years. We urge IBM to proceed with early implementation of such a language, using as a basis the report of the SHARE Advanced Language Committee."

It is interesting to note that this development followed very closely the resolution of the content of Fortran IV. This might indicate that the planned universality for System/360 had a considerable effect in promoting more universal language aims. The 1964 October issue of Datamation noted that:

"IBM PUTS EGGS IN NPL BASKET

"At the SHARE meeting in Philadelphia in August, IBM's Fred Brooks, called the father of the 360, gave the word: IBM is committing itself to the New Programming Language. Dr. Brooks said that Cobol and Fortran compilers for the System/360 were being provided 'principally for use with existing programs.'

"In other words, IBM thinks that NPL is the language of the future. One source estimates that within five years most IBM customers will be using NPL in preference to Cobol and Fortran, primarily because of the advantages of having the combination of features (scientific, commercial, real-time, etc.) all in one language.

"That IBM means business is clearly evident in the implementation plans. Language extensions in the Cobol and Fortran compilers were ruled out, with the exception of a few items like a sort verb and a report writer for Cobol, which after all, were more or less standard features of other Cobol. Further, announced plans are for only two versions of Cobol (16K, 64K) and two of Fortran (16K and 256K) but four of NPL (16K, 64K, 256K, plus an 8K card version).

"IBM's position is that this emphasis is not coercion of its customers to accept NPL, but an estimate of what its customers will decide they want. The question is, how quickly will the users come to agree with IBM's judgment of what is good for them?"

Of course the name continued to be a problem. SHARE was cautioned that the N in NPL should not be taken to mean "new"; "nameless" would be a better interpretation. IBM's change to PL/I sought to overcome this immodest interpretation.

Extract: Definition and Maintenance

Once a language reaches usage beyond the powers of individual communication about it, there is a definite need for a definition and maintenance body. Cobol had the CODASYL committee, which is even now responsible for the language despite the existence of national and international standards bodies for programming languages. Fortran was more or less released by IBM to the mercies of the X3.4 committee of the U.S.A. Standards Institute. Algol had only paper strength until responsibility was assigned to the International Federation for Information Processing, Technical Committee 2.1. Even this is not sufficient without standard criteria for such languages, which are only now being adopted.

There was a minor attempt to widen the scope of PL/I at the SHARE XXIV meeting of 1965 March, when it was stated that X3.4 would be asked to consider the language for standardization. Unfortunately it has not advanced very far on this road even in 1967 December. At the meeting just mentioned it was stated that, conforming to SHARE rules, only people from SHARE installations or IBM could be members of the project. Even the commercial users from another IBM user group (GUIDE) couldn't qualify.

Another major problem was the original seeming insistence by IBM that the processor on the computer, rather than the manual, would be the final arbiter and definer of what the language really was. Someone had forgotten the crucial question, "The processor for which version of the 360?", for these were written by different groups. The IBM Research Group in Vienna, under Dr. Zemanek, has now prepared a formal description of PL/I, even to semantic as well as syntactic definitions, which will aid immensely. However, the size of the volume required to contain this work is horrendous. In 1964 December, RCA said it would "implement NPL for its new series of computers when the language has been defined."

If it takes so many decades/centuries for a natural language to reach such an imperfect state that alternate reinforcing statements are often necessary, it should not be expected that an artificial language for computers, literal and presently incapable of understanding reinforcement, can be created in a short time scale. From initial statement of "This is it" we have now progressed to buttons worn at meetings such as "Would you believe PL/II?" and PL/I has gone through several discrete and major modifications.

in PL/I Bulletin, Issue 6, March 1968

in Computers and Automation 20(11) November 1971

[321 programming languages, with the computer manufacturers on whose machines each language is known to be used. Register of the 74 computer companies; ranking of the programming languages by the number of manufacturers on whose machines the language is implemented; ranking of the manufacturers by the number of programming languages used.]

in [ACM] CACM 15(06) (June 1972)

in Computers & Automation 21(6B), 30 Aug 1972

in ACM Computing Reviews 15(04) April 1974

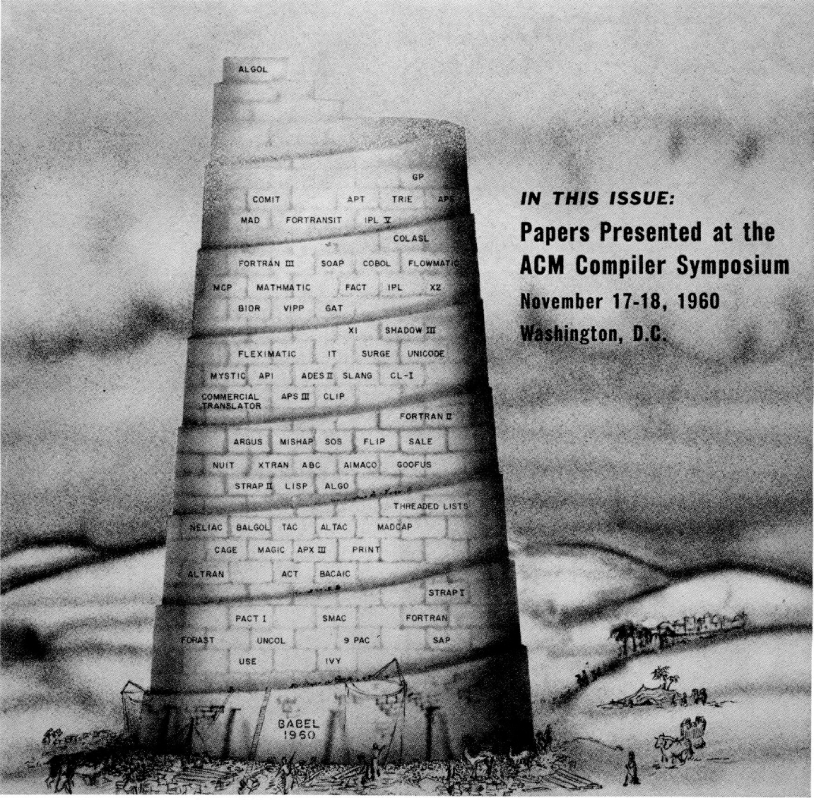

The exact number of all the programming languages still in use, and those which are no longer used, is unknown. Zemanek calls the abundance of programming languages and their many dialects a "language Babel". When a new programming language is developed, only its name is known at first and it takes a while before publications about it appear. For some languages, the only relevant literature stays inside the individual companies; some are reported on in papers and magazines; and only a few, such as ALGOL, BASIC, COBOL, FORTRAN, and PL/1, become known to a wider public through various text- and handbooks. The situation surrounding the application of these languages in many computer centers is a similar one.

There are differing opinions on the concept "programming languages". What is called a programming language by some may be termed a program, a processor, or a generator by others. Since there are no sharp borderlines in the field of programming languages, works were considered here which deal with machine languages, assemblers, autocoders, syntax and compilers, processors and generators, as well as with general higher programming languages.