SOAP II(ID:4754/soa004)

Symbolic Optimum Assembly Program

Modifications of SOAP

Hardware:

- IBM 650 IBM

Related languages

| SOAP I | => | SOAP II | Evolution of | |

| SOAP II | => | Compiler II-SOAP II | Compiled to | |

| SOAP II | => | IT 3 | Targeting | |

| SOAP II | => | SOAP H | Augmentation of | |

| SOAP II | => | SOAP IIA | Evolution of | |

| SOAP II | => | SOAP III | Evolution of |

References:

Introduction

The principal achievement of an assembly program is that it almost completely relieves the programmer of the problem of assigning actual storage locations to instructions or quantities manipulated by the program. If, in the course of programming, he wishes to refer to any location in storage which will contain some quantity used by the program, he gives this location a "name", preferably one of high mnemonic value, and refers to it by means of this name. Thus, salary might be stored in "WAGES", sin x in "SIN X". The assembly program assigns actual locations to these "names" and produces an absolute machine language program.
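
The name-to-location bookkeeping described above can be illustrated with a minimal sketch. It is not how SOAP II itself works: the operation names (`LOAD`, `ADD`, `STORE`), the name `BONUS`, the starting address, and the function `assign_locations` are all hypothetical, chosen only to show names being replaced by actual locations.

```python
# Hypothetical sketch of symbolic-to-absolute translation.
# The operations and the starting address are illustrative, not IBM 650 codes.
def assign_locations(symbolic_program, first_free=0):
    """Give each name a distinct location in order of first use and
    return the symbol table plus the program with names replaced."""
    table = {}
    next_free = first_free
    absolute = []
    for operation, name in symbolic_program:
        if name not in table:
            table[name] = next_free   # first use: allocate the next free location
            next_free += 1
        absolute.append((operation, table[name]))
    return table, absolute


table, absolute = assign_locations(
    [("LOAD", "WAGES"), ("ADD", "BONUS"), ("STORE", "WAGES")],
    first_free=100,
)
print(table)     # {'WAGES': 100, 'BONUS': 101}
print(absolute)  # [('LOAD', 100), ('ADD', 101), ('STORE', 100)]
```

The programmer only ever writes `WAGES`; the table built during assembly decides where it lives.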

SOAP II is a symbolic assembly program for the IBM 650 Data Processing System. It is designed to assemble programs written for any array of equipment including tapes, printer, immediate access storage, indexing registers, floating point, disk storage and inquiry stations. The assembly program itself uses none of these features. It will operate on a basic 650 having an alphabetic device. Special features described in the sequel should render it equally useful for both scientific and commercial applications.

Let me elaborate these points with examples. UNICODE is expected to require about fifteen man-years. Most modern assembly systems must take from six to ten man-years. SCAT expects to absorb twelve people for most of a year. The initial writing of the 704 FORTRAN required about twenty-five man-years. Split among many different machines, IBM's Applied Programming Department has over a hundred and twenty programmers. Sperry Rand probably has more than this, and for utility and automatic coding systems only! Add to these the number of customer programmers also engaged in writing similar systems, and you will see that the total is overwhelming.

Perhaps five to six man-years are being expended to write the Model 2 FORTRAN for the 704, trimming bugs and getting better documentation for incorporation into the even larger supervisory systems of various installations. If available, more could undoubtedly be expended to bring the original system up to the limit of what we can now conceive. Maintenance is a very sizable portion of the entire effort going into a system.

Certainly, all of us have a few skeletons in the closet when it comes to adapting old systems to new machines. Hardly anything more than the flow charts is reusable in writing 709 FORTRAN; changes in the characteristics of instructions, and tricky coding, have done for the rest. This is true of every effort I am familiar with, not just IBM's.

What am I leading up to? Simply that the day of diverse development of automatic coding systems is either out or, if not, should be. The list of systems collected here illustrates a vast amount of duplication and incomplete conception. A computer manufacturer should produce both the product and the means to use the product, but this should be done with the full co-operation of responsible users. There is a gratifying trend toward such unification in such organizations as SHARE, USE, GUIDE, DUO, etc. The PACT group was a shining example in its day. Many other coding systems, such as FLAIR, PRINT, FORTRAN, and USE, have been done as the result of partial co-operation. FORTRAN for the 705 seems to me to be an ideally balanced project, the burden being carried equally by IBM and its customers.

Finally, let me make a recommendation to all computer installations. There seems to be a reasonably sharp distinction between people who program and use computers as a tool and those who are programmers and live to make things easy for the other people. If you have the latter at your installation, do not waste them on production and do not waste them on a private effort in automatic coding in a day when that type of project is so complex. Offer them in a cooperative venture with your manufacturer (they still remain your employees) and give him the benefit of the practical experience in your problems. You will get your investment back many times over in ease of programming and the guarantee that your problems have been considered.

Extract: IT, FORTRANSIT, SAP, SOAP, SOHIO

The IT language is also showing up in future plans for many different computers. Case Institute, having just completed an intermediate symbolic assembly to accept IT output, is starting to write an IT processor for UNIVAC. This is expected to be working by late summer of 1958. One of the original programmers at Carnegie Tech spent the last summer at Ramo-Wooldridge to write IT for the 1103A. This project is complete except for input-output and may be expected to be operational by December, 1957. IT is also being done for the IBM 705-1, 2 by Standard Oil of Ohio, with no expected completion date known yet. It is interesting to note that Sohio is also participating in the 705 FORTRAN effort and will undoubtedly serve as the basic source of FORTRAN-to-IT-to-FORTRAN translational information. A graduate student at the University of Michigan is producing SAP output for IT (rather than SOAP) so that IT will run on the 704; this, however, is only for experience; it would be much more profitable to write a pre-processor from IT to FORTRAN (the reverse of FOR TRANSIT) and utilize the power of FORTRAN for free.

in "Proceedings of the Fourth Annual Computer Applications Symposium", Armour Research Foundation, Illinois Institute of Technology, Chicago, Illinois 1957

We had full intentions of using the IT language for our input to the Univac, as Mr. Bemer reported here last year, then changed our minds and decided that we needed something with "name" variables similar to Fortran or AT-3 (Math-matic).

We also desire to use a language that will be usable on both the Univac and the 650 without too much changing-around of the pseudo-code. This desire is motivated largely by both the presence of the two machines as well as by the very nature of our main activity, namely, educational work. The advantages of a common language (or, more properly, a compatible language) are quite clear but not easily attained on two machines of such different capacities.

At the present time we are planning to use a multistage process in translating from the pseudo-code (problem-oriented language) to the machine language.

It is likely that we will process the original pseudo-code into a simpler pseudo-code, then translate that code, etc., according to the following list:

POL

POL1

SML

Preprocessor

Unisap

C-10

POL will be an algebraic problem-oriented language which will be quite rich in its abilities (possibly including such things as general summation notation, matrix manipulation, "find maximum," "find minimum," differentiation, integration, etc.). The POL1 will be another algebraic problem-oriented language with about the same capabilities as presently found in AT-3 (Math-matic) or Fortran. SML is Simple Machine Language and is a language which has the characteristics of a computer but no reference at all to any particular computer. We have already completed the specifications of SML and how it is to work. Following SML will come a "preprocessor," so named because it was invented for the very purpose of preceding Unisap before its utility in this particular connection was evident.

The preprocessor is currently being debugged. The coding for SML to preprocessor is now being written. Unisap has already been mentioned as an excellent example of a symbolic assembly program and has been widely distributed. C-10 neither needs any explanation (it is the Univac machine language) nor does it particularly deserve explanation.

Extract: SOAP, CASE SOAP

I shall now descend from my blue-sky position and describe what we have already finished doing in the automatic programming business. We, as have several others, started our first efforts at automatic programming after taking a long close look at Dr. Perlis' IT compiler for the IBM 650. As usual, when one examines something created elsewhere, we thought that we could make some improvements in the existing scheme without giving up any of the advantages that were already present. Our first effort was then to write a program named "Compiler II-SOAP II." This program worked very well, turned out nice "tight" coding, and included IT as a subset language. The bulk of the flow charting, planning, and coding for Compiler II-SOAP II was done by two of my undergraduate students, Mr. Lynch, a sophomore, and Mr. Knuth, a freshman.

One of the more unpleasant experiences encountered in the work on the compiler was the discovery that SOAP II was unable to assemble the entire compiler owing to the symbol table becoming filled up at an early stage of the assembly process. The solution to the problem was obvious but not very satisfactory.

As a matter of fact we did modify SOAP II to dump the symbol table and then reload it again in modified form, but we abandoned this philosophy as not being a worthwhile solution to the problem. Therefore, Mr. Knuth suggested that he write a new symbolic assembly program with some new features incorporated in it. Accordingly, SOAP III (later renamed CASE-SOAP III due to some rather peculiar complaints from a large corporation) was written. CASE-SOAP III solved the symbol-table difficulty by introducing a fairly new idea--the program point. Program points are addresses which the programmer needs to introduce in order to cause the machine to function properly but which have no mnemonic value to the functioning of the program. For example, one may frequently encounter the use of addresses such as NEXT and NXT, etc., which are included solely to link the program together but which have no significance at all in the logical structure of the problem. The program point was introduced just to solve the problem of naming these "random" addresses. The program point uses no room at all in the symbol table and is continuously redefinable by the simple expedient of using the same point over again. As an example consider the following segment of coding:

RAU R0002

BMI 1F

STL P0005

RSL ONE6 1F

1 ALO PCON

In both cases of the use of "1F" in the instruction address position, the meaning is to use the address of program point "1" forward. Now look at the next example (which, by the way, does nothing):

2 RAU R0001 1F

1 SUP ONES

NZE 1F 2B

1 RAL 6 1B

Note that the use of "1F" still refers to the forward program point, while "1B" refers to the correspondingly numbered backward program point. The use of "6" in the data address of the last instruction refers to the address of that particular line of coding (i.e., the line "1 RAL 6 1B"). Using CASE-SOAP III to write our next compiler revealed that there was an economy of approximately 50 per cent in the number of symbols used in CASE-SOAP III over the equivalent program written in SOAP II. Since we are looking forward to acquiring some day some of the optional attachments for our 650, Mr. Knuth incorporated additional pseudo-operations into CASE-SOAP III which allow one to effectively write programs for the augmented machine.
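
The forward and backward lookup of program points can be sketched as follows. This is only an illustration under stated assumptions, not CASE-SOAP III's actual mechanism: the function `resolve_program_points` is hypothetical, lines are identified by their position in the listing rather than by drum address, and "backward" is assumed to mean the nearest definition strictly before the current line (the text does not say whether a line may refer to its own point).

```python
# Hypothetical sketch: resolve "nF"/"nB" program-point references to line indices.
# Assumption: "B" looks strictly before the current line, "F" strictly after it.
def resolve_program_points(lines):
    """lines is a list of (point, refs); point is a digit string or None,
    refs is a list of references such as '1F' or '2B'."""
    resolved = []
    for i, (_, refs) in enumerate(lines):
        targets = []
        for ref in refs:
            point, direction = ref[0], ref[1]
            if direction == "F":
                candidates = range(i + 1, len(lines))   # nearest later definition
            else:
                candidates = range(i - 1, -1, -1)       # nearest earlier definition
            match = next((j for j in candidates if lines[j][0] == point), None)
            if match is None:
                raise ValueError(f"line {i}: no program point for {ref}")
            targets.append(match)
        resolved.append(targets)
    return resolved


# The second coding segment above, keeping only its program-point references.
segment = [
    ("2", ["1F"]),         # 2 RAU ... 1F
    ("1", []),             # 1 SUP ...
    (None, ["1F", "2B"]),  #   NZE 1F 2B
    ("1", ["1B"]),         # 1 RAL ... 1B
]
print(resolve_program_points(segment))  # [[1], [], [3, 0], [1]]
```

Because point "1" is defined twice and each reference simply binds to the nearest definition in the stated direction, no permanent symbol-table entry is needed for it.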

The new compiler referred to in the previous paragraph has been named RUNCIBLE. I shall now stick out my neck and state quite flatly that I do not believe that any tighter program will ever be devised for the 650. RUNCIBLE is again an algebraic problem-oriented compiler for the 650. The input language includes both IT and Compiler II-SOAP II as proper subset languages. The compiler is in a single deck of cards but exists logically in twenty-four different versions. The various versions are selected at read-in time by the storage-entry switch settings. The various possibilities are given from examining the following:

(Multipass OR One-pass) AND (650 OR 653) AND (Minimum Clocking OR Full Clocking OR No Clocking) AND (Normal Output OR Error Search) = (Operating Mode)

We now examine in some detail the options appearing in the foregoing equation. The multipass mode of operation is the one usually associated with 650 compilers (i.e., an intermediate language step is used). In the case at hand, the intermediate language is CASE-SOAP III. The one-pass mode turns out a machine-language program directly in a five instruction per card format. In the second option the term 650 refers to the basic 2,000-word 650 as being used as the object machine (i.e., the machine on which the compiled problem is to be run), the term 653 means that the compiled program is to be run on a machine equipped with floating point, index registers, and (at the programmer's option) core storage. It is also possible to cause the output machine language to be tailored to fit a machine equipped with core storage only and neither index registers nor floating point. The various "clocking" options mentioned refer to the several "tracing" modes that can be called upon to help debug the object program. It is possible to ascertain (while actually operating the object program) upon which statement in the pseudolanguage the machine is working. This one feature is proving to be one of the most helpful debugging aids that we have ever encountered.
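
The figure of twenty-four versions follows from multiplying the number of choices in each option group (2 x 2 x 3 x 2). A short sketch that enumerates the combinations; the variable names are illustrative and nothing here reflects how the storage-entry switches actually encoded the choice.

```python
from itertools import product

# Option groups as listed in the operating-mode expression.
PASSES = ("Multipass", "One-pass")
MACHINES = ("650", "653")
CLOCKING = ("Minimum Clocking", "Full Clocking", "No Clocking")
OUTPUT = ("Normal Output", "Error Search")

# Every operating mode is one choice from each group.
operating_modes = list(product(PASSES, MACHINES, CLOCKING, OUTPUT))
print(len(operating_modes))  # 24
print(operating_modes[0])    # ('Multipass', '650', 'Minimum Clocking', 'Normal Output')
```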

Extract: IT, Runcible

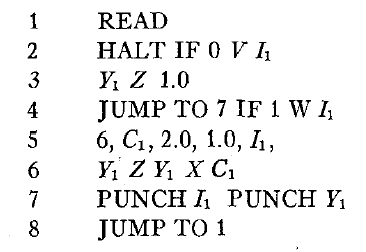

The input language to RUNCIBLE is an augmented version of Compiler II-SOAP II which includes names of subroutines and some other English-language options in the control words. A sample program which appears on page 20 of the manual follows:

The program reads in a non-negative value (and tests to see that it is non-negative) of I1 and then calculates I1 factorial and punches both the input number and the factorial value.

We are now naturally looking forward to the day when we shall have to consider a 650 compiler for the machine with tapes and RAMAC unit.

In conclusion let me state that in our opinion the most important thing to worry about, as far as application of machines to scientific and engineering problems is concerned, is the time consumed from the statement of the problem in English to the presentation of the numerical answer to the problem proposer.

We see no reason why the time consumed on the computer should be the only time interval which comes under close scrutiny. It seems much more reasonable to conserve the time of the scientist and engineer rather than the machine; after all, one may obtain machines by the simple expedient of ordering them from the manufacturer, but a foolproof, economic method of obtaining competent personnel is as yet an almost unsolved problem.

Extract: MATH-MATIC vs. RUNCIBLE

M. O. LOCKS (Remington Rand Univac):

Could you give me your interpretation of the difference between an assembly system and a compiler system? Also, could you explain how something which is as flexible as a compiler can be gotten onto a 2,000-word drum with no further erasable storage?

MR. WAY:

Our view of an assembly system is a facility whereby one writes a program in some language (usually similar to machine language) and gets out an essentially one-to-one translation of that language. In our case we write in a symbolic language, using pseudo-codes and symbolic addresses, and get out a machine-language code along with the actual locations (in the case of the Univac I, for instance, C-10 operation codes and addresses) when we assemble the tape which will run the problem. A compiler, on the other hand, is usually a one-to-many translator. You put in, usually, algebraic statements of your problem and get out a machine code to do the problem; the latter, of course, in appearance bears no resemblance whatsoever to the original problem statement.

Now your question about how we do this on a 2,000-word drum is of interest. A number of people said it could not be done, but Perlis proved that this was wrong. I notice that you are with Remington Rand Univac, so I see why the question comes up! If anybody ever writes a compiler that will fit inside the 1,000-word memory of the Univac I, I would certainly like to see it. The whole philosophy of compiling is dictated by the machine available to do it. In the case of the Univac I, with ten tape units, one can spin wheels and do nearly anything if he has time. As examples, witness Math-matic and Flow-matic, which do spin wheels and take time, but do compile. With the 650 one does not use this type of philosophy, mainly because he cannot. So, one uses a different method of generating the machine language. I realize I haven't told you in detail how to write a compiler; I didn't intend to. Does that answer your question?

MR. LOCKS:

Yes, that does basically answer the question, but I would like to inquire how much your 650 compiler can do as compared to a compiler which does spin wheels, Math-matic, for instance.

MR. WAY:

I am sure we can run rings around it with this 650 compiler, the basic reason being that the 650 storage of 2,000 words allows us to get more in the main storage of the machine at once. In our view the Math-matic system at present is rather handicapped by the input-output of the Univac I. The man who writes pseudo-code in Math-matic must know a lot about the Univac I, or he will not be able successfully to segment a problem. At least this has been our experience.

Extract: FORTRANSIT, IT, RUNCIBLE

R. B. WISE (Armour Research Foundation):

You mentioned that you were going to bypass the Perlis language compiler for the Univac I and write something more ambitious. Will this allow for IT compiler input?

MR. WAY:

We haven't decided as yet, but we would like to allow it if possible.

MR. WISE:

I, for one, would want to register a small protest if you do not. The IT language is about the closest thing we have today to the universal language among computers, and it seems to me that it would be very much worthwhile to have something (even something which some people may classify as mediocre) which will allow communication among computers. This has been written for the 650; through the use of For Transit, you have access to some programs for the 704; it has been written for the 1103A; and, in somewhat modified form, it also fits in the Datatron 205. I think there is a big argument for allowing that type of input.

MR. WAY:

You opened a loophole when you said "in somewhat modified form." When I said before that our compiler would accept previous programs, I meant without any alteration.

in Proceedings of the 1958 Computer Applications Symposium, Armour Research Foundation, Illinois Institute of Technology, Chicago, Illinois

in E. M. Crabbe, S. Ramo, and D. E. Wooldridge (eds.) "Handbook of Automation, Computation, and Control," John Wiley & Sons, Inc., New York, 1959.

in [ACM] CACM 6(03) (Mar 1963)

In the current struggles between the subcultures of proprietary development and of the open-source movement, it is notable that so many of us from the era of the IBM 650 are completely in the debt of Stan Poley for assuring that the documentation, flow charts, and source code of the SOAP II assembly program were printed in the manual. I had the privilege of acknowledging Stan (who was trained in structural engineering and devoted to developing the IBM STRESS system) personally for that amazingly singular contribution and I want to point out here that we are all in his debt in ways we may never know -- what must have seemed such a small thing and what a momentous difference it made.

Extract: RUNCIBLEs, SOAPs etc

I suppose it was natural for a person like me to fall in love with his first computer. But there was something special about the IBM 650, something that has provided the inspiration for much of my life's work. Somehow this machine was powerful in spite of its severe limitations. Somehow it was friendly in spite of its primitive man-machine interface.

I had just turned 19 when I was offered a part-time job helping the statisticians at Case Institute of Technology. My first task was to draw graphs; but soon I was given some keypunching duties, and I was taught how to use the wondrous card sorter. Meanwhile a strange new machine had been installed across the hall -- it was what our student newspaper called "an IBM 650 Univac," or a "giant brain." I was fascinated to look through the window and see the lights flashing on its console.

One afternoon George Haynam explained some of the machine's internal code to a bunch of us freshmen who happened to be in the lab. It all sounded mysterious to me, but it seemed to make a bit of sense, so I got hold of a couple of manuals. My first chance to try the machine came a few weeks later, when one of the upperclassmen at the fraternity I was pledging needed to know the five roots of a particular fifth-degree equation. I decided that it would be fun to compute the roots by using the 650. More precisely, I had been reading the manual for the Wolontis-Bell Labs Interpreter, and I decided that polynomial root finding would be a good test case.

A program for the Bell System (as we called it) consisted of 10-digit numbers like 1 271 314 577 which meant "Add the (floating-point) number in location 271 to the (floating-point) number in location 314 and put the result in location 577." I found a book that gave formulas for the roots of a general fourth-degree equation; so it was easy to factor a general real polynomial of degree 5 by first doing a simpleminded search for a real root r, then dividing by x-r and plugging the result into the formulas for quartics.
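
The "dividing by x-r" step mentioned above is ordinary synthetic division. A minimal sketch follows; the function name `deflate` and the sample polynomial are illustrative assumptions, and the root search and the quartic formulas themselves are not shown.

```python
# Hypothetical sketch of deflating a polynomial by a known root r.
def deflate(coefficients, r):
    """Divide a polynomial (coefficients listed from highest degree down)
    by (x - r). Returns (quotient_coefficients, remainder)."""
    partial = []
    carry = 0
    for c in coefficients:
        carry = carry * r + c     # Horner step
        partial.append(carry)
    remainder = partial.pop()     # last Horner value is p(r)
    return partial, remainder


# (x-1)(x-2)(x-3)(x-4)(x-5) = x^5 - 15x^4 + 85x^3 - 225x^2 + 274x - 120
quintic = [1, -15, 85, -225, 274, -120]
quartic, remainder = deflate(quintic, 1)
print(quartic)    # [1, -14, 71, -154, 120]
print(remainder)  # 0
```

A zero remainder confirms that r is a root; the four remaining roots then come from the quartic.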

I realize now how lucky I was to have had such a good first encounter with computers. The polynomial problem was well matched to my mathematical knowledge and interests, and I had a chance for hands-on experience, pushing buttons on the machine and seeing it punch the cards containing the answers. Furthermore, the Bell language was an easy way to learn the notion of a program that a machine could carry out. I've forgotten the name of the fraternity brother who asked me to solve this particular problem, but I bet he's kicking himself now for not having done it himself.

I often wonder whether it might not still be best to teach programming to novices by starting with a numeric language like that of the Bell interpreter, instead of an algebraic language like BASIC or LOGO. I think a small child can understand machinelike language better than an algebraic language. But I know that such ideas are now considered out of date, and I suppose I'm being an old fogy.

I learned a few years ago that the Bell interpreter had been inspired by John Backus's Speedcoding system for the IBM 701 (Backus 1954). During my student days I had never heard of the 701, and this, I think, leads to an important point: The IBM 650 was the first computer to be manufactured in really large quantities. Therefore the number of people in the world who knew about programming increased by an order of magnitude. Most of the world's programmers at that particular time knew only about the 650, and were unaware of the already extensive history of computer developments in other countries and on other machines. We can still see this phenomenon occurring today, as the number of programmers continues to grow rapidly.

When I did finally learn about the existence of the IBM 701, it had been improved to the 709, and it was shortly to become the 7090; but I must confess that I still liked my good old 650 a lot better. The 650 had only 44 operation codes (IBM 1955), while the 709 had more than 200; yet I never enjoyed coding for the 709, because I never seemed to be able to write short and elegant programs for it -- the opcodes didn't blend together especially well. By contrast, it was somehow quite easy and pleasant to do complex things on the 650 with very few instructions. Most of the commands in the 650's repertoire accomplished several things at once, and it was frequently possible to make good use of the side effects. For example, the instruction 60 1234 1009 meant, "Load the contents of location 1234 into the distributor; put it also into the upper accumulator; set the lower accumulator to zero; and then go to location 1009 for the next instruction." All four of these actions were often useful in the subsequent program steps.
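
The instruction word quoted above splits into a two-digit operation code, a four-digit data address, and a four-digit address of the next instruction. The sketch below decodes that layout and mimics the four side effects listed for code 60. It is a simplified illustration only: signs and the real register widths are ignored, and the names `decode_650`, `execute_60`, and the dictionary-based machine state are hypothetical.

```python
# Hypothetical, simplified model of one 650 instruction (signs ignored).
def decode_650(word):
    """Split a 10-digit instruction into (operation, data address, next address)."""
    if not 0 <= word < 10**10:
        raise ValueError("a 650 word holds ten decimal digits")
    digits = f"{word:010d}"
    return int(digits[:2]), int(digits[2:6]), int(digits[6:])


def execute_60(state, memory, word):
    """Apply the effects described in the text for operation code 60."""
    operation, data_address, next_address = decode_650(word)
    if operation != 60:
        raise ValueError("this sketch models operation code 60 only")
    value = memory[data_address]
    state["distributor"] = value   # contents of the data address go to the distributor
    state["upper"] = value         # ... and also to the upper accumulator
    state["lower"] = 0             # the lower accumulator is cleared
    state["next"] = next_address   # the instruction names its own successor
    return state


print(decode_650(6012341009))  # (60, 1234, 1009)
state = execute_60({}, {1234: 42}, 6012341009)
print(state)  # {'distributor': 42, 'upper': 42, 'lower': 0, 'next': 1009}
```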

In fact, I usually got by with only 34 of the 44 opcodes, because I seldom had a good application for the ten "branch on distributor digit equal to 8" commands. After 25 years I still can remember the numeric codes for most of the remaining 34 ops; and I'll never forget the fact that addresses 8001, 8002, and 8003 referred to the distributor, lower, and upper accumulator registers.

The 650's "one-plus-one address" code, in which each instruction designated the location of its successor (and branch instructions designated both successors), has been rejected by modern machine designers. But it was in fact extremely effective, because it allowed convenient subroutine linkage and because it became easy to execute instructions from registers. A one-plus-one scheme was important, of course, on a machine without efficient access to all words of memory, because instructions could be located in "optimum" places on the magnetic drum.
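
The idea of an "optimum" place can be sketched under some stated assumptions. The 650 drum held its 2,000 words in bands of 50, so an address modulo 50 gives a word's angular position. The function below picks, from a set of free addresses, the one that will arrive under the read heads soonest after an instruction finishes. The function name, the timing argument `word_times`, and the sample addresses are hypothetical; the timing rules SOAP actually applied are not reproduced here.

```python
WORDS_PER_BAND = 50  # angular positions on the drum; address % 50 is the position


def optimum_location(current, word_times, free):
    """Pick the free address that comes around soonest once the instruction
    stored at `current` has used up `word_times` drum word-times."""
    wanted = (current + word_times) % WORDS_PER_BAND

    def wait(address):
        # Extra word-times the machine would idle before `address` arrives.
        return (address - wanted) % WORDS_PER_BAND

    return min(free, key=lambda address: (wait(address), address))


# Instruction at 0100 (position 0), assumed to need 5 word-times.
print(optimum_location(100, 5, {103, 155, 207, 1999}))  # 155
print(optimum_location(100, 5, {103, 207}))             # 207
```

Since every instruction carries the address of its successor, the assembler is free to scatter a program around the drum this way without changing its meaning.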

The incredible thing about the 650 was that we could do so many things with it, although it was three orders of magnitude slower than today's computers, and it had three orders of magnitude less memory. The memory-space limitation was more important than anything else during my first year of programming. I had to learn how to pack data and how to use subroutines in order to save space. For example, my first large program was a tic-tac-toe routine that "learned" to play by remembering the relative desirability or undesirability of each position that it had ever encountered. The hardest part was figuring out how to keep one digit of memory for each possible configuration of the board; board positions that were equivalent under rotation or reflection were considered to be identical.
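
Treating positions that differ only by rotation or reflection as identical amounts to choosing one representative per equivalence class. The sketch below shows one way to do that; the text does not describe how the original routine did it, and the board encoding (nine cells in row-major order) and the function names are assumptions.

```python
# Hypothetical sketch: canonical form of a 3x3 board under the 8 symmetries.
def rotate(board):
    """Rotate a row-major 3x3 board by a quarter turn."""
    return tuple(board[3 * (2 - c) + r] for r in range(3) for c in range(3))


def reflect(board):
    """Mirror a row-major 3x3 board left to right."""
    return tuple(board[3 * r + (2 - c)] for r in range(3) for c in range(3))


def canonical(board):
    """Return the smallest of the 8 rotated/reflected variants, so that
    equivalent positions share a single key (and a single stored digit)."""
    variants = []
    for start in (tuple(board), reflect(tuple(board))):
        current = start
        for _ in range(4):
            variants.append(current)
            current = rotate(current)
    return min(variants)


# An X in any of the four corners is the same position up to symmetry.
corners = ["X........", "..X......", "......X..", "........X"]
print(len({canonical(b) for b in corners}))  # 1
```

Keying the learned desirability values on `canonical(board)` stores one entry per class instead of up to eight.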

The first program that I ever wrote in machine language still stands out in my mind. It was June 1957, and my freshman year at Case had just ended. I decided to hang around Cleveland instead of going home, and I was allowed to stay up all night playing with the computer by myself. So I decided to write a program that would find prime factors. The idea was that a person could set up a 10-digit number in the console switches and start my routine, which would punch the corresponding prime factors on a card and stop; then another number could be set up and factored in the same way, etc. I believe my first draft program was about 80 instructions long, but I didn't save it, so I can't be sure. Anyway, I wrote it as a sequence of about 80 decimal numbers, and punched it onto cards -- much as I had done with my previous (Bell system) program for root-finding. Then I sat down at the console of the machine and began to learn how to debug, using the half-cycle switch to step through the instructions slowly, or using the address-stop switch to discover when the program used particular locations for data or instructions. The 650 console was excellent for on-line debugging, and nobody else was using the machine at that time of night.

Well, my program was riddled with errors, and I removed them one by one during the next two weeks. Besides the "obvious" mistakes, I hadn't realized at first that a 10-digit number can have as many as 33 prime factors. Only eight numbers could be punched on a card, so I would have to punch up to five cards. (My original program had only thought of punching one card.) Then I had to clear the memory between runs so that spurious data from a previous factorization wouldn't appear on the next one, and so on. You know the story; we all make the same mistakes. I was lucky enough to have the opportunity to make lots of mistakes right from the beginning, and to diagnose them all by myself, sitting at the machine. All the facts I needed were available to me, because I was working in machine language, and no operating system or other software was interposing itself between me and what I needed to know. Debugging took a long time at first, but I think I had the machine to myself about six hours every night. Finally I arrived at a program that was satisfactory; I vaguely recall that it took about 11 minutes to determine that the number 9999999967 was prime, although at one point this particular test case had taken 17 minutes.
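
The figures in this paragraph can be checked with a short sketch: 2^33 = 8,589,934,592 is a 10-digit number with 33 prime factors, and at eight numbers per card that takes five cards. The code uses plain trial division as an illustration; it is not a reconstruction of the original 650 routine, and the function names are hypothetical.

```python
import math

NUMBERS_PER_CARD = 8  # from the text: only eight numbers could be punched on a card


def prime_factors(n):
    """Return the prime factors of n (with repetition) by trial division."""
    factors = []
    divisor = 2
    while divisor * divisor <= n:
        while n % divisor == 0:
            factors.append(divisor)
            n //= divisor
        divisor += 1 if divisor == 2 else 2   # after 2, try odd divisors only
    if n > 1:
        factors.append(n)                     # whatever remains is prime
    return factors


def cards_needed(n):
    """Number of cards required to punch every prime factor of n."""
    return math.ceil(len(prime_factors(n)) / NUMBERS_PER_CARD)


worst_case = 2**33  # 8589934592, the 10-digit number with the most prime factors
print(len(prime_factors(worst_case)), cards_needed(worst_case))  # 33 5
```

Running `prime_factors(9999999967)` exercises the slow path the paragraph mentions, since every odd divisor up to about 100,000 has to be tried.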

By this time my program had grown to 140 words long, and I think I had changed each of the instructions at least twice. I had also learned about the SOAP assembly language (Poley and Mitchell 1955), so my final program was expressed in symbolic form; I had been weaned away from numeric machine language during those two weeks. The success of this program gave me the confidence to try another (which converted a given number on the console switches to a specified radix); then I was ready for tic-tac-toe.

The SOAP language allowed symbols to be up to five letters long, and I recall spending a lot of time trying to come up with suitable names. It was a great moment when I hit on the right term for the program step that was to be executed when the computer had won at tic-tac-toe by finding three x's in a row: I called that step BINGO.

I regret to report that I've recently looked again at my prime factors and tic-tac-toe programs, and they are entirely free of any comments or documentation.

Shortly afterward I got hold of the SOAP II manual (Poley 1957), which impressed me greatly and had an enormous influence on my subsequent career. This manual included the entire listing of SOAP II in its own language, and the program was absolutely beautiful. Reading Poley's code was like listening to a symphony; I wanted to be able to compose programs like that. I also learned several new techniques, such as hashing, from this code. My next project was to write a modification of SOAP II that would have worked on a 650 with only 1000 words of memory. (I knew that such machines were sold, but I never actually saw one.) Then I spent the rest of the summer writing SOAP III (Knuth 1958), which went the other way by adding additional features for enhanced 650s that had index registers and/or floating-point hardware.

SOAP III was my introduction to software writing. In particular, I learned about what is now called "creeping featurism," where each of my friends would suggest different things they wanted in an assembler. I probably tried to accommodate them all, since SOAP III had 24 pseudo-operations that were not in SOAP II. I also left 150 memory locations available for user-defined pseudo-operations. I put liberal comments into the code, having learned that lesson at last.

Our lab received an amazing program from Carnegie Tech during the summer of 1957, namely the famous IT compiler by Perlis and Smith (1957). IT took algebraic statements as input, then computed awhile, and punched SOAP programs as output. I had no idea how such a feat would be possible, but I got hold of the program listing at the end of the summer, and I read it while vacationing with my parents at a beach resort on Lake Erie. This program was not beautifully written like Poley's, but it accomplished remarkable things, so I naturally had an urge to rewrite everything in the style of 650 coding that I had just learned. Bill Lynch and I began this project late in 1957, under the direction of Fred Way III and George Haynam. We first called our program Compiler III, but it eventually became known as RUNCIBLE (Knuth 1959b; Case 1959). The language was a superset of Perlis's IT, and we worked very hard to squeeze in as many new features as we could.

Somehow it was possible to cram a complex compiler into the 2000 words of the 650. Yet when we were done, I don't think we could have gotten by with only 1999 words, because we had spent considerable time finding every last bit of space -- by using terrible tricks so that small changes to one part of our code would usually cause some apparently unrelated part to blow up. I guess Parkinson's Law applies to programs as well as to organizations; we kept adding features until the space was filled.

RUNCIBLE had four versions called AX, AY, BX, and BY, where X stood for object code that invoked subroutines for floating-point arithmetic, while Y stood for object code that used the 650's optional floating-point hardware; A stood for SOAP output, while B stood for directly loadable machine-language programs punched five per card (and bypassing the need for assembly). It turned out that the X version became a Y version by replacing exactly 95 instructions by 95 others; similarly, the A version became a B version by replacing exactly 406 instructions by 406 others. If we discovered a way to save one line of code in, say, the A version, we looked closely at the B version until we had saved a line there, too.

We called the A version "two-pass operation," while the B version was called "one-pass." At the end of the summer I hacked together a "zero-pass" version that took one less pass than B, since it loaded machine instructions directly into their memory locations instead of punching anything on cards. For this I had to eliminate the matrix feature of IT; that is, doubly subscripted arrays were not permitted in "RUNCIBLE zero." My main goal was to prove that 2000 words of memory were not too few for a compile-load-and-go system, because somebody (Perlis?) had reportedly said that it would be impossible.

By 1959 our lab had acquired the ultimate in 650 upgrades: we had a full 653 system (IBM 1959) including index registers, floating-point hardware, and 60 whole new words of core memory! It was heavenly. Besides this, we put our printer on-line (so that listings didn't have to be made via cards), and we acquired a RAMAC disk storage, as well as several tape units.

At this point it was desirable to have a new assembly program so that we would make proper use of the new equipment. I therefore wrote SuperSoap (Knuth 1959a), a major improvement over SOAP III. I'm still pretty proud of SuperSoap, because it introduced some good ways of dealing with programs that would be loaded into the drum but executed from core, and because I had the courage to remove some features of SOAP III that didn't work as well as planned. Furthermore, SuperSoap introduced what I think was the best approach to the problem of "optimizing" the drum locations of data and instructions for the 650; it was a combination of machine and hand methods (Knuth 1961).

The name SOAP stood for Symbolic Optimal Assembly Program, and optimal meant that the machine would choose drum locations so that at least one reference to that location would involve no delay. Such optimization was much better than random placement of instructions; I had (for fun) experimented with SHOAP, a "Symbolic Horribly Optimizing Assembly Program" that used the algorithm of SOAP in reverse so that at least one reference to each location would lead to a 49-word-time delay. By adding seven cards to the normal SOAP program deck, you had SHOAP, which produced extremely slow programs. Conversely, it was possible to improve significantly on SOAP's performance by choosing locations carefully by hand and rewriting the program when necessary, as I discuss in Knuth (1961); the Bell interpretive system had been hand-optimized in a particularly beautiful way, which was quite an inspiration to me. In 1958, I wrote HAND SOAP, which permitted me to hand-optimize the locations without giving up the advantage of symbolic assembly. We used HAND SOAP to prepare the runtime system for RUNCIBLE; SuperSoap was later designed to incorporate similar ideas into a full-fledged assembler.
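The idea of "optimal" placement can be illustrated with a much simplified sketch. It assumes only that the drum holds 2000 words in bands of 50, so that a word at address a comes under the heads at word-time a mod 50. The real SOAP rules also depended on the timing of each operation code, which is not modeled here; `ready_time`, `place`, and `delay` are hypothetical names.

```python
WORDS_PER_BAND = 50  # assumed drum layout: 40 bands of 50 words
DRUM_SIZE = 2000


def delay(ready_time, address):
    """Word-times spent waiting for `address` to rotate under the heads."""
    return (address - ready_time) % WORDS_PER_BAND


def place(ready_time, free, worst=False):
    """Pick a free drum address for a word that is needed at `ready_time`.

    worst=False picks the smallest delay (in the spirit of SOAP);
    worst=True picks the largest delay (in the spirit of SHOAP).
    Ties are broken in favor of the lowest address.
    """
    if worst:
        address = max(free, key=lambda a: (delay(ready_time, a), -a))
    else:
        address = min(free, key=lambda a: (delay(ready_time, a), a))
    free.remove(address)
    return address


free = set(range(DRUM_SIZE))
good = place(7, free)               # a location with no waiting
bad = place(7, free, worst=True)    # a location that has just gone by
print(good, delay(7, good))
print(bad, delay(7, bad))
```

In this model the worst choice is the location that passed under the heads one word-time earlier, which costs 49 word-times, matching the SHOAP figure quoted above.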

Somebody in 1958 or so circulated a joke about a program called RINSO, a "Real Ingenious New Symbolic Optimizer"; we were carried away by acronyms in those days. For some reason there has been an intimate relation between cleaning agents and software that I have written through the years, even though my programs haven't always been very clean. For example, John McNeley and I devised a system called SOL in 1963 (Knuth and McNeley 1964), and when I visited Norway a few years later I learned that SOL is the name of a Norwegian laundry detergent. Even more amazing was that my MIXAL assembler language (Knuth 1968) turned out to have the same name as a popular detergent in Yugoslavia -- although I had had no idea that MIXAL would even be a word in any language! More recently, I have learned that TEX is a brand name for toilet paper in Greece … but I am digressing.

My preface to the SuperSoap manual (Knuth 1959a) gives a glimpse into the mood that prevailed at IBM 650 sites during the late 1950s:

Soap 3 was written attempting to get as many features into 2000 memory locations as possible, but SuperSoap was written under a different philosophy; speed was the prime consideration, and storage space was conserved only when speed was not appreciably decreased. A factor of roughly 3:1 in running time over Soap 3 has thus been obtained…. Some of the pseudo-op rules have become more logical thanks to Carnegie Tech's TASS [a competing assembler, written by Art Evans]…. Once again much gratitude must be given to the Case Computing Center for letting me chew up thousands of cards.

On rereading SuperSoap, I find most of it reasonably similar to today's assemblers except in one significant respect: We assumed in 1959 that the computer lab would be an "open-shop" operation in which any student could come in and take personal charge of the machines while running a program. Therefore the error messages in SuperSoap consisted of machine halts, and my manual gave the following advice for error recovery:

SuperSoap believes that the best place to catch errors is during assembly, and so it will stop if it finds something amiss…. The offending card is the fourth-last card out if you clear the read feed…. To restart, correct the bad card, … reinsert it in the deck, and hit Program Start.

There was a keypunch right next to the console, so this was probably the most efficient way to get the job done in those days.

Cards, cards, cards; we used tens of thousands each day. The run-time system of RUNCIBLE had a debugging feature whereby you could turn the console knobs and get a card punched for every statement of your program that was being executed; or you could even trace every machine-language operation, with one card per instruction. The 533 Card Read Punch could produce 100 cards per minute, and it often did.

One of the nice things about the 650 and its peripherals was their robustness. Our computing center staff could safely let random students work all of the IBM machines, changing plugboard control panels, clearing the punch hopper, mounting tapes, fixing card jams, etc., without worrying that the machines would be ruined. (This was emphatically not the case for the Univac equipment in another part of our laboratory; those machines had been designed with the assumption of a trained operator in attendance, and I tended to break them accidentally every time I went nearby. If all computers had been like those, a lot of people like me would never have gotten a good start on the use of computers, because we would never have been allowed to touch them.) During all my experience with the 650 I can remember only two instances where the design could perhaps have been slightly more foolproof: Once I discovered a special case of the divide operation that put our machine into an infinite loop, restartable only by hitting Power Off. (Later I visited Carnegie Tech and tried it on their 650; it blew the fuse! Ah yes, those were the joys of student life.) The other time was when one of the tiny console display lights was broken; the glass was gone and two little wires were sticking out. I changed the display so that this particular light was off, then tried to pull out the broken bulb by grabbing onto what looked like a dead filament. This gave me quite a jolt, and I was sick for a day or so. Perhaps the machine was trying to fill my brain with advice about how to write better software; or perhaps it was trying to kill me.

By 1959 I had developed a pretty good style of 650 coding, and I must confess also being addicted to tricks. One of the competitions among students was to do as much as possible with programs that would fit on a single card -- which had room for only eight instructions. One of the unsolved problems was to take the 10-digit number on the console and to reverse its digits from left to right, then display the answer and stop; nobody could figure out how to do this on a single card. But one day I proudly marched up to the machine and made a demonstration: I read in a card, then dialed the number 0123456789 on the console, and started the machine. Sure enough, it stopped, displaying the number 9876543210. Everybody applauded. I didn't explain until later that my card would display the number 9876543210 regardless of what number appeared on the console switches.

There's more to the story. Our machine had an extra set of console switches, which were called register 8004. (As far as I know, Case's 650 was unique with this particular feature.) It turned out that nine instructions on an extended 650 were sufficient to reverse the digits of a number, and the ninth instruction could be put into one of the sets of console switches. Therefore I was able to solve the problem without cheating (see the appendix following).
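For comparison, the digit-reversal task itself is easy to state in a modern language. The sketch below is not the nine-instruction 650 program mentioned above; it only shows what that program had to compute, treating the input as a fixed-width 10-digit word. The function name is hypothetical.

```python
def reverse_digits(n, width=10):
    """Reverse the decimal digits of n, treated as a fixed-width word."""
    result = 0
    for _ in range(width):
        n, digit = divmod(n, 10)        # peel off the low-order digit
        result = result * 10 + digit    # append it to the result
    return result


print(reverse_digits(123456789))  # the console setting 0123456789
```

Because the width is fixed, leading zeros count: the console value 0123456789 reverses to 9876543210, the number shown in the demonstration.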

The dirtiest trick I ever discovered for the extended 650 was to use the instruction "shift and count by 9004" in a certain context. This one instruction caused four things to happen simultaneously: (1) the upper accumulator was shifted left by four digits; (2) the lower accumulator was set equal to 10; (3) the core memory "timing ring" was set to 9004; and (4) the overflow indicator was turned on. I had an application in which all four of those things were useful.

SuperSoap was the last "system" software I wrote for the 650, although I wrote many application programs during the following year. Then I graduated, and began to tackle other machines. My favorite computer for the next five years became the Burroughs 220, which was another joy to use.

A number of my classmates and co-workers at Case later became leading figures in other computing centers; they include Bill Lynch, Mel Conway, Joe Speroni, Gilbert Steil, Jack Alanen, Mike Harrison, and many others. Our incubation period with the 650 was the foundation of our later work. And the same is true for thousands of other people (such as Bob Floyd) who became intimately familiar with 650s at other computer centers.

What was it about the 650 that made our experiences such a good foundation for our later careers?

Surely I wouldn't recommend that today's software be produced as we did the job then; we would never advance very far past the rudimentary levels achieved in those days, if we remained rooted in that methodology. But growing up with the 650 gave us valuable intuitions about what is easy for a machine to do and what is hard. It was a great machine on which to learn about machines. We had a machine organization that was rudimentary but pleasant to use; and we had program masterpieces like the Bell interpreter and Poley's assembler, as examples of excellent style.

We were forced to think and to develop our abilities to make mental abstractions about control structures; these experiences seem to have made us better able to do complex things later, when the task became easier. I'm reminded that Edsger Dijkstra began his programming experience in a similar way (but on a different computer); he and Zonneveld wrote the first ALGOL 60 compiler in a strictly numeric machine language.

This article about the 650 has turned out to be largely autobiographical. The fact is, it's impossible for me to write about that wonderful machine without writing about myself. We were very close. (One night I missed a date with my wife-to-be, because I was so engrossed in debugging that I had forgotten all about the time. I'll never live that down.) The 650 provided me with solid instruction in the art of computer programming. It was directly related to the topics of the first two technical articles that I ever submitted for publication (Knuth 1959b; 1961). Therefore it's not at all surprising that I decided in 1967 to dedicate my books on computer programming

" ... to the Type 650 computer once installed at Case Institute of Technology, in remembrance of many pleasant evenings."

in Annals of the History of Computing, 08(1) January 1986 (IBM 650 Issue)

At Carnegie Tech (now CMU) the 650 arrived in July 1956. Earlier in the spring I had accepted the directorship of a new computation center at Carnegie that was to be its cocoon. Joseph W. Smith, a mathematics graduate student at Purdue, also came to fill out the technical staff. A secretary-keypuncher, Peg Lester, and a Tech math grad student, Harold Van Zoeren, completed the staff later that summer. The complete annual budget -- computer, personnel, and supplies -- was $50,000. During the tenure of the 650, the center budget never exceeded $85,000. Before the arrival of the computer, a few graduate students and junior faculty in engineering and science had been granted evening access to a 650 at Mellon National Bank. In support of their research, the 650, largely programmed using the Wolontis-Bell Labs three-address interpreter system, proved invaluable. The success of their efforts was an important source of support for the newly established Computation Center.

The 650 operated smoothly almost immediately. The machine was quite reliable. Even though only a one-shift maintenance contract was in force, by the start of fall classes the machine was being used on the second shift, as well as weekends. The talented user group, the stable machine, two superb software tools -- SOAP (Poley 1957) and Wolontis (see Technical Newsletter No. 11 in this issue) -- and an uninhibited open atmosphere contributed to make the center productive and, even more, an idea-charged focus on the campus for the burgeoning insights into the proper -- nay, inevitable -- role of the computer in education and research. Other than the usual financial constraints, the only limits were lack of time and assistance. The center was located in the basement of the Graduate School of Industrial Administration (GSIA). Its dean, Lee Bach, was an enthusiastic supporter of digital computation. Consequently, he was not alarmed at the explosion in the use of the center by his faculty and graduate students, and he acceded graciously to the pressure, when it came, to support the center in its requests to the administration for additional space and equipment.

From its beginning the center, its staff, and many of the users were engaged in research on programming as well as with programming. So many problems were waiting to be solved whose programs we lacked the resources to write: We were linguistically inhibited, so that our programs were too often exercises in stuttering fueled by frustration. Before coming to Carnegie, Smith and I had already begun work on an algebraic language translator at Purdue intended for use on the ElectroData Datatron computer, and we were determined to continue the work at Carnegie. The 650 proved to be an excellent machine on which to pursue this development. Indeed, the translator was completed on the 650 well before the group at Purdue finished theirs. The 650 turned out to have three advantages over the Datatron for this particular programming task: punched cards being superior to paper tape, simplicity in handling alphanumerics, and SOAP. The latter was an absolutely crucial tool. Any large programming task is dominated by the utility with which its parts can be automatically assembled, modified, and reassembled.

The translator, called IT for Internal Translator (see Perlis and Smith 1957), was completed shortly after Thanksgiving of 1956. In the galaxy of programming languages IT has become a star of lesser magnitude. IT's technical constructs are of historical interest only, but its existence had enormous consequences. Languages invite traffic, and use compels development. Thus IT became the root of a tree of language and system developments whose most important consequence was the opening of universities to programming research. The 650, already popular in universities, could be used the way industry and government were soon to use FORTRAN, and education could turn its attention to the subject of programming over and above applications to the worlds of Newton and Einstein. The nature of programming awaited our thoughts.

No other moment in my professional life has approached the dramatic intensity of our first IT compilation. The 650 accepted five cards (see Figure 1) and produced 42 cards of SOAP code (see Figure 2) evaluating a polynomial. The factor of 8 was a measure of magic, not the measure of a poor code generator. For me it was the latest in a sequence of amplifiers, the search for which exercises computation. The 650 implementation of IT had an elastic quality: it used 1998 of the 2000 words of 650 storage, no matter what new feature was added to the language. Later in 1957 IT-2 was made available and bypassed the need for SOAP completely. IT-2 translated the IT language directly into machine code. By the beginning of 1958 IT-3 became available. It was identical to IT-2 except that all floating-point arithmetic was performed in double precision. For its needs GSIA produced IT-2-S, which was IT-2 using scaled fixed-point arithmetic. The installation of the FORTRAN character set prompted the replacement of IT-9 by IT-2-A-S, which used both the FORTRAN character set and floating-point hardware. With IT-2-A-S the work on IT improvements came to an end at Carnegie.

While the IT developments were being carried out within our Computation Center, parallel efforts were under way on our machine in the development of list-processing languages under the direction of Allen Newell and Herbert Simon. The IPL family and the IT family have no linguistic structure in common, but they benefited from each other's existence through the continual interaction of the people, problems, and ideas within each system.

The use of Wolontis decreased. Soon almost all computation was in IT, and use expanded to three shifts. By the end of the summer of 1957, IT was in the hands of a number of other universities. Case and Michigan made their own improvements and GAT, developed by Michigan, became available in 1958 (see Arden and Graham 1958). It bypassed SOAP, producing machine code directly, and used arithmetic precedence. We were so impressed by GAT that we immediately embarked on its extension and produced GATE (GAT Extended) by spring of 1959. GATE was later transported to the Bendix G-20 when that computer replaced the 650 at Carnegie in 1961.

The increased use of the machine and the increased dependence on IT and its successors as a programming medium pressured the computing center into continual machine expansion. As soon as IBM provided enhancements to the 650 that would improve the use of our programming tools, our machine absorbed them: the complete FORTRAN character set, index registers, floating point, 60 core registers, masking and format commands and, most important, a RAMAC disk unit. All but the last introduced trivial modifications to our language processors. There was the usual grumbling from some users because the enhancements pressured (not required) them to modify both the form and logic of their programs. The users were becoming computer-bound by choice as well as need, though, and they had learned the first, and most important, lesson of computer literacy: In man-machine symbioses it is the human who must adjust, adapt, and learn as the computer evolves along its own peculiar gradients. Getting involved with a computer is like having a cannibal as a valet.

Most universities opted for magnetic tape as their secondary storage medium; Carnegie chose disks. Our concern with the improvement of the programming process had thrust upon us the question: How do programs come into being? Our answer: Pure reasoning and the artful combination of programs already available, understood, and poised for modification and execution. It is not enough to be able to write programs easily; one must be able to assemble new ones from old ones. Sooner or later everyone accepts this view -- first mechanized so admirably on the EDSAC almost 40 years ago (Wilkes, Wheeler, and Gill 1957). Looked at in hindsight, our concern with the process of assembly was an appreciation of the central role evolution plays in the man-computer dialogue: making things fit is a crucial part in making things work. It was obvious that retention and assembly of programs was more easily realized with disk than with tape. Like everything else associated with the 650, the RAMAC unit worked extremely well. Computation became completely dependent on it.

GATE, our extension of GAT, made heavy use of the disk (Perlis, Van Zoeren, and Evans 1959). Programs were getting larger, and a form of segmentation was needed. The assembly of machine-language programs and already compiled GATE programs into new ones was becoming a normal mode of use. GATE provided the vehicle for accomplishing these tasks. The construction of GATE was done by Van Zoeren and Smith. Not all programs originated in GATE; some were done in machine language. SOAP, always our model of a basic machine assembly language, had matured into SOAP II but had not developed into an adult assembler for a 650 with disks. IBM was about to stunt that species, so we designed and built TASS (Tech Assembly System). Smith and Arthur Evans wrote the code; Smith left Carnegie, and Evans completed TASS. A few months later he extended it to produce TASS II and followed it with SUPERTASS. TASS and its successors were superb assemblers and critical to our programming research (Perlis, Smith, and Evans 1959).

Essentially, any TASS routine could be assembled and appended to the GATE subroutine library. These routines were relocatable. GATE programs were fashioned from independently compiled segments connected by link commands whose executions loaded new segments from disk. Unfortunately, we never implemented local variables in GATE, although their value was appreciated and an implementation was sketched.

The TASS family represented our thoughts on the combinational issues of programming. In the TASS manual is the following list of desiderata for an assembler:

1. Programs should be constructed from the combination of units (called P routines in TASS) so that relationships between them are only those specified by the programmer.

2. A programmer should be able to combine freely P routines written elsewhere with his own.

3. Any program, once written, may become a P routine in the library.

4. When a P routine is used from the library, no detailed knowledge of its internal structure is required.

5. All of the features found in SOAP II should be available in P routines.

TASS supported an elaborate, but not rococo, mechanism for controlling circumstances when two symbols were (1) identical but required different addresses and (2) different but required identical addresses. Communication between P routines was possible both at assembly and at run time. Language extension through macrodefinitions was supported. SUPERTASS permitted nesting of macrocalls and P routine definition. Furthermore, SUPERTASS permitted interruptions of assembly by program execution and interruption of execution for the purpose of assembly.
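The two symbol situations described above can be illustrated with a toy model. This is not TASS's actual mechanism, whose details the text does not give; it is a minimal sketch that assumes one symbol table per P routine plus an explicit way to make two symbols share an address. The class and method names are hypothetical.

```python
class ToyAssembler:
    """Toy symbol handling: one table per routine, sequential addresses."""

    def __init__(self, first_free=0):
        self.next_free = first_free
        self.tables = {}  # routine name -> {symbol: address}

    def address(self, routine, symbol):
        """Return the address of `symbol` in `routine`, allocating if new."""
        table = self.tables.setdefault(routine, {})
        if symbol not in table:
            table[symbol] = self.next_free
            self.next_free += 1
        return table[symbol]

    def equate(self, routine_a, symbol_a, routine_b, symbol_b):
        """Make symbol_a in routine_a share the address of symbol_b in routine_b."""
        shared = self.address(routine_b, symbol_b)
        self.tables.setdefault(routine_a, {})[symbol_a] = shared


asm = ToyAssembler()
# (1) identical symbols in different routines get different addresses
print(asm.address("P1", "LOOP"), asm.address("P2", "LOOP"))
# (2) different symbols can be made to name the same address
asm.equate("P1", "TOTAL", "P2", "SUM")
print(asm.address("P1", "TOTAL"), asm.address("P2", "SUM"))
```

Keeping a separate table per routine is what lets a library routine be used without knowledge of its internal names, which is the point of the fourth desideratum in the list above.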

Many of the modern ideas on modularization and structured programming were anticipated in TASS more as logical extensions to programming than as good practice. As so often happens in life cycles, both TASS and GATE attained stable maturity about the time the decision to replace the 650 by the Bendix G-20 was made.

Three other efforts to smooth the programming process developed as a result of the programming language work. IBM developed the FORTRANSIT system (see Hemmes in this issue) for translating FORTRAN programs into IT, thus providing a gradient for programs that would anticipate the one for computer acquisition. Van Zoeren (1959) developed a program GIF, under support of Gulf Oil Research, that went the other way so that programs written by their engineering department for their 650 could run on an available 704. Both programs were written in SOAP II. GATE translated programs in one pass, statement by statement. Van Zoeren quickly developed a processor called CORREGATE that enabled editing of compiled GATE programs by processing, compiling, and assembling into already compiled GATE programs only new statements. GATE was anticipating BASIC, although the interactive, time-sharing mode was far from our thoughts in those days.

As so often happened, when a new computer arrived, sufficient software didn't. The 650 was replaced in the spring of 1961 by a superior computer, the Bendix G-20, whose software was inferior to that in use on our 650. For a variety of reasons, it had been decided to port GATE to the new machine -- but no adequate assembly language existed in which to code GATE. TASS had become as complex as GATE and appeared to be an inappropriate vehicle to port to the new machine, particularly because of the enormous differences in instruction architecture between the two machines. Consequently, a new assembler, THAT (To Help Assemble Translators) was designed (Jensen 1961). It was a minimal assembler and never attained the sophistication of TASS -- another example of the nonmonotonicity of software and hardware development over time.

We found an important lesson in this first transition. In the design and construction of software systems, you learn more from a study of the consequences of success than from analysis of failure. The former uses evolution to expose necessary revolution; the latter too often invokes the minimal backtrack. But who gave serious attention to the issues of portability in those days?

The 650 was a small computer, and its software, while dense in function, was similarly small in code size. The porting of GATE to the G-20 was accomplished in less than one man-year by three superb programmers, Evans, Van Zoeren, and Jorn Jensen, a visitor from the Danish Regnecentralen. They shared an office, each being the vertex of a triangle, and cooperated in the coding venture: Jensen was defining and writing THAT, Evans was writing the lexical analyzer and parser, and Van Zoeren was doing the code generator. The three activities were intricately meshed much as co-routines with backtracking: new pseudo-operations for THAT would be suggested, approved, and code restructured, requiring reorganization in some of the code already written. This procedure converged quite quickly but would not be recommended for doing an Ada compiler.

in Annals of the History of Computing, 08(1) January 1986 (IBM 650 Issue)

in Bemer, Bob "Computer History Vignettes" (Web published, retrieved 2000)