IT(ID:21/it:001)

Internal Translator mathematical autocoder

for Internal Translator.

Alan Perlis, Mark Koschman, Sylvia Orgel, Joseph W. Smith and Joanne Chipps, Purdue University Computing Laboratory 1955-7

initially DATATRON 205 (later a Burroughs 205), to get around the problem of alphanumeric input.

Then ported to the IBM 650 at Carnegie Tech ca. 1957, where it became a translator into SOAP.

Knuth says "first really useful compiler"

Versions:

IT - Source code converted to PIT, thence to SPIT.

IT-2 - produced machine language directly

IT-3 - developed at Carnegie; added double-precision floating point.

Places

People: Hardware:

- Datatron 205 ElectroData (later Burroughs)

- IBM 650 IBM

Related languages

| Laning and Zierler | => | IT | Influence | |

| IT | => | Compiler II-SOAP II | Port | |

| IT | => | GAT | Evolution of | |

| IT | => | GIF | Translator for | |

| IT | => | IT 2 | Evolution of | |

| IT | => | PIT | Intermediate language for | |

References:

Introduction.

A compiler may be defined as a program which satisfies the following four conditions:

- Universality, i.e., the ability to translate into machine language any program which could have been coded in machine language. (This is trivially satisfied if the compiler can accept machine language.)

- Direct machine translation of flow charts into a program. A flow chart is here understood to consist of an ordering of substitutional and relational statements; and connectives.

- Automatic allocation of machine storage, since such allocation is independent of the logical structure of a problem.

- Extension of its list of recognizable symbols to include those corresponding to any flow chart when instructed to do so.

The motivations leading to the consideration of a compiler are obvious to anyone who has ever written at least one program. Nevertheless we summarize them briefly as follows:

- Programming is a highly repetitive technique and hence capable of automation.

- People who propose problems should program them.

- The ratio of the time required to flow chart a problem to that for programming a problem should be much larger than 1. Currently it is much smaller than 1 and as the machines get larger this ratio is tending to approach 0.

- Recognizing that computor design should be in perpetual transition, the structure of compilers may provide insight into the logical design of future computors.

in Proceedings of the 1956 11th ACM national meeting

Let me elaborate these points with examples. UNICODE is expected to require about fifteen man-years. Most modern assembly systems must take from six to ten man-years. SCAT expects to absorb twelve people for most of a year. The initial writing of the 704 FORTRAN required about twenty-five man-years. Split among many different machines, IBM's Applied Programming Department has over a hundred and twenty programmers. Sperry Rand probably has more than this, and for utility and automatic coding systems only! Add to these the number of customer programmers also engaged in writing similar systems, and you will see that the total is overwhelming.

Perhaps five to six man-years are being expended to write the Model 2 FORTRAN for the 704, trimming bugs and getting better documentation for incorporation into the even larger supervisory systems of various installations. If available, more could undoubtedly be expended to bring the original system up to the limit of what we can now conceive. Maintenance is a very sizable portion of the entire effort going into a system.

Certainly, all of us have a few skeletons in the closet when it comes to adapting old systems to new machines. Hardly anything more than the flow charts is reusable in writing 709 FORTRAN; changes in the characteristics of instructions, and tricky coding, have done for the rest. This is true of every effort I am familiar with, not just IBM's.

What am I leading up to? Simply that the day of diverse development of automatic coding systems is either out or, if not, should be. The list of systems collected here illustrates a vast amount of duplication and incomplete conception. A computer manufacturer should produce both the product and the means to use the product, but this should be done with the full co-operation of responsible users. There is a gratifying trend toward such unification in such organizations as SHARE, USE, GUIDE, DUO, etc. The PACT group was a shining example in its day. Many other coding systems, such as FLAIR, PRINT, FORTRAN, and USE, have been done as the result of partial co-operation. FORTRAN for the 705 seems to me to be an ideally balanced project, the burden being carried equally by IBM and its customers.

Finally, let me make a recommendation to all computer installations. There seems to be a reasonably sharp distinction between people who program and use computers as a tool and those who are programmers and live to make things easy for the other people. If you have the latter at your installation, do not waste them on production and do not waste them on a private effort in automatic coding in a day when that type of project is so complex. Offer them in a cooperative venture with your manufacturer (they still remain your employees) and give him the benefit of the practical experience in your problems. You will get your investment back many times over in ease of programming and the guarantee that your problems have been considered.

Extract: IT, FORTRANSIT, SAP, SOAP, SOHIO

The IT language is also showing up in future plans for many different computers. Case Institute, having just completed an intermediate symbolic assembly to accept IT output, is starting to write an IT processor for UNIVAC. This is expected to be working by late summer of 1958. One of the original programmers at Carnegie Tech spent the last summer at Ramo-Wooldridge to write IT for the 1103A. This project is complete except for input-output and may be expected to be operational by December, 1957. IT is also being done for the IBM 705-1, 2 by Standard Oil of Ohio, with no expected completion date known yet. It is interesting to note that Sohio is also participating in the 705 FORTRAN effort and will undoubtedly serve as the basic source of FORTRAN-to-IT-to-FORTRAN translational information. A graduate student at the University of Michigan is producing SAP output for IT (rather than SOAP) so that IT will run on the 704; this, however, is only for experience; it would be much more profitable to write a pre-processor from IT to FORTRAN (the reverse of FOR TRANSIT) and utilize the power of FORTRAN for free.

in "Proceedings of the Fourth Annual Computer Applications Symposium", Armour Research Foundation, Illinois Institute of Technology, Chicago, Illinois 1957

Appendix H Graphical Circuit Analysis Employing The IBM 650 Digital Computer

A program to perform the graphical analysis discussed in Section 1 has been prepared for the IBM 650 computer. The program has been run and the results obtained are shown in Fig. H-1. The program consists of two major parts. The first reduces the type 437A plate characteristics to digitized form and then interpolates several intermediate curves. The second performs the numerical computations as indicated in Section 1. Approximation of the characteristic curves was done by representing each curve by a number of straight line segments. The points thus obtained were converted to slope-intercept straight line equations. Each curve was represented by 4 segments. The method of interpolation used was to bisect the angle between a given segment and the respective segment on the next higher grid curve. This was repeated until 17 curves had been obtained from the original 5. Figure H-2 illustrates the original curves obtained experimentally. Figure H-3 shows the approximation used for representing these curves and Fig. H-4 shows the method of interpolation used. The equations solved by the program are listed after Fig. H-4. The results obtained agree closely with what would be expected with the values of circuit constants used. It is felt that the program would be useful for evaluating various combinations of input values. The program has been written so that any parameter of the circuit may be varied at will as well as the input pulse conditions. Since the original tube curves are not exact, it is felt that more exact curve fitting methods or interpolation methods would not be justified. The program was written in the "IT" language which is an interpretive translator from a mathematical language format to "SOAP" language. The deck of cards to the 650 program is available if desired.
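The curve approximation described above (five measured points per curve giving four straight segments in slope-intercept form, and repeated interpolation taking 5 curves to 17) can be sketched as follows. This is only an illustration in Python, not the original IT program: the function names are hypothetical, the sample points are made up, and the reading that each round inserts one new curve between every adjacent pair (5 to 9 to 17) is an assumption. The angle-bisection step itself is not reproduced because the text does not give enough detail.

```python
def to_slope_intercept(p, q):
    """Return (slope, intercept) of the straight line through points p and q."""
    (x1, y1), (x2, y2) = p, q
    slope = (y2 - y1) / (x2 - x1)
    return slope, y1 - slope * x1


def segments(points):
    """Turn n digitized points on one curve into n - 1 line segments."""
    return [to_slope_intercept(a, b) for a, b in zip(points, points[1:])]


def curves_after_rounds(n_curves, rounds):
    """Count curves if each round adds one curve between each adjacent pair."""
    for _ in range(rounds):
        n_curves = 2 * n_curves - 1
    return n_curves


# Made-up points standing in for one digitized plate-characteristic curve.
sample_curve = [(0, 0), (50, 10), (100, 30), (150, 60), (200, 100)]
print(len(segments(sample_curve)))   # 4 segments per curve
print(curves_after_rounds(5, 2))     # 17 curves from the original 5
```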

in "Proceedings of the Fourth Annual Computer Applications Symposium", Armour Research Foundation, Illinois Institute of Technology, Chicago, Illinois 1957

in Automatic Coding, Monograph No. 3, Journal of the Franklin Institute Philadelphia, Pa., April 1957.

in [ACM] CACM 1(07) July 1958

We had full intentions of using the IT language for our input to the Univac, as Mr. Bemer reported here last year, then changed our minds and decided that we needed something with "name" variables similar to Fortran or AT-3 (Math-matic).

We also desire to use a language that will be usable on both the Univac and the 650 without too much changing-around of the pseudo-code. This desire is motivated largely by both the presence of the two machines as well as by the very nature of our main activity, namely, educational work. The advantages of a common language (or, more properly, a compatible language) are quite clear but not easily attained on two machines of such different capacities.

At the present time we are planning to use a multistage process in translating from the pseudo-code (problem-oriented language) to the machine language.

It is likely that we will process the original pseudo-code into a simpler pseudo-code, then translate that code, etc., according to the following list:

POL

POL1

SML

Preprocessor

Unisap

C-10

POL will be an algebraic problem-oriented language which will be quite rich in its abilities (possibly including such things as general summation notation, matrix manipulation, "find maximum," "find minimum," differentiation, integration, etc.). The POL1 will be another algebraic problem-oriented language with about the same capabilities as presently found in AT-3 (Math-matic) or Fortran. SML is Simple Machine Language and is a language which has the characteristics of a computer but no reference at all to any particular computer. We have already completed the specifications of SML and how it is to work. Following SML will come a "preprocessor," so named because it was invented for the very purpose of preceding Unisap before its utility in this particular connection was evident.

The preprocessor is currently being debugged. The coding for SML to preprocessor is now being written. Unisap has already been mentioned as an excellent example of a symbolic assembly program and has been widely distributed. C-10 neither needs any explanation (it is the Univac machine language) nor does it particularly deserve explanation.

Extract: SOAP, CASE SOAP

I shall now descend from my blue-sky position and describe what we have already finished doing in the automatic programming business. We, as have several others, started our first efforts at automatic programming after taking a long close look at Dr. Perlis' IT compiler for the IBM 650. As usual, when one examines something created elsewhere, we thought that we could make some improvements in the existing scheme without giving up any of the advantages that were already present. Our first effort was then to write a program named "Compiler II-SOAP II." This program worked very well, turned out nice "tight" coding, and included IT as a subset language. The bulk of the flow charting, planning, and coding for Compiler II-SOAP II was done by two of my undergraduate students, Mr. Lynch, a sophomore, and Mr. Knuth, a freshman.

One of the more unpleasant experiences encountered in the work on the compiler was the discovery that SOAP II was unable to assemble the entire compiler owing to the symbol table becoming filled up at an early stage of the assembly process. The solution to the problem was obvious but not very satisfactory.

As a matter of fact we did modify SOAP II to dump the symbol table and then reload it again in modified form, but we abandoned this philosophy as not being a worthwhile solution to the problem. Therefore, Mr. Knuth suggested that he write a new symbolic assembly program with some new features incorporated in it. Accordingly, SOAP III (later renamed CASE-SOAP III due to some rather peculiar complaints from a large corporation) was written. CASE-SOAP-III solved the symbol-table difficulty by introducing a fairly new idea--the program point. Program points are addresses which the programmer needs to introduce in order to cause the machine to function properly but which have no mnemonic value to the functioning of the program. For example, one may frequently encounter the use of addresses such as NEXT and NXT, etc., which are included solely to link the program together but which have no significance at all in the logical structure of the problem. The program point was introduced just to solve the problem of naming these "random" addresses. The program point uses no room at all in the symbol table and is continuously redefinable by the simple expedient of using the same point over again. As an example consider the following segment of coding:

        RAU  R0002
        BMI         1F
        STL  P0005
        RSL  ONE6   1F
    1   ALO  PCON

In both cases of the use of "1F" in the instruction address position, the meaning is to use the address of program point "1" forward. Now look at the next example (which, by the way, does nothing):

    2   RAU  R0001  1F
    1   SUP  ONES
        NZE  1F     2B
    1   RAL  6      1B

Note that the use of "1F" still refers to the forward program point, while "1B" refers to the correspondingly numbered backward program point. The use of "6" in the data address of the last instruction refers to the address of that particular line of coding (i.e., the line "1 RAL 6 1B"). Using CASE-SOAP III to write our next compiler revealed that there was an economy of approximately 50 per cent in the number of symbols used in CASE-SOAP III over the equivalent program written in SOAP II. Since we are looking forward to acquiring some day some of the optional attachments for our 650, Mr. Knuth incorporated additional pseudo-operations into CASE-SOAP III which allow one to effectively write programs for the augmented machine.
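The forward and backward lookup rule for program points can be sketched in a few lines. This is a minimal illustration in Python, not CASE-SOAP III's actual mechanism: the function name `resolve_program_points`, the tuple layout, and the use of list indices in place of drum addresses are all assumptions made for the example.

```python
def resolve_program_points(lines):
    """Replace references such as '1F' or '2B' by the index of the target line.

    `lines` is a list of (point, operands) pairs: `point` is the program
    point defined on that line ('1', '2', ... or None) and `operands` is a
    list of address fields. This layout is hypothetical.
    """
    resolved = []
    for i, (_, operands) in enumerate(lines):
        out = []
        for ref in operands:
            is_point_ref = len(ref) >= 2 and ref[:-1].isdigit() and ref[-1] in "FB"
            if not is_point_ref:
                out.append(ref)          # ordinary symbol, left untouched
                continue
            point, direction = ref[:-1], ref[-1]
            if direction == "F":         # nearest definition after this line
                candidates = range(i + 1, len(lines))
            else:                        # nearest definition before this line
                candidates = range(i - 1, -1, -1)
            target = next((j for j in candidates if lines[j][0] == point), None)
            if target is None:
                raise ValueError(f"unresolved program point {ref} on line {i}")
            out.append(target)
        resolved.append(out)
    return resolved


# The second example from the text, one tuple per line of coding.
example = [
    ("2", ["R0001", "1F"]),
    ("1", ["ONES"]),
    (None, ["1F", "2B"]),
    ("1", ["6", "1B"]),
]
resolved = resolve_program_points(example)
print(resolved)  # [['R0001', 1], ['ONES'], [3, 0], ['6', 1]]
```

Because a point is looked up relative to the line that uses it, the same number can be defined many times without occupying a slot in a global symbol table, which is the saving the text describes.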

The new compiler referred to in the previous paragraph has been named RUNCIBLE. I shall now stick out my neck and state quite flatly that I do not believe that any tighter program will ever be devised for the 650. RUNCIBLE is again an algebraic problem-oriented compiler for the 650. The input language includes both IT and Compiler II-SOAP II as proper subset languages. The compiler is in a single deck of cards but exists logically in twenty-four different versions. The various versions are selected at read-in time by the storage-entry switch settings. The various possibilities are given from examining the following:

(Multipass OR One-pass) AND (650 OR 653) AND (Minimum Clocking OR Full Clocking OR No Clocking) AND (Normal Output OR Error Search) = (Operating Mode)

We now examine in some detail the options appearing in the foregoing equation. The multipass mode of operation is the one usually associated with 650 compilers (i.e., an intermediate language step is used). In the case at hand, the intermediate language is CASE-SOAP III. The one-pass mode turns out a machine-language program directly in a five instruction per card format. In the second option the term 650 refers to the basic 2,000-word 650 as being used as the object machine (i.e., the machine on which the compiled problem is to be run), the term 653 means that the compiled program is to be run on a machine equipped with floating point, index registers, and (at the programmer's option) core storage. It is also possible to cause the output machine language to be tailored to fit a machine equipped with core storage only and neither index registers nor floating point. The various "clocking" options mentioned refer to the several "tracing" modes that can be called upon to help debug the object program. It is possible to ascertain (while actually operating the object program) upon which statement in the pseudolanguage the machine is working. This one feature is proving to be one of the most helpful debugging aids that we have ever encountered.
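The count of twenty-four versions follows from multiplying the number of choices in each option group (2 x 2 x 3 x 2). A small Python sketch enumerates them; the dictionary keys are labels invented here for readability, and only the option names come from the text.

```python
from itertools import product

# Option groups as listed in the operating-mode equation.
OPTIONS = {
    "passes": ("Multipass", "One-pass"),
    "object machine": ("650", "653"),
    "clocking": ("Minimum Clocking", "Full Clocking", "No Clocking"),
    "output": ("Normal Output", "Error Search"),
}


def operating_modes():
    """Return every combination of one choice from each option group."""
    return list(product(*OPTIONS.values()))


modes = operating_modes()
print(len(modes))  # 24
```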

Extract: IT, Runcible

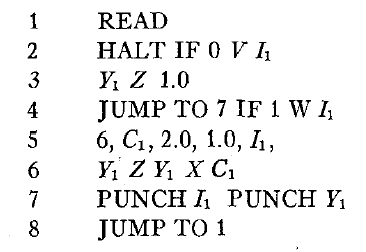

The input language to RUNCIBLE is an augmented version of Compiler II-SOAP II which includes names of subroutines and some other English-language options in the control words. A sample program which appears on page 20 of the manual follows:

The program reads in a non-negative value (and tests to see that it is non-negative) of I1 and then calculates I1 factorial and punches both the input number and the factorial value.
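The manual's listing is not reproduced in this text, so the following is only a Python sketch of the behaviour described, not RUNCIBLE or IT source. The function name is hypothetical, and returning a pair stands in for punching the two values.

```python
def factorial_program(i1):
    """Check that i1 is non-negative, then return (i1, i1 factorial)."""
    if i1 < 0:
        raise ValueError("I1 must be non-negative")
    result = 1
    for k in range(2, i1 + 1):
        result *= k
    return i1, result


print(factorial_program(5))  # (5, 120)
```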

We are now naturally looking forward to the day when we shall have to consider a 650 compiler for the machine with tapes and RAMAC unit.

In conclusion let me state that in our opinion the most important thing to worry about, as far as application of machines to scientific and engineering problems is concerned, is the time consumed from the statement of the problem in English to the presentation of the numerical answer to the problem proposer.

We see no reason why the time consumed on the computer should be the only time interval which comes under close scrutiny. It seems much more reasonable to conserve the time of the scientist and engineer rather than the machine; after all, one may obtain machines by the simple expedient of ordering them from the manufacturer, but a foolproof, economic method of obtaining competent personnel is as yet an almost unsolved problem.

Extract: MATH-MATIC vs. RUNCIBLE

M. O. LOCKS (Remington Rand Univac) :

Could you give me your interpretation of the difference between an assembly system and a compiler system? Also, could you explain how something which is as flexible as a compiler can be gotten onto a 2,000-word drum with no further erasable storage?

MR. WAY:

Our view of an assembly system is a facility whereby one writes a program in some language, usually similar to machine language, and gets out an essentially one-to-one translation of that language. In our case we write in a symbolic language, using pseudo-codes and symbolic addresses, and get out a machine-language code along with the actual locations (in the case of the Univac I, for instance, C-10 operation codes and addresses) when we assemble the tape which will run the problem. A compiler, on the other hand, is usually a one-to-many translator. You put in, usually, algebraic statements of your problem and get out a machine code to do the problem; the latter, of course, in appearance bears no resemblance whatsoever to the original problem statement.

Now your question about how we do this on a 2,000-word drum is of interest. A number of people said it could not be done, but Perlis proved that this was wrong. I notice that you are with Remington Rand Univac, so I see why the question comes up! If anybody ever writes a compiler that will fit inside the 1,000-word memory of the Univac I, I would certainly like to see it. The whole philosophy of compiling is dictated by the machine available to do it. In the case of the Univac I, with ten tape units, one can spin wheels and do nearly anything if he has time. As examples, witness Math-matic and Flow-matic, which do spin wheels and take time, but do compile. With the 650 one does not use this type of philosophy, mainly because he cannot. So, one uses a different method of generating the machine language. I realize I haven't told you in detail how to write a compiler; I didn't intend to. Does that answer your question?

MR. LOCKS:

Yes, that does basically answer the question, but I would like to inquire how much your 650 compiler can do as compared to a compiler which does spin wheels, Math-matic, for instance.

MR. WAY:

I am sure we can run rings around it with this 650 compiler, the basic reason being that the 650 storage of 2,000 words allows us to get more in the main storage of the machine at once. In our view the Math-matic system at present is rather handicapped by the input-output of the Univac I. The man who writes pseudo-code in Math-matic must know a lot about the Univac I, or he will not be able successfully to segment a problem. At least this has been our experience.

Extract: FORTRANSIT, IT, RUNCIBLE

R. B. WISE (Armour Research Foundation) :

You mentioned that you were going to bypass the Perlis language compiler for the Univac I and write something more ambitious. Will this allow for IT compiler input?

MR. WAY:

We haven't decided as yet, but we would like to allow it if possible.

MR. WISE:

I, for one, would want to register a small protest if you do not. The IT language is about the closest thing we have today to the universal language among computers, and it seems to me that it would be very much worthwhile to have something (even something which some people may classify as mediocre) which will allow communication among computers. This has been written for the 650; through the use of For Transit, you have access to some programs for the 704; it has been written for the 1103A; and, in somewhat modified form, it also fits in the Datatron 205. I think there is a big argument for allowing that type of input.

MR. WAY:

You opened a loophole when you said "in somewhat modified form." When I said before that our compiler would accept previous programs, I meant without any alteration.

in Proceedings of the 1958 Computer Applications Symposium, Armour Research Foundation, Illinois Institute of Technology, Chicago, Illinois

Bob Bemer states that this table (which appeared sporadically in CACM) was partly used as a space filler. The last version was enshrined in Sammet (1969) and the attribution there is normally misquoted.

in [ACM] CACM 2(05) May 1959

in E. M. Crabbe, S. Ramo, and D. E. Wooldridge (eds.) "Handbook of Automation, Computation, and Control," John Wiley & Sons, Inc., New York, 1959.

in [ACM] CACM 4(01) (Jan 1961)

Univac LARC is designed for large-scale business data processing as well as scientific computing. This includes any problems requiring large amounts of input/output and extremely fast computing, such as data retrieval, linear programming, language translation, atomic codes, equipment design, large-scale customer accounting and billing, etc.

University of California

Lawrence Radiation Laboratory

Located at Livermore, California, system is used for the solution of differential equations.


Outstanding features are ultra high computing speeds and the input-output control completely independent of computing. Due to the Univac LARC's unusual design features, it is possible to adapt any source of input/output to the Univac LARC. It combines the advantages of Solid State components, modular construction, overlapping operations, automatic error correction and a very fast and a very large memory system.


Outstanding features include a two computer system (arithmetic, input-output processor); decimal fixed or floating point with provisions for double precision arithmetic; single bit error detection of information in transmission and arithmetic operation; and balanced ratio of high speed auxiliary storage with core storage.

Unique system advantages include a two computer system, which allows versatility and flexibility for handling input-output equipment, and program interrupt on programmer contingency and machine error, which allows greater ease in programming.

in [ACM] CACM 4(01) (Jan 1961)

The third table defines a set of some twenty-odd subroutines which do the various tasks that occur in a translation. The fourth table defines the translation algorithm by coupling all legal symbol pairs to a set of 81 generators. These generators are defined in this table and consist of sequences of subroutines defined in the previous table. The paper concludes with two examples.

The method described in this article is basically the same as that used in the original IT compiler. The addition of the operator precedence levels makes possible a natural hierarchy of operations in the input language. In the IT language, this hierarchy was determined strictly by the order in which the operators were encountered in a statement as the statement was scanned from right to left.
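The difference between the two rules can be made concrete with a small Python sketch. It is not the IT translator's algorithm: it assumes that "the order in which the operators were encountered ... from right to left" means the rightmost operator is applied first, with no precedence between operators, and it handles only flat lists of numbers and the four binary operators. All names are hypothetical.

```python
import operator

OPS = {"+": operator.add, "-": operator.sub,
       "*": operator.mul, "/": operator.truediv}
PRECEDENCE = {"+": 1, "-": 1, "*": 2, "/": 2}


def eval_right_to_left(tokens):
    """Apply operators in the order met when scanning from the right."""
    value = tokens[-1]
    for i in range(len(tokens) - 2, 0, -2):
        value = OPS[tokens[i]](tokens[i - 1], value)
    return value


def eval_with_precedence(tokens):
    """Evaluate with conventional precedence levels, left-associative."""
    values, ops = [tokens[0]], []

    def reduce_top():
        op = ops.pop()
        right = values.pop()
        left = values.pop()
        values.append(OPS[op](left, right))

    for i in range(1, len(tokens), 2):
        op, operand = tokens[i], tokens[i + 1]
        # Finish any pending operator that binds at least as tightly.
        while ops and PRECEDENCE[ops[-1]] >= PRECEDENCE[op]:
            reduce_top()
        ops.append(op)
        values.append(operand)
    while ops:
        reduce_top()
    return values[0]


expr = [2, "*", 3, "+", 4]
print(eval_right_to_left(expr))    # 14, i.e. 2 * (3 + 4)
print(eval_with_precedence(expr))  # 10, i.e. (2 * 3) + 4
```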

in ACM Computing Reviews 3(01) March-April 1962

in Invited papers

The major competitor to the 650 among the early magnetic drum computers was the Datatron 205, which eventually became a Burroughs product. It featured a 4000 word magnetic drum storage plus a small 80 word fast access memory in high speed loops on the drum. Efficient programs had to make very frequent use of block transfer instructions, moving both data and programs to high speed storage. A number of interpretive and assembly systems were built to provide programmed floating point instructions and some measure of automatic use of the high speed loops. The eventual introduction of floating point hardware removed one of the principal advantages of most of these systems. The Datatron was the first commercial system to provide an index register and automatic relocation of subroutines, features provided by programming systems on other computers. For these reasons among others the use of machine code programming persisted through most of the productive lifetime of the Datatron 205.

One of the first Datatron computers was installed at Purdue University. One of the first algebraic compilers was designed for the Datatron by a group at Purdue headed by Dr. Al Perlis. This is another example of a compiler effort based on a firm belief that programming should be done in problem-oriented languages, even if the computer immediately available may not lend itself too well to the use of such languages. A big problem in the early Datatron systems was the complete lack of alphanumeric input. The computer would recognize pairs of numbers as representing characters for printing on the Flexowriter, but there was no way to produce the same pair of numbers by a single key stroke on any input preparation device. Until the much later development of new input-output devices, the input to the Purdue compiler was prepared by manually transcribing the source language into pairs of numbers.

When Dr. Perlis moved to Carnegie Tech the same compiler was written for the 650, and was named IT. IT made use of the alphanumeric card input of the 650, and translated from a simplified algebraic language into SOAP language as output. IT and languages derived from it became quite popular on the 650, and on other computers, and have had great influence on the later development of programming languages. A language Fortransit provided translation from a subset of Fortran into IT, whence a program would be translated into SOAP, and after two or more passes through SOAP it would finally emerge as a machine language program. The language would probably have been more popular if its translation were not such an involved and time-consuming process. Eventually other, more direct translators were built that avoided many of the intermediate passes.

in [AFIPS JCC 25] Proceedings of the 1964 Spring Joint Computer Conference SJCC 1964

[Lists 321 programming languages, indicating the computer manufacturers on whose machines each language is known to be used. Includes a register of the 74 computer companies; a ranking of the programming languages by the number of manufacturers on whose machines each is implemented; and a ranking of the manufacturers by the number of programming languages used.]

in [AFIPS JCC 25] Proceedings of the 1964 Spring Joint Computer Conference SJCC 1964

in [ACM] CACM 15(06) (June 1972)

in Computers & Automation 21(6B), 30 Aug 1972

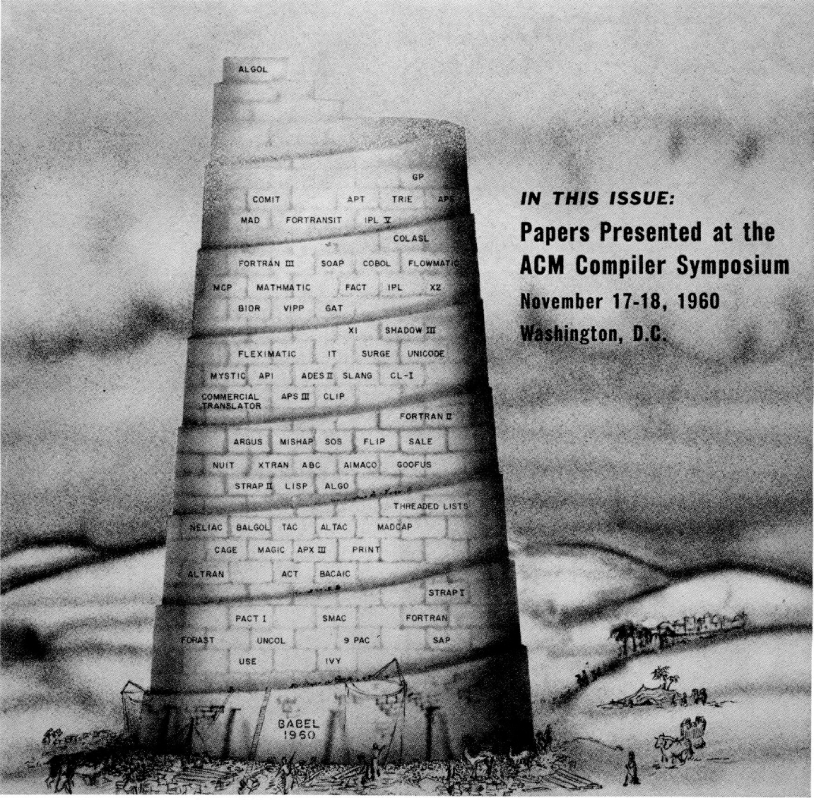

The exact number of all the programming languages still in use, and those which are no longer used, is unknown. Zemanek calls the abundance of programming languages and their many dialects a "language Babel". When a new programming language is developed, only its name is known at first and it takes a while before publications about it appear. For some languages, the only relevant literature stays inside the individual companies; some are reported on in papers and magazines; and only a few, such as ALGOL, BASIC, COBOL, FORTRAN, and PL/1, become known to a wider public through various text- and handbooks. The situation surrounding the application of these languages in many computer centers is a similar one.

There are differing opinions on the concept "programming languages". What is called a programming language by some may be termed a program, a processor, or a generator by others. Since there are no sharp borderlines in the field of programming languages, works were considered here which deal with machine languages, assemblers, autocoders, syntax and compilers, processors and generators, as well as with general higher programming languages.

The bibliography contains some 2,700 titles of books, magazines and essays for around 300 programming languages. However, as shown by the "Overview of Existing Programming Languages", there are more than 300 such languages. The "Overview" lists a total of 676 programming languages, but this is certainly incomplete. One author has already announced the "next 700 programming languages"; it is to be hoped the many users may be spared such a great variety for reasons of compatibility. The graphic representations (illustrations 1 & 2) show the development and proportion of the most widely-used programming languages, as measured by the number of publications listed here and by the number of computer manufacturers and software firms who have implemented the language in question. The illustrations show FORTRAN to be in the lead at the present time. PL/1 is advancing rapidly, although PL/1 compilers are not yet seen very often outside of IBM.

Some experts believe PL/1 will replace even the widely-used languages such as FORTRAN, COBOL, and ALGOL. If this does occur, it will surely take some time, as shown by the chronological diagram (illustration 2).

It would be desirable from the user's point of view to reduce this language confusion down to the most advantageous languages. Those languages still maintained should incorporate the special facets and advantages of the otherwise superfluous languages. Obviously such demands are not in the interests of computer production firms, especially when one considers that a FORTRAN program can be executed on nearly all third-generation computers.

The titles in this bibliography are organized alphabetically according to programming language, and within a language chronologically and again alphabetically within a given year. Preceding the first programming language in the alphabet, literature is listed on several languages, as are general papers on programming languages and on the theory of formal languages (AAA).

As far as possible, most of the titles are based on autopsy. However, the bibliographical description of some titles will not satisfy bibliography-documentation demands, since they are based on inaccurate information in various sources. Translation titles whose original titles could not be found through bibliographical research were not included. In view of the fact that many libraries do not have the quoted papers, all magazine essays should have been listed with the volume, the year, issue number and the complete number of pages (e.g. pp. 721-783), so that interlibrary loans could take place with fast reader service. Unfortunately, these data were not always found.

It is hoped that this bibliography will help the electronic data processing expert, and those who wish to select the appropriate programming language from the many available, to find a way through the language Babel.

We wish to offer special thanks to Mr. Klaus G. Saur and the staff of Verlag Dokumentation for their publishing work.

Graz / Austria, May, 1973

in Computers & Automation 21(6B), 30 Aug 1972

in Belzer, J.; A. G. Holzman, A. Kent (eds) Encyclopedia of Computer Science and Technology, Marcel Dekker, Inc., New York. 1979

The first generation of computers were programmed in machine language, typically by binary digits punched into a paper tape. Activity in higher-level programming was found on both the large-scale machines and the smaller commercial drum computers.

High-level programming languages have their roots in the mundane. A pressing problem for users of drum computers was placing the program and data on the drum in a way that minimized the waiting time for the computer to fetch them.

It did not take long to realize that the computer could perform the necessary calculations to minimize the so-called latency, and out of these routines grew the first rudimentary compilers and interpreters. Indeed, nearly every drum or delay line computer had at least one optimizing compiler. Some of the routines among the serial memory computers include SOAP for the IBM 650, IT for the Datatron, and Magic for the University of Michigan's MIDAC.
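The latency problem described above can be illustrated with a small model. The following is a minimal sketch, not SOAP's actual algorithm: it assumes a single drum track of `track_size` words that advances one word per time unit, a read cost of one word-time, and a fixed execution time per instruction. The function names and the numbers are hypothetical and chosen only for illustration.

```python
def run_time(addresses, exec_times, track_size):
    """Word-times needed to fetch and execute instructions stored at
    the given drum addresses (simplified single-track model)."""
    t = 0
    for addr, ex in zip(addresses, exec_times):
        wait = (addr - t) % track_size  # rotate until the word is under the head
        t += wait + 1 + ex              # read one word, then execute
    return t


def place_sequential(n):
    """Naive placement: instruction i goes to address i."""
    return list(range(n))


def place_optimized(exec_times, track_size):
    """Greedy placement: put each instruction in the first free word
    that will be under the head when the previous one finishes."""
    free = [True] * track_size
    addresses = []
    t = 0
    for ex in exec_times:
        for offset in range(track_size):
            addr = (t + offset) % track_size
            if free[addr]:
                break
        else:
            raise ValueError("track is full")
        free[addr] = False
        addresses.append(addr)
        t += offset + 1 + ex
    return addresses


# Hypothetical program: four instructions, each taking 3 word-times to execute.
exec_times = [3, 3, 3, 3]
track_size = 50  # assumed track length, for illustration only

sequential_cost = run_time(place_sequential(len(exec_times)), exec_times, track_size)
optimized_cost = run_time(place_optimized(exec_times, track_size), exec_times, track_size)
print(sequential_cost, optimized_cost)  # prints: 157 16
```

In this model the sequentially placed program misses its next word each time and waits almost a full revolution, while the greedy placement never waits at all. Real machines had several tracks, operand fetches, and instruction-dependent timings, all of which this sketch ignores.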

Parallel memory machines had less sophisticated and diverse compilers and interpreters. Among the exceptions were SPEEDCODE developed for the IBM 701, JOSS for the Johnniac, and a number of compilers and interpreters for the Whirlwind.

in The Computer Museum Report, Volume 7, Winter/1983/84

At Carnegie Tech (now CMU) the 650 arrived in July 1956. Earlier in the spring I had accepted the directorship of a new computation center at Carnegie that was to be its cocoon. Joseph W. Smith, a mathematics graduate student at Purdue, also came to fill out the technical staff. A secretary-keypuncher, Peg Lester, and a Tech math grad student, Harold Van Zoeren, completed the staff later that summer. The complete annual budget -- computer, personnel, and supplies -- was $50,000. During the tenure of the 650, the center budget never exceeded $85,000. Before the arrival of the computer, a few graduate students and junior faculty in engineering and science had been granted evening access to a 650 at Mellon National Bank. In support of their research, the 650, largely programmed using the Wolontis-Bell Labs three-address interpreter system, proved invaluable. The success of their efforts was an important source of support for the newly established Computation Center.

The 650 operated smoothly almost immediately. The machine was quite reliable. Even though only a one-shift maintenance contract was in force, by the start of fall classes the machine was being used on the second shift, as well as weekends. The talented user group, the stable machine, two superb software tools -- SOAP (Poley 1957) and Wolontis (see Technical Newsletter No. 11 in this issue) -- and an uninhibited open atmosphere contributed to make the center productive and, even more, an idea-charged focus on the campus for the burgeoning insights into the proper -- nay, inevitable -- role of the computer in education and research. Other than the usual financial constraints, the only limits were lack of time and assistance. The center was located in the basement of the Graduate School of Industrial Administration (GSIA). Its dean, Lee Bach, was an enthusiastic supporter of digital computation. Consequently, he was not alarmed at the explosion in the use of the center by his faculty and graduate students, and he acceded graciously to the pressure, when it came, to support the center in its requests to the administration for additional space and equipment.

From its beginning the center, its staff, and many of the users were engaged in research on programming as well as with programming. So many problems were waiting to be solved whose programs we lacked the resources to write: We were linguistically inhibited, so that our programs were too often exercises in stuttering fueled by frustration. Before coming to Carnegie, Smith and I had already begun work on an algebraic language translator at Purdue intended for use on the ElectroData Datatron computer, and we were determined to continue the work at Carnegie. The 650 proved to be an excellent machine on which to pursue this development. Indeed, the translator was completed on the 650 well before the group at Purdue finished theirs. The 650 turned out to have three advantages over the Datatron for this particular programming task: punched cards being superior to paper tape, simplicity in handling alphanumerics, and SOAP. The latter was an absolutely crucial tool. Any large programming task is dominated by the utility with which its parts can be automatically assembled, modified, and reassembled.

The translator, called IT for Internal Translator (see Perlis and Smith 1957), was completed shortly after Thanksgiving of 1956. In the galaxy of programming languages IT has become a star of lesser magnitude. IT's technical constructs are of historical interest only, but its existence had enormous consequences. Languages invite traffic, and use compels development. Thus IT became the root of a tree of language and system developments whose most important consequence was the opening of universities to programming research. The 650, already popular in universities, could be used the way industry and government were soon to use FORTRAN, and education could turn its attention to the subject of programming over and above applications to the worlds of Newton and Einstein. The nature of programming awaited our thoughts.

No other moment in my professional life has approached the dramatic intensity of our first IT compilation. The 650 accepted five cards (see Figure 1) and produced 42 cards of SOAP code (see Figure 2) evaluating a polynomial. The factor of 8 was a measure of magic, not the measure of a poor code generator. For me it was the latest in a sequence of amplifiers, the search for which exercises computation. The 650 implementation of IT had an elastic quality: it used 1998 of the 2000 words of 650 storage, no matter what new feature was added to the language. Later in 1957 IT-2 was made available and bypassed the need for SOAP completely. IT-2 translated the IT language directly into machine code. By the beginning of 1958 IT-3 became available. It was identical to IT-2 except that all floating-point arithmetic was performed in double precision. For its needs GSIA produced IT-2-S, which was IT-2 using scaled fixed-point arithmetic. The installation of the FORTRAN character set prompted the replacement of IT-2 by IT-2-A-S, which used both the FORTRAN character set and floating-point hardware. With IT-2-A-S the work on IT improvements came to an end at Carnegie.

While the IT developments were being carried out within our Computation Center, parallel efforts were under way on our machine in the development of list-processing languages under the direction of Allen Newell and Herbert Simon. The IPL family and the IT family have no linguistic structure in common, but they benefited from each other's existence through the continual interaction of the people, problems, and ideas within each system.

The use of Wolontis decreased. Soon almost all computation was in IT, and use expanded to three shifts. By the end of the summer of 1957, IT was in the hands of a number of other universities. Case and Michigan made their own improvements and GAT, developed by Michigan, became available in 1958 (see Arden and Graham 1958). It bypassed SOAP, producing machine code directly, and used arithmetic precedence. We were so impressed by GAT that we immediately embarked on its extension and produced GATE (GAT Extended) by spring of 1959. GATE was later transported to the Bendix G-20 when that computer replaced the 650 at Carnegie in 1961.

The increased use of the machine and the increased dependence on IT and its successors as a programming medium pressured the computing center into continual machine expansion. As soon as IBM provided enhancements to the 650 that would improve the use of our programming tools, our machine absorbed them: the complete FORTRAN character set, index registers, floating point, 60 core registers, masking and format commands and, most important, a RAMAC disk unit. All but the last introduced trivial modifications to our language processors. There was the usual grumbling from some users because the enhancements pressured (not required) them to modify both the form and logic of their programs. The users were becoming computer-bound by choice as well as need, though, and they had learned the first, and most important, lesson of computer literacy: In man-machine symbioses it is the human who must adjust, adapt, and learn as the computer evolves along its own peculiar gradients. Getting involved with a computer is like having a cannibal as a valet.

Most universities opted for magnetic tape as their secondary storage medium; Carnegie chose disks. Our concern with the improvement of the programming process had thrust upon us the question: How do programs come into being? Our answer: Pure reasoning and the artful combination of programs already available, understood, and poised for modification and execution. It is not enough to be able to write programs easily; one must be able to assemble new ones from old ones. Sooner or later everyone accepts this view -- first mechanized so admirably on the EDSAC almost 40 years ago (Wilkes, Wheeler, and Gill 1957). Looked at in hindsight, our concern with the process of assembly was an appreciation of the central role evolution plays in the man-computer dialogue: making things fit is a crucial part in making things work. It was obvious that retention and assembly of programs was more easily realized with disk than with tape. Like everything else associated with the 650, the RAMAC unit worked extremely well. Computation became completely dependent on it.

GATE, our extension of GAT, made heavy use of the disk (Perlis, Van Zoeren, and Evans 1959). Programs were getting larger, and a form of segmentation was needed. The assembly of machine-language programs and already compiled GATE programs into new ones was becoming a normal mode of use. GATE provided the vehicle for accomplishing these tasks. The construction of GATE was done by Van Zoeren and Smith. Not all programs originated in GATE; some were done in machine language. SOAP, always our model of a basic machine assembly language, had matured into SOAP II but had not developed into an adult assembler for a 650 with disks. IBM was about to stunt that species, so we designed and built TASS (Tech Assembly System). Smith and Arthur Evans wrote the code; Smith left Carnegie, and Evans completed TASS. A few months later he extended it to produce TASS II and followed it with SUPERTASS. TASS and its successors were superb assemblers and critical to our programming research (Perlis, Smith, and Evans 1959).

Essentially, any TASS routine could be assembled and appended to the GATE subroutine library. These routines were relocatable. GATE programs were fashioned from independently compiled segments connected by link commands whose executions loaded new segments from disk. Unfortunately, we never implemented local variables in GATE, although their value was appreciated and an implementation was sketched.

The TASS family represented our thoughts on the combinational issues of programming. In the TASS manual is the following list of desiderata for an assembler:

1. Programs should be constructed from the combination of units (called P routines in TASS) so that relationships between them are only those specified by the programmer.

2. A programmer should be able to combine freely P routines written elsewhere with his own.

3. Any program, once written, may become a P routine in the library.

4. When a P routine is used from the library, no detailed knowledge of its internal structure is required.

5. All of the features found in SOAP II should be available in P routines.

TASS supported an elaborate, but not rococo, mechanism for controlling circumstances when two symbols were (1) identical but required different addresses and (2) different but required identical addresses. Communication between P routines was possible both at assembly and at run time. Language extension through macrodefinitions was supported. SUPERTASS permitted nesting of macrocalls and P routine definition. Furthermore, SUPERTASS permitted interruptions of assembly by program execution and interruption of execution for the purpose of assembly.
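The two symbol situations mentioned above can be illustrated with a toy symbol table. This is a hypothetical sketch of the general idea, not a reconstruction of TASS: the class `Assembler` and its methods `define` and `equate` are invented names, and the source does not describe how TASS actually resolved these cases.

```python
class Assembler:
    """Toy assembler keeping one symbol table per routine."""

    def __init__(self):
        self.next_address = 0
        self.tables = {}  # routine name -> {symbol: address}

    def define(self, routine, symbol):
        """Return the address of `symbol` inside `routine`,
        allocating a fresh address on first use."""
        table = self.tables.setdefault(routine, {})
        if symbol not in table:
            table[symbol] = self.next_address
            self.next_address += 1
        return table[symbol]

    def equate(self, routine_a, symbol_a, routine_b, symbol_b):
        """Make `symbol_b` in `routine_b` share the address of
        `symbol_a` in `routine_a`."""
        address = self.define(routine_a, symbol_a)
        self.tables.setdefault(routine_b, {})[symbol_b] = address
        return address


asm = Assembler()

# Case 1: identical symbols in two routines get different addresses.
x_in_p1 = asm.define("P1", "X")
x_in_p2 = asm.define("P2", "X")

# Case 2: different symbols are explicitly tied to the same address.
shared = asm.equate("P1", "X", "P2", "INPUT")
```

Keeping a separate table per routine means a library routine can be combined with new code without its internal names clashing, which is the property the desiderata above ask for.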

Many of the modern ideas on modularization and structured programming were anticipated in TASS more as logical extensions to programming than as good practice. As so often happens in life cycles, both TASS and GATE attained stable maturity about the time the decision to replace the 650 by the Bendix G-20 was made.

Three other efforts to smooth the programming process developed as a result of the programming language work. IBM developed the FORTRANSIT system (see Hemmes in this issue) for translating FORTRAN programs into IT, thus providing a gradient for programs that would anticipate the one for computer acquisition. Van Zoeren (1959) developed a program GIF, under support of Gulf Oil Research, that went the other way so that programs written by their engineering department for their 650 could run on an available 704. Both programs were written in SOAP II. GATE translated programs in one pass, statement by statement. Van Zoeren quickly developed a processor called CORREGATE that enabled editing of compiled GATE programs by processing, compiling, and assembling into already compiled GATE programs only new statements. GATE was anticipating BASIC, although the interactive, time-sharing mode was far from our thoughts in those days.

As so often happened, when a new computer arrived, sufficient software didn't. The 650 was replaced in the spring of 1961 by a superior computer, the Bendix G-20, whose software was inferior to that in use on our 650. For a variety of reasons, it had been decided to port GATE to the new machine -- but no adequate assembly language existed in which to code GATE. TASS had become as complex as GATE and appeared to be an inappropriate vehicle to port to the new machine, particularly because of the enormous differences in instruction architecture between the two machines. Consequently, a new assembler, THAT (To Help Assemble Translators) was designed (Jensen 1961). It was a minimal assembler and never attained the sophistication of TASS -- another example of the nonmonotonicity of software and hardware development over time.

We found an important lesson in this first transition. In the design and construction of software systems, you learn more from a study of the consequences of success than from analysis of failure. The former uses evolution to expose necessary revolution; the latter too often invokes the minimal backtrack. But who gave serious attention to the issues of portability in those days?

The 650 was a small computer, and its software, while dense in function, was similarly small in code size. The porting of GATE to the G-20 was accomplished in less than one man-year by three superb programmers, Evans, Van Zoeren, and Jorn Jensen, a visitor from the Danish Regnecentralen. They shared an office, each being the vertex of a triangle, and cooperated in the coding venture: Jensen was defining and writing THAT, Evans was writing the lexical analyzer and parser, and Van Zoeren was doing the code generator. The three activities were intricately meshed much as co-routines with backtracking: new pseudooperations for THAT would be suggested, approved, and code restructured, requiring reorganization in some of the code already written. This procedure converged quite quickly but would not be recommended for doing an Ada compiler.

in Annals of the History of Computing, 08(1) January 1986 (IBM 650 Issue)

in Bemer, Bob "Computer History Vignettes" (Web published, retrieved 2000)

I should talk a little about the history of MAD, because MAD was one of our major activities at the Computing Center. It goes back to the 650, when we had the IT compiler from Al Perlis. Because of the hardware constraints - mainly the size of storage and the fact that there were no index registers on the machine - IT had to have some severe limitations on what could be expressed in the language. Arden and Graham decided to take advantage of index registers when we finally got them on the 650 hardware. We also began to understand compilers a little better, and they decided to generalize the language a little. In 1957, they wrote a compiler called GAT, the Generalized Algebraic Translator. Perlis took GAT and added some things, and called it GATE - GAT Extended.

GAT was not used very long at Michigan. It was okay, but there was another development in 1958. The ACM [Association for Computing Machinery] and a European group cooperatively announced a "standard" language called IAL, the International Algebraic Language, later changed to Algol, Algorithmic Language, specifically Algol 58. They published a description of the language, and noted: "Please everyone, implement this. Let's find out what's wrong with it. In two years we'll meet again and make corrections to the language," in the hope that everyone would use this one wonderful language universally instead of the several hundred already developing all over the world. The cover of an issue of the ACM Communications back then showed a Tower of Babel with all the different languages on it.

John Carr was very active in this international process. When he returned to Michigan from the 1958 meeting with the Europeans, he said:

"We've got to do an Algol 58 compiler. To help the process, let's find out what's wrong with the language. We know how to write language compilers, we've already worked with IT, and we've done GAT. Let's see if we can help."

So we decided to do an Algol 58 compiler. I worked with Arden and Graham; Carr was involved a little but left Michigan in 1959. There were some things wrong - foolish inclusions, some things very difficult to do - with the Algol 58 language specification. When you write a compiler, you have to make a number of decisions. By the time we designed the language that we thought would be worth doing and for which we could do a compiler, we couldn't call it Algol any more; it really was different. That's when we adopted the name MAD, for the Michigan Algorithm Decoder. We had some funny interaction with the Mad Magazine people, when we asked for permission to use the name MAD. In a very funny letter, they told us that they would take us to court and everything else, but ended the threat with a P.S. at the bottom - "Sure, go ahead." Unfortunately, that letter is lost.

So in 1959, we decided to write a compiler, and at first it was Arden and Graham who did this. I helped, and watched, but it was mainly their work because they'd worked on GAT together. At some point I told them I wanted to get more directly involved. Arden was doing the back end of the compiler; Graham was doing the front end. We needed someone to do the middle part that would make everything flow, and provide all the tables and so on. I said, "Fine. That's my part." So Graham did part 1, Galler did part 2, and Arden did part 3.

A few years later when Bob Graham left to go to the University of Massachusetts, I took over part 1. So I had parts 1 and 2, and Arden had 3, and we kept on that way for several years. We did the MAD compiler in 1959 and 1960, and I think it was 1960 when we went to that famous Share meeting and announced that we had a compiler that was in many ways better and faster than Fortran. People still tell me they remember my standing up at that meeting and saying at one of the Fortran discussions, "This is all unnecessary, what you're arguing about here. Come to our session where we talk about MAD, and you'll see why."

Q: Did they think that you were being very brash, because you were so young?

A: Of course. Who would challenge IBM? I remember one time, a little bit later, we had a visit from a man from IBM. He told us that they were very excited at IBM because they had discovered how to have the computer do some useful work during the 20-second rewind of the tape in the middle of the Fortran translation process on the IBM 704, and we smiled. He said, "Why are you smiling?" And we said, "That's sort of funny, because the whole MAD translation takes one second." And here he was trying to find something useful for the computer to do during the 20-second rewind in the middle of their whole Fortran processor.

In developing MAD, we were able to get the source listings for Fortran on the 704. Bob Graham studied those listings to see how they used the computer. The 704 computer, at that time, had 4,000 36-bit words of core storage and 8,000 words of drum storage. The way the IBM people overcame the small 4,000-word core storage was to store their tables on the drum. They did a lot of table look up on the drum, recognizing one word for each revolution of the drum. If that wasn't the word they wanted, then they'd wait until it came around, and they'd look at the next word.

Graham recognized this and said, "That's one of the main reasons they're slow, because there's a lot of table look-up stuff in any compiler. You look up the symbols, you look up the addresses, you look up the types of variables, and so on."

So we said, fine. The way to organize a compiler then is to use the drum, but to use it the way drums ought to be used. That is, we put data out there for temporary storage and bring it back only once, when we need it. So we developed all of our tables in core. When they overflowed, we stored them out on the drum. That is, part 1 did all of that. Part 2 brought the tables in, reworked and combined them, and put them back on the drum, and part 3 would call in each table when it needed it. We did no lookup on the drum, and we were able to do the entire translation in under a second.
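The difference between the two ways of using the drum can be made concrete with a rough cost model. This is only a sketch under stated assumptions: a drum probe costs one full revolution, a spilled table can be streamed back at `words_per_revolution` words per revolution, and lookups in core cost no drum time. The function names and all numbers are hypothetical, not measurements of the 704, FORTRAN, or MAD.

```python
def drum_probe_revolutions(table, key):
    """Revolutions spent searching a table that lives on the drum when
    each probe examines one word per revolution."""
    revolutions = 0
    for entry in table:
        revolutions += 1
        if entry == key:
            break
    return revolutions


def stream_in_revolutions(n_entries, words_per_revolution):
    """Revolutions needed to read a spilled table back into core once."""
    return -(-n_entries // words_per_revolution)  # ceiling division


# Hypothetical symbol table of 200 entries, each looked up once.
table = [f"S{i}" for i in range(200)]

# Strategy A: every lookup searches the drum directly.
probe_total = sum(drum_probe_revolutions(table, key) for key in table)

# Strategy B: stream the table into core once, then look up in memory.
words_per_revolution = 100  # assumed transfer rate, for illustration only
stream_total = stream_in_revolutions(len(table), words_per_revolution)
in_core = {name: index for index, name in enumerate(table)}
found = [in_core[key] for key in table]  # no drum access needed here

print(probe_total, stream_total)  # prints: 20100 2
```

Under these assumptions the probing strategy grows quadratically with table size, while streaming grows linearly, which is consistent with the reasoning in the passage even though the absolute figures are invented.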

It was because of that that MIT used MAD when they developed CTSS, the Compatible Time-Sharing System, and needed a fast compiler for student use. It was their in-core translator for many years.

MAD was successfully completed in 1961. Our campus used MAD until the middle of 1965, when we replaced the 7090 computer with the System/360. During the last four years of MAD's use, we found no additional bugs; it was a very good compiler. One important thing about MAD was that we had a number of language innovations, and notational innovations, some of which were picked up by the Fortran group to put into Fortran IV and its successors later on. They never really advertised the fact that they got ideas, and some important notation, from MAD, but they told me that privately.

We published a number of papers about MAD and its innovations. One important thing we had was a language definition facility. People now refer to it as an extensible language facility. It was useful and important, and it worked from 1961 on, but somehow we didn't appreciate how important it was, so we didn't really publish anything about it until about 1969. There's a lot of work in extensible languages now, and unfortunately, not a lot of people credit the work we did, partly because we delayed publishing for so long. While people knew about it and built on it, there was no paper they could cite.

in IEEE Annals of the History of Computing, 23(1) January 2001

Resources

- The IBM 650
