IPL-V(ID:265/ipl007)

Information Processing Language v5

IPL-V (initially on the Johnniac, then 1958 for IBM 650, 704, 7090, many others later on)

A list processing system whose commands are similar in spirit to those of an assembly language, but for manipulating lists. (Sammett 1966)

Proper release version of IPL. Sammet describes it as a kind of assembly, and indeed recounts how a CDC 3600 was modified to take IPL as assembly (which was used for playing draughts)

People: Hardware:

- JOHNNIAC Rand Corp

Structures:

Related languages

| IPL-IV | => | IPL-V | Evolution of | |

| IPL-V | => | Alphard | Influence | |

| IPL-V | => | AM1 | Influence | |

| IPL-V | => | BASEBALL | Influence | |

| IPL-V | => | BASEBALL | Written using | |

| IPL-V | => | CONNIVER | Incorporated some features of | |

| IPL-V | => | DYSTAL | Influence | |

| IPL-V | => | EOL | Influence | |

| IPL-V | => | FLPL | Influence | |

| IPL-V | => | GPS | Written using | |

| IPL-V | => | GPS | Target language for | |

| IPL-V | => | IPLT-1 | Implementation | |

| IPL-V | => | IPLT-1 | Port | |

| IPL-V | => | IPL-TS | Extension of | |

| IPL-V | => | IPL-VC | Implementation | |

| IPL-V | => | IPL-VI | Evolution of | |

| IPL-V | => | LIPL | Extension of | |

| IPL-V | => | LOLITA | Strong Influence | |

| IPL-V | => | Mem-theory | Influence | |

| IPL-V | => | Nevins | Extension of | |

| IPL-V | => | QUIKSCRIPT | Based on | |

| IPL-V | => | SAD SAM | Written using | |

| IPL-V | => | SDSC | Target language for | |

| IPL-V | => | SLIP | Influence | |

| IPL-V | => | TIPL | Evolution of |

References:

in [JCC 13] Proceedings of the Western Joint Computer Conference, May, 1958 view details

in [ACM] CACM 2(02) February 1959 view details

in Behavioral Science 5(1) January 1960 view details

GREEN, BERT F., JR. The Newell-Shaw-Simon p Cramming language. Behavioral Sci. 5, 1 (January 19 94-98.

This article is an informal description of the IPL-V l guage of Newell-Shaw and Simon. The use of list struct is described in terms of a hypothetical program to p bridge. The main processes of IPL-V are mentioned, the use of recursive subroutines is described. Some apLIcations are listed.

John McCarthy, Cambridge, Mass.

in ACM Computing Reviews 2(01) January-February 1961 view details

in [ACM] CACM 3(04) April 1960 view details

in [JCC 19] Proceedings of the Western Joint Computer Conference, May 1961 view details

in [JCC 19] Proceedings of the Western Joint Computer Conference, May 1961 view details

in [JCC 19] Proceedings of the Western Joint Computer Conference, May 1961 view details

in [ACM] CACM 4(01) (Jan 1961) view details

in [ACM] CACM 6(03) (Mar 1963) view details

The introduction describes some of the problems which are said to need the techniques described here, e.g., theorem proving, chess playing etc. The first actual example which the author uses to illustrate some of the detailed elementary operations is the calculation of Ackermann's function

A(m, n) = A(m -- 1, A(m, n -- 1)), 4(m, O)

= A(rm -- 1,1), .4(0, n) = n + 1 (a recursive function which is not primitive recursive). The manual then goes on to describe list structures, i.e., lists of lists and considers a program to evaluate some of the properties of a contract bridge hand (a list of four suits) such as the point count and the number of quick tricks. After this comes a description of some packaged operations for scanning lists and list structures and presenting the entries (or rather their addresses) in turn. Part one concludes with the description of a program for a psychological learning experiment. An "organism" is set up which accepts a range of stimuli and reacts to them by producing various responses. The organism modifies itself in an attempt to receive the "pleasant" stimuli and avoid the "unpleasant." The "experimenter" who controls the stimuli endeavors to "train" the organism to exhibit a certain pattern of responses. While not bearing much resemblance to human behavior, the problem illustrates the way to organize such stimulation programs.

It will be interesting to see how large special-purpose programming systems such as this are influenced in the next few years by the development of ALGOL, which is almost certain to become increasingly used for all kinds of purposes. In the meantime, however, this very complete programming system should have a useful life.

in ACM Computing Reviews 3(03) May-June 1962 view details

in ACM Computing Reviews 3(03) May-June 1962 view details

The manual is divided into two self-contained sections, corresponding to the two original papers; consequently, the bibliography occurs at the end of the first section and the index refers to the second section only. Section I, entitled "The Elements of IPL Programming," introduces push-down lists, list structures and recursive routines as they occur in the framework of the IPL system. Many examples help to illustrate these techniques and, as well as being carefully explained, include much of the reasoning followed in writing them. The section ends with the complete formulation, programming, and coding of a model that attempts to simulate a learning organism, and although this is an interesting example of the application of IPL in this field, one feels that the 50 pages could have been put to better use.

Section II is the reference manual for IPL-V. The system is based almost entirely on list structures, a routine being a list structure of basic IPL instructions. A program is written as a hierarchy of routines, a push-down list being used for communication between them. There are eight types of basic instruction supplemented by a large group of built-in routines ranging from simple list operations to auxiliary-store transfers. Specifications of nearly all these basic processes are given in detail. Error-diagnosis facilities are comprehensive and should be very useful in practice.

We are told that IPL-V interpreters are available on several computers, but these are not mentioned by name. There are several references to sections on "machine systems" dealing with the hardware arrangements, but no such sections appear in this document and no indication is given as to where these might be found. This is disappointing since, for example, it would be useful to have some idea of the speed of IPL instructions in relation to a particular machine.

In general, this is an interesting and well produced document which is successful in its aim at introducing the principles of list processing as well as being a complete programming manual for IPL-V.

in The Computer Bulletin June 1962 view details

in ACM Computing Reviews 3(04) July-August 1962 view details

in ACM Computing Reviews 3(04) July-August 1962 view details

in ACM Computing Reviews 3(04) July-August 1962 view details

in ACM Computing Reviews 3(04) July-August 1962 view details

in ACM Computing Reviews 3(04) July-August 1962 view details

in Feigenbaum, E. and Feldman, J. (eds.) "Computers and Thought" MIT Press, Cambridge, MA, 1963 view details

in [ACM] CACM 6(03) (Mar 1963) view details

This report describes several list structures and some important aspects of their implementation. It describes and compares FLPL, IPL-V, LISP and SLIP. It also gives examples of the use of slip in manipulating power series.

in [ACM] CACM 6(03) (Mar 1963) view details

in Proceedings of the 19th ACM national conference January 1964 view details

Newell, Allen; Tonge, Fred M.; Feigenbaum, Edward A.; Green, Bert F., JR.; AND Meale, George H. "Information Processing Language-V manual" (2 ed.) Prentice-Hall, Englewood Cliffs, N. J., 1964, 267 pp. $7.95.

The second edition of this manual differs from the first considerably in its introductory material but only slightly in its formal definition of the IPL-V language. That is, Part I (The Elements of IPL Programming) has been largely rewritten, but Part II (Programmers' Reference Manual) has been modified only through minor additions and corrections. The first edition of the manual was reviewed by R. A. Brooker [ CR, 3, 3 (May-June 1962), Rev. 1931 ] . The IPL-V language is included in a recently published survey of four list processing languages [ Dobrow and Bertram, "A comparison of list-proceasing computer languages," Comm. ACM 7, 4 (April 1964), 231-240 ] .

IPL-V may be regarded as the assembly language for an imaginary list-processing machine. The language is accepted by an interpreter which runs on an existing machine and causes that machine to simulate a list-processing machine. The IPL-V language is widely implemented; the manual cites implementations on twelve distinct computers. The instruction repertoire is extensive and general, and is appropriate for a wide variety of applications. The IPL-user has access to excellent introductory material (including the manual under review) and a considerable library of operational programs.

However, IPL-V was one of the first list-processing languages to be implemented and standardized, and the language is less convenient to use than more recent languages. Specifically, it has the characteristics of a "regional" assembly language for a small computer with limited input/output format. In most cases, an identifier must consist of a letter followed by one to four digits, and the use of mnemonics is virtually impossible; for example, the IPL-V instruction for "halt and proceed" is "J7". In addition to these notational inconveniences, IPL-V has a complexity of logical design which compares unfavorably with the design of more recent list-processing systems. The authors comment on this matter: "A language is to be used, and it must remain constant so that projects undertaken in its terms can cumulate.''

Those who are actively involved in writing or studying IPL-V programs will welcome this new edition of the manual for IPL-V. Those who are undertaking new and independent projects in list processing should consider the advantages of using a more recent list-processing language, SLIP, which is a descendant of IPL-V [Weizenbaum, "Symmetric list processor," Comm. ACM 6, 9 (Sept. 1963), 524-544, CR 5, 1 (Jan.-Feb. 1964), Rev. 5023 ] .

Carlos Christensen Boston. MASS

in ACM Computing Reviews 5(05) September-October 1964 view details

in ACM Computing Reviews 5(05) September-October 1964 view details

As others have done, the author separates the functions of pedagogy and definition. With respect to the primer, he admits that the "most serious deficiency is undoubtedly the lack of a set of problems of graded difficulty." A very good point made is that a reference manual should include a glossary of technical terms in addition to the index.

A number of ideas are tossed out for digestion by the reader, ranging from existing implementations to future possibilities: The keeping of manuals on tape where facilities exist for updating; a "teach" program to determine whether the results of programming exercises are correct. Languages should be designed specifically to ease the task of documentation. Languages are evolving systems and should not be stifled by standardization or official control; they should be defined in such a way as to allow easy self-manipulation.

in ACM Computing Reviews 5(01) January-February 1964 view details

Time-Sharing Applications

To illustrate the "general purpose" nature of the Time-Sharing System, we focus on two interesting programming systems currently operating on TSS as service systems for the user. The first, IPL-TS, is a complete list-processing system for the Information Processing Language V developed by Newell, Simon, and Shaw. The second, TINT, is an on-line Teletype INTerpreter for the JOVIAL algebraic language developed by SDC. When the Time-Sharing System is equipped with these two programming-language systems, the user is immediately provided with a familiar programming system to ease his transition to programming for time-sharing, and allowed to use, with little or no modification, any code he may have previously written in IPL-V or JOVIAL for other machine systems. Extract: On-Line Program Composition

On-Line Program Composition

Both IPL-TS and TINT allow the user to write symbolic programs on-line and to execute them immediately, by themselves or in conjunction with previously coded routines. With IPL-TS, the programmer uses the special system routine, Linear IPL (LIPL),* which accepts IPL code on-line in a symbolic, linear, parenthesis format convenient for keyboard input. Figure 6 presents an example of LIPL being used to compose and execute Ackermann's function on-line. TINT, which was developed specifically for on-line program composition, accepts JOVIAL statements on-line in the same linear format used for compiler input.

The ability to program on-line frees the programmer from having to concern himself with all the formalities of punched card accounting. With experience and facility, he programs online directly from his thoughts or, for more difficult problems, directly from a flow diagram, circumventing such time-consuming tasks as program-coding-sheet preparation, key punching, card sorting, editing, and prestoring. The time saved by the programmer can be applied to other coding tasks or to quality review of his current code.

No programmer, of course, could compose a large program at one sitting with either of these systems, but this is a human, not a system, limitation; LIPL has no upper bound, and TINT'S 600-statement limit effectively exceeds a human's short-term comprehension. Optimally, these systems should be used for programs that can be written and debugged m one or two sittings (usually under 100 IPL instructions or 50 JOVIAL statements).

There are three immediate consequences of this practical size limitation. First, many non-trivial, one-shot programs, such as for statistical computations, can be coded, debugged, and executed at one sitting. Often a programmer himself will refrain from writing such programs, knowing the time and effort involved. Figure 7 shows the Teletype communication resulting from an exercise using TINT as a "desk calculator" for computing the standard deviation of a set of research data. Second, large programs take on a modular structure; that is, large programs become a concatenation of numerous smaller programs and subroutines. Third, programmers begin to amass personal libraries of short utility subroutines, which they use to build larger programs. Clearly, consequences two and three would not exist, except in trivial cases, if it were not possible to work one day with code developed en prior days. Both IPL-TS and TINT provide this capability.

TINT may accept symbolic input from magnetic tape, and can integrate this input with on-line Teletype input when so directed by the user. Thus the results of one day's coding can be filed on tape for later use. An alternative, if the symbolic JOVIAL statements have been executed and debugged, is to compile the code and save the binary output on a binary library tape, thus, again, integrating previous work with current code; however, the binary library approach has greatest value when used for utility routines.

IPL-V is essentially a language of subroutines (composed from an inventory of some 200 system subroutines called J routines or primitives). Programs written in IPL-V are usually modular hierarchies of subroutines. Therefore, on-line composition of IPL-V programs is a natural extension of the language, and many alternatives for continuity of programming across many days of operation already exist within the language. For example, the programmer may "fire" a J166 (Save For Restart) at any time and continue from that point at a later date, or he may load a program from symbolic tape using the loader or J165 (Load Routines and Data) and continue using LIPL on-line.

Therefore, the attributes of IPL-TS and TINT, when combined with a programmer's imagination and skill during on-line program composition, reduce significantly the tedious, uncreative tasks of code preparation and increase productivity. This point is particularly apparent to all programmers who have been required to debug code that they wrote several days earlier, and that has grown "stale" while it was being keypunched, compiled, and executed. Instead of expending additional time and energy becoming reacquainted with his code before he can correct his errors, the programmer can, by composing the code on-line and executing it immediately, debug while the code is still fresh in his mind.

* LIPL was designed and coded by R. Dupchak while cons'iltant to the RAND Corporation, Santa Monica

Extract: On-Line Program Debugging

On-Line Program Debugging

The particular ability of IPL-TS and TINT to detect, locate, and correct program errors online is perhaps their greatest asset, since it leads to substantial decrease in program turnaround time. In effect, IPL-TS and TINT increase the programmer's debugging efficiency by allowing him to check out more code per day than would be possible with non-time-sharing operation.

Extract: Error Detection

Error Detection is the first step in debugging any program. Errors may be classed as either grammatical errors in language or format, or logical errors in code execution. The generator screens out most grammatical errors for TINT, and either the loader or LIPL performs the same task for IPL-TS. Logical-error detection, however, is a more difficult task, even with IPL-TS and TINT. The advantage of these systems for error detection is their responsiveness to the programmer. He may choose to develop on-line, special-purpose debugging tools to suit his individual preference, or he may use those debugging tools provided by the system. For example, IPL-TS currently provides an error trap for some twenty illegal IPL operations resulting from faulty program logic; when such errors occur, IPL-TS attempts to provide the programmer with as much information as possible to help him correct his error. First, an error message is sent to the programmer to inform him of the error's occurrence and of its nature. Second, a special system routine, Trace Dump (discussed below), provides him with a "back trace" of the code leading up to the error to help him locate the cause of the error. Finally, the system pauses at a breakpoint, to allow him time to correct the error. However, all three steps may be altered, since the IPL-TS error trap mechanism is designed with a "thin skin" to allow the programmer to substitute his own trapping action in lieu of that provided by the system.

With TINT, logical-error detection is left more to the imagination of the programmer. TINT allows the programmer to insert a PRINT statement, with numerous item names as arguments, at any point in his program. When it encounters this statement during program execution, TINT responds by printing on the user's Teletype the current values of all specified items. In this fashion, the programmer may take item snapshots at critical points in his program The power of the PRINT statement for logical-error detection is amplified when combined with the TINT READ statement The READ statement is the converse of the PRINT statement When TINT encounters this statement during program execution, the programmer must insert the current values of prespecified items By judicious use of the READ and PRINT statements, the programmer can repeatedly exercise a program with different initial conditions and review his results with input/output transfer-function analysis

Thus, on-line user program communication increases a programmer s debugging efficiency by increasing his ability to detect program errors It is typical for a programmer, checking out new code with IPL-TS or TINT, to detect and correct half a dozen program errors in the first hour of operation, such error correction might easily have required a week with conventional programming systems Extract: Error location

Error location

The pinpointing of the erroneous code is often considered no different from error detection This may be true for grammatical errors but is far from true for logical errors The knowledge that an error exists does not, in and of itself, narrow the search for the error's location The user of IPL TS, there fore, is provided with a description of the system detected error and the aforementioned back trace of the code leading up to the error Back tracing by the system is performed in the debugging mode by the special system routine Trace Dump, which prints a full trace of up to the last 100 interpretation cycles, in reverse order (last cycle first) The number of previous cycles printed is controllable on-line Experience shows that the location of an error can usually be found within the first five cycles printed and that it is rarely necessary to go deeper than ten cycles back For logical errors not detected by the system the programmer has available all the standard IPL-V Monitor Point functions, m addition IPL TS extends these functions to include breakpoint operation as a programmer initiated option The option may be invoked at load time or during program execution In addition, the IPL primitive J7 (Halt) has been implemented as an alternative breakpoint mechanism When a breakpoint is encountered by IPL-TS, the programmer is notified and requested to enter the name of any regionally denned routine, which is then executed immediately Upon completion of the routine, the programmer is again queried He may continue to fire routines at the breakpoint or he may exit back to the prior program, the context of which has remained undisturbed.

Breakpoints are not a panacea for locating erroneous code, however, they do provide additional control flexibility at critical points in a program In fact, the user of TINT must lelj almost exclusively on breakpoint logic for locating erroneous code the aforementioned READ and PRINT statements are in effect breakpoint statements For elusive errors these statements may be used to bracket groups of JOVIAL statements, and in extreme cases, individual JOVIAL statements TINT also provides a STOP statement, which is also a breakpoint statement When the interpreter encounters the STOP statement, the program is suspended until directed by the user to continue The user may also reexecute his program from a STOP breakpoint, or he may enter new code or edit prior code before continuing TINT'S STOP statement is analogous to the IPL-TS J7 (Halt) primitive.

Extract: Error correction

Error correction in symbolic code with either IPL TS or TINT is essentially on line program composition LIPL allows the IPL programmer to erase, extend, or modify selectively any user routine existing in the system TINT, similarly, allows the programmer to edit any JOVIAL code written, on a statement-by-statement basis

Here, again, the programmer's control over his program is effectively increased He can correct code in several minutes instead of the several days typical with most computer installations

in [AFIPS JCC 25] Proceedings of the 1964 Spring Joint Computer Conference SJCC 1964 view details

The size of this book needs to be somewhat discounted because most of it is set in pica type, at four lines per vertical inch, and the pages are provided with generous margins. It is well laid out, clearly written, and has a bibliography of 62 pertinent references. It consists of two parts; namely, a learning manual and a reference manual. The learning manual does not require prior knowledge of any form of computer programming. It contains a section, "Organizing Complete Tasks," which would be of value to persons learning other forms of programming. The learning manual contains exercises and solutions for some of them and is suitable for selfteaching. There is an index, which applies to the reference manual only.

For those already acquainted with IPL-V, the differences between the first and second editions are :

1. Conventions and definitions in the initial manual have been changed at only six minor points, all associated with loading and monitoring. These may impose minor modifications to existing programs. About 30 basic processes have been added. All the changes and extensions are listed in four pages of the manual and are also incorporated in their appropriate places in Part Two, the Reference Manual.

2. Part One, the section for those learning the language, has been largely rewritten. Exercises, with sample solutions for some of them, have been added.

For those meeting IPL-V for the first time, if you have wondered about such expressions as list-processing, list structures, Newell-Shaw-Simon, push-down, pop-up, and recursion, this is a good source of relevant information. IPL-V, which was developed mainly by the RAND Corporation, is designed for non-arithmetic processing of symbolic information, especially information which can be organized in the form of lists. A list element consists of a data portion and the location of the next list element. Programs for computers having a "plus-one" instruction format, such as IBM 650, are lists of this type. The introduction gives the developments in programming which have led to IPL, explains the basic idea of lists, and gives some areas in which IPL has been used. Theorem proving, game playing, natural language processing, and artificial intelligence are examples thereof.

IPL-V is one of several list-processing languages now in use. The "V" indicates the fifth version in its series. IPL-V is a pseudo-code; that is, it is similar to a machine code, and it is processed by an interpretive program, which can be considered as an IPL-V computer. IPL-V is a lower level language than a symbolic assembly is, in some respects. For example, IPL symbolic names consist of only one alphabetic character followed by up to four numbers, allowing very slight mnemonic possibilities.

The power of the language lies in the large number of basic processes or subroutines which are available, processes which are useful in list-processing and in organizing and debugging a program. There are provisions for tracing, dumping, saving for restart, and dividing a program into more than one memory load.

Thirteen IPL-V implementations or 12 different computers are listed as available at the time of publication. Input-output conventions are peculiar to the different machine systems and are not covered in the IPL-V manual. They would have to be looked up before actual running of an IPL program. System questions, such ' as how to add new processes, are also peculiar to the particular implementation and are not covered in this manual.

in [AFIPS JCC 25] Proceedings of the 1964 Spring Joint Computer Conference SJCC 1964 view details

CHAPIN, NED.

An implementation of IPL-V on a small computer.

Proc. ACM 19th Natl. Conf., Phila., Pa., Aug. 1964, (6 PP.) ACM, New York, $5.

This paper describes the implementation of a sub-set of the routines of IPL-V for a small computer (IBM-1620 with 20,000 positions of core storage). The paper includes the objectives established prior to implementation, the major decisions made during implementation, and a comparison of the completed implementation with other IPL-V implementations.

A history of the implementation of a specific language for a specific computer, such as in this paper, is necessarily of restricted interest and temporary value. Within these limita

tions, the paper is useful as a well-organized and easily-read implementation history.

>Carlos Christensen, Boston, Mass.

in ACM Computing Reviews 6(04) July-August 1965 view details

in ACM Computing Reviews 6(04) July-August 1965 view details

in ACM Computing Reviews 6(04) July-August 1965 view details

Purpose of QUIKSCRIPT

QUIKSCRIPT provides, within the 20-GATE algebraic language in use at Carnegie Institute of Technology, a simulation capability of the sort offered by SIMSCRIPT and also a rudimentary list-processing capability) One reason for desiring this capability was a wish to experiment with an easily modifiable Si~iSCRirT-like language, to test alternative timing features, list-processing notions, and other aspects of simulation-oriented systems. A second reason was that it appeared easier to program a particular large-scale simulation, then under consideration, by first creating QUIKSCRIPT rather than by proceeding directly. This contention appears to have been borne out.

To provide this capability quickly and inexpensively, it was decided to program the entire system in 20-GATE, SO that QUIKSCRIPT would be a set of GATE subroutines. This design decision, which eliminates the use of a preprocessor or any loader other than the GATE compiler, leads to most of the "rough edges" recognizable in the system described here and also to the exclusion of some useful features of SIMSCRIPT. (For those SIMSCRIPT features included, a general attempt was made to keep them as close to SIMSCRIPT as possible, given the above ground rule.) The system and the method of implementation were specified in less than two evenings. Programming and initial debugging took less than a man-month.

in [ACM] CACM 8(06) June 1965 view details

in ACM Computing Reviews 7(03) May-June 1966 view details

in Cybernetics. New York. 2. 1966, March-April view details

in Cybernetics. New York. 2. 1966, March-April view details

in [ACM] JACM 13(03) July 1966 view details

in [ACM] JACM 13(03) July 1966 view details

1. Linguistic basis for language computation (string analysis). 2. Procedure. 3. Implementations in IPLV & FAP (IBM 7094), organization of grammars as BNF definitions and "restrictions" (= constraints on parse trees), treatment of conjunctions.

in Advances in Computers, Vol. 8 FL Alt and M Rubinoff (Eds.), Academic Press, New York, 1967 view details

in Computers & Automation 16(6) June 1967 view details

[ Book ] FOSTER, J. M.

List processing.

American Elsevier Publ., New York, 1967, 54 pp. $4.50.

This tiny (54 pages) monograph on list processing is a beautiful introduction to techniques which have been used for some time in many applications. Among the topics included are: 1) the representations of lists (both in the computer and externally); and 2) operations on lists, garbage collection, and typical list languages. One short chapter is also devoted to an example of list processing used in a program to perform syntactic analysis of language. All the examples in the book are programmed in an extended A~Go~ with added list processing functions, and are carefully chosen to illustrate important points about list processing. The book's principal weakness is its lack of depth, which is not really to be expected in such a brief monograph. The summary of the list processing languages IPL-V, LISP, FLIP, FLPL, and COMIT are too brief to give any real feeling for these languages to people who have not seen them before. But these are minor quibbles with a very fine book which will broaden the outlook of people who think of computers only as tools to manipulate numbers, and will allow them to begin to appreciate what can be done with list processing techniques for symbol manipula

tion. D. a. Bobrow, Cambridge, Mass.

in ACM Computing Reviews 9(01) January 1968 view details

History And Bibliography

Linear lists and rectangular arrays of information kept in consecutive memory locations were widely used from the earliest days of stored-program computers, and the earliest treatises on programming gave the basic algorithms for traversing these structures. [ For example, see J. von Neumann, Collected Works, vol. V, 113-116 (written 1947); M. V. Wilkes, D. J. Wheeler, S. Gill, The Preparation of Programs for an Electronic Digital Computer (Reading, Mass.: Addison-Wesley, 1951), subroutine V-1. ] Before the days of index registers, operations on sequential linear lists were done by performing arithmetic on the machine language instructions themselves, and this type of operation was one of the early motivations for having a computer whose programs share memory space with the data they manipulate.

Techniques which permit variable-length linear lists to share sequential locations, in such a way that they shift back and forth when necessary, as described in Section 2.2.2, were apparently a much later invention. J. Dunlap of Digitek Corporation developed these techniques in 1963 in connection with the design of a series of compiler programs; about the same time the idea independently appeared in the design of a COBOL compiler at IBM Corporation, and a collection of related subroutines called CITRUS was subsequently used at, various installations. The techniques remained unpublished until after they had been independently developed by Jan Garwick of Norway; see BIT 4 (1964), 137-140.

The idea of having linear lists in non-sequential locations seems to have originated in connection with the design of computers with drum memories; the first such computer was developed by General Electric Corporation during 1946-1948, and the IBM 650 was the most notable later machine of this type. After executing the instruction in location n, such a computer is usually not ready to get its next instruction from location n + 1 because the drum has already rotated past this point. Depending on the instruction being performed, the most favorable position for the next instruction might be n + 7 or n + 18, etc., and the machine can operate up to six or seven times faster if its instructions are optimally located rather than consecutive. [ For a discussion of the interesting problems concerning best placement of these instructions, see the author's article in JACM 8 (1961), 119-150. ] Therefore the machine design provides an extra address field in each machine language instruction, to serve as a link to the next instruction. Such a machine is called a "one-plus-one-address computer," as distinguished from MIX which is a one-address computer." The design of one-plus-one-address computers is apparently the first appearance of the linked-list idea within computer programs, although the dynamic insertion and deletion operations which we have used so frequently in this chapter were still unknown.

Linked memory techniques were really born when A. Newell, C. Shaw, and H. Simon began their investigations of heuristic problem-solving by machine. As an aid to writing programs that searched for proofs in mathematical logic, they designed the first " listprocessing" language IPL-II in the spring of 1956. (IPL stands for Information Processing Language.) This was a system which made use of links and included important concepts like the list of available space, but the concept of stacks was not yet well developed; IPL-III was designed a year later, and it included "push down" and "pop up" for stacks as important basic operations. (For references to IPL-II see "The Logic Theory Machine," IRE Transactions on Information Theory IT-2 (Sept. 1956), 61-70; see also Proc. Western Joint Comp. Conf. (Feb. 1957), 218-240. Material on IPL- III first appeared in course notes given at the University of Michigan in the summer of 1957.)

The work of Newell, Shaw, and Simon inspired many other people to use linked memory (which was often at the time referred to as NSS memory), mostly for problems dealing with simulation of human thought processes. Gradually, the techniques became recognized as basic computer programming tools; the first article describing the usefulness of linked memory for "down-to-earth" problems was published by J. W. Carr, III, in CACM 2 (Feb. 1959), 4-6. Carr pointed out in this article that linked lists can readily be manipulated in ordinary programming languages, without requiring sophisticated sub-routines or interpretive systems. See also G. A. Blaauw, IBM J. Res. and Dev. 3 (1959), 288-301.

At first, one-word nodes were used for linked tables, but about 1959 the usefulness of several consecutive words per node and "multilinked" lists was gradually being discovered by several different groups of people. The first article dealing specifically with this idea was published by D. T. Ross, CACM 4 (1961), 147-150; at that time he used the term "plex" for what has been called a "node" in this chapter, but he subsequently has used the word "plex" in a different sense to denote a class of nodes combined with associated algorithms for their traversal.

Notations for referring to fields within nodes are generally of two kinds: the name of the field either precedes or follows the pointer designation. Thus, while we have written "INFO(P)" in this chapter, some other authors write, for example, "P.INFO ". At the time this chapter was prepared, the two notations seemed to be equally prominent. The notation adopted here has been used in the author's lectures since 1962 and it has also been independently devised by numerous other people, primarily because it is natural to use mathematical functional notation to describe attributes of a node. (For example, see the article by N. Wirth and C. A. R. Hoare, CACM 9 (1966), 413-432.) Note that "INFO(P)" is pronounced "info of P" in conventional mathematical verbalization, just as f(x) is rendered "f of x." The alternative notation P.INFO has less of a natural flavor, since it tends to put the emphasis on P, although it can be read "P's info" the reason INFO(P) seems to be more natural is apparently the fact that P is variable, but INFO has a fixed significance when the notation is employed. By analogy, we could consider a vector A = (A [ l ] , A [ 2 ] , ..., A [ l00 ] ) to be a node having 100 fields named 1, 2,..., 100. Now the second field would be referred to as "2(P)" in our notation, where P points to the vector A; but if we are referring to the jth element of the vector, we find it more natural to write A [ j ] , putting the variable quantity "j" second. Similarly it seems most appropriate to put the variable quantity "P' second in the notation INFO(P).

Perhaps the first people to recognize that the concepts "stack" (last-in-first-out) and "queue" ( first-in-first-out) are important objects of study were cost accountants interested in reducing income tax assessments; for a discussion of the "LIFO" and "FIFO" methods of pricing inventories, see any intermediate accounting textbook, e.g., C. F. and W. J. Schlatter, Cost Accounting (New York: Wiley, 1957), Chapter 7. In 1947 A. M. Turing developed a stack, called Reversion Storage, for use in subroutine linkage (see Section 1.4.5). No doubt simple uses of stacks kept in sequential memory locations were common in computer programming from the earliest days, since a stack is such a simple and natural concept. The programming of stacks in linked form appeared first in IPL, as stated above; the name "stack" stems from IPL terminology (although "pushdown list" was the more official IPL wording), and it was also independently introduced in Europe by E. W. Dijkstra. "Deque" is a term introduced by E. J. Schweppe.

The origin of circular and doubly linked lists is obscure; presumably these ideas occurred naturally to many people. A strong factor in the popularization of these techniques was the existence of general List-processing systems based on them [principally the Knotted List Structures, CACM 5 (1962), 161-165, and Symmetric List Processor, CACM 6 (1963), 524-544, of J. Weizenbaum ] .

Various methods for addressing and traversing multidimensional arrays of information were developed independently by clever programmers since the earliest days of computers, and thus another part of the unpublished computer folklore came into existence. This subject was first surveyed in print by H. Hellerman, CACM 5 (1962), 205-207. See also J. C. Gower, Comp. J. 4 (1962), 280-286.

Tree structures represented explicitly in computer memory were described for the first time in applications to algebraic formula manipulation. The A-1 compiler language, developed by G. M. Hopper in 1951, used arithmetic expressions written in a three-address code; the latter is equivalent to the INFO, LLINK, and RLINK of a binary tree representation. In 1952, H. G. Kahrimanian developed algorithms for differentiating algebraic formulas represented in the A-1 compiler language; see Symposium on automatic Programming (Washington, D.C.: Office of Naval Research, May 1954), 6-14.

Since then, tree structures in various guises have been studied independently by many people in connection with numerous computer applications, but the basic techniques for tree manipulation (not general List manipulation) have seldom appeared in print except in detailed description of particular algorithms. The first general survey was made in connection with a more general study of all data structures by K. E. Iverson and L. R. Johnson [ IBM Corp. research reports RC-390, RC-603, 1961; see Iverson, A Programming Language (New York: Wiley, 1962), Chapter 3 ] . See also G. Salton, CACM 5 (1962), 103-114.

The concept of threaded trees is due to A. J. Perlis and C. Thornton, CACM 3 (1960), 195-204. Their paper also introduced the important idea of traversing trees in various orders, and gave numerous examples of algebraic manipulation algorithms. Unfortunately, this important paper was hastily prepared and it contains many misprints. The threaded lists of Perlis and Thornton actually were only "right-threaded trees" in our terminology; binary trees which are threaded in both directions were independently discovered by A. W. Holt, A Mathematical and Applied Investigation of Tree Structures (Thesis, U. of Pennsylvania, 1963). Postorder and preorder for the nodes of trees were called "normal along order" and "dual along order" by Z. Pawlak, Colloquium on the Foundation of Mathematics, etc. (Tihany, 1962, published by Akademiai Kiado, Budapest, 1965), 227-238. Preorder was called "subtree order" by Iverson and Johnson in the references cited above. Graphical ways to represent the connection between tree structures and corresponding linear notations were described by A. G. Oettinger, Proc. Harvard Symp. on Digital Computers and their Applications (April, 1961), 203-224.

The history of tree structures as mathematical entities, together with a bibliography of the subject, is reviewed in Section 2.3.4.6.

At the time this section was written, the most widespread knowledge about information structures was due to programmers' exposure to List processing systems, which have a very important part in this history. The first widely used system was IPL-V (a descendant of IPL-III, developed late in 1959); IPL-V is an interpretive system in which a programmer learns a machine-like language for List operations. At about the same time, FLPL (a set of FORTRAN sub-routines for List manipulation, also inspired by IPL but using subroutine calls instead of interpretive language) was developed by H. Gelernter and others. A third system, LISP, was designed by J. McCarthy, also in 1959. LISP is quite different from its predecessors: programs for it are expressed in mathematical functional notation combined with "conditional expressions" (see Chapter 8), then converted into a List representation. Many List processing systems have come into existence since then, of which the most prominent historically is J. Weizenbaum's SLIP; this is a set of subroutines for use in FORTRAN programs, operating on doubly linked Lists.

An article by Bobrow and Raphael, CACM 7 (1964), 231-240, may be read as a brief introduction to IPL-V, LISP, and SLIP, and it gives a comparison of these systems. An excellent introduction to LISP has been given by P. M. Woodward and D. P. Jenkins, Comp. J. 4 (1961), 47-53. See also the authors' discussions of their own systems, which are each articles of considerable historical importance: "An introduction to IPL-V" by A. Newell and F. M. Tonge, CACM 3 (1960), 205-211; "A FORTRAN-compiled List Processing Language" by H. Gelernter, J. R. Hansen, and C. L. Gerberich, JACM 7 (1960), 87-101; "Recursive functions of symbolic expressions and their computation by machine, I" by John McCarthy, CACM 3 (1960), 184-195; "Symmetric List Processor" by J. Weizenbaum, CACM 6 (1963), 524-544. The latter article includes a complete description of all of the algorithms used in SLIP. In recent years a number of books about these systems have also been written.

Several string manipulation systems have also appeared; these are primarily concerned with operations on variable-length strings of alphabetic information (looking for occurrences of certain substrings, etc.). Historically, the most important of these have been COMIT (V. H. Yngve, CACM 6 (1963), 83-84) and SNOBOL (D. J. Farber, R. E. Griswold, and I. P. Polonsky, JACM 11 (1964), 21-30). Although string manipulation systems have seen wide use and although they are primarily composed of algorithms such as we have seen in this chapter, they play a comparatively small role in the history of the techniques of information structure representation; users of these systems have largely been unconcerned about the details of the actual internal processes carried on by the computer. For a survey of string manipulation techniques, see S. E. Madnick, CACM 10 (1967), 420-424.

The IPL-V and FLPL systems for List-processing did not use either a garbage collection or a reference count technique for the problem of shared Lists; instead, each List was "owned" by one List and "borrowed" by all other Lists which referred to it, and a List was erased when its "owner" allowed it to be. Hence, the programmer was enjoined to make sure no List was still borrowing any Lists that were being erased. The reference counter technique for Lists was introduced by G. E. Collins, CACM 3 (1960), 655-657; see also the important sequel to this paper, CACM 9 (1966), 578-588. Garbage collection was first described in McCarthy's article cited above; see also CACM 7 (1964), 38, and an article by Cohen and Trilling, BIT 7 (1967), 22-30.

Unfortunately, too few people have realized that manipulation of links is not a magic, complex process that must be done by fancy List manipulation routines. There is still a widespread tendency to feel that the advantages of link manipulation can be obtained only by using a large List-processing system; actually, as we have seen in many examples throughout this chapter, it is quite easy to design linking algorithms which operate more efficiently than a general List-processing subroutine could possibly do. The author hopes that these detailed examples will help to convey a better-balanced attitude towards the use of structural links in data.

Dynamic storage allocation algorithms were in use several years before published information about them appeared. A very readable discussion is given by W. T. Comfort, CACM 7 (1964), 357-362 (an article written in 1961). The "boundary-tag' method, introduced in Section 2.5, was designed by the author in 1962 for use in a control program for the B5000 computer. The "buddy system" was first used by H. Markowitz in connection with the SIMSCRIPT programming system in 1963, and it was independently discovered and published by K. Knowlton, CACM 8 (1965), 623-625; see also CACM 9 (1966), 616-625. For further discussion of dynamic storage allocation, see the articles by Iliffe and Jodeit, Comp. J. 5 (1962), 200-209; Bailey, Barnett, and Burleson, CACM 7 (1964), 339-346; Berztiss, CACM 8 (1965), 512-513; and D. T. Ross, CACM 10 (1967), 481-492.

A general discussion of information structures and their relation to programming has been given by Mary d'Imperio, "Data Structures and their Representation in Storage," Annual Review of Automatic Programming 5 (Oxford: Pergamon Press), in press. This paper is also a valuable guide to the history of the topic, since it includes a detailed analysis of the structures used in connection with twelve List processing and string manipulation systems. See also the proceedings of two symposia, CACM 3 (1960), 183-234 and CACM 9 (1966), 567-643, for further historical details. (Several of the individual papers from these proceedings have already been cited above.)

An excellent annotated bibliography, which is primarily oriented towards applications to symbol manipulation and algebraic formula manipulation but which has numerous connections with the material of this chapter, has been compiled by Jean E. Sammet, Comput. Rev. 7 (July-August 1966), B1-B31.

in ACM Computing Reviews 9(01) January 1968 view details

in Bobrow, D. G. (ed) "Symbol Manipulation Languages and Techniques", Proceedings of the IFIP Working Conference on Symbol Manipulation Languages. North-Holland Publishing Co., Amsterdam, 1968 view details

in Bobrow, D. G. (ed) "Symbol Manipulation Languages and Techniques", Proceedings of the IFIP Working Conference on Symbol Manipulation Languages. North-Holland Publishing Co., Amsterdam, 1968 view details

[321 programming languages with indication of the computer manufacturers, on whose machinery the appropriate languages are used to know. Register of the 74 computer companies; Sequence of the programming languages after the number of manufacturing firms, on whose plants the language is implemented; Sequence of the manufacturing firms after the number of used programming languages.]

in Bobrow, D. G. (ed) "Symbol Manipulation Languages and Techniques", Proceedings of the IFIP Working Conference on Symbol Manipulation Languages. North-Holland Publishing Co., Amsterdam, 1968 view details

in [ACM] CACM 15(06) (June 1972) view details

in Computers & Automation 21(6B), 30 Aug 1972 view details

in ACM Computing Reviews 15(04) April 1974 view details

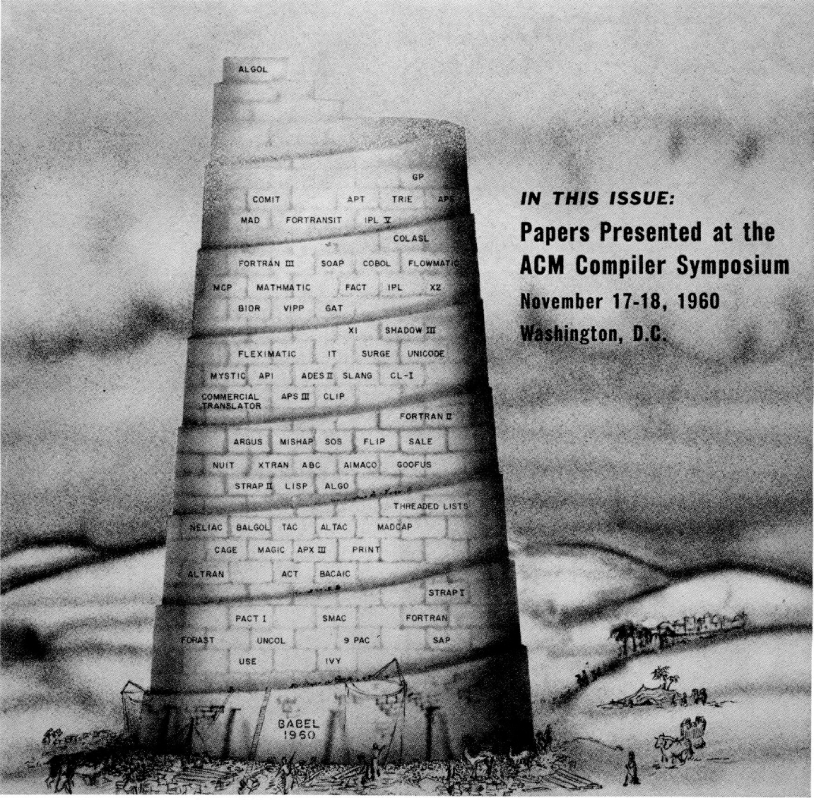

The exact number of all the programming languages still in use, and those which are no longer used, is unknown. Zemanek calls the abundance of programming languages and their many dialects a "language Babel". When a new programming language is developed, only its name is known at first and it takes a while before publications about it appear. For some languages, the only relevant literature stays inside the individual companies; some are reported on in papers and magazines; and only a few, such as ALGOL, BASIC, COBOL, FORTRAN, and PL/1, become known to a wider public through various text- and handbooks. The situation surrounding the application of these languages in many computer centers is a similar one.

There are differing opinions on the concept "programming languages". What is called a programming language by some may be termed a program, a processor, or a generator by others. Since there are no sharp borderlines in the field of programming languages, works were considered here which deal with machine languages, assemblers, autocoders, syntax and compilers, processors and generators, as well as with general higher programming languages.

The bibliography contains some 2,700 titles of books, magazines and essays for around 300 programming languages. However, as shown by the "Overview of Existing Programming Languages", there are more than 300 such languages. The "Overview" lists a total of 676 programming languages, but this is certainly incomplete. One author ' has already announced the "next 700 programming languages"; it is to be hoped the many users may be spared such a great variety for reasons of compatibility. The graphic representations (illustrations 1 & 2) show the development and proportion of the most widely-used programming languages, as measured by the number of publications listed here and by the number of computer manufacturers and software firms who have implemented the language in question. The illustrations show FORTRAN to be in the lead at the present time. PL/1 is advancing rapidly, although PL/1 compilers are not yet seen very often outside of IBM.

Some experts believe PL/1 will replace even the widely-used languages such as FORTRAN, COBOL, and ALGOL.4) If this does occur, it will surely take some time - as shown by the chronological diagram (illustration 2) .

It would be desirable from the user's point of view to reduce this language confusion down to the most advantageous languages. Those languages still maintained should incorporate the special facets and advantages of the otherwise superfluous languages. Obviously such demands are not in the interests of computer production firms, especially when one considers that a FORTRAN program can be executed on nearly all third-generation computers.

The titles in this bibliography are organized alphabetically according to programming language, and within a language chronologically and again alphabetically within a given year. Preceding the first programming language in the alphabet, literature is listed on several languages, as are general papers on programming languages and on the theory of formal languages (AAA).

As far as possible, the most of titles are based on autopsy. However, the bibliographical description of sone titles will not satisfy bibliography-documentation demands, since they are based on inaccurate information in various sources. Translation titles whose original titles could not be found through bibliographical research were not included. ' In view of the fact that nany libraries do not have the quoted papers, all magazine essays should have been listed with the volume, the year, issue number and the complete number of pages (e.g. pp. 721-783), so that interlibrary loans could take place with fast reader service. Unfortunately, these data were not always found.

It is hoped that this bibliography will help the electronic data processing expert, and those who wish to select the appropriate programming language from the many available, to find a way through the language Babel.

We wish to offer special thanks to Mr. Klaus G. Saur and the staff of Verlag Dokumentation for their publishing work.

Graz / Austria, May, 1973

in ACM Computing Reviews 15(04) April 1974 view details

in [ACM] ACM Computing Surveys (CSUR) 8(1) March 1976 view details

In late 1954, the authors began a collaboration with J. C. (Cliff) Shaw in research on complex information processing with computers. The initial goal was to develop a computer program that could learn to play chess, but in the autumn of 1955 this target was displaced by the interim goal of writing a program that could prove theorems in the propositional calculus of Principia Mathematica. Newell and Shaw, both on the staff of the Rand Corporation in Santa Monica, had access to the newly completed JOHNNIAC computer there, while Simon, on the faculty of Carnegie Institute of Technology, was a consultant to Rand.

In order to achieve our goals, we decided that it was essential to devise a programming language capable of handling large, complex symbol structures whose size and configuration would be extremely difficult to predict in advance, and would have to be modified during run time. Our solution was to organize memory in terms of list structures and to construct a language designed to operate on lists. By the end of 1955, the first list-processing language, IPL-II, had taken shape; early in the following year it was operating on the JO INNIAC and a theorem-proving system had been coded in it.

Meanwhile, Newell had moved to Pittsburgh in order to take a doctoral degree there, having interrupted his graduate education when he began work at Rand some years earlier. In Pittsburgh, he retained his affiliation with Rand, and the research continued vigorously, but with Shaw and Newell on opposite ends of a teletype wire that connected them across the continent -- a sort of prehistoric network with human node processors similar to Arpanet IMPs.

On the campus of Carnegie Institute of Technology, a few faculty members had been exposed, in the early 1950s, to the new electronic computers. As we have seen, one of these was Simon, another was Charles C. Holt, an economist and engineer. Both were associated with the Graduate School of Industrial Administration (GSIA), a "new- look" business school established in 1949 with a strong emphasis on the developing tools of operations research and management science. Holt Newell, and Simon thought the time ripe to bring a computer to the CIT campus, and were successful in persuading the dean of GSIA, G. L. Bach, and the dean of Science and Engineering, Richard Teare, to underwrite the cost. Since the electrical engineers on campus were mainly concerned with avoiding responsibility for maintaining such a machine if it arrived, and most mathematicians and scientists could not see how or why they would use one, there was no objection to locating the computer in the basement of GSIA.

Choosing an appropriate computer called for consultation with other universities that already had one, and consultation led to Alan Perlis at Purdue, with the result that an IBM 650 and Alan arrived in Pittsburgh in the spring and summer, respectively, of 1956. Perlis has told his own story of the 650 at Carnegie in the preceding article in this issue. Here, we need only record our deep gratitude for his imaginative and productive leadership of computing at the university during the decade and a half he was our colleague.

By the spring of 1956, a number of graduate students, enrolled in Simon's course on Mathematical Methods in Social Science, were considering doing theses on complex information processing (alias artificial intelligence). These included Edward A. Feigenbaum, Julian Feldman, Robert K. Lindsay, Fred M. Tonge, and soon afterward Geoffrey Clarkson. Although we could provide them with some access to the Rand machines (Tonge wrote his thesis program in IPL-IV on the JOHNNIAC), it became imperative that we bring up a list-processing language for student and faculty use at Carnegie. Today, a programmer might have second thoughts about putting a list-processing language on a machine with only 2000 words of highspeed memory. When we remember that the highspeed store of the JOHNNIAC was only 4096 words (supplemented by a drum with about 10,000 words of usable capacity), the memory limits of the 650, while severe, seemed manageable.

IPL-V, the language developed for the IBM 650, "started out in late 1957 to be a 'modified copy' of IPL-IV (then being implemented on JOHNNIAC) (Newell 1963, p. 86). An initial running system was produced under Newell's direction in early 1958, mainly by Carleton B. Hensley and Tonge (Hensley, Newell, and Tonge 1958). Meanwhile, since the Rand Corporation had acquired an IBM 704, it was decided that the language should be designed to run on both the 650 and the 704. The revised language, with Newell, Tonge, Feigenbaum, Bert Green, Jr., and George Mealy as its principal designers, was described, in June 1958, in a preliminary version of the IPL-V manual, and the system became operational on the 704 at the end of the summer of 1959 (Newell 1963).

Thus, IPL-V having been coded for both 650 and 704, and provided with a manual (first edition, 1961; second edition, 1964), became the first list-processing language to be made available for public use. Subsequently, IPL-V was brought up on a substantial number of other computers and continued for a decade to be an important language, both for research in artificial intelligence and cognitive science and for teaching the basic concepts of list processing.

A glance at the pioneering research that is collected in Feigenbaum and Feldman's Computers and Thought (1963) shows that the IPL-V system on the 650 at Carnegie made important contributions to the foundations of AI and cognitive science. Among the programs written at that time were Feigenbaum's EPAM, a simulation of verbal learning processes, Feldman's program for simulating human decisions in the binary choice experiment, Kenneth Laughery's program for concept formation, Lindsay's SAD SAM, an early program with natural language and reasoning capabilities, and Clarkson's simulation of the investment decisions of a bank trust officer. As can be seen from this list, most of this research focused on the simulation of human cognitive processes.

Although these programs were written in IPL-V, and at least partially debugged on the 650 at Carnegie, most experience in running them on actual tasks was gained on other machines. Both the small memory of the 650 and its brief tenure at Carnegie after IPL-V became available prevented extensive runs of large programs with it. So the greatest significance of the machine in the history of AI was as the instigator and test bed of the first public list-processing language, and as an instrument for teaching list processing to the first generation of students in cognitive science.

The availability of IPL-V on the 650, and of a carefully written manual describing the language (Newell et al. 1961; 1964), contributed much also to the diffusion of knowledge of list-processing techniques to other university campuses and AI research groups. Because it could be run on a wide variety of computers of the 1960s, someone quipped that IPL-V was a machine-independent language, and that the machine it was independent of was the 650.

An interesting, but probably undecidable, historical question is whether IPL-V made a significant contribution to the set of concepts that were some years later labeled "structured programming." At the time IPL-V was produced, mainstream systems programmers and researchers on algebraic programming languages paid little attention to list-processing languages, which were generally regarded as esoteric and unbearably wasteful of machine time and memory. It was probably Chapter II of Knuth's memorable Fundamental Algorithms (1969) that first gave them a measure of credibility outside the AI community. Therefore, although a strong case can be made that the central principles of structured programming were developed and employed extensively by users of list-processing languages, almost from the day those languages became available, the principles were likely largely unknown to the developers of algebraic languages who independently reinvented them.

The IPL-V manual is quite explicit in its advocacy of top-down programming and independent closed subroutines. A few brief quotes will indicate the explicitness of its conceptions in this domain.

One programming strategy, often called the "top- down" approach, is to divide each large process, no matter how complicated, into a small number of subprocesses. Each of these subprocesses is given a name, and its function -- the processing it accomplishes -- is defined precisely by specifying exactly what inputs it requires and what outputs it produces. How the subprocess will carry on this processing does not matter at this stage. (Kelly and Newell 1964, pp. 104-105)

Once any process is coded, attention can be directed to developing and coding each of its subprocesses, using exactly the same strategy of decomposing these into subprocesses. Ultimately, subprocesses are reached that can be defined directly in terms of the IPL primitive processes, so that the decomposition comes to a stop. Although apparently at each stage all the complexities are being relegated to the subprocesses and only codes for trivial processes are being written, it will be found at last that nothing complicated remains to be done at the bottom of the hierarchy. (1964, p. 105)

Another principle may be called the principle of isolation. The flexibility in hierarchical organization depends on each subroutine being isolated from the rest of the program, except for a small number of well-defined connections.... Concretely, one subroutine should not link to another. (1964, p. 109)

The top-down approach (with some needed qualifications, not quoted here), the characterization of processes solely in terms of inputs and outputs, hierarchical structure, and wariness of COTOS are all here, quite explicitly. Nearly a quarter-century later, the principles of programming enunciated in the IPL-V manual sound as modern and relevant as when they were written. It can be seen that the IBM 650 at Carnegie Institute of Technology played a significant role in the early research on artificial intelligence and cognitive science, not so much because it provided computing cycles -- although it did that, too -- as because it provided the occasion for developing the first widely used list-processing language, and a facility for training many early computer scientists in the concepts and skills required for using computers to do complex information processing.

in Annals of the History of Computing, 08(1) January 1986 (IBM 650 Issue) view details