BALGOL (ID:371/bal004)

Burroughs Algorithmic Language

Algebraic-language one-pass compiler for the Burroughs 220.

Called ALGOL, but as a pre-emptive measure: it was produced before the IAL report even came out.

Hardware:

- Burroughs 220 (Burroughs)

Related languages

| Language | | Related language | Relationship |
|---|---|---|---|
| Burroughs Algorithmic Compiler | => | BALGOL | Evolution of |
| IAL | => | BALGOL | Influence |
| BALGOL | => | Algol 205 | Evolution of |
| BALGOL | => | SUBALGOL | Extension of |

References:

On the console of the Burroughs 220 at the Stanford University Computation Center is a sign which reads: "The purpose of computing is insight, not numbers."

Despite this noble philosophy of Richard Hamming's, the Computation Center considers itself a problem-solving "factory," and although the goal of an efficient computing job shop is not an uncommon one, Computation Center Director George Forsythe feels that the Burroughs Algebraic Compiler (BALGOL) has allowed his center to achieve new lows in button-pushing.

Stanford's 220 has a 10K memory, five tape units, and a one-in, two-out Cardatron card-handling subsystem. The Computation Center also has a 650, which sees limited use by those familiar with its language and who want to spend the time programming it.

Pointing to the IN and OUT boxes outside the 220 room, Forsythe says, "This is our computing machine, as far as the students are concerned. That's all they need to know."

The compiling speeds of the one-pass BALGOL compiler (50 to 100 ALGOL statements per minute, which generate an average of 500 machine-language instructions per minute) make it possible for the Center to run an average of about 120 BALGOL problems per working day, plus additional work. The top production figure for one day on BALGOL runs was 288 problems in 16 hours.

According to Forsythe, some large-scale computers, 20 to 30 times as fast internally as the 220, take three times as long to compile using FORTRAN. This is partly because their FORTRAN compiler, even for the simplest programs, polishes and polishes . . . and thus requires two to three minutes for the simplest kind of problem.

For a computation center like Stanford's, interested in hundreds of problems a day, this is not the solution, says Forsythe. "Our goal," he adds, "is to convert every student at Stanford: get them out of the stone age and into the computing age."

The manner in which BALGOL has helped the Stanford Computation Center move towards this goal is reflected in the low "overhead" of 18 seconds per problem. Of this, Forsythe points out, 11 seconds are for supervisory printout and could be eliminated.

BALGOL is described by Forsythe as something between ALGOL 58 and ALGOL 60: "It has most of the important ALGOL features and might be called ALGOL 59. Its language is rich and flexible; you are not limited in what you can say. Too, it has the capacity of easily enlarging itself, of defining new entities which are easily incorporated into the language."

Used with a "load-and-go" compiler, it features semiautomatic segmentation. This permits the main body of the program to become a master routine which controls operation sequence and the memory assignment of program segments.
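The article does not say how BALGOL's segmentation worked internally. As a rough illustration of the general idea (a master routine that calls program segments in sequence while a manager brings each one into a shared memory area on demand), here is a generic overlay sketch in Python. The `OverlayManager` class, the two-slot limit, the oldest-first replacement rule, and the segment names are all hypothetical, not BALGOL's.

```python
from typing import Callable, Dict, List

Segment = Callable[[dict], None]


class OverlayManager:
    """Hypothetical overlay manager: at most `slots` segments are resident at once."""

    def __init__(self, tape: Dict[str, Segment], slots: int) -> None:
        self.tape = tape                # backing store ("magnetic tape") holding every segment
        self.slots = slots              # how many segments fit in the shared memory area
        self.resident: List[str] = []   # resident segments, oldest first
        self.loads = 0                  # number of simulated tape reads

    def call(self, name: str, state: dict) -> None:
        if name not in self.resident:
            if len(self.resident) == self.slots:
                self.resident.pop(0)    # overwrite the oldest resident segment
            self.resident.append(name)
            self.loads += 1             # simulate reading the segment from tape
        self.tape[name](state)          # run the (now resident) segment


# Hypothetical program segments sharing one state dictionary.
def read_input(state: dict) -> None:
    state["data"] = [3.0, 1.0, 2.0]


def sort_data(state: dict) -> None:
    state["data"].sort()


def report(state: dict) -> None:
    state["report"] = ", ".join(f"{x:g}" for x in state["data"])


tape = {"read": read_input, "sort": sort_data, "report": report}
mgr = OverlayManager(tape, slots=2)
state: dict = {}

# The "master routine": it fixes the order of operations; the manager handles memory.
for seg in ["read", "sort", "report"]:
    mgr.call(seg, state)

print(state["report"], mgr.resident, mgr.loads)
```

Keeping the sequencing in the master loop and the memory bookkeeping in the manager mirrors the division of labor the article describes, though the real system's policy is not stated.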

Approximately 95% of the Stanford programs for the 220 are written in BALGOL, with the remainder mostly written in BLEAP, a symbolic assembly language.

Forsythe also points out that BALGOL is easy to teach. The Computation Center conducts 10-hour BALGOL programming courses which are offered in two-hour sessions over one school week. So far, about 10 such courses have been offered to some 300 students. The Computation Center also offered a special pre-school course to 50 members of the engineering faculty this fall.

BALGOL has also found its way into Math. 136, a general introduction to programming. For this course, Forsythe is using the 220 as a grading machine for problems written in BALGOL. To his program deck, the student adds a card which calls in a grader program from magnetic tape. The grader provides the data for each case in turn to the student's program, which provides the solutions. The solutions are evaluated by the grader, which then summarizes the student's scores for all cases and prints out comments. Students usually get two- to three-hour service. "The computer is an ideal teaching machine," says Forsythe.

The grader program is based on that developed by Perlis and Van Zoeren at Carnegie Institute of Technology.
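A minimal sketch of the grading arrangement described above, in Python: the grader calls the student's procedure once per case, checks each answer against a reference value within a tolerance, and returns a score with comments. The function name `grade`, the tolerance, and the sample square-root problem are assumptions for illustration; the Perlis and Van Zoeren grader is not described here in enough detail to reproduce.

```python
import math
from typing import Callable, List, Tuple

Case = Tuple[float, float]  # hypothetical case format: (input x, expected result)


def grade(student: Callable[[float], float], cases: List[Case],
          tol: float = 1e-6) -> Tuple[int, int, List[str]]:
    """Feed each case to the student's procedure, check it, and summarize the score."""
    comments: List[str] = []
    passed = 0
    for i, (x, expected) in enumerate(cases, start=1):
        try:
            got = student(x)
        except Exception as exc:  # a crashing case scores zero, but grading continues
            comments.append(f"case {i}: error ({exc})")
            continue
        if math.isclose(got, expected, rel_tol=tol, abs_tol=tol):
            passed += 1
        else:
            comments.append(f"case {i}: expected {expected}, got {got}")
    return passed, len(cases), comments


# Hypothetical assignment: write a square-root procedure.
cases: List[Case] = [(4.0, 2.0), (2.0, math.sqrt(2.0)), (0.0, 0.0)]
print(grade(math.sqrt, cases))        # a correct routine passes every case
print(grade(lambda x: x / 2, cases))  # a wrong one: only cases 1 and 3 agree
```

Collecting comments instead of stopping at the first wrong answer lets one run report on every case, which matches the summary-and-comments behavior the article describes.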

In addition to its use by students, BALGOL has played a key role in work being done by the members of the University's recently established Computer Science Division, of which Professor Forsythe is also the head.

Professor John G. Herriot of the Computer Science Division is using BALGOL in his work on the solution of boundary value problems by kernel-function methods.

This involves a great deal of calculation ... so much that Herriot considers the work impractical without computers and compilers such as BALGOL. Using the Burroughs compiler, he was able to do the analysis and programming over a period of three to four months, working on it part-time.

With the help of a graduate assistant, Herriot is also using BALGOL in the solution of polynomial equations. They are investigating in particular, with Professor Hans Maehly, the Laguerre method of solving polynomial equations. Also, a BALGOL procedure has been written for solving differential equations by the Adams method. This incorporates an automatic change of step size and flexible output, permitting the printout of points at any desired interval.

Other work in progress includes finding particular solutions of more general partial differential equations than that of Laplace. "Here BALGOL has been especially helpful in translating equations to a program for the machine," says Herriot.

John Welsch, Alan Shaw and James Vandergraft of the Computation Center have prepared magnetic tape handling routines for use with BALGOL. These include external procedures for reading, searching and writing on mag tape, and give the programmer a much larger memory with which to work.

Welsch has completed a routine which allows a compiled program to be stored on magnetic tape for later running without recompiling. The Center has also developed an operating system which will allow programs to be compiled and run without operator intervention.

A great deal of work is also being done on matrix problems: inversion and the determination of eigenvalues for both symmetrical and non-symmetrical matrices. This work will be published in the language of ALGOL 60.

At one time, consideration was given to the possibility of a mechanical translation from BALGOL to ALGOL 60, but the translation is straightforward enough that this was deemed unnecessary.

The Computation Center is also active in assisting other academic departments in their use of the Burroughs 220. The Medical School's Department of Psychiatry uses the computer in connection with its study of the learning process, especially as it is affected by removal of parts of the brain. Equipment operated by monkeys controls paper tape punches, on which monkey responses are recorded. The paper tape serves as input to the 220, which maintains an updated record of all experiments on magnetic tape. Wade Cole of the Computation Center admits this is a "simple-minded" use of a computer, but says, "It's a beginning in a field which has seen very little sophisticated use of computers."

Working with Drs. J. G. Toole and J. von der Groeben of the Medical School's Department of Cardiology, Professor Forsythe has developed a program on the reduction of vector electrocardiograph data. Future medical school uses of the 220 include diagnosis, simulation, and on-line reduction of experimental data.

The Computation Center has also collaborated with the Mechanical Engineering department on the numerical solution of non-linear partial differential equations for the laminar flow of an incompressible fluid. The 220 is also performing data reduction for radio-telescope signals generated in the University's radio science laboratories.

Another data reduction task will involve the work of Alphonse Juilland of the department of Modern European Languages, in his studies of the relationships of the Romance Languages to Latin. Professor Juilland is investigating language structure from phonetic and lexicographical standpoints, and will use the computer to record and compare the basic structural units of some 500,000 words of given Romance languages.

Other uses of the 220 include a program being developed by Professor R. V. Oakford of Industrial Engineering for the optimal scheduling of high school classes, given the available classrooms, courses, sections, and the number of students. The social sciences also make frequent use of the 220 for various statistical work and data reduction.

Finally, the 220 is used on the third shift by the First National Bank of San Jose for 40 hours a week for demand-deposit accounting.

According to Cole, "BALGOL has played a key role in permitting experts in various disciplines to communicate with the computer. It allows the problem sponsor to program the job himself in a language with which he is familiar . . . and in the process helps remove the old communication barriers between the man with the problem and the man with the machine." Professor Forsythe adds, "BALGOL allows research to go on where it belongs."

Equally important, of course, is the high-volume efficiency it brings to the closed shop operations. "The real payoff," says Forsythe, "is the ability to compile programs rapidly and to take out the errors in the source language." This latter is accomplished by the compiler, which flags violations of the rules of the language, and lists them without interruption of the compiling process. Thus a "linguistically correct" program can usually be achieved in two or three compilations. It is also possible to detect logical and other programmer errors through the addition of temporary output statements, which are easily added or removed, since the program is normally recompiled every time it is run.

Additional monitoring facilities, dumps and traces have also been added to the compiler by Burroughs, which is bringing out a new edition this month.

For Chief of Operations Al Collins, the value of BALGOL can be directly measured in dollars. "The Burroughs Algebraic Compiler is worth $10,000 a day," he says. The estimate is based on the fact that the 220 is in the compile mode four hours a day ... at a compiling rate of 500 instructions a minute, this represents 120,000 commands a day.

"If only one out of five commands compiled is of value (and that's a conservative estimate), this represents 24,000 instructions, equivalent to the output of approximately 200 programmers, who represent a daily cost (including overhead) of $10,000. Another way of looking at it is that BALGOL commands cost approximately 8¢ each, compared to $8.00 a machine-language command."
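Collins's arithmetic can be laid out step by step. The 8-cent figure corresponds to dividing the $10,000 daily value by all 120,000 compiled commands, not only by the useful fifth; the per-programmer line is derived here from his numbers and is not quoted in the article.

```latex
\begin{aligned}
4\,\tfrac{\text{h}}{\text{day}} \times 60\,\tfrac{\text{min}}{\text{h}} \times 500\,\tfrac{\text{instructions}}{\text{min}} &= 120{,}000\ \text{instructions/day} \\
\tfrac{1}{5} \times 120{,}000 &= 24{,}000\ \text{useful instructions/day} \\
\tfrac{24{,}000}{200\ \text{programmers}} &= 120\ \text{instructions per programmer-day} \\
\tfrac{\$10{,}000}{120{,}000\ \text{commands}} &\approx \$0.083 \approx 8\ \text{cents per command}
\end{aligned}
```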

"But no matter how you look at it," concludes Collins with a smile, "one thing is certain: a slow computer and a fast compiler have combined to make this one happy shop."

in Datamation 7(12) Dec 1961

in [ACM] CACM 4(01) (Jan 1961)

"The computation center also has the responsibility of supplementing the classroom instruction of the CSD (the educational role) by teaching programming and making available machine time," Forsythe said. The CSD last spring offered 18 hours of instruction, including what has been called "the second most popular course on campus": Use of Automatic Digital Computers (three credit hours). Other courses are Computer Programming for Engineers, Numerical (and Advanced Numerical) Analysis, Intermediate (and Advanced) Computer Programming, and such seminar-type courses as Computer Lab, Advanced Reading and Research, and Computer Science Seminar. Selected Topics in Computer Science this fall will cover computing with symbolic expressions and the LISP language ... in the winter, a mathematical theory of computation ... and in the spring, artificial intelligence.

Last year, 876 students were enrolled in CSD courses, of whom more than 50 per cent were graduate students. (Total enrollment at Stanford is approximately 10,000, of whom 5,000 are graduate students.) During the current school year, 600-700 are expected in introductory programming courses, and 70 in the master's degree program. A PhD in numerical analysis is also offered, five graduates having been produced so far, and work has begun on a similar program in computer sciences.

The majority of programs, and almost all students' work, has been written in BALGOL (Burroughs Algebraic Language), for which the 7090 has a translator written at Stanford and based on a previous translator for the 220. In timing runs, BALGOL was found to compile three to 10 times faster than FORTRAN, although it is five to 10 per cent slower on the object program. In a test case, a problem of 150 equations (150 unknowns) had a compile-and-go time of 2.1 minutes in FORTRAN, and 2.5 minutes in BALGOL; the latter time was reduced to 1.6 minutes by coding the inner loop in machine language. The same problem in B5000 ALGOL ran 3.2 minutes, a respectable figure considering that the 90's memory is almost three times faster than the 5000's. In another test, a 7090 FORTRAN program with a run time of two minutes was translated and run on the 5000 in four minutes.

In a quite different test, involving the sorting of the columns of a two-dimensional array, the following times were found:

| System | Run time | Total time |
|---|---|---|
| B5000 ALGOL | 405 sec. | about 425 sec. |
| 7090 FORTRAN | 47 sec. | 72 sec. |
| 7090 BALGOL | 71 sec. | 84 sec. |
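If the difference between total time and run time is taken as compile-and-load overhead (an assumption; the article does not break the totals down), the sorting-test figures yield the overheads computed below. The Python sketch only performs that subtraction; "about 425 sec." is treated as 425, and the name `non_run_time` is hypothetical.

```python
from typing import Dict, Tuple

# (run time, total time) in seconds, from the sorting test; "about 425" taken as 425.
timings: Dict[str, Tuple[int, int]] = {
    "B5000 ALGOL": (405, 425),
    "7090 FORTRAN": (47, 72),
    "7090 BALGOL": (71, 84),
}


def non_run_time(run: int, total: int) -> int:
    """Time not spent executing the object program (assumed: compiling plus loading)."""
    return total - run


overhead = {name: non_run_time(run, total) for name, (run, total) in timings.items()}

# List systems from smallest to largest overhead.
for name, secs in sorted(overhead.items(), key=lambda kv: kv[1]):
    print(f"{name:13} {secs:3d} s")
```

On that reading, BALGOL's overhead in this test (13 s) is about half of FORTRAN's (25 s), while its object program ran about 1.5 times as long (71 s against 47 s).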

Student utilization of the 90 during the last school year, however, was only 22 per cent; sponsored and unsponsored research projects took up the remainder: 52 and 28 per cent, respectively. With the installation of the 5000, the campus language will be switched to ALGOL. "The new machine will be used for the job-shop work," Forsythe says, "for which it is ideal. That will make the 90 available for experiments in time-sharing and other research."

This, then, is the third area of activity of a university computing center: research which will increase man's understanding of the computing sciences. Under Forsythe's direction, Stanford computer activities have moved away from their heavy numerical-analysis orientation toward more immediate or popular research: time-sharing and list processing. The recruitment of John McCarthy from MIT and the recent courtesy appointment of GE's Joe Weizenbaum as research associate signify the development of a CSD faculty resembling Forsythe's projections.

McCarthy was one of four who delivered a time-sharing paper at the last Spring Joint Computer Conference. The system reported on then includes five operator stations on-line with an 8K PDP-1. The PDP is connected to a drum memory with which it swaps 4K words as each user's main-frame time comes up. Thus, as far as the user is concerned, he faces a 4K machine. Stanford's PDP is being replaced in February with a 20K model with 12 stations, each with a keyboard and display console. Early next year, McCarthy says, the system should be on the air, and experimentation undertaken in preparation for its use with the 7090 and in the computer-based learning and teaching lab. To be established under a one-megabuck Carnegie grant, the lab's physical plant will be an extension of Pine Hall.

Says Forsythe, "If you can see two or three years ahead, you're doing well in this field. But we're all looking toward the wide availability of computers to people. Our long-range goal is to continue development of the computer as an extension of the human mind, and enable almost anybody with a problem to have access to computers, just like the availability of telephone service."

McCarthy is also working on the Advice Taker system in his three-year ARPA-funded research in artificial intelligence. Designed to facilitate the writing of heuristic programs, the Advice Taker will accept declarative sentences describing a situation, its goals, and the laws of the situation, and deduce from it a sentence telling what to do.

"Out of optimism and sheer necessity of batch processing, we dream that computers can replace thinkers," says Forsythe. "This has not yet been realized, but there have been valuable by-products from this work." Forsythe and Prof. John G. Herriot have grants from the Office of Naval Research and the National Science Foundation, respectively, for work in numerical analysis.

Forsythe, associate director R. Wade Cole, and Herriot are all numerical analysts. Herriot returned last summer from a year's sabbatical which he spent teaching numerical analysis under a Fulbright grant at the Univ. of Grenoble in France, and completing his recently-published book, "Methods of Mathematical Analysis and Computation" (Wiley).

"When we get the new PDP, I hope to improve my chess and Kalah-playing programs," the heavily-bearded, bespectacled McCarthy says. His chess program can use considerable improvement, he adds, and the enlarged PDP will give him this opportunity.

The most popular program, however, is the PDP's "space war," written by the programmer Steve Russell, who worked under McCarthy at MIT and was brought to Stanford from Harvard. Aptly described as the ideal gift for "the man who has everything," space war is a two-dimensional, dynamic portrayal of armed craft displayed on the console scope. Each player utilizes four Test Word switches on the console to control the speed and direction of his craft, the firing of "bullets" and, with the fourth, the erasure of his craft from the CRT (to avoid being hit: a dastardly way out). With each generation of images on the scope, each player's limited supply of "fuel" and "bullets" is pre-set, and a new image is automatically generated after a player scores a hit. Much to the consternation of the CSD staff, the game is a hit with everyone; by executive fiat, its use has been restricted to non-business hours.

The young and jovial Russell, whose character and demeanor fit the nature of space war, is presently working on LISP-2.

in Datamation 7(12) Dec 1961

in Invited papers

There are many who feel that Algol, the standard international algorithmic language, accepted by mathematicians throughout the world, is the proper language for instruction and use by students. Balgol, which has been used extensively at Stanford, is an example, but Balgol is an early version, and is far from standard Algol.

in Proceedings of the 19th ACM national conference January 1964

To go on with the Algol development, the years 1958-1959 were years in which many new computers were introduced. The time was ripe for experimentation in new languages. As mentioned earlier, there are many elements in common in all algebraic languages, and everyone who introduced a new language in those years called it Algol, or a dialect of Algol. The initial result of this first attempt at the standardization of algebraic languages was the proliferation of such languages in great variety.

A very bright young programmer at Burroughs had some ideas about writing a very fast one-pass compiler for Burroughs' new 220 computer. The compiler has come to be known as Balgol.

A compiler called ALGO was written for the Bendix G15 computer. At System Development Corporation, programming systems had to be developed for a large command and control system based on the IBM military computer (AN/FSQ-32). The resulting algebraic language with fairly elaborate data description facilities was JOVIAL (Jules Schwartz' own Version of the International Algebraic Language). By now compilers for JOVIAL have been written for the IBM 7090, the Control Data 1604, the Philco 2000, the Burroughs D825, and for several versions of IBM military computers.

The Naval Electronics Laboratory at San Diego was getting a new Sperry Rand Computer, the Countess. With a variety of other computers installed and expected they stressed the description of a compiler in its own language to make it easy, among other things, to produce a compiler on one computer using a compiler on another. They also stressed very fast compiling times, at the expense of object code running times, if necessary. The language was called Neliac, a dialect of Algol. Compilers for Neliac are available on at least as great a variety of computers as for JOVIAL.

The University of Michigan developed a compiler for a language called Mad, the Michigan Algorithmic Decoder. They were quite unhappy at the slow compiling times of Fortran, especially in connection with short problems typical of student use of a computer at a university. Mad was originally programmed for the 704 and has been adapted for the 7090. It too was based on the 1958 version of Algol.

All of these languages derived from Algol 58 are well established, in spite of the fact that the ACM GAMM committee continued its work and issued its now well known report defining Algol 60.

in [AFIPS JCC 25] Proceedings of the 1964 Spring Joint Computer Conference SJCC 1964

As President of the Association for Computing Machinery I welcome you to this Conference on Programming Languages and Pragmatics. Many thanks are due to the System Development Corporation for sponsoring the conference. Even more thanks are due to the computer scientists around the world who created the subject of programming systems and brought it to the point where it merits a third major conference of this type.

You will recall that the first conference was held in Princeton in August, 1963 on the syntax of languages. A second conference on the semantics of languages was held in Vienna in September, 1964. And now this week's conference takes up the pragmatics of languages.

I am glad that it is the meeting on pragmatics to which I am privileged to address a welcome, for I am fundamentally a user of computers. As a numerical analyst, I mostly want to get my work done and my problem solved. As a teacher of elementary programming in Stanford's Computer Science Department I am most concerned that the languages be practically usable to the learner, and that the systems permit me to use the computer to help the student. I hope that pragmatics deals with those questions! Let me be specific: The two most pleasant language systems for the general scientific user that I happen to know of are both in the ALGOL 58 family: they are the Michigan MAD system and the BALGOL system for the Burroughs 220 computer (later expanded at Stanford into SUBALGOL). Let me concentrate on BALGOL, which I know better. In addition to the general features of ALGOL which most of you know, the BALGOL system had two splendid features for a user like me:

(1) The combination of language and compiler had the accommodating property of "making do" in spite of programming errors. In the presence of some stupid error, like the omission of a paren, the system did its best to remedy the error, write you a warning about it, and then go on to complete the compilation and enter execution. This usually meant that we could learn about the run-time behavior of at least that part of our program which preceded the syntactical error. This feature always struck me as a very reasonable and pragmatically satisfactory approach to the customer. I have been grieved to find that our ALGOL 60 compilers have not been able to supply this service. When I ask our people about it, they tell me that ALGOL 60 isn't the kind of language you can do this with.

If this is so, it seems to represent a large pragmatic deficiency in ALGOL 60. Is ALGOL 60 a language which can deal gracefully only with programming perfection, but collapses in the face of error? Whether the answer is yes or no, I hope you will put this property of accommodation to error into your list of useful characteristics for systems to have.
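Forsythe does not say how BALGOL repaired errors. One common technique that yields the behavior he describes is to pretend a missing token was present, record a warning, and keep parsing. The Python sketch below applies that idea to a toy expression grammar (numbers, `+`, `*`, parentheses); the grammar and all names (`RepairingParser`, `compile_expr`) are hypothetical and are not BALGOL's.

```python
import re
from typing import List, Tuple

TOKEN = re.compile(r"\s*(\d+|[+*()])")


def tokenize(src: str) -> List[str]:
    tokens: List[str] = []
    pos, src = 0, src.rstrip()
    while pos < len(src):
        m = TOKEN.match(src, pos)
        if not m:
            raise SyntaxError(f"bad character at {pos}")
        tokens.append(m.group(1))
        pos = m.end()
    return tokens


class RepairingParser:
    """Recursive-descent evaluator that repairs errors instead of stopping."""

    def __init__(self, src: str) -> None:
        self.tokens = tokenize(src)
        self.pos = 0
        self.warnings: List[str] = []

    def peek(self) -> str:
        return self.tokens[self.pos] if self.pos < len(self.tokens) else ""

    def take(self) -> str:
        tok = self.peek()
        self.pos += 1
        return tok

    def expr(self) -> int:    # expr := term ('+' term)*
        value = self.term()
        while self.peek() == "+":
            self.take()
            value += self.term()
        return value

    def term(self) -> int:    # term := factor ('*' factor)*
        value = self.factor()
        while self.peek() == "*":
            self.take()
            value *= self.factor()
        return value

    def factor(self) -> int:  # factor := number | '(' expr ')'
        tok = self.take()
        if tok == "(":
            value = self.expr()
            if self.peek() == ")":
                self.take()
            else:
                # Repair: act as if ')' were present, warn, and continue.
                self.warnings.append(f"missing ')' inserted before token {self.pos}")
            return value
        if tok.isdigit():
            return int(tok)
        # Repair: treat an unexpected token (or end of input) as 0 and continue.
        self.warnings.append(f"unexpected {tok!r} at token {self.pos - 1}; used 0")
        return 0


def compile_expr(src: str) -> Tuple[int, List[str]]:
    p = RepairingParser(src)
    value = p.expr()
    if p.pos < len(p.tokens):
        p.warnings.append(f"ignored trailing tokens from token {p.pos}")
    return value, p.warnings


print(compile_expr("(1 + 2) * 3"))  # well-formed: no warnings
print(compile_expr("2 * (3 + 4"))   # missing ')': repaired, one warning, still evaluated
```

The key design choice is that every error path returns a usable value and records a message, so processing always reaches the end of the input, much as Forsythe describes compilation continuing into execution.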

(2) The other nice property of the BALGOL system was a twofold one. First, the compiler could be persuaded to generate a relocatable machine-language procedure from a BALGOL procedure declaration. (Mr. Roger Moore was the persuader.) Second, Burroughs furnished customers not just a compiler, but actually a compiler generator, so that we could at any time create a new compiler with any library for which we could furnish machine-language relocatable procedures.

With these two features together, I could write teaching and grading programs in BALGOL separately for each problem. A small amount of operator work would enable these to be compiled into the compiler's library, where the students could have access to them in a most convenient way.
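The article does not give the 220's relocatable format. As a minimal sketch of what "relocatable" generally means: each procedure is stored with addresses relative to its own origin, plus a flag marking which addresses need fixing up, and a loader adds the load address as it places procedures one after another in a library area. The `Word` record, the mnemonics (`LOAD`, `JUMP`, `CONST`), and the procedure names below are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Word:
    op: str            # hypothetical mnemonic, not a real 220 instruction
    addr: int          # address field
    relocatable: bool  # True if addr is relative to the procedure's own origin


Procedure = List[Word]


def load(library: Dict[str, Procedure], names: List[str],
         base: int) -> Tuple[List[Tuple[str, int]], Dict[str, int]]:
    """Place procedures consecutively from `base`, fixing up relative addresses."""
    memory: List[Tuple[str, int]] = []
    entry: Dict[str, int] = {}
    origin = base
    for name in names:
        entry[name] = origin
        for w in library[name]:
            # Only flagged addresses move; absolute ones (e.g. constants) are kept.
            memory.append((w.op, w.addr + origin if w.relocatable else w.addr))
        origin += len(library[name])
    return memory, entry


# Hypothetical grading procedures, each assembled as if it started at address 0.
library = {
    "grade1": [Word("LOAD", 2, True), Word("JUMP", 0, True), Word("CONST", 7, False)],
    "grade2": [Word("LOAD", 1, True), Word("CONST", 9, False)],
}
memory, entry = load(library, ["grade1", "grade2"], base=1000)
print(entry)
print(memory)
```

Because each procedure carries its own fix-up flags, any subset can be placed at any address, which is what lets a library be rebuilt from separately compiled pieces.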

I was quite disappointed to find that none of the compilers on our IBM 7090 or Burroughs B-5000 systems were able to duplicate this convenient feature, for various reasons I do not understand.

I am not here to sell you the obsolete Burroughs 220 computer system. But I am trying to illustrate some good pragmatic properties of a certain system. Such a system was conceived by a team which surely had the user's point of view clearly in front of them. And I just happen to think that the computer user is a mighty important person, and a person who is all too easily forgotten in this world of systems experts.

If computing is to become the public utility that so many of you speak of, then computer folk had better start emulating successful utilities people. Let me cite the Bell Telephone System as one successful utility, however you look at it. You will note that its changes are made gradually, after very substantial customer research and shake-down periods. You will note that the steps from research to operation are many, slow, and carefully controlled. I have heard it said that the Bell Telephone Laboratories has hundreds of people with thousands of ideas for changing the telephone system, and that any one of these people could utterly bankrupt the service, if he were left free to put his own research ideas into practice! So the Bell System has ways to keep the flow of research ideas orderly.

Let us hope that computer scientists use their imaginations and devise all kinds of interesting things in their research. But let us also devote substantial research time to finding out what languages and systems are best suited to the men with the problems to be solved. And let us strive for decision processes which will permit us to adopt the best systems, and for enough orderliness to keep change gradual. Computing has become too important in our civilization to be allowed the privilege of disorder in its operational progress.

I wish you the greatest of success in your conference on pragmatics!

in [ACM] CACM 9(03) March 1966 includes proceedings of the ACM Programming Languages and Pragmatics Conference, San Dimas, California, August 1965

An important step in artificial language development centered around the idea that it is desirable to be able to exchange computer programs between different computer labs or at least between programmers on a universal level.

In 1958, after much work, a committee representing an active European computer organization, GAMM, and a United States computer organization, ACM, published a report (updated two years later) on an algebraic language called ALGOL. The language was designed to be a vehicle for expressing the processes of scientific and engineering calculations of numerical analysis. Equal stress was placed on man-to-man and man-to-machine communication. It attempted to specify a language which included those features of algebraic languages on which it was reasonable to expect a wide range of agreement, and to obtain a language that is technically sound. In this respect, ALGOL set an important precedent in language definition by presenting a rigorous definition of its syntax.

ALGOL compilers have also been written for many different computers. It is very popular among university and mathematically oriented computer people, especially in Western Europe. For some time in the United States, it will remain second to FORTRAN, with FORTRAN becoming more and more like ALGOL.

The largest user of data-processing equipment is the United States Government. Prodded in part by a recognition of the tremendous programming investment and in part by the suggestion that a common language would result only if an active sponsor supported it, the Defense Department brought together representatives of the major manufacturers and users of data-processing equipment to discuss the problems associated with the lack of standard programming languages in the data-processing area. This was the start of the Conference on Data Systems Languages that went on to produce COBOL, the common business-oriented language. COBOL is a subset of normal English suitable for expressing the solution to business data-processing problems. The language is now implemented in various forms on every commercial computer.

In addition to popular languages like FORTRAN and ALGOL, we have

some languages used perhaps by only one computing group such as FLOCO,

IVY, MADCAP and COLASL; languages intended for student problems, a

sophisticated one like MAD, others like BALGOL, CORC, PUFFT and various

versions of university implemented ALGOL compilers; business languages in addition

to COBOL like FACT, COMTRAN and UNICODE; assembly (machine)

languages for every computer such as FAP, TAC, USE, COMPASS; languages to simplify problem solving in "artificial intelligence," such as the so-called list

processing languages IPL V, LISP 1.5, SLIP and a more recent one NU SPEAK;

string manipulation languages to simplify the manipulation of symbols rather

than numeric data like COMIT, SHADOW and SNOBOL; languages for

command and control problems like JOVIAL and NELIAC; languages to simplify

doing symbolic algebra by computer such as ALPAK and FORMAC;

a proposed new programming language tentatively titled NPL; and many,

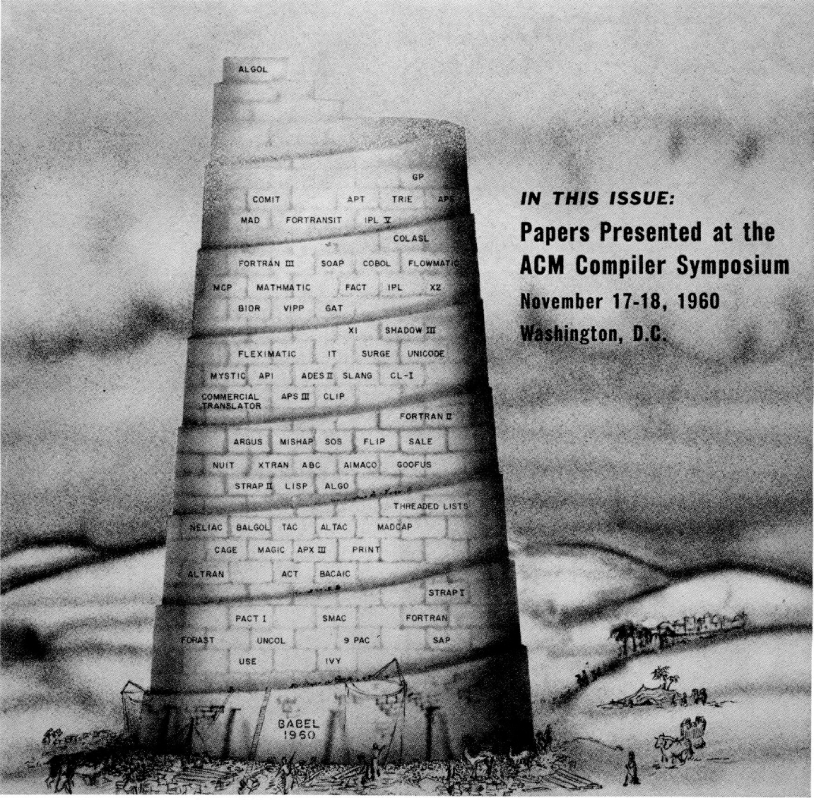

many, more. A veritable tower of BABEL!

in [ACM] CACM 9(03) March 1966 includes proceedings of the ACM Programming Languages and Pragmatics Conference, San Dimas, California, August 1965


in [ACM] CACM 15(06) (June 1972)

Turner: The one significant thing, I think, that came out of the 220 was the BALGOL compiler, which was done earlier than the 5000. [BALGOL: Burroughs Algebraic Compiler for the B 220 (BAC220).] That was all done and working nicely, and they actually even had an operating system working on that machine. When I arrived in 1960 on the ALGOL project, the machine was viewed as a machine that would execute ALGOL, that would have a virtual store (if you could call it that at the time), that would have dynamic modularity, that would be (in terms of the memories and all the rest of the components you could put on, including processors) a multiprocessing, multiprogramming type of machine. All of those things and all of those goals were designed into the machine by the time I arrived in 1960. So the 220, we'd have to say, was not a commercial success, but it did provide a vehicle for Burroughs to get into the language-processing business. BALGOL started off to be the International Algebraic Language [IAL], which was later called ALGOL 58.

Rosin: Was it done in response to customer requests or at Burroughs's instigation?

MacDonald: Things at that time weren't done in response to external stimuli. [Laughter.] They were internally created in an atmosphere that was highly creative, highly charged, and largely running on its own steam.

Galler: And not necessarily market driven, then?

MacDonald: No, they weren't. Absolutely not. Because, you see, things were so advanced that there weren't any external stimuli. They didn't exist at the time to a large extent.

Galler: How did you justify the effort within Burroughs?

MacDonald: Didn't have to. Nobody else in Burroughs knew what was going on. As long as you stayed away from the administrative restrictions, you did what you pleased. That's oversimplified, but the atmosphere was very much that way. Very unique. I've never seen it since.

Extract: ESPOL and Extended ALGOL

Language Levels

All programming for this computing system is done in one of its three higher language levels. Applicational programs are written in B 5500 Extended ALGOL, COBOL, or FORTRAN. The programming system components themselves are written entirely at the Extended ALGOL level. The Disc File Master Control Program (MCP) is written in ESPOL -- a special-purpose variant of Extended ALGOL which was defined to permit the expression of control algorithms and other important miscellany necessary for the construction of this operating system and the intrinsic functions of its compilers.

All the remaining programming system components are written in Extended ALGOL -- the same language available to application programmers. With respect to this explanation of the employment of the single-level store concept, the following should be remembered: this computing system's compilers are but Extended ALGOL programs. The action described is the same, be it for a compiler or an application program.

Segmentation

Segmentation of programs is implied by the programmer. The Extended ALGOL compiler automatically segments at the language's block level, the COBOL compiler at the paragraph level. The compilers do not allocate storage for their generated code, which is placed in the disc file library either temporarily or permanently, depending on the contents of the job call control card. Instead, storage is dynamically allocated by the MCP at run time. Core storage for program segments or arrays is not actually allocated until some execution reference is attempted. Program segments which are not referenced do not have core storage allocated for them. Similarly, even though an array is declared in a program, storage is allocated for it only on a row-at-a-time basis as the first reference to an element is attempted in the execution. The operating principle is simple. Incremental core storage is bound to a given program only as dynamically required. Program or data space is allocated to the program only as it is actually needed, and is returned when the need no longer exists.
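The row-at-a-time policy described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Burroughs's MCP logic. The class `DemandArray` and its methods are hypothetical. A row is modelled as absent (`None`) until an element in it is first read or written, and `words_allocated` simply counts the words a real allocator would have handed out.

```python
from __future__ import annotations


class DemandArray:
    """Hypothetical 2-D array whose rows receive storage only on first reference.

    Illustration only: a row stays None ("not present") until some element
    in it is read or written; release_row() hands the storage back.
    """

    def __init__(self, rows: int, cols: int) -> None:
        self.cols = cols
        self.rows: list[list[float] | None] = [None] * rows  # nothing allocated at declaration
        self.words_allocated = 0  # words a real allocator would have bound

    def _row(self, i: int) -> list[float]:
        row = self.rows[i]
        if row is None:  # first reference to this row: allocate it now
            row = [0.0] * self.cols
            self.rows[i] = row
            self.words_allocated += self.cols
        return row

    def __getitem__(self, index: tuple[int, int]) -> float:
        i, j = index
        return self._row(i)[j]

    def __setitem__(self, index: tuple[int, int], value: float) -> None:
        i, j = index
        self._row(i)[j] = value

    def release_row(self, i: int) -> None:
        """Return a row's storage once the program no longer needs it."""
        if self.rows[i] is not None:
            self.words_allocated -= self.cols
            self.rows[i] = None
```

Declaring `DemandArray(1000, 100)` binds no storage. Referencing `a[3, 7]` binds one 100-word row, further references to the same row cost nothing extra, and `release_row` hands the row back.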

Extract: ESPOL and Extended ALGOL

First Observation

Observations on two sets of programs are presented, in the following paragraphs, which demonstrate the effectiveness of the single level store implementation produced in the B 5500 computing system. The first set of two programs was run three times; only the operating conditions were varied on the successive runs. Specifically: 1. The programs were run serially, using a single processor and 16K of core memory. 2. The programs were again run serially, using a single processor and 24K of core. 3. Finally, the programs were run concurrently using two processors and 24K of core memory. (A second processor may be employed if it is present in the hardware configuration. In that event, both processors share the same common memory complex. The two processors are never assigned to work exclusively on distinct programs. Programs are "mixed" and processors are assigned by the MCP to do a bit of this, a bit of that, and so on.)

The program running times were observed for each of the three operating conditions. A brief qualification of the total observation is made, however, before the running times are presented. The backing store was made up of 450,000 words of disc storage and two magnetic drums. Either of the two programs runs nearly processor-bound in the serial operating conditions.

One program was the ESPOL compiler, which was called upon to compile an experimental version of the MCP. The ESPOL compiler itself occupies about 6,100 words of storage in the same disc file library used for the storage of application programs and files. The data to the compiler was the symbolic MCP -- about 12,000 card images.

The other program in this first set was the Extended ALGOL compiler, which was called upon to compile an experimental version of itself. The Extended ALGOL compiler occupies about 8,000 words of storage in the disc file library. The data to this compiler for the run comprised about 9,000 card images.

Many thousands of words of internal data were generated during the execution of each of the two programs. In summary, the total primary storage demands were thus quite substantial for each program.

The observed running times for these two programs are presented in Fig. 1. Note that, for the serial operating conditions, only slightly less total time (681 vs. 703 seconds) was recorded for the 24K core memory case than for the 16K case. This fact supports the conclusion that either of the programs, by itself, would run "comfortably" in a 16K configuration. (Although the times are not shown, it has been observed that three to five times longer is required to process very similar data when the compilers are run in a 12K core memory.)

Recorded on the third line of Fig. 1 is the total elapsed time which was required to run the two programs concurrently employing two processors and a 24K core memory. The elapsed times observed for each program are given within parentheses, since in this operating condition they are not expected to add up to the total time. The total time to execute both programs was only about 6% greater than the time observed for the longest of the two programs run serially in a 16K core memory.

As noted above, either program runs nearly processor-bound. Only a slight reduction, perhaps 10% in total time, might be anticipated if the two programs were run concurrently employing a single processor and 24K core memory. Of course, a substantial reduction of total time should be anticipated when two processors are employed, if enough core memory is available. Thus the primary significance of this first set of observations is not the substantial time reduction recorded. It lies, rather, in the fact that the reduction was realized when only 8K of additional core memory was made available.

Extract: Software Considerations

Software Considerations

Editor's Note: The discussion then focused on programming language issues, such as ALGOL, COBOL, how to implement them, and on the Master Control Program (MCP) that was to manage the B 5000.

Rosin: We heard earlier that there was some agreement that ALGOL was going to become the standard scientific language in the user community. Inside Burroughs, who championed that belief, and who, if anybody, resisted that belief?

Lonergan: I was going to comment more generally about that. I think in looking back one could say we were fairly naïve. On the other hand, at that stage of things, it hadn't become as patently obvious, as it has since, that IBM will not adopt industry standards unless it's to their economic advantage to do so, and it seldom is. They prefer to go their own way. They now have a 20-year history of doing this. That wasn't clear then; now it is. They dragged their feet the same way they did with ASCII. If it's not to their advantage, they won't really support standards.

Dahm: I think it was a very complex thing in some respects. I remember when I was a summer employee in 1959, I was working on the BALGOL compiler with a fellow named Joel Erdwinn, who was the project leader. We were busily trying to do our version of IAL, when one day Bob Barton came along with a FORTRAN manual in his hand. It was a really nice, well-done FORTRAN manual by IBM. He said, "Why don't you guys do this language because then you wouldn't have to write a manual?" We rejected that as being not a very good reason for picking a language. [Laughter.] So, basically, I would say that the decision that the compiler we would do would be ALGOL as opposed to FORTRAN was made by a summer employee and a project leader. I don't know that anyone else was really involved in making that decision.

Galler: With a manual, you have a specification of the language. We should all understand it's not writing a manual, just for the record.

Dahm: Sure. There existed a specification for IAL, too, in the sense of a document that was published in 1958 that described it. It turned out that there were some things that were almost impossible to implement.

Galler: Did you expect your commercial customers to use ALGOL?

Turner: No.

Galler: Then how was this machine going to be sold to commercial customers?

Turner: Well, we had a COBOL planned.

Galler: What was the balance between the emphasis on the two languages?

Hale: If you look at the machine, clearly it was an ALGOL machine. And there was a passing attempt to add some hardware facilities for COBOL which, to be generous, one could only say did work -- not very well, however. I don't mean to imply there were any changes made in the initial design of the machine. Character Mode, at least during my association, was always in the machine.

MacKenzie: I believe that Character Mode showed up in the machine about the time the horrendous aspect of this being an ALGOL-only machine began to dawn on some people. Bill went to Detroit with the proposal and kind of bounced a little bit on that one. He came back and we ended up with a Character Mode on the machine so it could now do COBOL (and some things like that) better. So that melding was going on to try to make it, I think, more acceptable.

Kreuder: That really was pretty much of an add-on.

King: It was in the B 3000. The 3000 was a word machine and a character machine, and the 4000 was a word and character machine from day one. The problem is we didn't know what was required for COBOL. We just said, "A lot of COBOL appears to be manipulation of data, editing, and such. We don't know what exactly is needed for it." I kept asking, and nobody could give me good answers.

Nobody had clear ideas on what we needed to have in a system to make it handle COBOL better. The only thing we could put in was a Character Mode. We said, "That will help, and we don't know what else to do."

MacDonald: But the intent was to make it both; it's just that we were all too dumb to know how to do it right.

Waychoff: On the selection of ALGOL: At that time, programming in higher-level languages was still a very highly controversial subject. Most people were programming in assembly language. Burroughs decided that high-level languages were the way to go, that efficient compilers could be written, and then we simply selected the best language around, and that was ALGOL. We didn't consider at all that there was the huge customer base in FORTRAN and the momentum that we'd have to overcome. ALGOL was simply a better language.

So far as accommodating COBOL, when I joined the project, the ALGOL symbols in the character set -- for multiplication and the assignment operator, implication, equivalence -- all got wiped out in favor of COBOL characters, such as dollar sign, percent sign, and some other things.

MacKenzie: I think you should also remember that there were also uncertainties as to what the commercial data processing language might become at the time, although the CODASYL activity had been organized, if I remember right, in 1958 or 1959. There was still a great deal of debate about COMTRAN, COBOL, things of that ilk; so there was that basis for uncertainty. I agree completely with what Lloyd was saying -- people in Burroughs did know what they were doing in that sense. We thought that the things people wanted to do off that objective list could not be done with FORTRAN because of implications of the language. I think that probably played a role in people's minds about the model language that they wanted to use.

Oliphint: In terms of deciding about FORTRAN or ALGOL and this sort of thing, I think there was a general feeling, which probably turned out to be naive, that if we did a good system that was designed well and fit all together and accomplished these goals, it didn't make too much difference what the programming language was, that it would be a good enough system, acceptable and sellable to people regardless of what the programming language was. That didn't really seem to be, from a customer acceptance point of view, a major consideration. Just that ALGOL fit with the design better, so it was the natural choice.

Dahm: I wasn't actually at Burroughs at the time the instruction set was designed, but my belief has always been that the instruction set was in fact designed to be the target of an ALGOL compiler, and that was a revolutionary thing to do. In fact, if you look at almost all machines that are designed today, their instruction sets are not really well designed from the point of view of being the target of a compiler.

So I think it was an extraordinarily revolutionary thing that still has not really been adopted throughout the industry: the idea of picking a language and then designing a machine that is really well suited for that language. In fact, that was kind of a Burroughs design philosophy for a number of years after this. The B 3500, for example, was a machine that was designed with the explicit idea of being the target of a COBOL compiler. Then, later on, Burroughs designed the B 1700 with the idea that one could change the instruction set depending upon what particular language the processor was processing at this moment. I think those are very, very revolutionary ideas, and in some sense, the industry as a whole has never really picked up on these ideas, which I think is a shame.

King: Very early on there was a rule set that code would never be generated by a human; code would only be generated by a compiler. We didn't have to give any consideration whatsoever to make it easy for a programmer to generate machine language; it was all going to be done by compiler. Further, there was no consideration given to an operator in the sense of operating the machine like they did with the early [IBM] 701s and 704s and the [Burroughs] 205s and 220. It was always going to be operated under control of a control program. You couldn't run it without that control program. Running it under human control or running it with human-generated code was not a design requirement.

MacDonald: Backing up both of those, there was really a policy atmosphere set up so that the system would be software driven, not just in terms of compilation, but also in terms of MCP. The whole thing was going to be driven from software and from the application end. The hardware was intended to be a vehicle for both operating system and compilers -- not totally realized, but remarkably well realized for that period of time, I think. Echoing what Dave said, I haven't seen it since. (Maybe Lloyd's doing it at this point. I hope he is.)

MacKenzie: Somewhat facetiously, I'd say that the genius of that decision about compiler code only was one that had a very profound effect. Namely, it took the hardware engineering people in a sense out of the system business. Before that, a great deal of their domain was the machine-language instruction set -- the interaction with customers on the machine-language instruction set. So, in a sense, the higher-level system became a domain of programming people rather than hardware engineering people.

Kreuder: The system got rid of one of the world's largest-diameter headaches -- namely, the design of the control console with all those flashing lights, because that was the thing that every vice-president thought he knew something about. [Laughter.]

Dahm: I think one of the things that was very revolutionary was the whole idea that all of the software could also be written in a high-level language. I remember when Bob Barton told me that we were going to have to do this ALGOL compiler in ALGOL, and I said, "That's crazy. You can't possibly do that." He said, "Why not?" I really could never give him a good answer why not, so we had to do it. [Laughter.]

Barton: In the interest of history, I don't think that was a really revolutionary idea at the time. There was a group in IBM, I think led by Bob Bemer, that attempted to do the same with -- maybe it was IAL, I'm not sure. I don't think that was a revolutionary idea at all.

Rosin: In a product, Bob? There were several people in the world who were using high-level languages to process ...

Barton: My only capacity was as a consultant. I'm just trying to say some of the things I know that are external to the whole B 5000 thing. I think I am qualified to say something about those facts.

Galler: We heard a very strong statement that this was an ALGOL machine and then COBOL was brought in, etc. Paul Colen, who isn't here, sent me a statement. He says that a lot of the decisions were made because "They wouldn't play in Peoria." Now, was that a phrase that was used at that time? And what did it mean?

Lonergan: I've heard the phrase, but not in that context.

Kreuder: It wasn't used then. He was referring to the idea that a delay-line memory wouldn't be salable. I think that's the context.

Dent: Many of the things that were talked about didn't play at all for a long time! [Laughter.] Trying to sell a machine where neither the operator nor the user nor the programmer knew where the program was in memory was very difficult.

Rosin: As I understand from what you all have said, there was an early decision that this was going to be an ALGOL machine. A decision was made sometime afterward that there would be a strong effort also to accommodate COBOL. At some time later in the history of this machine, a decision was made that there would be a FORTRAN processor of some kind or other. Why was that decision made? And would the architecture of the B 5000 have been significantly different had FORTRAN been included from the beginning?

Kreuder: I can tell you that the decision was made in Paul Leebrick's hotel room at an NCC [National Computer Conference]. Brad was there. Leebrick gathered us all together, and he said, "Men, we've got to have a FORTRAN compiler." I was full of about 50 jillion reasons why it couldn't be done, and Brad interrupted me and said, "It's no biggie. [Laughter.] How soon do you have to have it?" [Laughter.] Then later, "typical" Brad says, "By the way, Paul, which particular FORTRAN are you interested in?" [Laughter.]

MacKenzie: Apparently the FORTRAN translator had originally been planned in the project to be written in ALGOL to translate FORTRAN to ALGOL. Fran Crowder was working on it in Product Planning. Lloyd, your section had it, if you remember. The event that Norm's talking about was probably in 1965, and Joe Hootman and other people will tell you there was a tremendous frustration in the marketing organization because of the lack of FORTRAN.

In a sense I think it was a conscious decision on the part of people not to have FORTRAN because of what the implications of having it would mean in terms of the way it would be sold and potentially compromising the system. I know if I said such a thing as Norm was saying, it's because Lloyd Turner told me at that time that it could be done. [Laughter.] It turned out that it was not a big or a difficult thing to do at that point in time. I know in a strictly personal sense -- right, wrong, or indifferent -- I never wanted to see it on there, because to me it had implications of trying to sell a machine head-on in FORTRAN shops. I personally thought it would be a terrible mistake and not the kind of thing to be trying to do with this wonderful machine called the 5000, which had a lot of other objectives.

Turner: Richard and I had just come from the field, and we had done a FORTRAN compiler. In fact, my arithmetic algorithm that I used was yours, Bernie. And, of course, we knew all the ridiculous constraints that one has in implementing FORTRAN with static storage allocation. That was diametrically opposed to one of the basic design criteria of the 5000. No matter how much you tried to optimize it, it was not going to run well.

We tried our best to discourage writing a compiler, so we had to say, "Well, we'll give you something that will get you by and give you a [preprocessing] translator." It worked reasonably well for a translator with all its inefficiencies. And then later on, we actually did the compiler after I left. I have to say that even on the 6000 series which followed, FORTRAN performance was always, and always had to be, very poor because the system was built to do something different. In fact, it wasn't that it wasn't designed to do that; the design was at odds with running FORTRAN.

Creech: I know for myself, and I believe for the people I associated with, there was a sincere -- although in hindsight naive -- belief that ALGOL was such a superior language that it would take the world by storm.

Oliphint: Oh, absolutely.

King: Yes.

Creech: We believed that. We were wrong.

Rosin: Was there a significant difference between the Burroughs 5000 ALGOL and ALGOL 60, which was accepted to be the standard?

MacKenzie: No. [General consensus in background.]

Dahm: B 5000 ALGOL was a superset of the ALGOL 60 report.

Rosin: What were the important differences?

Creech: We had I/O! [Laughter.]

Oliphint: There was a string procedure added to handle the Character Mode operation generally associated with I/O, if I recall.

Rosin: Didn't you have to add the ability to manipulate fields within a word in order to generate a machine-language version of an original ALGOL program?

Dahm: Yes. That was an extension we made. The capability of referring to a partial field of a word.
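As a rough sketch of what referring to a partial field of a word involves, the Python functions below extract and replace a bit field within a 48-bit word. They are hypothetical helpers, not Extended ALGOL syntax. Several details are assumptions made for illustration: the names `get_field` and `set_field`, the numbering of bits from 47 (most significant) down to 0, and the choice to identify a field by its highest-order bit and its length.

```python
from typing import Tuple

WORD_BITS = 48  # word length of the B 5000 / B 5500


def _field_mask(start: int, length: int) -> Tuple[int, int]:
    """Return (shift, mask) for a field whose highest-order bit is `start`.

    Assumed numbering: bit 47 is the most significant bit, bit 0 the least.
    """
    if not (1 <= length <= WORD_BITS and 0 <= start < WORD_BITS):
        raise ValueError("field lies outside the word")
    shift = start - length + 1
    if shift < 0:
        raise ValueError("field runs past bit 0")
    return shift, ((1 << length) - 1) << shift


def get_field(word: int, start: int, length: int) -> int:
    """Read the `length`-bit field whose highest-order bit is `start`."""
    shift, mask = _field_mask(start, length)
    return (word & mask) >> shift


def set_field(word: int, start: int, length: int, value: int) -> int:
    """Return `word` with that field replaced by the low `length` bits of `value`."""
    shift, mask = _field_mask(start, length)
    return (word & ~mask) | ((value << shift) & mask)
```

For example, `set_field(0, 7, 4, 0xA)` yields `0xA0`, and `get_field(0xA0, 7, 4)` recovers `0xA`. The other bits of the word are left untouched.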

Turner: It's called "Extended ALGOL" in that sense.

Waychoff: We went to great lengths to implement ALGOL 60 as published. We did make a lot of extensions. I remember Lloyd resisted practically every extension unless there was a very good reason for it, and I learned something from him on that point. But even to the extent of the evaluation of a FOR statement; if the expressions in a FOR statement had side effects, we would get exactly the answers that were intended by published ALGOL 60 because of our evaluation sequence.
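The ALGOL 60 Revised Report defines `for V := A step B until C do S` by an expansion in which A, B and C are re-evaluated wherever they appear: `V := A; L1: if (V - C) × sign(B) > 0 then go to Element exhausted; S; V := V + B; go to L1`. The Python sketch below follows that expansion. It passes A, B and C as zero-argument functions so that their side effects happen exactly as often as the expansion calls for. It illustrates the published rule only and makes no claim about how the B 5000 compiler generated code. The function name `algol_step_until` is hypothetical. For simplicity, the controlled variable is a local that the body receives but cannot reassign.

```python
from typing import Callable


def algol_step_until(a: Callable[[], int], b: Callable[[], int],
                     c: Callable[[], int], body: Callable[[int], None]) -> int:
    """Run body(v) per the Revised Report expansion of a step-until element.

    A, B and C are thunks, re-evaluated at every point where the expansion
    mentions them: C and B once in each test, B again in each increment.
    Returns the controlled variable's value when the element is exhausted.
    """
    def sign(x: int) -> int:
        return (x > 0) - (x < 0)

    v = a()                                   # V := A
    while not ((v - c()) * sign(b()) > 0):    # L1: if (V - C) * sign(B) > 0 ...
        body(v)                               # Statement S
        v = v + b()                           # V := V + B; go to L1
    return v
```

With a step function and a limit function that count their own calls, a loop from 1 to 3 runs the body three times. The limit is evaluated 4 times and the step 7 times, because the step is mentioned twice in each pass through the expansion.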

Galler: What about ESPOL [Executive Systems Problem Oriented Language]? What was it? What made that different from ALGOL?

Turner: Well, after we finished our third version of the compiler, we had it so that it would compile any program of any size into a memory module. We had a disk file coming which was a better backup device than the drums, and so we had to do another operating system. So we embarked on the Disk File MCP. In three weeks, we took the ALGOL compiler that we had, which was Extended ALGOL, and made an implementation language out of it by taking out the storage allocation.

Dahm: Probably the most significant addition to ALGOL to make ESPOL was the ability to address all of memory as an array, which was necessary in order for the operating system to be able to do storage allocation. But other than that, it was basically just the same as the Extended ALGOL that we used for writing the compilers.

MacKenzie: One thing to remember: the drum MCP that Clark did was not written in a higher-level language. It was written in a thing called OSIL [Operating System Implementation Language], which was a pretty primitive assembler.

Galler: I was about to ask about OSIL. We were always told there was no assembler on this machine.

Oliphint: There was.

MacKenzie: We tried hard to keep it a secret. [Laughter.]

Creech: The assembler ran on the 220. It did not run on this machine. There wasn't an assembler on this machine.

Rosin: Were later versions of MCP then written in ALGOL?

Turner: Yes, and ESPOL.

Oliphint: The Disk File MCP was written in ESPOL.

Turner: After that, there was no more assembler.

Rosin: Did you talk with your customers about the MCP? Did anybody say, "That's a great goal, but we don't know how to do it; therefore, let's back off?"

Dent: I think that was true, but I'm not sure anybody said it.

King: At that time we saw computers such as [IBM] 704s delivered with 4K of memory, initially. Then what happened to them? These shops expanded them to 32K, but a lot of programs still ran in 4K and couldn't use the expanded part of the system. They had to get more production out, but they couldn't go back and rewrite the programs. So they ran the 704s for a good portion of the day using a small portion of the system, and they couldn't do a thing about it! That was a crime. They couldn't expand in a real sense.

Rosin: So one of the goals for multiprogramming was to make more effective use of the hardware resources, not necessarily to provide a more flexible environment for the end user?

King: Correct. Get more production.

Dent: You ought to hear a little bit about the original drum MCP. It wasn't a particularly successful project, but I think we learned a lot doing it, and that's what really brought the 5500 into being. I think you can establish what was wrong with the drum MCP, what was wrong with the machine [B 5000], and what things we had to fix; we did that as a second pass, and it was renamed [B 5500] for commercial reasons.

Oliphint: As we've said earlier, there was always the idea from the beginning that there would be an operating system to run the machine, that it wouldn't be the stand-alone kind of thing that depended on operators. In the early days in Product Planning, when we were starting to decide how to do things and whether to do something in hardware or software, a saying got pretty widespread: "Oh, the MCP will take care of that." [Laughter.] As we thought of more and more things that needed to be done -- how to do this, how to do that -- that was the answer.

MacKenzie: You used to say that before you were doing the MCP!

Oliphint: That's right! Little did I know that when the MCP was going to do it, I was going to have to figure out a way. Then I guess the time really came when we had to sit down and figure out how the MCP was going to do those things that it had to do. That's all a long time ago; it's hard to remember how that all happened.

Galler: What began to go wrong?

Oliphint: One of the things that happened was that we fell behind in doing the MCP. Tape assignment was one of the things the MCP was going to do. You didn't have to decide which unit a tape got mounted on. You put a tape on, and the system recognized automatically what the label was, and so on. But in the early days, we didn't have all that working, and so you had to put a particular thing on tape unit E, I seem to recall.

So we had to make compromises in doing a sort of an interim version of the MCP that didn't do multiprogramming, that didn't do many of the things it was supposed to do because time was getting close, and there were just a lot of things not working. I remember being horrified in the context we were in, where we had all talked about and agreed that this system wouldn't work without software, to suddenly find out one day that a 5000 was going to be shipped. I couldn't believe it! I don't know how, when, or where that happened, but the stuff wasn't ready.

MacKenzie: Some things never change. [Laughter.]

Oliphint: There was no way that anybody would be happy with the product. And I really couldn't believe that we were really going to ship one without the software, because all our advertising, everything anybody knew, said that it wouldn't work without software. I believe it was something on the order of six months to a year after first shipping the product before we got an MCP that would do a lot of the things we talked about: multiprogramming, automatic tape assignment.

MacKenzie: You're right in the general sense of things easing in. The first shipment was on April Fool's Day 1963. The ALGOL compiler was operational, and, while perhaps there were a lot of errors in it, there was enough MCP to support much ALGOL compiler operation. It turned out that the second system that went to NASA was, to my knowledge, the only system that did not have software requirements written into the contract. It was the standard contract of the type I suppose that the government was issuing. It seems to me that the first release of the COBOL compiler was September or October of 1963.

During this time more and more MCP capability was becoming available. Sometime much earlier than October, some kind of multiprocessing, or multiprogramming capability as we called it then, began to operate. By the time the Marathon system got installed in October or November, it had a reasonably good working version of COBOL. It was improved tremendously after that, but that release had been made in October. So things were gradually picking up. By the end of the year, there were several systems that were on rental; perhaps customers were a little bit unhappy about paying rent on stuff like that. NASA had been the first one that actually went on rental.

Dent: I think there were several things that caused this situation. The first was that our tools were really quite poor. We were doing things in assembly language. In spite of all the other wonderful things we'd heard about high-level language, the operating-system people were doing things in assembly language on a previous machine to help the bootstrap process, and nobody had spent a lot of time with the assembler in the first place.

The job of the operating system was very badly underestimated, and that's partly because of this tendency to say, "Oh well, the MCP will do it."

When I got there, I could not find a list of things that the MCP was supposed to do. Everybody had their own idea of what was supposed to happen, but we didn't even know how big the job was. I don't remember there being a formal spec for the MCP. I remember being very envious of the compiler group because, just by the definition of the ALGOL language, they had a spec for what they had to do. You could almost go through the pages and count the syntactic definitions and see how many subroutines you had to have. There was no such thing for the MCP.

There were other problems. Most of the people working on it had not learned to deal with the concept of multiprogramming or the concept of loss of control of the processor. So there were lots of bugs because somebody would save a variable and go off to do something, like perhaps get a little more storage, not realizing that that might result in loss of control of the processor. You had to realize that while your program was doing I/O, somebody else might do an overlay or some other job would come in, so you had to get storage to save that variable. You have to learn to think that way before you can write code that has hope of working. And we weren't thinking that way.
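Dent's bug pattern is easy to reproduce in miniature. The sketch below is a hypothetical Python model, not MCP code. Generators stand in for programs, and each `yield` stands in for a call that may give up the processor, such as a storage request. A value parked in a shared save area before that call can be overwritten by whichever program runs next. A value kept in storage owned by the task survives. All names (`shared_scratch`, `unsafe_task`, `safe_task`, `run_round_robin`) are invented for illustration.

```python
# Hypothetical model: programs share one global save area, and any call that
# may lose control of the processor is represented by `yield`.
shared_scratch = {"saved": None}

def unsafe_task(name, value, log):
    shared_scratch["saved"] = value          # save a variable in the shared area
    yield                                    # e.g. "get a little more storage": may lose control
    log.append((name, shared_scratch["saved"]))  # may now read another program's value

def safe_task(name, value, log):
    saved = value                            # keep the value in storage owned by this task
    yield                                    # losing control here is harmless
    log.append((name, saved))

def run_round_robin(tasks):
    """Toy scheduler: step each task in turn until every task has finished."""
    tasks = list(tasks)
    while tasks:
        for task in list(tasks):
            try:
                next(task)
            except StopIteration:
                tasks.remove(task)

log = []
run_round_robin([unsafe_task("A", 1, log), unsafe_task("B", 2, log)])
print(log)  # [('A', 2), ('B', 2)]: task A lost its saved value
```

With `safe_task` in place of `unsafe_task`, the log becomes `[('A', 1), ('B', 2)]`.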

There were some other rather basic problems with the machine. Of course, we have already alluded to the underestimation of the memory size required. I think the original spec was that the operating system was supposed to run in 512 words or something, which turned out to be wrong by ...

MacKenzie: Well, the whole thing was supposed to go in 2000 words, compilers and operating system. The other half could be used for programs.

Oliphint: The compilers all got too big. [Laughter.]

Dent: By the time we finally got all this automatic overlaying thing to work, it became obvious that there wasn't enough room on secondary storage to overlay things. I mean, we literally had some job situations that ran out of memory -- where the machine stopped and said, "I'm sorry; I'm out of memory, and I don't know what to do about it." There were lots of things in memory that could be overlaid, but no place to put them.

We really didn't have a good mass storage device. Brad alluded to the business of this mass file being a new concept ... you could leave your programs on the machine. I can remember before that if you were a programmer and you went to use a machine, you got the card deck out of your desk, you went in, you ran the machine, and when you were finished you took it back lest it be thrown into the garbage can.

But on this first drum MCP, we stored programs -- even parts of programs and things that had been compiled -- on library tapes, which are not very good devices to do that sort of thing. In fact, we wore out a few tape units. We had to keep the directories on the tapes themselves, and if you remember the IBM format, you couldn't overwrite. So it was a little tricky trying to update the directory on the tape.

There were these kinds of problems that I think we realized and we fixed, and it became to some extent part of the definition of the 5500. This included saying, "Look, we've got to apply some of this compiler technology to help ourselves write the operating system."

Conceptual Innovations

Editor's Note: The longest segment of the day's discussion centered around the sources of the many innovative concepts finally embodied in the B 5000 and the B 5500. During this segment, an apparently longstanding clash of views between Barton and King was reawakened. The dispute appears to be based on personality differences as much as anything else; Barton takes on the role of a philosopher dealing in abstractions and idealizations, while King gives the impression of being more concerned with very concrete ideas.

Galler: We have put some of the planning into context. I'd like to get to the specific innovations and ideas that went into this machine, the background of some of those ideas, and how they came together. Let me list some innovative things: the stack concept, descriptors and segmentation, the idea of high-level language only, all of the things that we've talked about with the MCP, modularity, the multiprocessor aspect, etc.

Are there any other strong aspects that ought to be on a list of innovations? There were many others, I know.

Turner: No SYSGEN [sys(tem) gen(eration)]!

King: Dynamic storage allocation.

Turner: Compilation technique and recursive descent.

Creech: Programming development technology.

MacKenzie: I think programming system technology is probably a better term.

Turner: The syntax chart, which they're still using today, in some form, even in Pascal.

Rosin: What inspired that, and who did it?

Turner: Richard and I worked on that in 1961.

Waychoff: I remember that quite well. Lloyd and I were working together; Bob Barton was spending some time with us also at the very beginning of the ALGOL project. I was thumbing back and forth through my published ALGOL, from the Communications of the ACM, time after time and wearing out copy after copy of it looking at the metalinguistic definitions.

I said, "What we need here is something like a flowchart to describe this." Bob said, "Well, this is a recursive language." I remember he drew a square on the blackboard with lines leading out and then back in again saying that's all it would show. So I dropped it, and a couple of days later I brought up the same thing again. Then Lloyd immediately saw what I was trying to get at, and he came up with the idea of two different shapes; we called them pickles and boxes, where the metalinguistic variable is defined here or the metalinguistic variable is only used here and defined somewhere else. I think that was one of the most significant contributions that we made on this. Because by staring at that, then the notion of recursive descent compilation becomes quite obvious.
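The link Waychoff draws between syntax charts and recursive descent can be seen in a few lines. Below is a minimal Python sketch that assumes a hypothetical three-chart grammar for arithmetic expressions, not the actual B 5000 ALGOL syntax. Each chart becomes one procedure. A "box" that names another chart becomes a call to that procedure, and a loop back in the chart becomes a `while` loop. Parsing starts at the top chart, the trunk, and works downward.

```python
import re

def tokenize(text):
    # Numbers, identifiers, and the operators/parentheses this toy grammar uses.
    return re.findall(r"\d+|[A-Za-z]\w*|[()+\-*/]", text)

class Parser:
    """One method per syntax chart (hypothetical grammar):
    expression = term { ("+" | "-") term }
    term       = factor { ("*" | "/") factor }
    factor     = number | identifier | "(" expression ")"
    """
    def __init__(self, text):
        self.tokens = tokenize(text)
        self.pos = 0

    def peek(self):
        return self.tokens[self.pos] if self.pos < len(self.tokens) else None

    def take(self, expected=None):
        tok = self.peek()
        if tok is None or (expected is not None and tok != expected):
            raise SyntaxError(f"expected {expected!r}, got {tok!r}")
        self.pos += 1
        return tok

    def expression(self):
        node = self.term()
        while self.peek() in ("+", "-"):      # the chart's loop back
            op = self.take()
            node = (op, node, self.term())
        return node

    def term(self):
        node = self.factor()
        while self.peek() in ("*", "/"):
            op = self.take()
            node = (op, node, self.factor())
        return node

    def factor(self):
        if self.peek() == "(":
            self.take("(")
            node = self.expression()          # recursion: a chart refers back to "expression"
            self.take(")")
            return node
        tok = self.take()
        if tok in ("+", "-", "*", "/", ")"):
            raise SyntaxError(f"unexpected {tok!r}")
        return int(tok) if tok.isdigit() else tok

def parse(text):
    parser = Parser(text)
    tree = parser.expression()
    if parser.peek() is not None:
        raise SyntaxError(f"trailing input at {parser.peek()!r}")
    return tree

print(parse("a + b * (c - 1)"))  # ('+', 'a', ('*', 'b', ('-', 'c', 1)))
```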

Galler: What about prior work in this area?

[Waychoff or Turner]: Well, Ned Irons had been out to visit us and he had a recursive-ascent technique that went up to the leaves and headed back to the trunk, and that seemed to me like a terribly pedestrian way to do things. We had all these nice flowcharts to do it the other way, and it was more natural, it seemed to me, to start at the trunk and make the decision and then go on down from there. We knew that the idea we had built into recursive procedures and the language was recursive -- there was certainly a connection there that just couldn't be overlooked.

That's sort of how we decided to do it that way. And that's exactly the way the compiler was built. If you look at that funny road map of the extended ALGOL language, that was very close to an overall flowchart of the entire syntax of the compiler.

Galler: I think at the time Irons was one of the first to begin to formalize the process of doing it. You apparently disagreed with how he did it.

Turner: Yes. His compilation technique turned out to be quite different from ours. Certainly, looking at how he did things helped us make some decisions on how to implement things. We had a lot easier job than he had; trying to implement all of the procedures that are in ALGOL without any stacks would have been very difficult. Most of the people in Europe who wrote the compilers had to simulate the stack.

Galler: Let's talk about the stack a little bit. How did that come in?

King: When we started out, Bob Barton had this idea of doing a system oriented around Polish notation, using a very condensed form of addressing and a rather elaborate indirect addressing scheme. When you use Polish notation, it implies a stack. So we started out with that.

We also then had a second stack. I was very interested in a system called PERM that had a means of subroutine control that was recursive and was able to pass parameters and control the passing of parameters recursively. So we ended up putting in a second stack for that. So we had two stacks -- one for data and one for control. The two-stack system went on for a while; it was sort of clumsy.

The first stack, as originally proposed, was going to be a set of registers. But that created certain problems, because if you have a set of registers, when you change context you've got to dump all those registers. We worked on that and came up in one session with what we called the deep stack. The deep stack said, "To hell with all those registers; we'll have two registers that are going to be the arithmetic registers, and then everything else is in memory." So we solved what we called "the deep stack problem."
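King's "deep stack" trade-off can be modeled simply. The following Python sketch is a simplification: it assumes the two arithmetic registers (called `A` and `B` here) act as the top two cells of the stack, and that anything deeper spills into a memory array. Under that model, a context switch has to save only the two registers and the memory stack depth, not a whole file of stack registers. The class and method names are invented.

```python
import operator

class DeepStack:
    """Toy model: registers A (top) and B (next) hold the top of the stack;
    everything deeper lives in memory. Values must not be None."""
    def __init__(self):
        self.A = None       # top-of-stack register (None means empty)
        self.B = None       # second register
        self.memory = []    # the rest of the stack, in main memory

    def push(self, value):
        if self.B is not None:
            self.memory.append(self.B)   # spill the deeper register to memory
        self.B, self.A = self.A, value

    def pop(self):
        if self.A is None:
            raise IndexError("pop from empty stack")
        value = self.A
        self.A = self.B
        self.B = self.memory.pop() if self.memory else None  # refill from memory
        return value

    def apply(self, op):
        """Pop two operands and push op(left, right)."""
        right = self.pop()
        left = self.pop()
        self.push(op(left, right))

    def context(self):
        """State to save on a context switch: two registers plus stack depth."""
        return (self.A, self.B, len(self.memory))

# (2 + 3) * 4 in postfix order: 2 3 + 4 *
s = DeepStack()
s.push(2); s.push(3); s.apply(operator.add); s.push(4); s.apply(operator.mul)
print(s.pop())  # 20
```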

We still had the two stacks -- the data stack and the control stack. It was Jack Merner who brought those together and said, "Why have two stacks? Why don't you just use them together?" And that's really what came about in the long run.
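Merner's merge means that control information, such as where to return and whose frame is active, sits on the same stack as the operands. The Python sketch below is loosely modeled on that idea. It assumes a hypothetical "mark" entry holding a return address and a link to the caller's mark. The entry format is invented and is not the B 5000 word layout. Returning cuts the stack back to the mark in one step, restores the caller's frame, and leaves the result on top.

```python
class UnifiedStack:
    """One stack for both data and control (toy model, invented entry format)."""
    def __init__(self):
        self.cells = []
        self.frame = None   # index of the current frame's mark entry

    def push(self, value):
        self.cells.append(value)

    def pop(self):
        return self.cells.pop()

    def enter(self, return_address):
        # Control information goes on the same stack as the data.
        self.cells.append(("MARK", return_address, self.frame))
        self.frame = len(self.cells) - 1

    def leave(self, result=None):
        # Discard the callee's frame, restore the caller's frame,
        # push the result, and report where execution should resume.
        _, return_address, caller_frame = self.cells[self.frame]
        del self.cells[self.frame:]
        self.frame = caller_frame
        if result is not None:
            self.cells.append(result)
        return return_address

s = UnifiedStack()
s.push(10); s.enter(100); s.push(1); s.push(2)   # outer call with two locals
s.enter(200); s.push(3)                          # nested call
print(s.leave(result=7), s.cells)   # 200 [10, ('MARK', 100, None), 1, 2, 7]
print(s.leave(result=42), s.cells)  # 100 [10, 42]
```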

Rosin: We'd like to know more about the concept of a stack for arithmetic processing and also this PERM system that you mentioned. Where did they come from?

King: I don't know where Bob got the Polish notation. Did it derive from the BALGOL compiler?

Dahm: No, it preceded the BALGOL compiler.

Barton: Well, I'm not sure that I can say anything along those lines except to tell in retrospect what I learned in later years about the genesis of some of the ideas. I can trace every idea back to -- not quite to Babbage, but close. And that's a fact. But I don't know the time frames about the genesis of ideas because I was, for instance, very poorly informed about what was going on in Europe. I was pretty well informed about what was going on in the United States. Bernie would be surprised, but he had a lot of indirect influence on what happened. You may remember my early visits to Michigan while you and Bruce Arden were working on what became MAD [Michigan Algorithm Decoder]. I was learning a lot on those visits about what was possible. Not necessarily how to do it, but what was possible.

Dahm: I think I remember pretty clearly. In the summer of 1958, before Bob Barton went to Burroughs, he worked at Shell, and I worked for him that summer; that was my first involvement with computers. I remember his describing to me at that time this really neat little algorithm he had for turning an arithmetic expression into machine code, and how it was all inspired by Lukasiewicz notation, which he had run across in a book on symbolic logic. He also described to me how one could possibly build a machine with a built-in stack to handle this in a more automatic fashion. Now, that was before we were ever at Burroughs. I remember that clearly in 1958.

Barton: I think that's essentially correct. And, incidentally, it ties into Michigan again. I was getting ready to go to a Michigan summer conference, and I remember the program said, "Some knowledge of symbolic logic is helpful though not essential." So I went over to our library, and found Copi's Symbolic Logic on the shelf. I often read books in funny order; I sometimes turn to the index. In the index my eye fell on "Polish notation," and I thought, "What in the world is that?" I turned to that page and there was a description relative to logic, and in the next 30 seconds, I saw it was a simple way of translating arithmetic expressions. I'd seen the article by Backus, and I thought it was unintelligible: too involved. I saw, I think next, the idea of handling iterations that way, which, by the way, was not done in that machine [B 5000].
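Barton does not describe his exact algorithm. The sketch below uses the textbook operator-stack method, often called Dijkstra's shunting-yard algorithm, to show why Polish notation makes translating arithmetic expressions simple. Łukasiewicz's notation puts operators first. Stack machines use the reversed (postfix) form, in which operands are pushed and each operator pops two values and pushes the result. The function names and the `env` variable table are illustrative assumptions.

```python
import operator
import re

PRECEDENCE = {"+": 1, "-": 1, "*": 2, "/": 2}
OPS = {"+": operator.add, "-": operator.sub, "*": operator.mul, "/": operator.truediv}

def to_postfix(text):
    """Infix -> reverse Polish using an operator stack (left-associative binary ops)."""
    output, ops = [], []
    for tok in re.findall(r"\d+|[A-Za-z]\w*|[()+\-*/]", text):
        if tok in PRECEDENCE:
            # Emit stacked operators that bind at least as tightly.
            while ops and ops[-1] != "(" and PRECEDENCE[ops[-1]] >= PRECEDENCE[tok]:
                output.append(ops.pop())
            ops.append(tok)
        elif tok == "(":
            ops.append(tok)
        elif tok == ")":
            while ops and ops[-1] != "(":
                output.append(ops.pop())
            if not ops:
                raise SyntaxError("unbalanced ')'")
            ops.pop()  # discard the matching "("
        else:
            output.append(tok)  # operands go straight to the output
    while ops:
        op = ops.pop()
        if op == "(":
            raise SyntaxError("unbalanced '('")
        output.append(op)
    return output

def evaluate(postfix, env):
    """Run postfix code on a stack; `env` maps identifiers to values."""
    stack = []
    for tok in postfix:
        if tok in OPS:
            right, left = stack.pop(), stack.pop()
            stack.append(OPS[tok](left, right))
        else:
            stack.append(int(tok) if tok.isdigit() else env[tok])
    (result,) = stack  # a well-formed expression leaves exactly one value
    return result

code = to_postfix("a + b * (c - 1)")
print(code)                                       # ['a', 'b', 'c', '1', '-', '*', '+']
print(evaluate(code, {"a": 2, "b": 3, "c": 5}))   # 14
```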

I saw the use for interrupts, which we were kind of conscious of in our context at Shell. By the way, of course, the machine could have been used for interrupts that way, but wasn't; everybody overlooked it. Bob Creech remembers learning about this in the early days of the 6500. There was something there that people didn't fully appreciate. Also for subroutines.

The business of different kinds of stack linkages and combinations of stacks -- I honestly don't know what the history of that was. I think there was a lot of doodling done by a lot of people.

We were well acquainted with using linked lists as a storage allocation means at Shell. We were kind of well informed there. Who was the "we"? Well, there was Joel Erdwin, who was responsible for the BALGOL compiler, which, I'd like to get on the record, was a brilliant job of programming. Dave Dahm has already told you that he was involved in the beginning of that during the thinking stage. Merner was involved in it all the way through. My opinion is that it was Erdwin's masterpiece.

I want to correct Dave Dahm's statement about my trying to get them to do FORTRAN. [Laughter.] It's correct to a certain extent in that the job that I had taken, under generally misleading conditions, called for doing an impossible FORTRAN, which would also include conversion of assembly language from the 7090 -- or whatever the machine was at the time -- automatically. I knew it couldn't be done, but that was my responsibility.