GP(ID:77/gp:001)

Generalized Programming Compiler

for Generalized Programming Compiler

First general library programming system developed by Anatol Holt and William Turanski at UNIVAC in 1951 (coeval with A0 etc)

"The system differs from other compilers, however, in that it aims to provide the programmer with a set of services equally applicable to the solution of any problem--services, in other words, which are claimed to be altogether "general purpose." Therefore, the system contains no special design features which make it better adapted to one area of computation than to another."

Renamed FLEXIMATIC by the marketing division


Related languages

| Language | | Related language | Relationship |
|---|---|---|---|
| GP | => | Automatic network calculation | Visual language for |
| GP | => | FLEXIMATIC | Renaming |
| GP | => | GPX | Evolution of |

References:

Automatic coding is a means for reducing problem costs and is one of the answers to a programmer's prayer. Since every problem must be reduced to a series of elementary steps and transformed into computer instructions, any method which will speed up and reduce the cost of this process is of importance.

Each and every problem must go through the same stages:

Analysis,

Programming,

Coding,

Debugging,

Production Running,

Evaluation

The process of analysis cannot be assisted by the computer itself. For scientific problems, mathematical or engineering, the analysis includes selecting the method of approximation, setting up specifications for accuracy of sub-routines, determining the influence of roundoff errors, and finally presenting a list of equations supplemented by definition of tolerances and a diagram of the operations. For the commercial problem, again a detailed statement describing the procedure and covering every eventuality is required. This will usually be presented in English words and accompanied again by a flow diagram.

The analysis is the responsibility of the mathematician or engineer, the methods or systems man. It defines the problem and no attempt should be made to use a computer until such an analysis is complete.

The job of the programmer is that of adapting the problem definition to the abilities and idiosyncrasies of the particular computer. He will be vitally concerned with input and output and with the flow of operations through the computer. He must have a thorough knowledge of the computer components and their relative speeds and virtues.

Receiving diagrams and equations or statements from the analysis he will produce detailed flow charts for transmission to the coders. These will differ from the charts produced by the analysts in that they will be suited to a particular computer and will contain more detail. In some cases, the analyst and programmer will be the same person.

It is then the job of the coder to reduce the flow charts to the detailed list of computer instructions. At this point, an exact and comprehensive knowledge of the computer, its code, coding tricks, details of sentinels and of pulse code is required. The computer is an extremely fast moron. It will, at the speed of light, do exactly what it is told to do, no more, no less.

After the coder has completed the instructions, the program must be "debugged". Few and far between and very rare are those coders, human beings, who can write programs, perhaps consisting of several hundred instructions, perfectly, the first time. The analyzers, automonitors, and other mistake hunting routines that have been developed and reported on bear witness to the need of assistance in this area. When the program has been finally debugged, it is ready for production running and thereafter for evaluation or for use of the results.

Automatic coding enters these operations at four points. First, it supplies the analyst with information about existing chunks of program, subroutines already tested and debugged, which he may choose to use in his problem. Second, it supplies the programmer with similar facilities not only with respect to the mathematics or processing used, but also with respect to using the equipment. For example, a generator may be provided to make editing routines to prepare data for printing, or a generator may be supplied to produce sorting routines.

It is in the third phase that automatic coding comes into its own, for here it can release the coder from most of the routine and drudgery of producing the instruction code. It may, someday, replace the coder or release him to become a programmer. Master or executive routines can be designed which will withdraw subroutines and generators from a library of such routines and link them together to form a running program.

If a routine is produced by a master routine from library components, it does not require the fourth phase - debugging - from the point of view of the coding. Since the library routines will all have been checked and the compiler checked, no errors in coding can be introduced into the program (all of which presupposes a completely checked computer). The only bugs that can remain to be detected and exposed are those in the logic of the original statement of the problem.

Thus, one advantage of automatic coding appears, the reduction of the computer time required for debugging. A still greater advantage, however, is the replacement of the coder by the computer. It is here that the significant time reduction appears. The computer processes the units of coding as it does any other units of data, accurately and rapidly. The elapsed time from a programmer's flow chart to a running routine may be reduced from a matter of weeks to a matter of minutes. Thus, the need for some type of automatic coding is clear.

Actually, it has been evident ever since the first digital computers ran. Anyone who has been coding for more than a month has found himself wanting to use pieces of one problem in another. Every programmer has detected like sequences of operations. There is a ten-year history of attempts to meet these needs.

The subroutine, the piece of coding, required to calculate a particular function can be wired into the computer and an instruction added to the computer code. However, this construction in hardware is costly and only the most frequently used routines can be treated in this manner. Mark I at Harvard included several such routines: sin x, log10 x, 10^x. However, they had one fault: they were inflexible. Always, they delivered complete accuracy to twenty-two digits. Always, they treated the most general case. Other computers, Mark II and SEAC, have included square roots and other subroutines partially or wholly built in. But such subroutines are costly and invariant and have come to be used only when speed without regard to cost is the primary consideration.

It was in the ENIAC that the first use of programmed subroutines appeared. When a certain series of operations was completed, a test could be made to see whether or not it was necessary to repeat them and sequencing control could be transferred on the basis of this test, either to repeat the operations or go on to another set.

At Harvard, Dr. Aiken had envisioned libraries of subroutines. At Pennsylvania, Dr. Mauchly had discussed the techniques of instructing the computer to program itself. At Princeton, Dr. von Neumann had pointed out that if the instructions were stored in the same fashion as the data, the computer could then operate on these instructions. However, it was not until 1951 that Wheeler, Wilkes, and Gill in England, preparing to run the EDSAC, first set up standards, created a library and the required satellite routines, and wrote a book about it, "The Preparation of Programs for Electronic Digital Computers". In this country, comprehensive automatic techniques first appeared at MIT where routines to facilitate the use of Whirlwind I by students of computers and programming were developed.

Many different automatic coding systems have been developed: Seesaw, Dual, Speed-Code, the Boeing Assembly, and others for the 701; the A-series of compilers for the UNIVAC; the Summer Session Computer for Whirlwind; MAGIC for the MIDAC; and Transcode for the Ferranti Computer at Toronto. The list is long and rapidly growing longer. In the process of development are Fortran for the 704, BIOR and GP for the UNIVAC, a system for the 705, and many more. In fact, all manufacturers now seem to be including an announcement of the form, "a library of subroutines for standard mathematical analysis operations is available to users", "interpretive subroutines, easy program debugging - ... - automatic program assembly techniques can be used."

The automatic routines fall into three major classes. Though some may exhibit characteristics of one or more, the classes may be so defined as to distinguish them.

1) Interpretive routines which translate a machine-like pseudocode into machine code, refer to stored subroutines and execute them as the computation proceeds — the MIT Summer Session Computer, 701 Speed-Code, UNIVAC Short-Code are examples.

2) Compiling routines, which also read a pseudo-code, but which withdraw subroutines from a library and operate upon them, finally linking the pieces together to deliver, as output, a complete specific program for future running: the UNIVAC A-series compilers, BIOR, and the NYU Compiler System are examples.

3) Generative routines may be called for by compilers, or may be independent routines. Thus, a compiler may call upon a generator to produce a specific input routine. Or, as in the sort-generator, specifications such as item-size and position of key may be submitted to produce a routine to perform the desired operation. The UNIVAC sort-generator, the work of Betty Holberton, was the first major automatic routine to be completed. It was finished in 1951 and has been in constant use ever since. At the University of California Radiation Laboratory, Livermore, an editing generator was developed by Merrit Ellmore; later a routine was added to generate even the pseudo-code.
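The generative idea in item 3 can be illustrated with a minimal modern sketch in Python. It is only an analogue of the technique, not a reconstruction of the UNIVAC sort-generator; the function name `generate_sort_routine` and the parameters `item_size`, `key_start`, and `key_length` are assumptions chosen for this example.

```python
def generate_sort_routine(item_size, key_start, key_length):
    """Return a sorting routine specialized for fixed-width items.

    All names here are illustrative assumptions, not taken from any
    historical system.
    """
    if key_start < 0 or key_length <= 0 or key_start + key_length > item_size:
        raise ValueError("key must lie inside the item")

    def sort_items(items):
        # Reject items that do not match the layout given to the generator.
        for item in items:
            if len(item) != item_size:
                raise ValueError(f"expected items of {item_size} characters")
        # Sort on the key field only; sorted() is stable, so items with
        # equal keys keep their input order.
        return sorted(items, key=lambda item: item[key_start:key_start + key_length])

    return sort_items


# Specialize once from the specifications, then reuse the produced routine.
sort_by_account = generate_sort_routine(item_size=8, key_start=0, key_length=3)
records = ["042SMITH", "007BONDX", "042JONES"]
print(sort_by_account(records))
```

The specifications are consumed once, when the routine is generated; the routine that comes out carries no general-case logic for other item sizes or key positions.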

The type of automatic coding used for a particular computer is to some extent dependent upon the facilities of the computer itself. The early computers usually had but a single input-output device, sometimes even manually operated. It was customary to load the computer with program and data, permit it to "cook" on them, and when it signalled completion, the results were unloaded. This procedure led to the development of the interpretive type of routine. Subroutines were stored in closed form and a main program referred to them as they were required. Such a procedure conserved valuable internal storage space and speeded the problem solution.

With the production of computer systems, like the UNIVAC, having, for all practical purposes, infinite storage under the computer's own direction, new techniques became possible. A library of subroutines could be stored on tape, readily available to the computer. Instead of looking up a subroutine every time its operation was needed, it was possible to assemble the required subroutines into a program for a specific problem. Since most problems contain some repetitive elements, this was desirable in order to make the interpretive process a one-time operation.
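The difference between looking up a subroutine each time it is needed and assembling the subroutines once into a specific program can be sketched as follows. This is a toy illustration in Python under stated assumptions: the pseudo-code is a list of operation names, and `LIBRARY`, `interpret`, and `compile_program` are hypothetical names, not part of any system discussed here.

```python
import math

# A hypothetical subroutine library keyed by pseudo-code operation name.
LIBRARY = {
    "SQRT": math.sqrt,
    "DOUBLE": lambda x: 2 * x,
    "NEGATE": lambda x: -x,
}


def interpret(pseudo_code, value):
    """Interpretive style: look up each subroutine as the computation proceeds."""
    for op in pseudo_code:
        value = LIBRARY[op](value)  # one library lookup per executed step
    return value


def compile_program(pseudo_code):
    """Compiling style: resolve every library reference once, up front."""
    linked = [LIBRARY[op] for op in pseudo_code]  # the translation step

    def program(value):
        # The running program no longer consults the library.
        for routine in linked:
            value = routine(value)
        return value

    return program


steps = ["SQRT", "DOUBLE", "NEGATE"]
run = compile_program(steps)                   # translate once
results = [run(x) for x in (4.0, 9.0, 16.0)]   # run many times
print(results)
print(interpret(steps, 4.0))
```

Both routes give the same answers; the compiled route pays for the library lookups once, which matters when the same program is run repeatedly.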

Among the earliest such routines were the A-series of compilers, of which A-0 first ran in May 1952. The A-2 compiler, as it stands at the moment, commands a library of mathematical and logical subroutines of floating decimal operations. It has been successfully applied to many different mathematical problems. In some cases, it has produced finished, checked and debugged programs in three minutes. Some problems have taken as long as eighteen minutes to code. It is, however, limited by its library which is not as complete as it should be and by the fact that since it produces a program entirely in floating decimal, it is sometimes wasteful of computer time. However, mathematicians have been able rapidly to learn to use it. The elapsed time for problems (the programming time plus the running time) has been materially reduced. Improvements and techniques now known, derived from experience with the A-series, will make it possible to produce better compiling systems. Currently, under the direction of Dr. Herbert F. Mitchell, Jr., the BIOR compiler is being checked out. This is the pioneer, the first of the true data-processing compilers.

At present, the interpretive and compiling systems are as many and as different as were the computers five years ago. This is natural in the early stages of a development. It will be some time before anyone can say this is the way to produce automatic coding.

Even the pseudo-codes vary widely. For mathematical problems, Laning and Zierler at MIT have modified a Flexowriter and the pseudo-code in which they state problems clings very closely to the usual mathematical notation. Faced with the problem of coding for ENIAC, EDVAC and/or ORDVAC, Dr. Gorn at Aberdeen has been developing a "universal code". A problem stated in this universal pseudo-code can then be presented to an executive routine pertaining to the particular computer to be used to produce coding for that computer. Of the Bureau of Standards, Dr. Wegstein in Washington and Dr. Huskey on the West Coast have developed techniques and codes for describing a flow chart to a compiler.

In each case, the effort has been three-fold:

1) to expand the computer's vocabulary in the direction required by its users.

2) to simplify the preparation of programs both in order to reduce the amount of information about a computer a user needed to learn, and to reduce the amount he needed to write.

3) to make it easy to avoid mistakes, to check for them, and to detect them.

The ultimate pseudo-code is not yet in sight. There probably will be at least two in common use; one for the scientific, mathematical and engineering problems using a pseudo-code closely approximating mathematical symbolism; and a second, for the data-processing, commercial, business and accounting problems. In all likelihood, the latter will approximate plain English.

The standardization of pseudo-code and corresponding subroutine is simple for mathematical problems. As a pseudo-code "sin x" is practical and suitable for "compute the sine of x", "PWT" is equally obvious for "compute Philadelphia Wage Tax", but very few commercial subroutines can be standardized in such a fashion. It seems likely that a pseudocode "gross-pay" will call for a different subroutine in every installation. In some cases, not even the vocabulary will be common since one computer will be producing pay checks and another maintaining an inventory.

Thus, future compiling routines must be independent of the type of program to be produced. Just as there are now general-purpose computers, there will have to be general-purpose compilers. Auxiliary to the compilers will be vocabularies of pseudo-codes and corresponding volumes of subroutines. These volumes may differ from one installation to another and even within an installation. Thus, a compiler of the future will have a volume of floating-decimal mathematical subroutines, a volume of inventory routines, and a volume of payroll routines. While gross-pay may appear in the payroll volume both at installation A and at installation B, the corresponding subroutine or standard input item may be completely different in the two volumes. Certain more general routines, such as input-output, editing, and sorting generators will remain common and therefore are the first that are being developed.
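A small sketch may make the separation between a general-purpose compiler and its installation-specific volumes concrete. Everything below is hypothetical: the function `make_compiler`, the volume names, the record fields, and the two pay rules are assumptions invented for illustration, written in Python.

```python
def make_compiler(*volumes):
    """Build a compiler that knows nothing about any particular problem area.

    Each volume maps pseudo-code words to subroutines. Later volumes
    override earlier ones, so an installation can replace a shared entry.
    """
    vocabulary = {}
    for volume in volumes:
        vocabulary.update(volume)

    def compile_program(pseudo_code):
        unknown = [word for word in pseudo_code if word not in vocabulary]
        if unknown:
            raise KeyError(f"words missing from every volume: {unknown}")
        routines = [vocabulary[word] for word in pseudo_code]

        def program(record):
            for routine in routines:
                record = routine(record)
            return record

        return program

    return compile_program


# A common volume shared by both installations (hypothetical).
COMMON = {"round-pay": lambda r: {**r, "gross": round(r["gross"], 2)}}
# The same word, "gross-pay", bound to different subroutines.
PAYROLL_A = {"gross-pay": lambda r: {**r, "gross": r["hours"] * r["rate"]}}
PAYROLL_B = {"gross-pay": lambda r: {**r, "gross": r["annual_salary"] / 12}}

source = ["gross-pay", "round-pay"]
pay_a = make_compiler(COMMON, PAYROLL_A)(source)({"hours": 40, "rate": 2.5})
pay_b = make_compiler(COMMON, PAYROLL_B)(source)({"annual_salary": 6000})
print(pay_a["gross"], pay_b["gross"])
```

The same pseudo-code text compiles at both installations, yet the resulting programs differ because the payroll volumes differ, while the compiler itself is unchanged.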

There is little doubt that the development of automatic coding will influence the design of computers. In fact, it is already happening. Instructions will be added to facilitate such coding. Instructions added only for the convenience of the programmer will be omitted since the computer, rather than the programmer, will write the detailed coding. However, all this will not be completed tomorrow. There is much to be learned. So far, as each group has completed an interpreter or compiler, they have discovered in using it "what they really wanted to do". Each executive routine produced has led to the writing of specifications for a better routine.

1955 will mark the completion of several ambitious executive routines. It will also see the specifications prepared by each group for much better and more efficient routines since testing in use is necessary to discover these specifications. However, the routines now being completed will materially reduce the time required for problem preparation; that is, the programming, coding, and debugging time. One warning must be sounded, these routines cannot define a problem nor adapt it to a computer. They only eliminate the clerical part of the job.

Analysis, programming, definition of a problem required 85%, coding and debugging 15%, of the preparation time. Automatic coding materially reduces the latter time. It assists the programmer by defining standard procedures which can be frequently used. Please remember, however, that automatic programming does not imply that it is now possible to walk up to a computer, say "write my payroll checks", and push a button. Such efficiency is still in the science-fiction future.

in the High Speed Computer Conference, Louisiana State University, 16 Feb. 1955, Remington Rand, Inc. 1955


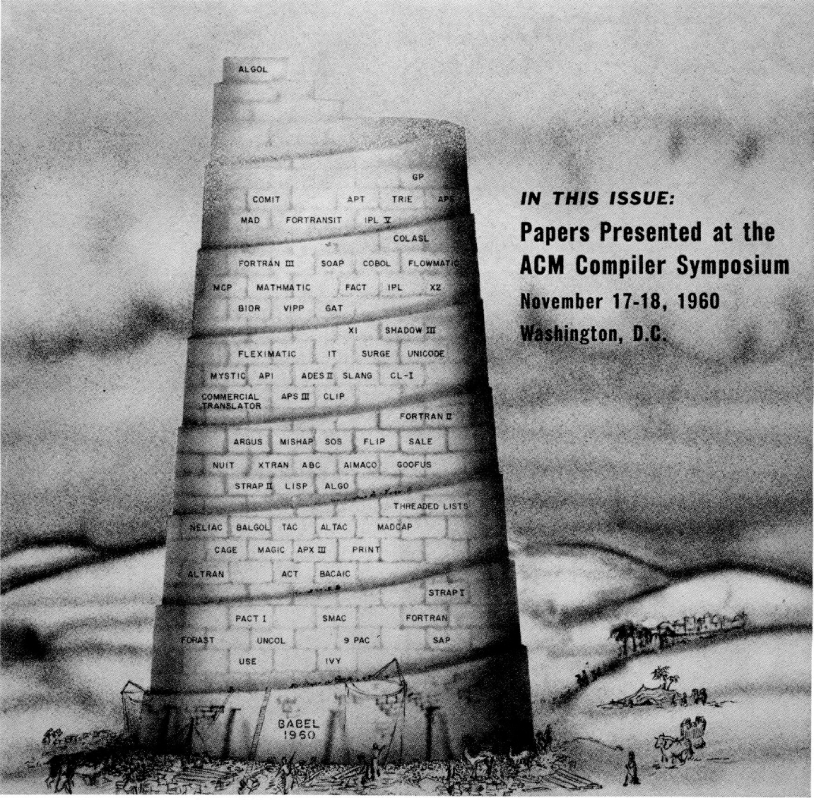

Let me elaborate these points with examples. UNICODE is expected to require about fifteen man-years. Most modern assembly systems must take from six to ten man-years. SCAT expects to absorb twelve people for most of a year. The initial writing of the 704 FORTRAN required about twenty-five man-years. Split among many different machines, IBM's Applied Programming Department has over a hundred and twenty programmers. Sperry Rand probably has more than this, and for utility and automatic coding systems only! Add to these the number of customer programmers also engaged in writing similar systems, and you will see that the total is overwhelming.

Perhaps five to six man-years are being expended to write the Model 2 FORTRAN for the 704, trimming bugs and getting better documentation for incorporation into the even larger supervisory systems of various installations. If available, more could undoubtedly be expended to bring the original system up to the limit of what we can now conceive. Maintenance is a very sizable portion of the entire effort going into a system.

Certainly, all of us have a few skeletons in the closet when it comes to adapting old systems to new machines. Hardly anything more than the flow charts is reusable in writing 709 FORTRAN; changes in the characteristics of instructions, and tricky coding, have done for the rest. This is true of every effort I am familiar with, not just IBM's.

What am I leading up to? Simply that the day of diverse development of automatic coding systems is either out or, if not, should be. The list of systems collected here illustrates a vast amount of duplication and incomplete conception. A computer manufacturer should produce both the product and the means to use the product, but this should be done with the full co-operation of responsible users. There is a gratifying trend toward such unification in such organizations as SHARE, USE, GUIDE, DUO, etc. The PACT group was a shining example in its day. Many other coding systems, such as FLAIR, PRINT, FORTRAN, and USE, have been done as the result of partial co-operation. FORTRAN for the 705 seems to me to be an ideally balanced project, the burden being carried equally by IBM and its customers.

Finally, let me make a recommendation to all computer installations. There seems to be a reasonably sharp distinction between people who program and use computers as a tool and those who are programmers and live to make things easy for the other people. If you have the latter at your installation, do not waste them on production and do not waste them on a private effort in automatic coding in a day when that type of project is so complex. Offer them in a cooperative venture with your manufacturer (they still remain your employees) and give him the benefit of the practical experience in your problems. You will get your investment back many times over in ease of programming and the guarantee that your problems have been considered.

Extract: IT, FORTRANSIT, SAP, SOAP, SOHIO

The IT language is also showing up in future plans for many different computers. Case Institute, having just completed an intermediate symbolic assembly to accept IT output, is starting to write an IT processor for UNIVAC. This is expected to be working by late summer of 1958. One of the original programmers at Carnegie Tech spent the last summer at Ramo-Wooldridge to write IT for the 1103A. This project is complete except for input-output and may be expected to be operational by December, 1957. IT is also being done for the IBM 705-1, 2 by Standard Oil of Ohio, with no expected completion date known yet. It is interesting to note that Sohio is also participating in the 705 FORTRAN effort and will undoubtedly serve as the basic source of FORTRAN-to-IT-to-FORTRAN translational information. A graduate student at the University of Michigan is producing SAP output for IT (rather than SOAP) so that IT will run on the 704; this, however, is only for experience; it would be much more profitable to write a pre-processor from IT to FORTRAN (the reverse of FORTRANSIT) and utilize the power of FORTRAN for free.

Extract: GP and its benefits

A compiler of the GP (Generalized Programming) type is in process for the LARC computer. This is a very large effort; certainly more than fifteen people are on the project. Algebraic coding is allowed as an instruction form, and generalized subroutines may be selectively generated for minimization in specific cases. Another interesting aspect of GP is the DuPont effort in rewriting the basic UNIVAC compilers in GP for more generality and easier expansion.

in "Proceedings of the Fourth Annual Computer Applications Symposium", Armour Research Foundation, Illinois Institute of Technology, Chicago, Illinois 1957

Introduction

It is possible so to standardize programming and coding for general purpose, automatic, high-speed, digital computing machines that most of the process becomes mechanical and, to a great degree, independent of the machine. To the extent that the programming and coding process is mechanical a machine may be made to carry it out, for the procedure is just another data processing one.

If the machine has a common storage for its instructions along with any other data, it can even carry out each instruction immediately after having coded it. This mode of operation in automatic coding is known as 'interpretive'. There have been a number of interpretive automatic coding procedures on various machines, notably MIT's Summer Session and Comprehensive systems for Whirlwind, Michigan's Magic System for MIDAC, and IBM's Speedcode; in addition there have been some interpretive systems beginning essentially with mathematical formulae as the pseudocode, such as MIT's Algebraic Coding, one for the SEAC, and others.

We will be interested, however, in considering the coding of a routine as a separate problem, whose result is the final code. Automatic coding which imitates such a process is, in the main, non-interpretive. Notable examples are the A-2 and B-0 compiler systems, and the G-P (general purpose) system, all for UNIVAC, and IBM's FORTRAN, of the algebraic coding type.

Although, unlike interpretive systems, compilers do not absolutely require their machines to possess common storage of instructions and the data they process, they are considerably simpler when their machines do have this property. Much more necessary for the purpose is that the machines possess a reasonable amount of internal erasable storage, and the ability to exercise discrimination among alternatives by simple comparison instructions. It will be assumed that the machines under discussion, whether we talk about standardized or about automatic coding, possess these three properties, namely, common storage, erasable storage, and discrimination. Such machines are said to possess "loop control".

We will be interested in that part of the coding process which all machines having loop control and a sufficiently large storage can carry out in essentially the same manner; it is this part of coding that is universal and capable of standardization by a universal pseudo-code.

The choice of such a pseudo-code is, of course, a matter of convention, and is to that extent arbitrary, provided it is

(1) a language rich enough to permit the description of anything these machines can do, and

(2) a language whose basic vocabulary is not too microscopically detailed.

The first requirement is needed for universality of application; the second is necessary if we want to be sure that the job of hand coding with the pseudo-code is considerably less detailed than the job of hand coding directly in machine code. Automatic coding is pointless practically if this second condition is not fulfilled.

In connection with the first condition we should remark on what the class of machines can produce; in connection with the second we should give some analysis of the coding process. In either case we should say a few things about the logical concept of computability and the syntax of machine codes.

in [ACM] JACM 4(3) July 1957


In these remarks, I do not propose to describe an automatic coding system. My aim is, rather, to present an approach to automatic coding or, if you prefer, to present a motivation which has guided us in the development of the three actual systems for Remington Rand computers. The earliest of these three systems, developed for the UNIVAC I, has been known by the name Generalized Programming. (This system was recently renamed FLEXIMATIC by the Remington Rand Sales Office.) In operation for approximately two years, GP is currently employed at a considerable number of commercial installations. The second system, Generalized Programming Extended (GPX), is a new version of FLEXIMATIC for the UNIVAC II; it has considerably increased powers in comparison with its ancestor.

Currently, we are building the third system for the LARC computer. During years of development, the aims we wish to achieve have only gradually become clarified. Therefore, the system now in preparation for the LARC represents the fullest realization so far of these aims. The ensuing discussion will use the term "the system" to refer to an embodiment of the aims to be described.

In point of broad classification, the automatic programming system developed by the UNIVAC Applications Research Center is a compiler. This means that it shares two important characteristics with other systems similarly classified. First, complete solution of a problem with the aid of the system involves two computer steps: (1) translation of an encoding of the problem (in a special form associated with the system) into a computer-coded routine, and (2) actual problem computation. The second characteristic common to all compilers is that during the translating process, reference is made to a collection of packages of information, usually called library subroutines, which contribute in some way to the final result.

The system differs from other compilers, however, in that it aims to provide the programmer with a set of services equally applicable to the solution of any problem--services, in other words, which are claimed to be altogether "general purpose." Therefore, the system contains no special design features which make it better adapted to one area of computation than to another.

This aim, as stated, raises a peculiar question: A general purpose computer is, itself, supposed to provide a code which is equally well adapted to all areas of computation. To claim that it is profitable to build a programming system which contributes equally to all problems is, of course, to claim that some facilities which have "general purpose" standing are not realized in the hardware of a general purpose computer. We believe that such unrealized facilities exist and that they lay the basis for a powerful programming system. The facilities in question are an addition to the computer and, in no sense, a subtraction. Hence, a program in the system code may possibly be a one-to-one image of a computer-coded program.

The general purpose facilities I have in mind fall into two broad categories, the first of which--naming aids used in writing computer instructions--has already been widely recognized in the computer field. Naming aids include symbolic addressing, convenient abbreviations to represent computer instructions, special conveniences in the naming of constants, etc. Such naming aids, while interesting in their own right, have already been exploited to a greater or lesser extent in many existing assembly routines. It is apparent that these conveniences are equally applicable to all programs in which computer instructions must be used.
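The naming aids listed here (symbolic addresses, mnemonic abbreviations for instructions, named constants) are what a simple two-pass assembler provides. The Python sketch below assumes an imaginary decimal machine whose instruction word is `opcode * 100 + address`; the mnemonics in `OPCODES`, the `CONST` pseudo-operation, and the `label:` syntax are all hypothetical and are not drawn from any UNIVAC assembly routine. It also assumes every label shares a line with an instruction or constant.

```python
# Hypothetical mnemonics and operation codes for an imaginary machine.
OPCODES = {"HALT": 0, "LOAD": 1, "ADD": 2, "STORE": 3, "JUMP": 4}


def assemble(lines):
    """Two-pass assembly: collect symbolic labels, then substitute addresses.

    Each line is "[label:] MNEMONIC [operand]"; an operand is either a
    label or a decimal number. Returns a list of numeric words.
    """
    # Pass 1: give every label the address of the word it precedes.
    symbols = {}
    parsed = []
    for line in lines:
        fields = line.split()
        if not fields:
            continue  # skip blank lines
        if fields[0].endswith(":"):
            symbols[fields[0][:-1]] = len(parsed)
            fields = fields[1:]
        parsed.append(fields)

    # Pass 2: replace mnemonics and symbolic operands with numbers.
    words = []
    for fields in parsed:
        mnemonic = fields[0]
        operand = fields[1] if len(fields) > 1 else "0"
        value = symbols[operand] if operand in symbols else int(operand)
        if mnemonic == "CONST":
            words.append(value)  # a named constant occupies one word
        else:
            words.append(OPCODES[mnemonic] * 100 + value)
    return words


source = [
    "start: LOAD total",
    "       ADD step",
    "       STORE total",
    "       HALT",
    "total: CONST 0",
    "step:  CONST 5",
]
print(assemble(source))
```

Nothing in the assembler depends on what the program computes, which is the sense in which such aids apply equally to all programs.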

The second facility for which I claim general purpose standing is the ability to use mnemonic and abbreviated notations to represent computations of some given type. In part, this means the ability to use, in addition to the elementary computer instructions, special purpose instructions which are helpful in a given type of computation. By a few examples, I hope to show you the range of special notations I have in mind.

Suppose, for instance, that I have frequent occasion for evaluating sines of angles and that the computer does not contain a special instruction for sine evaluation. In addition to my usual command vocabulary, which the computer interprets directly, it would be a considerable convenience to write a special command which represents sine computation. Perhaps in different problems I require sines with different degrees of accuracy. In that case, my notation for sine computation may also mention a desired accuracy.

In any event, the special notations which represent the sine computation may be viewed as a special encoding of the sine function which must be translated into computer code before the program may function. Such translation is normally accomplished by reference to a so-called library subroutine, introduced by a compiler at the point at which the special notations were originally written. Sine computation, with or without parameters, exemplifies a large family of cases in which it seems convenient to introduce special notations to represent functions which the computer cannot execute directly.

Another example of special notations not usually considered to have much in common with a sine routine call is the use of algebraic notation to represent the evaluation of algebraic expressions. A little reflection shows, however, that both of these cases have important properties in common. In fact, they are both special notations used as part of coding to represent certain types of computations. In the case of sine with specifiable accuracy, we "code" a sine routine by mentioning the word sine and a chosen accuracy number. In the case of algebra, we "code" an algebraic evaluation by writing the appropriate expression with algebraic notation. In both cases, these special notations must combine with information stored elsewhere before they become coding which is intelligible to the computer.

In the case of special notations for sine, the "other information" is usually thought of as a library subroutine. A compiler then serves to interpret the special notations by finding the library subroutine and, perhaps, by inserting a specified accuracy value into the library coding. In the case of algebra, it is common to think of the "other information" as an algebraic translator which operates as an entity by itself. It is my contention that either special notations for algebra or special notations for sine may both be handled by a general purpose compiler which has broad ability to combine library information with special notations employed in the original coding.
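One way to picture a compiler combining a special notation with a library package is the sketch below, in Python. It is an illustration under assumptions, not the GP mechanism: the notation format `"sine 1e-3"`, the names `sine_package`, `LIBRARY`, and `compile_notation`, and the choice of a Taylor polynomial are all invented for this example. The accuracy named in the notation is inserted into the package to decide how many series terms the produced routine uses.

```python
import math


def sine_package(accuracy):
    """Library package: produce a sine routine good to `accuracy`.

    Uses a Taylor polynomial after reducing the argument to [-pi, pi].
    The Lagrange remainder after n terms is at most pi**(2n+1)/(2n+1)!,
    so n is grown until that bound is within the requested accuracy.
    """
    terms = 1
    while math.pi ** (2 * terms + 1) / math.factorial(2 * terms + 1) > accuracy:
        terms += 1

    def sine(x):
        x = math.remainder(x, 2 * math.pi)  # now -pi <= x <= pi
        total, term = 0.0, x
        for k in range(terms):
            total += term
            # Next Taylor term: multiply by -x^2 / ((2k+2)(2k+3)).
            term *= -x * x / ((2 * k + 2) * (2 * k + 3))
        return total

    sine.terms = terms  # exposed only so the example can inspect it
    return sine


# Hypothetical library: notation word -> package that builds the routine.
LIBRARY = {"sine": sine_package}


def compile_notation(notation):
    """Combine a special notation such as 'sine 1e-3' with its library package."""
    name, accuracy = notation.split()
    return LIBRARY[name](float(accuracy))


coarse = compile_notation("sine 1e-2")
fine = compile_notation("sine 1e-9")
print(coarse.terms, fine.terms)
print(abs(fine(1.0) - math.sin(1.0)) <= 1e-9)
```

The compiler function contains nothing specific to sines; a package for another notation could be added to the library without changing it.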

Still other types of special notations, which may offer great conveniences in the writing of code for special problems, may also be handled by a general purpose compiler. For example, suppose that I have a file of information on which several different types of processing are to be performed. This file contains complex items, each of which is subdivided into many fields containing quantities that may be mnemonically named. It would now be a great convenience if, in my coding, I could refer to the quantities contained in this item by mnemonic names which have been agreed upon as appropriate to that file of information.

A general purpose compiler can assist the translation of such special mnemonics with appropriate packages of library information. A package of library information is combined with special notations employed in the original coding to produce coding in another form. The combining agent is a general purpose compiler. The library packages discussed here are widely applicable and diverse in structure; so-called library subroutines are only one example of the library packages which a general purpose compiler can use.
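The mnemonic case can be sketched in the same spirit. Here a library package is assumed to be a table describing one file's item layout, and translation replaces each mnemonic operand with an address. The field names, the layout table `PAYROLL_ITEM`, the `item_base` address and the two-word instruction form are invented for illustration only.

```python
# Hypothetical library package describing one file's item layout:
# mnemonic name -> (starting word within the item, length in words).
PAYROLL_ITEM = {
    "EMPLOYEE-NO": (0, 1),
    "HOURS": (1, 1),
    "RATE": (2, 1),
}


def resolve(operand, layout, item_base):
    """Translate a mnemonic operand into an absolute address.

    Operands that are not mnemonics in the layout are returned unchanged.
    """
    if operand in layout:
        offset, _length = layout[operand]
        return str(item_base + offset)
    return operand


def translate(lines, layout, item_base):
    """Rewrite each 'OPERATION OPERAND' line using the layout package."""
    out = []
    for line in lines:
        op, operand = line.split()
        out.append(f"{op} {resolve(operand, layout, item_base)}")
    return out


coding = ["LOAD HOURS", "MULTIPLY RATE", "STORE 900"]
translated = translate(coding, PAYROLL_ITEM, item_base=500)
```

The same `translate` routine serves any file for which a layout package has been written, which is the sense in which the combining agent stays general while the packages vary.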

In the development of most compiling systems, the designers begin with a special range of problems for which they intend their system to be useful. They invent special notations which they consider naturally adapted to the area of problems in question, and then they construct a translating mechanism with just enough generality to cope with these notations. While a given set of special notations for a given area of problems may, indeed, be very useful and important, the ability to add arbitrary, new notations is even more important than any given set of special notations.

The truth of this may be readily appreciated if we realize that no one set of special notations can be optimally adapted to all ranges of problems, just as no single computer would be optimally adapted to all types of computation. Furthermore, we cannot even hope, once and for all, to subdivide the entire universe of problems into fixed areas, each with a well-adapted notation. For example, if I have devised one notation satisfactory for business problems and another notation satisfactory for algebra, I am still unable to write convenient coding for problems which lie squarely between these two areas, namely those problems which involve both a lot of data handling and algebraic computation. Even if problems which cross the boundary lines never arose, the inability to add new notations would, nevertheless, be hampering.

Suppose, for example, that a notation has been developed to handle all algebraic problems with equal facility. If, now, in a certain set of problems, I frequently use either a particular algebraic function or a particular succession of steps, I will find that the general algebraic language at my command causes me to write much more than the particular set of problems requires. In short, as soon as the range narrows from all of algebra to a special sub-domain of algebra, the general algebraic notation ceases to be well-adapted.

In summary, it is possible to construct a general purpose compiler which (a) provides special conveniences in the writing of computer code (naming aids) and (b) provides the ability to add new notations to my repertoire for instruction writing whenever such notations seem profitable for particular ranges of problems. Of course, profit is a relative thing. In exchange for the advantages bought by having certain new notations available, I must labor to add to the library packages which make translation of these notations possible. Consequently, this central feature of the general purpose compiler is truly meaningful only if the labor required to obtain the use of new notations is comparatively small.

The theoretical foundations for such compiling systems are now being developed at Remington Rand. Early examples, such as GP for UNIVAC I, demonstrate the practical utility of a system designed from this point of view.

in [ACM] CACM 1(05) (May 1958)

in Thüring, Bruno "Einführung in die Methoden der Programmierung kaufmännischer und wissenschaftlicher Probleme für elektronische Rechenanlagen" Robert Göller Verlag, Baden-Baden, 1958.

Bob Bemer states that this table (which appeared sporadically in CACM) was partly used as a space filler. The last version was enshrined in Sammet (1969) and the attribution there is normally misquoted.

in [ACM] CACM 2(05) May 1959

This book is the second volume of two by Thüring; the other is reviewed in (MR 20, 1432). This volume departs from the previous "algorithmic" approach to concentrate on usage of the Univac I digital computer. The first half of the book gives the instruction code and programming details for that machine.

The second half gives a very thorough account of a most important development in digital computer programming, the "GP Compiler" (Generalized Programming) developed by A. Holt and W. Turanski for the Univac I. This latter program is a translator and assembly program (compiler) that allows any program written for the machine to be filed away automatically in a library for later call-in by future programs. The mechanics of tying together variables with different names in different programs is described as being performed automatically during the translation and assembly of a "main program" or algorithm. Although other features, including automatic symbolic address assignment, are present, the automatic library-making feature is by far the greatest contribution of this procedure. The author gives a thorough account of programs written in this language and their translation, and lists the library for the system at the time of publication.

in ACM Computing Reviews, January-December 1960

in [ACM] CACM 3(11) November 1960

in [ACM] CACM 4(01) (Jan 1961)

in [ACM] CACM 6(03) (Mar 1963)

in [ACM] CACM 6(03) (Mar 1963)

After a count of new noun occurrences, four different functions proportional to the number of new nouns were tried on the text in conjunction with a set of coefficients for weighting. A normalized graph was then prepared plotting the functional values against the sentence number. The most important of the selected sentences show as peaks. Criteria are described for distributing the final selection from these peak sentences throughout the entire text rather than limiting it to the beginning portions of the text where the peaks are higher. Approximately one-fifth of the original sentences are finally extracted.

In evaluating the results of this experiment, the author makes interesting observations on the structure and organization of various types of text.

A UNIVAC I computer and the "Generalized Programming" compiler language were used for the study.

in ACM Computing Reviews 5(05) September-October 1964

Another and quite independent group at Univac concerned itself with an area that would now be called computer-oriented compilers. Anatol Holt and William Turanski developed a compiler and a concept that they called GP (Generalized Programming). Their system assumed the existence of a very general subroutine library. All programs would be written as if they were to be library programs, and the library and system would grow together. A program was assembled at compile time by the selection and modification of particular library programs and parts of library programs. The program as written by the programmer would provide parameters and specifications according to which the very general library programs would be made specific to a particular problem. Subroutines in the library were organized in hierarchies, in which subroutines at one level could call on others at the next level. Specifications and parameters could be passed from one level to the next.
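The hierarchical arrangement described above can be pictured with a small Python sketch. It assumes a library in which each routine is a list of steps, where a step is either a line of code containing parameter slots or a call on a routine one level down. The routine names, the `("USE", ...)` step form and the `assemble` function are hypothetical and are not taken from GP itself.

```python
# Hypothetical two-level library. Each routine is a list of steps; a step
# is either a line of code with {slots} or a call ("USE", name, params)
# on a routine at the next level down.
LIBRARY = {
    "SORT-FILE": [
        ("USE", "READ-ITEM", {"size": "{item_size}"}),
        "COMPARE KEY={key}",
        ("USE", "WRITE-ITEM", {"size": "{item_size}"}),
    ],
    "READ-ITEM": ["READ {size} WORDS"],
    "WRITE-ITEM": ["WRITE {size} WORDS"],
}


def assemble(name, params):
    """Make a general library routine specific to one problem."""
    out = []
    for step in LIBRARY[name]:
        if isinstance(step, tuple):
            _use, callee, callee_params = step
            # Pass specifications on to the next level, filling them in
            # from the parameters supplied at this level.
            passed = {k: v.format(**params) for k, v in callee_params.items()}
            out.extend(assemble(callee, passed))
        else:
            out.append(step.format(**params))
    return out


code = assemble("SORT-FILE", {"item_size": "12", "key": "NAME"})
```

The programmer supplies only the name of the top-level routine and its parameters; selection of the lower-level routines and the passing of specifications between levels happen during assembly.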

The system was extended and elaborated in the GPX system that they developed for Univac II. They were one of the early groups to give serious attention to some difficult problems relative to the structure of programs, in particular the problem of segmenting of programs, and the related problem of storage allocation.

Perhaps the most important contribution of this group was the emphasis that they placed on the programming system rather than on the programming language. In their terms, the machine that the programmer uses is not the hardware machine, but rather an extended machine consisting of the hardware enhanced by a programming system that performs all kinds of service and library maintenance functions in addition to the translation and running of programs.

in [AFIPS JCC 25] Proceedings of the 1964 Spring Joint Computer Conference SJCC 1964

in [ACM] CACM 15(07) (July 1972)

in [ACM] CACM 15(06) (June 1972)
