FORTRAN II (ID:28/for057)

FORTRAN v2

1958. Added subroutines.

This was the first properly distributed FORTRAN, with substantial input from IBM in the form of manuals, etc.

People:

Related languages

| FORTRAN | => | FORTRAN II | Evolution of | |

| FORTRAN II | => | ALTAC | Extension of | |

| FORTRAN II | => | ALTRAN | Target language for | |

| FORTRAN II | => | APL | Written using | |

| FORTRAN II | => | Atlas Fortran | Extension of | |

| FORTRAN II | => | AUTOMATH | Implementation | |

| FORTRAN II | => | BASIC | Incorporated some features of | |

| FORTRAN II | => | BEEF | Adaptation of | |

| FORTRAN II | => | BMD | Written using | |

| FORTRAN II | => | DART | Simplification of | |

| FORTRAN II | => | DELtran | Compiled to | |

| FORTRAN II | => | EGTRAN | Dialect of | |

| FORTRAN II | => | Extended Fortran | Extension of | |

| FORTRAN II | => | FAP | Evolution of | |

| FORTRAN II | => | Fast FORTRAN | Based on | |

| FORTRAN II | => | FASTRAN | Implementation of | |

| FORTRAN II | => | FLAT | Implementation of | |

| FORTRAN II | => | FLPL | Extension of | |

| FORTRAN II | => | FMS | Extension of | |

| FORTRAN II | => | FORDESK | Extension of | |

| FORTRAN II | => | FORMAC | Extension of | |

| FORTRAN II | => | FORTRAN CEP | Extension of | |

| FORTRAN II | => | FORTRAN II-D | Augmentation of | |

| FORTRAN II | => | FORTRAN III | Evolution of | |

| FORTRAN II | => | FORTRAN-FORTRAN | Extension of | |

| FORTRAN II | => | FORTRUNCIBLE | Influence | |

| FORTRAN II | => | GIF | Translator for | |

| FORTRAN II | => | GOTRAN | Subset | |

| FORTRAN II | => | GTPL | Extension of | |

| FORTRAN II | => | HARTRAN | Implementation of | |

| FORTRAN II | => | IAL | Negative Slight Influence | |

| FORTRAN II | => | LARC Scientific Compiler | Extension of | |

| FORTRAN II | => | Nu-Speak | Augmentation of | |

| FORTRAN II | => | Proposal Writing Language | Extension of | |

| FORTRAN II | => | PSYCO | compiler for | |

| FORTRAN II | => | REFCO III | Extension of | |

| FORTRAN II | => | S1 | Extension of | |

| FORTRAN II | => | SIMSCRIPT | Extension of | |

References:

GENERAL INTRODUCTION

The original FORTRAN language was designed as a concise, convenient means of stating the steps to be carried out by the IBM 704 Data Processing System in the solution of many types of problems, particularly in scientific and technical fields. As the language is simple and the 704, with the FORTRAN Translator program, performs most of the clerical work, FORTRAN has afforded a significant reduction in the time required to write programs.

The original FORTRAN language contained 32 types of statements. Virtually any numerical procedure may be expressed by combinations of these statements. Arithmetic formulas are expressed in a language close to that of mathematics. Iterative processes can be easily governed by control statements and arithmetic statements. Input and output data are flexibly handled in a variety of formats.

Extract: General introduction: FORTRAN II

GENERAL INTRODUCTION

The FORTRAN II language contains six new types of statements and incorporates all the statements in the original FORTRAN language. Thus, the FORTRAN II system and language are compatible with the original FORTRAN, and any program used with the earlier system can also be used with FORTRAN II. The 38 FORTRAN II statements are listed in Appendix A, page 59.

The additional facilities of FORTRAN II effectively enable the programmer to expand the language of the system indefinitely. This expansion is obtained by writing subprograms which define new statements or elements of the FORTRAN II language. All statements so defined will be of a single type, the CALL type. All elements so defined will be the symbolic names of single-valued functions. Each new statement or element, when used in a FORTRAN II program, will constitute a call for the defining subprogram, which may carry out a procedure of any length or complexity within the capacity of the computer.

The FORTRAN II subprogram facilities are completely general; subprograms can in turn use other subprograms to whatever degree is required. These subprograms may be written in source program language. For example, subprograms may be written in FORTRAN II language such that matrices may be processed as units by a main program. Also, for example, it is possible to write SAP (SHARE Assembly Program) subprograms which perform double precision arithmetic, logical operations, etc. Certain additional advantages flow from the above concept. Any program may be used as a subprogram (with appropriate minor changes) in FORTRAN II, thus making use, as a library, of programs previously written. A large program may be divided into sections and each section written, compiled, and tested separately. In the event it is desirable to change the method of performing a computation, proper sectioning of a program will allow this specific method to be changed without disturbing the rest of the program and with only a small amount of recompilation time.

There are two ways FORTRAN II links a main program to subprograms, and subprograms to lower level subprograms. The first way is by statements of the new CALL type. This type may be indefinitely expanded, by means of subprograms, to include particular statements specifying any procedures whatever within the power of the computer. The defining subprogram may be any FORTRAN II subprogram, SAP subprogram, or program written in any language which is reducible to machine language. Since a subprogram may call for other subprograms to any desired depth, a particular CALL statement may be defined by a pyramid of multilevel subprograms. A particular CALL statement consists of the word CALL, followed by the symbolic name of the highest level defining subprogram and a parenthesized list of arguments. A FORTRAN II subprogram to be linked by means of a CALL statement must have a SUBROUTINE statement as its first statement. SUBROUTINE is followed by the name of the subprogram and by a number of symbols in parentheses. The symbols in parentheses must agree in number, order, and mode with the arguments in the CALL statement used to call this subprogram. A subprogram headed by a SUBROUTINE statement has a RETURN statement at the point where control is to be returned to the calling program. A subprogram may, of course, contain more than one RETURN statement.
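The requirement that a CALL's arguments agree with the SUBROUTINE's symbols in number, order, and mode can be expressed as a simple check. The sketch below is a minimal illustration, not part of any FORTRAN II system: it assumes the FORTRAN II naming convention for variables (a first letter of I through N means fixed point, anything else floating point), handles only simple names and unsigned constants, and ignores arrays and expressions. The helper names `mode_of` and `check_call` and the subroutine name MATMPY are hypothetical.

```python
# Hypothetical helpers; the mode rule is FORTRAN II's implicit naming
# convention for variables (first letter I-N means fixed point).
FIXED_POINT_INITIALS = "IJKLMN"


def mode_of(symbol: str) -> str:
    """Return 'fixed' or 'floating' for a simple name or unsigned numeric constant."""
    if symbol[0].isdigit() or symbol[0] == ".":
        # A numeric constant is floating point only if it has a decimal point.
        return "floating" if "." in symbol else "fixed"
    return "fixed" if symbol[0] in FIXED_POINT_INITIALS else "floating"


def check_call(call_args: list[str], dummy_args: list[str]) -> list[str]:
    """List every way a CALL's arguments disagree with a SUBROUTINE's dummy list."""
    problems = []
    if len(call_args) != len(dummy_args):
        problems.append(f"expected {len(dummy_args)} arguments, got {len(call_args)}")
    # Compare position by position; zip stops at the shorter list.
    for position, (actual, dummy) in enumerate(zip(call_args, dummy_args), start=1):
        if mode_of(actual) != mode_of(dummy):
            problems.append(
                f"argument {position}: {actual} is {mode_of(actual)}, "
                f"but {dummy} is {mode_of(dummy)}"
            )
    return problems


# SUBROUTINE MATMPY(A, B, C, N) called as CALL MATMPY(X, Y, Z, 10):
# the names differ, but number, order, and mode agree.
print(check_call(["X", "Y", "Z", "10"], ["A", "B", "C", "N"]))    # []
print(check_call(["X", "Y", "Z", "10.0"], ["A", "B", "C", "N"]))  # ['argument 4: 10.0 is floating, but N is fixed']
```

The example also reflects the later point about dummy variables: X, Y, Z need not share names with A, B, C; only position and mode matter.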

The second way in which FORTRAN II links programs together is by means of an arithmetic statement involving the name of a function with a parenthesized list of arguments. The function terminology in the FORTRAN II language may be indefinitely expanded to include as elements of the language any single-valued functions which can be evaluated by a process within the capacity of the computer. The power of function definition was available in the original FORTRAN but has been made much more flexible in FORTRAN II.

As in the original FORTRAN, library tape functions and built-in functions may be used in any FORTRAN II program. The library tape functions may be supplemented as desired. Two new built-in functions have been added in FORTRAN II, and provision has been made for the addition of up to ten by the individual installation. The most flexible and powerful means of function definition in FORTRAN II is, however, the subprogram headed by a FUNCTION statement. The FUNCTION statement specifies the function name, followed by a parenthesized list of arguments corresponding in number, order, and mode to the list following the function name in the calling program. This new facility enables the programmer to define functions in source language in a subprogram which can be compiled from alphanumeric cards or tape in the same way as a main program. Function subprograms may use other subprograms to any depth desired. A subprogram headed by a FUNCTION statement is logically terminated by a RETURN statement in the same manner as the SUBROUTINE subprogram. Subprograms of the function type may also be written in SAP code, or in any other language reducible to machine language. Subprograms of the function type may freely use subprograms of both the subroutine type and the function type without restriction. Similarly, the subroutine type may use subprograms of both the subroutine type and the function type without restriction. The names of variables listed in a subprogram in a SUBROUTINE or FUNCTION statement are dummy variables. These names are independent of the calling program and, therefore, need not be the same as the corresponding variable names in the calling program, and may even be the same as non-corresponding variable names in the calling program. This enables a subprogram or group of subprograms to be used with various independently written main programs.

There are many occasions when it is desirable for a subprogram to be able to refer to variables in the calling program without requiring that they be listed every time the subprogram is to be used. Such cross-referencing of the variables in a calling program and in various levels of subprograms is accomplished by means of the COMMON statement which defines the storage areas to be shared by several programs. This feature also gives the programmer more flexible control over the allocation of data in storage.

The END statement has been added to the FORTRAN II language for multiple program compilation, another new feature of FORTRAN II. This statement acts as an end-of-file for either cards or tape so that there may be many programs in the card reader or on a reel of tape at any one time. Five digits in parentheses follow the END statement. These digits refer to the first five Sense Switches on the 704 Console, allowing the programmer, if he wishes, to indicate to the Translator which of certain options it is to take, regardless of how these switches are set. In an early phase of the FORTRAN II Translator, a diagnostic program has been incorporated which finds many types of errors much earlier during the compilation process, provides more complete information on error print-outs, and reduces the number of stops. Thus, both programming time and machine compilation time are saved.

The object programs, both main programs and subprograms, are stored in 704 memory by the Binary Symbolic Subroutine Loader. The Loader interprets symbolic references between a main program and its subprograms and between various levels of subprograms and provides for the proper flow of control between the various programs during program execution.

Because of the function of the Loader, the programmer need know only the symbolic name of an available subprogram and the procedure which it carries out; he does not need to be concerned with the constitution of the machine language deck, nor with the location of the subprogram in storage. In machine language decks, symbolic references are retained in a set of names, or "Transfer List," at the beginning of each program which calls for subprograms.

The symbolic name of each subprogram is also retained on a special card, the "Program Card," at the front of each subprogram deck. At the beginning of loading, a call for a subprogram is a transfer to the appropriate symbolic name in the Transfer List. Before program execution commences, the Loader replaces the Transfer List names with transfers to the actual locations occupied in storage by the corresponding subprogram entry points.

The order in which the decks are loaded determines the actual locations occupied by the main program and subprograms in storage but does not affect the logical flow of control. The order in which decks are loaded is therefore arbitrary.
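The Loader's job as described above (assign storage in loading order, then replace each Transfer List name with the location of the named subprogram) can be sketched in a few lines. This is a simplified model, not the Binary Symbolic Subroutine Loader itself: it assumes decks are packed one after another from an arbitrary origin, that each subprogram's entry point is its first word, and that deck names and lengths are hypothetical. It illustrates the claim that loading order changes locations but not which subprogram each call reaches.

```python
from dataclasses import dataclass, field


@dataclass
class Deck:
    name: str      # symbolic name from the deck's Program Card
    length: int    # words of storage the deck occupies (hypothetical sizes below)
    transfer_list: list[str] = field(default_factory=list)  # names the deck calls


def load(decks: list[Deck], origin: int = 0) -> dict[str, dict[str, int]]:
    """Place decks in the order given, then resolve every Transfer List name.

    Simplifying assumptions: decks are packed one after another from `origin`,
    and each subprogram's entry point is its first word.
    """
    entry = {}
    next_free = origin
    for deck in decks:  # pass 1: storage assignment follows loading order
        entry[deck.name] = next_free
        next_free += deck.length
    resolved = {}
    for deck in decks:  # pass 2: replace each symbolic name with a location
        missing = [n for n in deck.transfer_list if n not in entry]
        if missing:
            raise LookupError(f"{deck.name} calls subprograms that were not loaded: {missing}")
        resolved[deck.name] = {n: entry[n] for n in deck.transfer_list}
    return resolved


main = Deck("MAIN", 200, ["SOLVE", "REPORT"])
solve = Deck("SOLVE", 150, ["REPORT"])
report = Deck("REPORT", 50)

print(load([main, solve, report])["MAIN"])   # {'SOLVE': 200, 'REPORT': 350}
print(load([report, solve, main])["MAIN"])   # {'SOLVE': 50, 'REPORT': 0}
```

In both orders MAIN's Transfer List resolves to SOLVE and REPORT; only the numeric locations differ, which is why the order of the decks can be arbitrary.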

in Proceedings of the Symposium on the Mechanisation of Thought Processes. Teddington, Middlesex, England: The National Physical Laboratory, November 1958

Introduction

The present paper describes, in formal terms, the steps in translation employed by the Fortran arithmetic translator in converting Fortran formulas into 704 assembly code. The steps are described in about the order in which they are actually taken during translation. Although sections 2 and 3 give a formal description of the Fortran source language, insofar as arithmetic type statements are concerned, the reader is expected to be familiar with Fortran II, as well as with Sap II and the programming logic of the 704 computer.

in [ACM] CACM 2(02) February 1959

in [ACM] CACM 2(02) February 1959

in [ACM] CACM 4(01) (Jan 1961)

Why Translate ?

Source program translation frees the specifications of the new language from the need to be compatible with the old language. Programmers are encouraged to learn the new language and to use it exclusively. Furthermore, the translator is a valuable teaching aid; it provides each programmer with correctly translated versions of his own old programs.

Why Code the Translator in FORTRAN?

Coding in FORTRAN is easier and faster than coding in machine language. The code is easier to read, and this simplifies communication, debugging, maintenance, and modification. Coded in FORTRAN II, SIFT can be converted to work in a FORTRAN IV system, by translating itself. It can be used on any computer which possesses a FORTRAN II compiler, and every computer installation which might be interested in SIFT will certainly have a FORTRAN compiler.

What Does the Translator Have to Do?

Most of the incompatibilities between FORTRAN II and FORTRAN IV can be resolved by a simple transliteration, but three areas require more analysis. These are EQUIVALENCE-COMMON interaction, double-precision and complex arithmetic, and Boolean statements. In FORTRAN, EQUIVALENCE is used to specify that a number of variables share the same storage space. COMMON is used to control the assignment of variables to absolute locations, so that separately-compiled routines may refer to the same variables without the use of calling-sequence parameters. In FORTRAN II, the assignment of COMMON variables is modified if some of these variables appear in EQUIVALENCE statements. The order of assignment may be affected, and additional space may be left to allow for overlapping arrays. In FORTRAN IV, assignment of storage for COMMON variables is independent of EQUIVALENCE statements. Therefore, SIFT must perform an EQUIVALENCE analysis, and rewrite the COMMON statement in the revised order, with dummy arrays inserted to allow for overlap. Since FORTRAN II and FORTRAN IV employ different means of referring to individual members of double-precision and complex number-pairs, SIFT must tabulate the names of all double-precision and complex variables, generate FORTRAN IV type statements for all such variables, and transliterate all statements which refer to single members of these number-pairs.

FORTRAN II has a means of specifying the computation of the Boolean operations of and, or, and complement on machine words. Although FORTRAN IV has statements which perform Boolean computations on logical variables, which may take on only the values true and false, it has nothing corresponding to the FORTRAN II Boolean computation on full machine words. Therefore, SIFT must replace Boolean statements by statements which call subroutines to perform the Boolean operations.
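The kind of subroutine SIFT substitutes for a Boolean statement can be sketched as full-word operations on a 36-bit word, the word length of the 704/709/7090. This is a hypothetical illustration: the extract does not name SIFT's subroutines, so `band`, `bor`, and `bcompl` are invented names, and machine words are modeled here as unsigned 36-bit integers.

```python
# Full-word Boolean operations on a 36-bit word, as on the IBM 704/709/7090.
# The function names band, bor, bcompl are hypothetical stand-ins for SIFT's subroutines.
WORD_BITS = 36
WORD_MASK = (1 << WORD_BITS) - 1  # all 36 bits set


def band(a: int, b: int) -> int:
    """AND of two machine words, bit by bit."""
    return a & b & WORD_MASK


def bor(a: int, b: int) -> int:
    """OR of two machine words, bit by bit."""
    return (a | b) & WORD_MASK


def bcompl(a: int) -> int:
    """Complement of a machine word; Python's ~ is unbounded, so mask to 36 bits."""
    return ~a & WORD_MASK


# A Boolean statement computing "A and B, or the complement of D" becomes nested calls.
a, b, d = 0o777000000000, 0o707070707070, 0o777777777770
print(oct(bor(band(a, b), bcompl(d))))  # 0o707000000007
```

The mask in `bcompl` is the essential design point: unlike FORTRAN IV logical variables, which hold only true or false, these operations act on every bit of the word.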

[...]

To accomplish such translations, SIFT must make the same kind of analysis performed by a compiler when processing arithmetic operations. In addition to these operations (and several more mundane transliteration tasks), SIFT also replaces all numeric input-output unit designators by symbolic designators, and, if requested, replaces the names of certain variables by more readable names supplied by the user.

How Does the Translator Work?

SIFT processing takes place in three steps. The first step copies the input program onto an intermediate tape and builds tables. The second step processes the tables and generates the new statements required to resolve the EQUIVALENCE-COMMON interaction. The third step reads the input program from the intermediate tape and, using the information collected in the first two steps, produces an equivalent output program in FORTRAN IV language.

Several interesting programming techniques were used in SIFT. The problem of writing a FORTRAN program to handle character strings was solved by using an internal character representation in which each character occupies one machine word. Ordinary FORTRAN indexing is then used to scan across an input string. Machine-language subroutines perform conversion between internal and external character representations. The internal character representation uses a character code specially designed for SIFT, as follows:

| Character | Internal Code |
| 0-9 | 0-9 |
| A-Z | 11-36 |
| Blank | 38 |
| Other punctuation | 39-50 |

Using this code, two FORTRAN statements suffice to scan to the next non-blank character and determine whether that character is a punctuation mark. Each input statement passes through a scan routine, which builds a pointer table. The pointer table indicates the location within the statement of each verb, noun, operation sign, and punctuation mark. All subsequent analysis routines refer to the pointer table, and are thus spared such problems as scanning past blanks and ignoring operation signs within alphanumeric literals. As was mentioned, SIFT must often insert new variable names into the programs it translates. An option is provided to allow the user of SIFT to specify classes of names to be inserted; otherwise, standard classes of names are used. In addition, all entries on a list of variable names to be inserted are compared with all names appearing in the program being translated. Any conflicting name is deleted from the list of names to be inserted.
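The code table is arranged so that every digit and letter lies below the blank code and every punctuation mark above it, which is what lets a short loop skip blanks and a single comparison classify the next character. The Python sketch below illustrates that idea under stated assumptions; it is not SIFT's FORTRAN code. The source gives only the range 39-50 for punctuation, so the particular assignment of marks to codes in `PUNCTUATION` is hypothetical, as are the function names.

```python
# SIFT's internal code: digits 0-9, letters A-Z 11-36, blank 38,
# punctuation 39-50. Which punctuation mark gets which code is not given
# in the source; the assignment below is hypothetical.
PUNCTUATION = "+-*/()=,.$'"   # codes 39-49 in this sketch
BLANK = 38


def encode(text: str) -> list[int]:
    """Convert a statement to one internal code per character (one per word in SIFT)."""
    codes = []
    for ch in text:
        if "0" <= ch <= "9":
            codes.append(ord(ch) - ord("0"))
        elif "A" <= ch <= "Z":
            codes.append(ord(ch) - ord("A") + 11)
        elif ch == " ":
            codes.append(BLANK)
        elif ch in PUNCTUATION:
            codes.append(39 + PUNCTUATION.index(ch))
        else:
            raise ValueError(f"no internal code for {ch!r}")
    return codes


def next_nonblank(codes: list[int], i: int) -> int:
    """Index of the first non-blank code at or after i, or len(codes) if none."""
    while i < len(codes) and codes[i] == BLANK:
        i += 1
    return i


def is_punctuation(code: int) -> bool:
    # Every punctuation code lies above BLANK and every digit or letter below it,
    # so a single comparison classifies the character.
    return code > BLANK


codes = encode("DO 10 I = 1, N")
i = next_nonblank(codes, 7)          # skips the blank after I
print(i, is_punctuation(codes[i]))   # 8 True  (the "=" sign)
```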

How Was the Translator Developed?

The SHARE FORTRAN Committee, which represents the largest single body of FORTRAN users, went on record in March, 1961, as favoring a new FORTRAN language which did not contain all of FORTRAN II as a subset. The committee, at that time, expressed its willingness to provide a translator program. In April, 1961, a subcommittee of fifteen members was appointed to study the problem. This subcommittee was divided into three divisions on a geographic basis. The divisions met separately prior to a full subcommittee meeting in June, at which time detailed specifications of the task were prepared, and methods were discussed. The western division completed the final specifications, and three of its members did the coding, which was completed in January, 1962. The geographic proximity of these three (two in Los Angeles, one in San Diego) permitted them to meet several times, although most communication was by telephone and mail. When the translator program was working to the satisfaction of its authors, the SHARE FORTRAN Committee sponsored a field test, with participants from more than twenty computing installations. The results of this field test should be available by the time this paper is presented.

in Artificial languages

in The Computer Journal 5(2) July 1962

Introduction

Although FORTRAN (in various versions) is one of the most widely used algorithmic languages, with translators existing for upwards of sixteen machines, the language itself has never been given a complete and explicit definition except informally, through the various reference manuals.

Part of the reason for this is that FORTRAN's position as a "common" language did not occur through official acceptance, but through use, with translators being written to process what people thought was the language. Furthermore, the specifications of the language, being to a large extent machine dependent, or at least largely influenced by the structure of the machine for which the first FORTRAN was written (the IBM 704), were altered for convenience whenever the language could not easily be accommodated by a particular machine. The result, of course, is that each translator implements something closely resembling the same language, but with differences. For example, 650 FORTRAN allows identifiers to be five characters long, while 1604 FORTRAN allows them to be seven characters long, both restrictions being due to convenience in writing the compilers.

In line with the present fad for syntacticizing everything in sight, the present effort was undertaken. The immediate impetus for the work was the existence of PSYCO [1], a compiler for ALGOL 60 on the CDC 1604, which requires a complete "syntax table" of the source language in order to do the translation. If such a table could be constructed for FORTRAN, then the same compiler could be used for both languages, with merely a change of tables. Work is presently being done to use PSYCO to translate from FORTRAN to 1604 code [2].
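The idea that one compiler can serve two languages "with merely a change of tables" can be illustrated with a small table-driven recognizer. This is not PSYCO's algorithm or table format, which the extract does not describe; it is a generic backtracking recognizer written for illustration, and `GRAMMAR` is a hypothetical syntax table covering only simple FORTRAN arithmetic expressions (no exponentiation, signs, or function references).

```python
import re

# Syntax table: each nonterminal maps to its alternatives. IDENT and NUMBER
# are token classes; every other symbol must match a token literally.
GRAMMAR = {
    "expr":   [["term", "+", "expr"], ["term", "-", "expr"], ["term"]],
    "term":   [["factor", "*", "term"], ["factor", "/", "term"], ["factor"]],
    "factor": [["(", "expr", ")"], ["IDENT"], ["NUMBER"]],
}


def tokenize(text: str) -> list[str]:
    # Identifiers, unsigned integers, or any other single non-blank character.
    return re.findall(r"[A-Z][A-Z0-9]*|[0-9]+|\S", text)


def match_token(symbol: str, token: str) -> bool:
    """Terminal test: token classes IDENT and NUMBER, or an exact literal."""
    if symbol == "IDENT":
        return re.fullmatch(r"[A-Z][A-Z0-9]*", token) is not None
    if symbol == "NUMBER":
        return re.fullmatch(r"[0-9]+", token) is not None
    return token == symbol


def derive(symbol: str, tokens: list[str], pos: int, grammar: dict) -> set[int]:
    """Every position at which a derivation of `symbol` starting at `pos` can end."""
    if symbol in grammar:
        ends = set()
        for alternative in grammar[symbol]:
            frontier = {pos}
            for part in alternative:
                frontier = {e for p in frontier for e in derive(part, tokens, p, grammar)}
            ends |= frontier
        return ends
    if pos < len(tokens) and match_token(symbol, tokens[pos]):
        return {pos + 1}
    return set()


def recognizes(text: str, grammar: dict = GRAMMAR, start: str = "expr") -> bool:
    tokens = tokenize(text)
    return len(tokens) in derive(start, tokens, 0, grammar)


print(recognizes("ALPHA*(BETA+2)"))  # True
print(recognizes("A+"))              # False
```

Only `GRAMMAR` is language-specific: adding or changing alternatives changes the language accepted without touching `derive` or `recognizes`. The grammar must not be left-recursive, since this simple recognizer would then recurse without consuming input.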

To make our frame of reference explicit, the language with which this paper is concerned is that defined in the IBM publication "Reference Manual, 709/7090 FORTRAN Programming System" [3], the system presently used on the IBM 7090. We do not include any features of the language which have to do with the monitor system used with FORTRAN, nor do we include the syntax of Complex and Double Precision modifications (Column 1 mode indicators "I" and "D", respectively). The semantics of the language is of course a function of the processor, and is not considered here, although it has a tendency to creep into the syntactic specifications. References to quotations from the manual in this paper will be made in the form [Mn], where n is the page number in the manual.

in [ACM] CACM 5(06) June 1962

in [ACM] CACM 5(06) June 1962

in [ACM] CACM 6(11) (Nov 1963)

in [ACM] CACM 6(08) (August 1963)

in [ACM] CACM 6(08) (August 1963)

in [ACM] CACM 6(08) (August 1963)

It would be interesting to know whether the author, as a result of this work, has noted where small changes in FORTRAN II might yield large rewards in ease of syntactic description or where the syntax table capability of PSYCO needs enlargement.

in ACM Computing Reviews 5(04) July-August 1964

in ACM Computing Reviews 5(01) January-February 1964

The 701 used a rather unreliable electrostatic tube storage system. When magnetic core storage became available there was some talk about a 701M computer that would be an advanced 701 with core storage. The idea of a 701M was soon dropped in favor of a completely new computer, the 704. The 704 was going to incorporate into hardware many of the features for which programming systems had been developed in the past. Automatic floating point hardware and index registers would make interpretive systems like Speedcode unnecessary.

Along with the development of the 704 hardware IBM set up a project headed by John Backus to develop a suitable compiler for the new computer. After the expenditure of about 25 man years of effort they produced the first Fortran compiler [19, 20]. Fortran is in many ways the most important and most impressive development in the early history of automatic programming.

Like most of the early hardware and software systems, Fortran was late in delivery, and didn't really work when it was delivered. At first people thought it would never be done. Then when it was in field test, with many bugs, and with some of the most important parts unfinished, many thought it would never work. It gradually got to the point where a program in Fortran had a reasonable expectancy of compiling all the way through and maybe even of running. This gradual change of status from an experiment to a working system was true of most compilers. It is stressed here in the case of Fortran only because Fortran is now almost taken for granted, as if it were built into the computer hardware.

In the early days of automatic programming, the most important criterion on which a compiler was judged was the efficiency of the object code. "You only compile once, you run the object program many times," was a statement often quoted to justify a compiler design philosophy that permitted the compiler to take as long as necessary, within reason, to produce good object code. The Fortran compiler on the 704 applied a number of difficult and ingenious techniques in an attempt to produce object coding that would be as good as that produced by a good programmer programming in machine code. For many types of programs the coding produced is very good. Of course there are some for which it is not so good. In order to make effective use of index registers a very complicated index register assignment algorithm was used that involved a complete analysis of the flow of the program and a simulation of the running of the program using information obtained from frequency statements and from the flow analysis. This was very time consuming, especially on the relatively small initial 704 configuration. Part of the index register optimization fell into disuse quite early but much of it was carried along into Fortran II and is still in use on the 704/9/90. In many programs it still contributes to the production of better code than can be achieved on the new Fortran IV compiler.

Experience led to a gradual change of philosophy with respect to compilers. During debugging, compiling is done over and over again. One of the major reasons for using a problem oriented language is to make it easy to modify programs frequently on the basis of experience gained in running the programs. In many cases the total compile time used by a project is much greater than the total time spent running object codes. More recent compilers on many computers have emphasized compiling time rather than run time efficiency. Some may have gone too far in that direction.

It was the development of Fortran II that made it possible to use Fortran for large problems without using excessive compiling time. Fortran II permitted a program to be broken down into subprograms which could be tested and debugged separately. With Fortran II in full operation, the use of Fortran spread very rapidly. Many 704 installations started to use nothing but Fortran. A revolution was taking place in the scientific computing field, but many of the spokesmen for the computer field were unaware of it. A number of major projects that were at crucial points in their development in 1957-1959 might have proceeded quite differently if there had been more general awareness of the extent to which the use of Fortran had been accepted in many major 704 installations.

Extract: Algol vs Fortran

With the use of Fortran already well established in 1958, one may wonder why the American committee did not recommend that the international language be an extension of, or at least in some sense compatible with Fortran. There were a number of reasons. The most obvious has to do with the nature and the limitations of the Fortran language itself. A few features of the Fortran language are clumsy because of the very limited experience with compiler languages that existed when Fortran was designed. Most of Fortran's most serious limitations occur because Fortran was not designed to provide a completely computer independent language; it was designed as a compiler language for the 704. The handling of a number of statement types, in particular the Do and If statements, reflects the hardware constraints of the 704, and the design philosophy which kept these statements simple and therefore restricted in order to simplify optimization of object coding.

Another and perhaps more important reason for the fact that the ACM committee almost ignored the existence of Fortran has to do with the predominant position of IBM in the large scale computer field in 1957-1958 when the Algol development started. Much more so than now, there were no serious competitors. In the data processing field the Univac II was much too late to give any serious competition to the IBM 705. RCA's Bizmac never really had a chance, and Honeywell's Datamatic 1000, with its 3 inch wide tapes, had only very few specialized customers. In the scientific field there were those who felt that the Univac 1103/1103a/1105 series was as good as or better than the IBM 701/704/709. Univac's record of late delivery and poor service and support seemed calculated to discourage sales to the extent that the 704 had the field almost completely to itself. The first algebraic compiler produced by the manufacturer for the Univac Scientific computer, the 1103a, was Unicode, a compiler with many interesting features that was not completed until after 1960, for computers that were already obsolete. There were no other large scale scientific computers. There was a feeling on the part of a number of persons highly placed in the ACM that Fortran represented part of the IBM empire, and that any enhancement of the status of Fortran by accepting it as the basis of an international standard would also enhance IBM's monopoly in the large scale scientific computer field.

The year 1958, in which the first Algol report was published, also marked the emergence of large scale high speed transistorized computers, competitive in price and superior in performance to the vacuum tube computers in general use. At the time I was in charge of programming systems for the new model 2000 computers that Philco was preparing to market. An algebraic compiler was an absolute necessity, and there was never really any serious doubt that the language had to be Fortran. The very first sales contracts for the 2000 specified that the computer had to be equipped with a compiler that would accept 704 Fortran source decks essentially without change. Other manufacturers, Honeywell, Control Data, Bendix, faced with the same problems, came to the same conclusion. Without any formal recognition, in spite of the attitude of the professional committees, Fortran became the standard scientific computing language. Incidentally, the emergence of Fortran as a standard helped rather than hindered the development of a competitive situation in the scientific computer field.

in [AFIPS JCC 25] Proceedings of the 1964 Spring Joint Computer Conference SJCC 1964

The family tree of programming languages, like those of humans, is quite different from the tree with leaves from which the name derives.

That is, branches grow together as well as divide, and can even join with branches from other trees. Similarly, the really vital requirements for mating are few. PL/I is an offspring of a type long awaited; that is, a deliberate result of the marriage between scientific and commercial languages.

The schism between these two facets of computing has been a persistent one. It has prevailed longer in software than in hardware, although even here the joining was difficult. For example, the CPC (card-programmed calculator) was provided either with a general purpose floating point arithmetic board or with a board wired specifically to do a (usually) commercial operation. The decimal 650 was partitioned to be either a scientific or commercial installation; very few were mixed. A machine at Lockheed Missiles and Space Company, number 3, was the first to be obtained for scientific work. Again, the methods of use for scientific work were then completely different from those for commercial work, as the proliferation of interpretive languages showed.

Some IBM personnel attempted to heal this breach in 1957. Dr. Charles DeCarlo set up opposing benchmark teams to champion the 704 and 705, possibly to find out whether a binary or decimal machine was more suited to mixed scientific and commercial work. The winner led to the 709, which was then touted for both fields in the advertisements, although the scales might have tipped the other way if personnel assigned to the data processing side had not exposed the file structure tricks which gave the 705 the first edge. Similarly fitted, the 704 pulled ahead.

It could be useful to delineate the gross structure of this family tree for programming languages, limited to those for compilers (as opposed to interpreters, for example).

On the scientific side, the major chronology for operational dates goes like this:

1951, 52 Rutishauser language for the Zuse Z4 computer

1952 A0 compiler for Univac I (not fully formula)

1953 A2 compiler to replace A0

1954 Release of Laning and Zierler algebraic compiler for Whirlwind

1957 Fortran I (704)

1957 Fortransit (650)

1957 AT3 compiler for Univac II (later called Math-Matic)

1958 Fortran II (704)

1959 Fortran II (709)

A fuller chronology is given in the Communications of the ACM, 1963 May, 94-99.

IBM personnel worked in two directions: one toward Fortran II, with its ability to call previously compiled subroutines, the other toward Xtran, to generalize the structure and remove restrictions. This and other work led to Algol 58 and Algol 60. Algol X will probably metamorphose into Algol 68 in the early part of that year, and Algol Y stands in the wings. Meanwhile Fortran II turned into Fortran IV in 1962, with some regularizing of features and additions, such as Boolean arithmetic.

The corresponding chronology for the commercial side is:

1956 B-0, counterpart of A-0 and A-2, growing into

1958 Flowmatic

1960 AIMACO, Air Material Command version of Flowmatic

1960 Commercial Translator

1961 Fact

Originally, I wanted Commercial Translator to contain set operators as the primary verbs (match, delete, merge, copy, first occurrence of, etc.), but it was too much for that time. Bosak at SDC is now making a similar development. So we listened to Roy Goldfinger and settled for a language of the Flowmatic type. Dr. Hopper had introduced the concept of data division; we added environment division and logical multipliers, among other things, and also made an unsuccessful attempt to free the language of limitations due to the 80-column card.

As the computer world knows, this work led to the CODASYL committee and Cobol, the first version of which was supposed to be done by the Short Range Committee by 1959 September. There the matter stood, with two different and widely used languages, although they had many functions in common, such as arithmetic. Both underwent extensive standardization processes. Many arguments raged, and the proponents of "add A to B giving C" met head on with those favoring "C = A + B". Many on the Chautauqua computer circuit of that time made a good living out of just this, trivial though it is.

Many people predicted and hoped for a merger of such languages, but it seemed a long time in coming. PL/I was actually more an outgrowth of Fortran, via SHARE, the IBM user group historically aligned to scientific computing. The first name applied was in fact Fortran VI, following 10 major changes proposed for Fortran IV.

It started with a joint meeting on Programming Objectives on 1963 July 1, 2, attended by IBM and SHARE representatives. Datamation magazine has told the story very well. The first description was that of D. D. McCracken in the 1964 July issue, recounting how IBM and SHARE had agreed to a joint development at SHARE XXII in 1963 September. A so-called "3 x 3" committee (really the SHARE Advanced Language Development Committee) was formed of 3 users and 3 IBMers. McCracken claimed that, although not previously associated with language developments, they had many years of application and compiler-writing experience. I recall that one of them couldn't tell me from a Spanish-speaking citizen at the Tijuana bullring.

Developments were apparently kept under wraps. The first external report was released on 1964 March 1. The first mention occurs in the SHARE Secretary Distribution of 1964 April 15. Datamation reported for that month:

"That new programming language which came out of a six-man IBM/SHARE committee and announced at the recent SHARE meeting seems to have been less than a resounding success. Called variously 'Sundial' (changes every minute), Foalbol (combines Fortran, Algol and Cobol), Fortran VI, the new language is said to contain everything but the kitchen sink... is supposed to solve the problems of scientific, business, command and control users... you name it. It was probably developed as the language for IBM's new product line.

"One reviewer described it as 'a professional programmer's language developed by people who haven't seen an applied program for five years. I'd love to use it, but I run an open shop. Several hundred jobs a day keep me from being too academic.' The language was described as too far from Fortran IV to be teachable, too close to be new. Evidently sharing some of these doubts, SHARE reportedly sent the language back to IBM with the recommendation that it be implemented, tested... 'and then we'll see.'"

In the same issue, the editorial advised us: "If IBM announces and implements a new language - for its whole family... one which is widely used by the IBM customer, a de facto standard is created." The Letters to the Editor for the May issue contained this one:

"Regarding your story on the IBM/SHARE committee - on March 6 the SHARE Executive Board by unanimous resolution advised IBM as follows:

"The Executive Board has reported to the SHARE body that we look forward to the early development of a language embodying the needs that SHARE members have requested over the past 3 1/2 years. We urge IBM to proceed with early implementation of such a language, using as a basis the report of the SHARE Advanced Language Committee."

It is interesting to note that this development followed very closely the resolution of the content of Fortran IV. This might indicate that the planned universality for System/360 had a considerable effect in promoting more universal language aims. The 1964 October issue of Datamation noted that:

"IBM PUTS EGGS IN NPL BASKET

"At the SHARE meeting in Philadelphia in August, IBM's Fred Brooks, called the father of the 360, gave the word: IBM is committing itself to the New Programming Language. Dr. Brooks said that Cobol and Fortran compilers for the System/360 were being provided 'principally for use with existing programs.'

"In other words, IBM thinks that NPL is the language of the future. One source estimates that within five years most IBM customers will be using NPL in preference to Cobol and Fortran, primarily because of the advantages of having the combination of features (scientific, commercial, real-time, etc.) all in one language.

"That IBM means business is clearly evident in the implementation plans. Language extensions in the Cobol and Fortran compilers were ruled out, with the exception of a few items like a sort verb and a report writer for Cobol, which, after all, were more or less standard features of other Cobols. Further, announced plans are for only two versions of Cobol (16K, 64K) and two of Fortran (16K and 256K) but four of NPL (16K, 64K, 256K, plus an 8K card version).

"IBM's position is that this emphasis is not coercion of its customers to accept NPL, but an estimate of what its customers will decide they want. The question is, how quickly will the users come to agree with IBM's judgment of what is good for them?"

Of course the name continued to be a problem. SHARE was cautioned that the N in NPL should not be taken to mean "new"; "nameless" would be a better interpretation. IBM's change to PL/I sought to overcome this immodest interpretation.

Extract: Definition and Maintenance

Definition and Maintenance

Once a language reaches usage beyond the powers of individual communication about it, there is a definite need for a definition and maintenance body. Cobol had the CODASYL committee, which is even now responsible for the language despite the existence of national and international standards bodies for programming languages. Fortran was more or less released by IBM to the mercies of the X3.4 committee of the U.S.A. Standards Institute. Algol had only paper strength until responsibility was assigned to the International Federation for Information Processing, Technical Committee 2.1. Even this is not sufficient without standard criteria for such languages, which are only now being adopted.

There was a minor attempt to widen the scope of PL/I at the SHARE XXIV meeting of 1965 March, when it was stated that X3.4 would be asked to consider the language for standardization. Unfortunately, it has not advanced very far on this road even by 1967 December. At the meeting just mentioned it was stated that, conforming to SHARE rules, only people from SHARE installations or IBM could be members of the project. Even the commercial users from another IBM user group (GUIDE) couldn't qualify.

Another major problem was the original seeming insistence by IBM that the processor on the computer, rather than the manual, would be the final arbiter and definer of what the language really was. Someone had forgotten the crucial question, "The processor for which version of the 360?", for these were written by different groups. The IBM Research Group in Vienna, under Dr. Zemanek, has now prepared a formal description of PL/I, even to semantic as well as syntactic definitions, which will aid immensely. However, the size of the volume required to contain this work is horrendous. In 1964 December, RCA said it would "implement NPL for its new series of computers when the language has been defined."

If it takes so many decades/centuries for a natural language to reach such an imperfect state that alternate reinforcing statements are often necessary, it should not be expected that an artificial language for computers, literal and presently incapable of understanding reinforcement, can be created in a short time scale. From initial statement of "This is it" we have now progressed to buttons worn at meetings such as "Would you believe PL/II?" and PL/I has gone through several discrete and major modifications.

in PL/I Bulletin, Issue 6, March 1968

[…]two interpretive conversational languages for the PDP-6 which fulfill the conversational on-line desk calculator aims of FORDESK. These are a version of advanced BASIC, the language developed by Dartmouth College and used by the G.E. time-sharing systems, and a language called AID which is based on the JOSS language developed by the RAND Corporation (J. C. Shaw, 1964).

COMPARISONS WITH BASIC AND AID

BASIC is designed for business and educational problem solving applications as well as for scientific work, while AID is designed chiefly for scientific and engineering problem solving. By comparison, FORDESK falls between the two, being better suited for most scientific work than BASIC and better suited for business type applications than AID. Also, as it is hoped eventually to implement all of FORTRAN IV, features such as double precision or complex arithmetic that exist in neither BASIC nor AID will become available in FORDESK.

FORTRAN is a harder language to learn than either BASIC or AID as it is further removed from normal English syntax. It is interesting to note, though, that current FORDESK I users do not seem inclined to spend the time to learn either BASIC or AID, preferring to use FORDESK, with which they are familiar.

In basic design all three languages are much the same, having arithmetic, jump, test and loop instructions, but each has some features not found in the others. BASIC has a powerful set of matrix operations which allow matrices to be manipulated in a similar fashion to scalar variables and also some powerful character manipulation instructions. AID has a set of functions PROD, SUM, MAX and MIN whose arguments can be specified in an iterative clause similar to mathematical notation but which a FORTRAN programmer would have to translate into a DO loop. AID and BASIC have a syntax better suited to conversational operation. The "up arrow" symbol for exponentiation in BASIC and AID is preferable to the "**" used in FORTRAN, while AID also has "| |" for absolute value and "[ ]" interchangeable with "( )" for greater legibility.

These features cannot be incorporated into FORDESK as they are not compatible with FORTRAN IV.

Variable names are restricted to one letter in AID and to one letter or one letter followed by a digit in BASIC, restrictions which limit the number of variables that can be used and prevent a user from making his names mnemonic. FORDESK I users found the restriction to one-letter variable names inconvenient for these reasons. However, FORDESK IV follows the FORTRAN convention of variable names being up to six alphanumeric characters long, with characters after the sixth ignored.

FORDESK has a more extensive set of arithmetic functions than either BASIC or AID and it goes one step further in its debugging facilities by allowing breakpoints to be set in a program. The current version of FORDESK occupies 5K of core, as does BASIC, but AID needs 11K of core, so the use of AID results in increased overheads in the swapping system. FORDESK is designed to operate re-entrantly to further reduce its swapping overheads.

FORTRAN statements typed into FORDESK can start immediately after the line number, so it is not necessary to space or tab across to represent the card image. Rather, statement numbers are delimited by a colon or a tab, and similarly comments are indicated by a "C" followed by a colon or tab. The line numbers provided by FORDESK are for reference in FORDESK commands only and are not used as FORTRAN statement numbers. Thus if resequencing of FORTRAN statements is requested, the actual program does not have to be modified. In addition, it will be possible to input normal FORTRAN programs from sources other than the teletype.
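The input convention described above can be sketched as a small parser. This is a minimal sketch, not FORDESK's own code: the names `FordeskLine`, `parse_fordesk_line` and `resequence` are hypothetical, and the assumed syntax is an optional all-digit statement number, or the letter C for a comment, followed by a colon or tab. The point it illustrates is that the FORDESK line number is stored apart from the FORTRAN statement number, so resequencing touches only the former.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class FordeskLine:
    line_no: int            # FORDESK reference number, used by commands only
    label: Optional[int]    # FORTRAN statement number, if one was typed
    is_comment: bool
    text: str               # statement or comment text, free of card columns

# Assumed prefix syntax: digits (a statement number) or C (a comment),
# terminated by a colon or a tab.
_PREFIX = re.compile(r"^\s*(\d+|[Cc])[:\t]\s*(.*)$")

def parse_fordesk_line(line_no: int, raw: str) -> FordeskLine:
    m = _PREFIX.match(raw)
    if m is None:
        # No prefix: the statement starts immediately, no column padding needed.
        return FordeskLine(line_no, None, False, raw.strip())
    prefix, rest = m.groups()
    if prefix in ("C", "c"):
        return FordeskLine(line_no, None, True, rest)
    return FordeskLine(line_no, int(prefix), False, rest.strip())

def resequence(lines: List[FordeskLine], start: int = 10, step: int = 10) -> List[FordeskLine]:
    # Renumber FORDESK line numbers only; FORTRAN statement numbers are untouched.
    return [FordeskLine(start + i * step, l.label, l.is_comment, l.text)
            for i, l in enumerate(lines)]
```

For example, `parse_fordesk_line(1, "10: X = A + B")` yields statement number 10 with text `X = A + B`, and resequencing a program leaves that 10 in place.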

Having received a statement, FORDESK interprets it immediately and if possible executes it. If an arithmetic statement is executed at this stage, the result is typed out immediately as a guide to the correctness of the user's program. Execution is suspended if a jump instruction is typed which would transfer control to a currently undefined statement number. Execution resumes when the statement number is defined. If an error is detected then FORDESK types a diagnostic message and asks for the same line to be retyped.

The user can return to command mode from text mode by pressing the ALTMODE key on the teletype instead of entering a FORTRAN statement.

in Proceedings of the Fourth Australian Computer Conference, Adelaide, South Australia, 1969

in [ACM] CACM 15(06) (June 1972)

in SIGPLAN Notices 14(04) April 1979 including The first ACM SIGPLAN conference on History of programming languages (HOPL) Los Angeles, CA, June 1-3, 1978


in SIGPLAN Notices 14(04) April 1979 including The first ACM SIGPLAN conference on History of programming languages (HOPL) Los Angeles, CA, June 1-3, 1978

GAM: This has to be the years 1954 and 1955. And around 1956, you got involved with the FORTRAN Project?

BH: We got wind of the FORTRAN Project late in 1955. Dr. Fernbach came into my office and said that John Backus was amenable to having someone from the Lab come to work with him. So, he asked me if I would go and, as usual, if you don't know how to do something, you say, "Yes."

GAM: That's great. That was a career-enhancing assignment. That's a really important thing.

BH: That's right. It's amazing how fast you learn what's going on. In less than three or four months, with the FORTRAN development group, I became a confirmed compiler advocate.

[...]

GAM: When you went to the FORTRAN project, did you have a specific assignment or was it just to go back there and tell them about the kind of large codes we were developing?

BH: No, no, no. Each person in the FORTRAN development group had a specific assignment. Holland Herrick, for example, was in charge of all tape I/O; that was his baby. To backtrack, FORTRAN was a table driven scheme.

GAM: Oh?

BH: Many tables. TEIFNO was a typical table of IF and GOTO entries. "TEIFNO" stood for the Table of External Form of the IF Number versus its internal form. There were also "close-up" tables to close everything when finished. There were just tables, tables, tables. Later on, they discovered that the way to compile really fast was to collect everything and assemble it in a single symbol table. But, in those days, we were still learning how to walk and so, initially, it was a table-driven scheme.

One person, Peter Sheridan, had the responsibility for parsing the computer's mathematical equation-generating instructions. That was one of the most important areas of the whole code. And so, in that fashion, each person was responsible for a specific part of the compiler. Roy Nutt, in Hartford, Connecticut, handled all the formatted I/O. He was head of the computing group in Hartford. He took care of all of the background work involving I/O and input/output statements. And so, each person had a hunk of the compiler. When I first got there, I noticed that IBM used the closed-shop technique. You programmed, but you didn't have to see a computer. If you had a problem to run, you would submit your deck and take your turn. You'd come back in the morning and the listings would be on your desk. And that's the way you worked.

BH: I'm forgetting my point here. Everybody had a specific task. And they knew that I was unfamiliar with their schemes, so they would just hand me the code listings. They'd say "Herrick has this problem and we need a flow chart for that". I would read his machine language code and turn it into a flow chart. Then we could see errors and we could make changes to the program. So, now he has something that will serve him as a programming aid without wasting his time chasing around randomly. I worked on what they called the "first-level" documentation. And I made the biggest mistake of my life by not bringing a copy of that home. Now you understand why I missed making my first million dollars.

GAM: Most of us are still working on that, don't worry about it.

BH: Each person was assigned to a major section of the compiler and each person had his own set of tables to work with. And I would put the pieces together and then, somewhere along the line, there was what we call a "Semantics Synthesizer", where it all comes together into a machine language code that would run on the machine. It was a fascinating experience.

GAM: It must have been exciting too.

BH: Oh, it was. They were the nicest group of people you've ever met, all of them. If a computer conference was being held in Washington, D.C., then IBM would notify us and send the whole group to the conference and we'd come back and continue working. It was quite an experience.

GAM: Well that's great. And when you got back from IBM?

BH: I should mention that, one day, John Backus, the head of the group, called me into his office and said he'd like to get my ideas of what I thought of the project, about FORTRAN and in general. I said, "Well, there's one thing that I worry about; you're only putting out a main program. There are no user-defined subprograms. At the Lawrence Livermore Laboratory," I said, "there are some programs with many subprograms, but you're just putting out a main code. You're allowing for system functions like square roots and sines and trigonometric functions, and so on, but we use lots of user-defined subprograms. Also, you're assuming that the program can be memory contained, but we almost never get the memory contained with the big problems at Livermore, so we use a program design that can be chewed up into modules." He said, "Well, I understand that, but in this particular case, I want to prove first that a computer can generate good code, and that a compiler can be written to generate a good program that rivals the efficiency that the hand programmer can produce." And he said, "That's going to be the important thing; I think you can lay it right there." He said, "Then we can get together later on and add all the other factors that need to be added in to make it even more convenient. But I've got to get over that first hurdle to demonstrate that the compiler can generate good code." And of course, he was right.

GAM: Also, on your first swing back there, you started the idea that if a variable's name began with i, j, k, l, m or n, it's an integer.

BH: Yes, and for all other letters, it was a floating point quantity by default. Well, later on, when we started doing our own compilers, all those features were added. Different groups came up with different ideas when they produced the second version of FORTRAN. I think even IBM allowed you to override the i, j, k, l, m, n convention.

GAM: Not at first.

BH: Not at first? OK. And that's what LRLTRAN at Livermore was all about.

GAM: That's one of the things.
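The default typing rule discussed above fits in a few lines. A sketch under stated assumptions: `implicit_type` and its `declared` override table are hypothetical names. The override stands in for the explicit type declarations that, per the interview, later dialects such as LRLTRAN added; the first FORTRAN had no such table.

```python
from typing import Dict, Optional

INTEGER_INITIALS = "IJKLMN"

def implicit_type(name: str, declared: Optional[Dict[str, str]] = None) -> str:
    """Return 'INTEGER' or 'REAL' for a FORTRAN variable name.

    `declared` is a hypothetical table of explicit declarations, modelling
    the override added by later dialects.
    """
    if not name or not name[0].isalpha():
        raise ValueError(f"not a FORTRAN variable name: {name!r}")
    key = name.upper()
    if declared and key in declared:
        return declared[key]          # an explicit declaration wins
    # Default rule: initial letter I through N means integer, else floating point.
    return "INTEGER" if key[0] in INTEGER_INITIALS else "REAL"
```

So `implicit_type("NSTEP")` gives `INTEGER` and `implicit_type("ALPHA")` gives `REAL`, while `implicit_type("KOUNT", {"KOUNT": "REAL"})` shows the kind of override the first FORTRAN did not allow.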

BH: Another was mixed-mode arithmetic. In the first FORTRAN, you couldn't do mixed-mode arithmetic. An expression had to be either all real or all integer. We got around those hurdles by allowing the programmer to declare any main variable to be integer or real. There was quite a bit of awkwardness in the original FORTRAN, such as using numbers for statement labels. You had to number your statements, as opposed to allowing alphanumeric statement labels. There were a number of other embellishments, such as IF-THEN-ELSE logic. In the 1970's, structured programs tried to avoid the use of statement labels. I don't know if the IF-THEN-ELSE logic was ever put into the IBM FORTRAN. We put it into ours.

GAM: I think an early real mistake in their first FORTRAN, which induced us to have bad habits, was the three-way branching IF statement. That wasn't very good.

BH: Oh, sure. Then they introduced IFs where you could have two branches. Less than or greater than.

GAM: Well, true or false.

BH: Yes, it was a logical step.
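The three-way branch GAM criticizes is the arithmetic IF, `IF (e) n1, n2, n3`, which jumps to statement n1, n2 or n3 according to whether e is negative, zero or positive. The sketch below contrasts it with the later logical IF used as `IF (cond) GO TO n`, which jumps when the condition is true and otherwise falls through. The function names are hypothetical, and the sketch models only which label is chosen.

```python
from typing import Optional

def arithmetic_if(value: float, neg: int, zero: int, pos: int) -> int:
    """FORTRAN  IF (e) n1, n2, n3 : choose a statement label by the sign of e."""
    if value < 0:
        return neg
    if value == 0:
        return zero
    return pos

def logical_if_goto(condition: bool, target: int) -> Optional[int]:
    """FORTRAN IV  IF (cond) GO TO n : jump on true, else fall through (None)."""
    return target if condition else None
```

To branch on X > Y with the arithmetic IF, a programmer wrote something like `IF (X - Y) 20, 20, 10`, repeating one label for two of the three cases; with X = 3.0 and Y = 5.0, `arithmetic_if(3.0 - 5.0, 20, 20, 10)` selects 20. The logical form states the test directly: `logical_if_goto(3.0 > 5.0, 10)` falls through.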

Extract: Anecdote

BH: But then we really got to going strong. We got to the point where we were all LRLTRAN/FORTRAN and had our own compiler group set up. But I've worked on just about every compiler after they came along from the time I started at the Laboratory.

Let's see, Sam Mendicino and George Sutherland did the first bootstrap. You know FORTRAN/FORTRAN. They used FORTRAN to write a FORTRAN file.

And that was a Godsend. The program meant that there were no more single language programs.

GAM: It grew into LRLTRAN eventually, didn't it?

BH: Yes. That's what I mentioned, at that point around 1965 onward we were sort of on our own. We had our own language, our own version of FORTRAN. We were compatible with standard FORTRAN and still had special features for the Lab.

GAM: I don't remember that. I remember for instance, you guys had something about the Divide that you rounded before it was completed or you didn't put it in the hierarchy of operations in the right place or something.

BH: Well, you'll probably find that in all the conversion estimates, whether you round or not. You probably will find that that's a problem that won't go away. In one part of the country they are rounding in some instance, in another part of the country they are not rounding in this case. That's just an incompatibility in the numbering base. For one thing, that's in the binary and when you chop a binary bit that's one-fourth of a decimal digit maybe.
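The rounding question can be illustrated with fixed-point binary division. This is a minimal sketch, not LRLTRAN's or IBM's actual divide: `divide_fixed` is a hypothetical helper that computes a/b to `frac_bits` binary places and either chops or rounds the last place. Two implementations that differ only in that choice disagree by one unit in the last bit.

```python
def divide_fixed(a: int, b: int, frac_bits: int, round_result: bool) -> int:
    """Return a/b as an integer count of units of 2**-frac_bits.

    Assumes a >= 0 and b > 0. Chopping discards the remainder; rounding
    adds one unit when the remainder is at least half of b (round half up).
    """
    if a < 0 or b <= 0:
        raise ValueError("this sketch assumes a >= 0 and b > 0")
    quotient, remainder = divmod(a << frac_bits, b)
    if round_result and 2 * remainder >= b:
        quotient += 1
    return quotient
```

For 2/3 with four fractional bits, chopping gives 10/16 = 0.625 and rounding gives 11/16 = 0.6875, against an exact value of 0.666... For scale, one binary place is worth about log10 2, roughly 0.3 of a decimal digit.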

in SIGPLAN Notices 14(04) April 1979 including The first ACM SIGPLAN conference on History of programming languages (HOPL) Los Angeles, CA, June 1-3, 1978

in SIGPLAN Notices 14(04) April 1979 including The first ACM SIGPLAN conference on History of programming languages (HOPL) Los Angeles, CA, June 1-3, 1978

I should talk a little about the history of MAD, because MAD was one of our major activities at the Computing Center. It goes back to the 650, when we had the IT compiler from Al Perlis. Because of the hardware constraints - mainly the size of storage and the fact that there were no index registers on the machine - IT had to have some severe limitations on what could be expressed in the language. Arden and Graham decided to take advantage of index registers when we finally got them on the 650 hardware. We also began to understand compilers a little better, and they decided to generalize the language a little. In 1957, they wrote a compiler called GAT, the Generalized Algebraic Translator. Perlis took GAT and added some things, and called it GATE - GAT Extended.

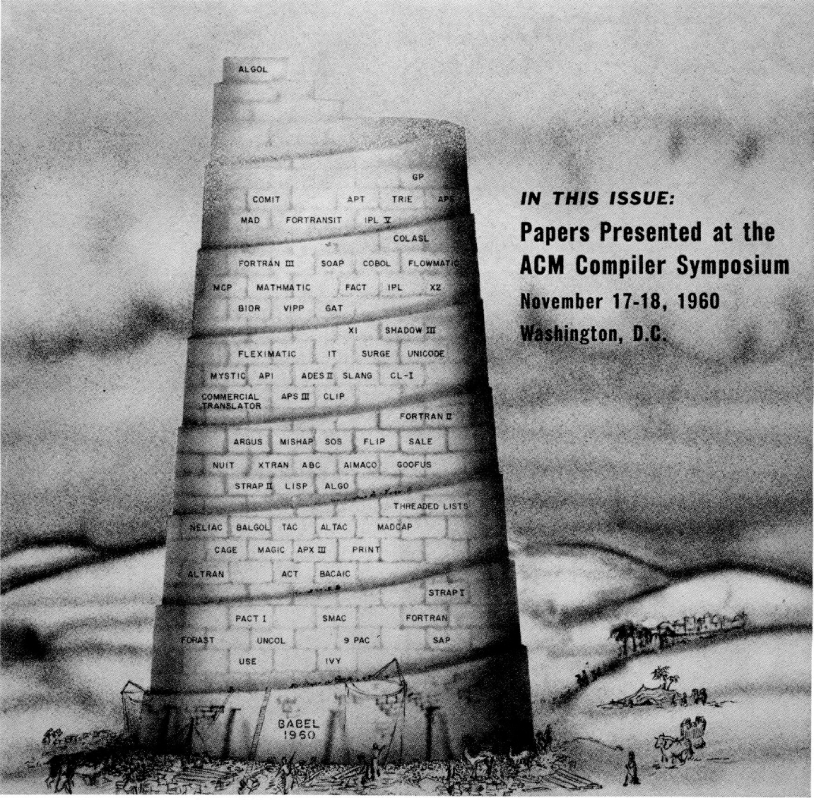

GAT was not used very long at Michigan. It was okay, but there was another development in 1958. The ACM [Association for Computing Machinery] and a European group cooperatively announced a "standard" language called IAL, the International Algebraic Language, later changed to Algol, Algorithmic Language, specifically Algol 58. They published a description of the language, and noted: "Please everyone, implement this. Let's find out what's wrong with it. In two years we'll meet again and make corrections to the language," in the hope that everyone would use this one wonderful language universally instead of the several hundred already developing all over the world. The cover of an issue of the ACM Communications back then showed a Tower of Babel with all the different languages on it.

John Carr was very active in this international process. When he returned to Michigan from the 1958 meeting with the Europeans, he said:

We've got to do an Algol 58 compiler. To help the process, let's find out what's wrong with the language. We know how to write language compilers, we've already worked with IT, and we've done GAT. Let's see if we can help.

So we decided to do an Algol 58 compiler. I worked with Arden and Graham; Carr was involved a little but left Michigan in 1959. There were some things wrong - foolish inclusions, some things very difficult to do - with the Algol 58 language specification. When you write a compiler, you have to make a number of decisions. By the time we designed the language that we thought would be worth doing and for which we could do a compiler, we couldn't call it Algol any more; it really was different. That's when we adopted the name MAD, for the Michigan Algorithm Decoder. We had some funny interaction with the Mad Magazine people, when we asked for permission to use the name MAD. In a very funny letter, they told us that they would take us to court and everything else, but ended the threat with a P.S. at the bottom - "Sure, go ahead." Unfortunately, that letter is lost.

So in 1959, we decided to write a compiler, and at first it was Arden and Graham who did this. I helped, and watched, but it was mainly their work because they'd worked on GAT together. At some point I told them I wanted to get more directly involved. Arden was doing the back end of the compiler; Graham was doing the front end. We needed someone to do the middle part that would make everything flow, and provide all the tables and so on. I said, "Fine. That's my part." So Graham did part 1, Galler did part 2, and Arden did part 3.

A few years later when Bob Graham left to go to the University of Massachusetts, I took over part 1. So I had parts 1 and 2, and Arden had 3, and we kept on that way for several years. We did the MAD compiler in 1959 and 1960, and I think it was 1960 when we went to that famous Share meeting and announced that we had a compiler that was in many ways better and faster than Fortran. People still tell me they remember my standing up at that meeting and saying at one of the Fortran discussions, "This is all unnecessary, what you're arguing about here. Come to our session where we talk about MAD, and you'll see why."

Q: Did they think that you were being very brash, because you were so young?

A: Of course. Who would challenge IBM? I remember one time, a little bit later, we had a visit from a man from IBM. He told us that they were very excited at IBM because they had discovered how to have the computer do some useful work during the 20-second rewind of the tape in the middle of the Fortran translation process on the IBM 704, and we smiled. He said, "Why are you smiling?" And we said, "That's sort of funny, because the whole MAD translation takes one second." And here he was trying to find something useful for the computer to do during the 20-second rewind in the middle of their whole Fortran processor.

In developing MAD, we were able to get the source listings for Fortran on the 704. Bob Graham studied those listings to see how they used the computer. The 704 computer, at that time, had 4,000 36-bit words of core storage and 8,000 words of drum storage. The way the IBM people overcame the small 4,000-word core storage was to store their tables on the drum. They did a lot of table lookup on the drum, recognizing one word for each revolution of the drum. If that wasn't the word they wanted, then they'd wait until it came around, and they'd look at the next word.

Graham recognized this and said, "That's one of the main reasons they're slow, because there's a lot of table look-up stuff in any compiler. You look up the symbols, you look up the addresses, you look up the types of variables, and so on."

So we said, fine. The way to organize a compiler then is to use the drum, but to use it the way drums ought to be used. That is, we put data out there for temporary storage and bring it back only once, when we need it. So we developed all of our tables in core. When they overflowed, we stored them out on the drum. That is, part 1 did all of that. Part 2 brought the tables in, reworked and combined them, and put them back on the drum, and part 3 would call in each table when it needed it. We did no lookup on the drum, and we were able to do the entire translation in under a second.

It was because of that that MIT used MAD when they developed CTSS, the Compatible Time-Sharing System, and needed a fast compiler for student use. It was their in-core translator for many years.
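The difference Graham identified can be put as a rough cost model counting drum revolutions. A sketch under stated assumptions: following the description above, examining one table word on the drum costs one revolution, while the MAD-style approach reads the whole table into core once and then searches it there. `words_per_track` (how many words one bulk read transfers per revolution) is a hypothetical parameter, not a 704 drum specification, and the function names are illustrative.

```python
import math
from typing import List, Optional, Sequence, Tuple

def probe_on_drum(table: Sequence[str],
                  queries: Sequence[str]) -> Tuple[int, List[Optional[int]]]:
    """Linear search with the table left on the drum.

    Assumption: each word examined costs one full drum revolution.
    """
    revolutions = 0
    found: List[Optional[int]] = []
    for q in queries:
        position = None
        for i, entry in enumerate(table):
            revolutions += 1              # wait for the next word to come around
            if entry == q:
                position = i
                break
        found.append(position)
    return revolutions, found

def load_then_lookup(table: Sequence[str], queries: Sequence[str],
                     words_per_track: int) -> Tuple[int, List[Optional[int]]]:
    """Bring the table into core once, then search it there.

    Assumptions: a bulk read moves `words_per_track` words per revolution,
    and table entries are unique.
    """
    revolutions = math.ceil(len(table) / words_per_track)   # one bulk read
    index = {entry: i for i, entry in enumerate(table)}      # in-core table
    return revolutions, [index.get(q) for q in queries]      # no drum time
```

With the table A, B, C, D and the queries C, A and D, `probe_on_drum` costs 3 + 1 + 4 = 8 revolutions, while `load_then_lookup(..., words_per_track=50)` costs one; the gap widens with every further lookup, which is the effect the MAD team exploited.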

MAD was successfully completed in 1961. Our campus used MAD until the middle of 1965, when we replaced the 7090 computer with the System/360. During the last four years of MAD's use, we found no additional bugs; it was a very good compiler. One important thing about MAD was that we had a number of language innovations, and notational innovations, some of which were picked up by the Fortran group to put into Fortran IV and its successors later on. They never really advertised the fact that they got ideas, and some important notation, from MAD, but they told me that privately.

We published a number of papers about MAD and its innovations. One important thing we had was a language definition facility. People now refer to it as an extensible language facility. It was useful and important, and it worked from 1961 on, but somehow we didn't appreciate how important it was, so we didn't really publish anything about it until about 1969. There's a lot of work in extensible languages now, and unfortunately, not a lot of people credit the work we did, partly because we delayed publishing for so long. While people knew about it and built on it, there was no paper they could cite.

in IEEE Annals of the History of Computing, 23(1) January 2001