FACT(ID:68/fac006)

Fully Automated Compiling Technique

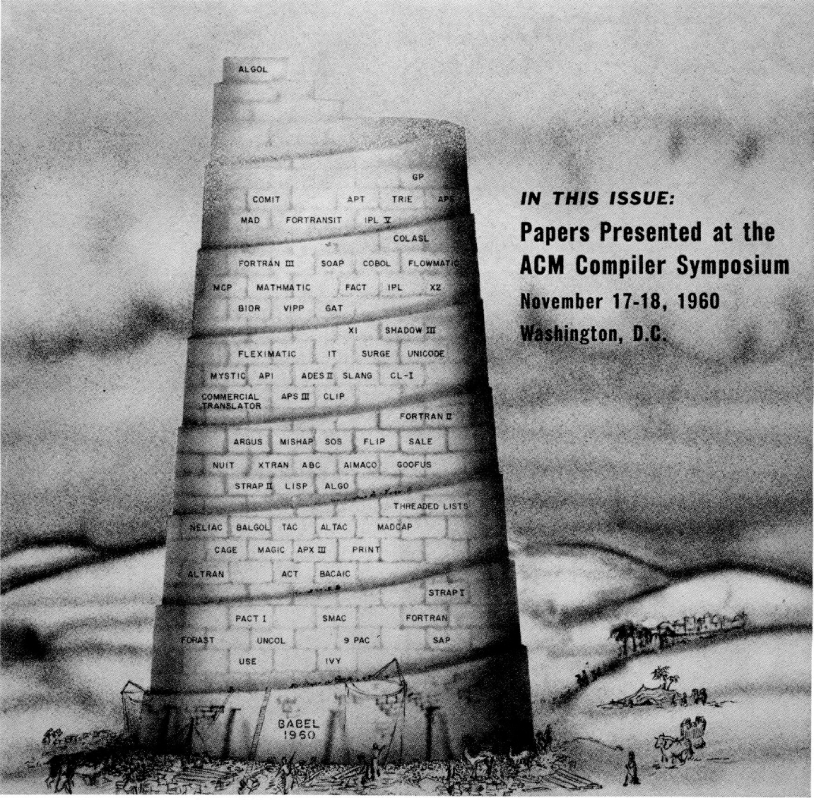

for Fully Automated Compiling Technique. Developed 1958 by Computer Sciences Corporation for Honeywell 800. (AKA Honeywell-800 Business Compiler.)

According to Rosen (1964), the project began when Honeywell contracted to Computer Usage Co for a FORTRAN compiler. That company formed Computer Sciences Corporation, and (drawing on current data generator languages like SURGE, 9PAC, FLOW-MATIC, GE Hanford and Commercial Translator) developed a data-centered language that exceeded what was to become the COBOL spec.

Important as the first English-like programming language; it exerted considerable influence on COBOL. Most features not included in the 1960 COBOL spec ended up in 1962 COBOL Extended.

Also significant in that it was the first time that a hardware company contracted out the development of a language (and that company was formed for the purpose).

Finally, significant in that it has a segmented loader, also probably the first, which permitted the program to be loaded one segment at a time. This in turn meant an efficient code execution environment, as many programs could be run at the same time.

Places

People: Hardware:

- Honeywell 800 Honeywell

Related languages

| From | | To | Relationship |
|---|---|---|---|
| 9PAC | => | FACT | Influence |
| Algebraic Compiler | => | FACT | Sibling |
| ARGUS | => | FACT | Sibling |
| COMTRAN | => | FACT | Influence |
| FLOW-MATIC | => | FACT | Influence |
| Hanford Mark II | => | FACT | Influence |
| SURGE | => | FACT | Influence |
| FACT | => | COBOL | Evolution of |
| FACT | => | COBOL-61 Extended | Influence |
| FACT | => | Honeywell-800 Business Compiler | Alias |

References:

Announcing FACT:

Fully Automatic Compiling Technique

New Honeywell 800 Business Compiler Is First To Provide For Input Editing, Sorting, Processing Variable-Length Records, And Report Writing

FACT (fully automatic compiling technique) is a complete automatic programming system for the highly advanced Honeywell 800 transistorized data processing system. It is designed to simplify the preparation of business data processing programs by providing a con-cement problem-oriented language, a highly favorable source-statement-to-machine-instructions ratio, adaptability to a wide range of equipment configurations, and more data processing functions than ever before available in a compiler.

FACT LANGUAGE SHORTENS THE GAP BETWEEN MAN AND MACHINE

FACT lexicon is made up of familiar words of everyday business usage such as FILE, ENTRY, PROCEDURE, REPORT, DELETE and UPDATE. Source programs are initiated by combining lexicon words with the names of data units (files, entries, fields) to form ordinary English sentences and paragraphs. FACT accepts programs stated in this language and automatically creates the detailed machine language programs required to direct the data processing system in its work.

FACT also provides complete printed information about its own operation, including program listings, memory assignments and diagnostic data pertaining to source statement errors encountered during compilation. All of these outputs and aids are expressed in language easily understood by the programmer.

FACT WORKS WITH SMALL AS WELL AS LARGE SYSTEM CONFIGURATIONS

FACT can compile programs using as few as four magnetic tape units and 4096 words of memory. It can take advantage of any additional equipment that may be available and programs can be compiled on one Honeywell 800 for execution on any other Honeywell 800 system.

The programmer uses environment statements to describe the equipment array available for compilation as well as the array on which the object program is to run. Each object program is compiled to operate as efficiently as possible with the allotted machine units.

WITH FACT, FEWER PEOPLE WRITE MORE PROGRAMS IN LESS TIME

FACT may be used to prepare many different types of programs at many different levels of complexity including: input card reading and editing, creation of data files, data sorting, arithmetic computations, updating of data files, and generation of printed or punched reports based on input data, file data or program results.

The resulting compression of programming time and effort means that a given amount of work can be done with a smaller staff, jobs can be placed on the data processing system faster, programs can be modified more easily to meet changing requirements, and the data processor can be used profitably on a wider range of jobs.

HONEYWELL 800 CUSTOMERS ARE WRITING PROGRAMS IN FACT LANGUAGE RIGHT NOW

Honeywell Service as well as Honeywell EDP equipment is setting the pace for the industry as evidenced by the fact that customers for Honeywell 800 systems are even now writing programs in FACT language. Experts in the field of automatic programming, including compiler creators as well as users, have quickly recognized the outstanding characteristics of the Honeywell business compiler. If you would like to make your own comparison of FACT features with those of any other compiler, write for a copy of the new 94-page manual, "FACT - a new business language." Address your request to: Minneapolis-Honeywell, Datamatic Division, Wellesley Hills 81, Massachusetts, or Honeywell Controls Limited, Toronto 17, Ontario.

in [ACM] CACM 3(06) June 1960

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (2) 1961 Pergamon Press, Oxford

Honeywell FACT

An advanced business compiler for the Honeywell 800, called FACT, is the acknowledged leader in its field. It is the first compiler to take into account all facets of data processing including editing input information, sorting, creating files, processing variable-length records and generating output reports. Due to the exceptional breadth and power of FACT, an unprecedented percentage of business operations can be programmed for a Honeywell 800 with this system, and in a fraction of the time previously required.

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (2) 1961 Pergamon Press, Oxford

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (2) 1961 Pergamon Press, Oxford

The paper by J. Iliffe on "The Use of the GENIE System in Numerical Calculation" describes a mathematical programming language for the Rice University computer. The GENIE language bears a strong resemblance both to ALGOL and to the ADES language developed several years ago by the reviewer. GENIE has some of the best features of both languages. It also embodies some ideas not possessed by either of these systems or any other system of this kind. Perhaps the most important departure from ALGOL is the extension of the domain of possible values assigned to the symbols in the language. Whereas in ALGOL values are limited to real, integer and Boolean, GENIE permits as additional domains of values other symbol sets and instruction sets. Thus, the value of the expression P(ll, a) could be a set of single-address machine instructions for performing the addition ~ + v. The claim is also made that analytical processes can be performed as well as numerical processes. Another feature, although not original in GENIE, is worth mentioning. This is the use of a table of codewords for assignment of storage to arrays. The use of codewords rather than the ALGOL array declarations (or the dimension statements in FORTRAN) permits a much more flexible and efficient handling of storage assignments for arrays both in the source language and in the compiler.

Extract: Review

The paper by R. F. Clippinger titled "FACT -- A Business Compiler: Description and Comparison with COBOL and Commercial Translator," must be singled out as an example of the kind of paper which a compendium such as this should strive to present. Clippinger's excellent paper does much more than describe the FACT system for the Honeywell 800. Avoiding the dreary style of the instruction manual which one finds in most papers on programming, Clippinger gives a well-reasoned and carefully thought-out discussion of the general aspects of business data processing, shows how FACT treats each major problem area and explains the technical factors which influenced the particular technique chosen. At the same time, he manages to give a good account of the details of FACT by referring to 17 exhibits placed.

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (2) 1961 Pergamon Press, Oxford

in [ACM] CACM 4(01) (Jan 1961)

Univac LARC is designed for large-scale business data processing as well as scientific computing. This includes any problems requiring large amounts of input/output and extremely fast computing, such as data retrieval, linear programming, language translation, atomic codes, equipment design, large-scale customer accounting and billing, etc.

University of California

Lawrence Radiation Laboratory

Located at Livermore, California, the system is used for the solution of differential equations.

[?]

Outstanding features are ultra high computing speeds and the input-output control completely independent of computing. Due to the Univac LARC's unusual design features, it is possible to adapt any source of input/output to the Univac LARC. It combines the advantages of Solid State components, modular construction, overlapping operations, automatic error correction and a very fast and a very large memory system.

[?]

Outstanding features include a two computer system (arithmetic, input-output processor); decimal fixed or floating point with provisions for double precision arithmetic; single bit error detection of information in transmission and arithmetic operation; and balanced ratio of high speed auxiliary storage with core storage.

Unique system advantages include a two computer system, which allows versatility and flexibility for handling input-output equipment, and program interrupt on programmer contingency and machine error, which allows greater ease in programming.

in [ACM] CACM 4(01) (Jan 1961)

This is another language to be offered for universal acceptance although it was developed by a single company. It is contemporary with Cobol, which it resembles in many ways, but does perhaps allow a greater flexibility of expression; it lacks, however, function definition. The manual studied was not as detailed as that for Cobol.

in [ACM] CACM 4(01) (Jan 1961)

The "commercial" papers are mostly devoted to COBOL. There is a detailed description, by Jean E. Sammet, and a paper of general views on COBOL, by the same author, also "A critical discussion of COBOL," by several members of the British Computer Society. A long paper by R. F. Clippinger describes FACT, a commercial language developed for the HONEYWELL 800, and compares it in considerable detail with COBOL and IBM Commercial Translator, aiming to show the superiority of FACT over these languages. "The growth of a commercial programming language" by H. D. Baeker describes SEAL, a language developed for the Stantec Data Processing System, and again aims to demonstrate its superiority over COBOL.

in The Computer Bulletin June 1962

in [ACM] CACM 6(03) (Mar 1963)

in The Computer Journal 5(1) April 1962

COBOL was developed by the COBOL Committee, which is a subcommittee of a larger committee called the "Conference on Data System Languages" which has an Executive Committee and, in addition to the COBOL Committee, a Development Committee. Within the Development Committee are two working groups called the Language Structure Group (whose members are Roy Goldfinger of IBM, Robert Bosak of SDC, Carey Dobbs of Sperry Rand, Renee Jasper of Navy Management Office, William Keating of National Cash Register, George Kendrick of General Electric, and Jack Porter of Mitre Corporation), and the Systems Group. Both of these groups are interested in going beyond COBOL to further simplify the work of problem preparation. The Systems Group has concentrated on an approach which represents a generalization of TABSOL in GE's GECOM. The driving force behind this effort is Burt Grad.

The Language Structure Group has taken a different approach. It notes that FACT, COBOL, and other current data-processing compilers are "procedure-oriented," that is, they require a solution to the data-processing problem to be worked out in detail, and they are geared to the magnetic-tape computer and assume that information is available in files on magnetic tape, sorted according to some keys. The Language Structure Group felt that an information algebra could be defined which would permit the definition of a data-processing problem without postulating a solution. It felt that if this point were reached, then certain classes of problems could be handled by compilers that would, in effect, invent the necessary procedures for the problem solution.

One sees a trend in this direction in FLOW-MATIC, COBOL, Commercial Translator, and FACT, if one notes that a sort can be specified in terms of the input file and the output file, with no discussion of the technique required to create strings of ordered items and merge these strings. Similarly, a report writer specifies where the information is to come from and how it is to be arranged on the printed page, and the compiler generates a program which accomplishes the purpose without the programmer having to supply the details. The FACT update verb specifies a couple of input files and criteria for matching, and FACT generates a program which does the housekeeping of reading records from both files, matching them and going to appropriate routines depending on where there is a match, an extra item, a missing item, etc. A careful study of existing compilers will reveal many areas where the compiler supplies a substantial program as a result of having been given some information about data and interrelationships between the data. The Language Structure Group has, by no means, provided a technique of solving problems once they are stated in abstract form. Indeed, it is clear that this problem is not soluble in this much generality. All the Language Structure Group claims is to provide an information algebra which may serve as a stimulus to the development of compilers with somewhat larger abilities to invent solutions in restricted cases. The work of the Language Structure Group will be described in detail in a paper which will appear shortly in the Communications of the ACM.

Extract: Basic concepts

Basic concepts

The algebra is built on three undefined concepts: ENTITY, PROPERTY and VALUE. These concepts are related by the following two rules which are used as the basis of two specific postulates:

(a) Each PROPERTY has a set of VALUES associated with it.

(b) There is one and only one VALUE associated with each PROPERTY of each ENTITY.

The data of data processing are collections of VALUES of certain selected PROPERTIES of certain selected ENTITIES. For example, in a payroll application, one class of ENTITIES are the employees. Some of the PROPERTIES which may be selected for inclusion are EMPLOYEE NUMBER, NAME, SEX and PAY RATE; a payroll file would then contain a set of VALUES of these PROPERTIES for each ENTITY.
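The two rules can be illustrated with a minimal Python sketch. The employee records, the dictionary layout and the helper `satisfies_rule_b` are hypothetical and chosen only for illustration; they are not part of the Information Algebra itself.

```python
# Hypothetical payroll data; names and figures are illustrative only.
PROPERTIES = ("EMPLOYEE NUMBER", "NAME", "SEX", "PAY RATE")

# Each ENTITY (an employee) maps every selected PROPERTY to exactly one VALUE.
employees = {
    "e1": {"EMPLOYEE NUMBER": 1001, "NAME": "A. SMITH", "SEX": "F", "PAY RATE": 2.50},
    "e2": {"EMPLOYEE NUMBER": 1002, "NAME": "B. JONES", "SEX": "M", "PAY RATE": 3.00},
}


def satisfies_rule_b(entity_values, properties=PROPERTIES):
    """Rule (b): one and only one VALUE for each PROPERTY of the ENTITY."""
    return set(entity_values) == set(properties)


# The payroll file is the collection of VALUES of the selected PROPERTIES,
# one tuple per ENTITY.
payroll_file = [tuple(e[p] for p in PROPERTIES) for e in employees.values()]
```

A dictionary key can hold only one value, so the "one and only one" half of rule (b) is enforced by the data structure; the helper only checks that no selected property is absent.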

Extract: Property spaces

Property spaces

Following the practice of modern algebra, the Information Algebra deals with sets of points in a space. The set of VALUES assigned to a PROPERTY is called the PROPERTY VALUE SET. Each PROPERTY VALUE SET contains at least two VALUES: U (undefined, not relevant) and II (missing, relevant, but not known). For example, the draft status of a female employee would be undefined and not relevant, whereas if its value were missing for a male employee it would be relevant but not known. Several definitions are introduced:

(1) A co-ordinate set (Q) is a finite set of distinct properties.

(2) The null co-ordinate set contains no properties.

(3) Two co-ordinate sets are equivalent if they contain exactly the same properties.

(4) The property space (P) of a co-ordinate set (Q) is the cartesian product $P = V_1 \times V_2 \times V_3 \times \dots \times V_n$, where $V_i$ is the property value set assigned to the $i$th property of Q. Each point (p) of the property space will be represented by an n-tuple $p = (a_1, a_2, a_3, \dots, a_n)$, where $a_i$ is some value from $V_i$. The ordering of properties in the set is for convenience only. If $n = 1$, then $P = V_1$. If $n = 0$, then P is the Null Space.

(5) The datum point (d) of an entity (e) in a property space (P) is a point of P such that if $d = (a_1, a_2, a_3, \dots, a_n)$, then $a_i$ is the value assigned to e from the $i$th property value set of P for $i = 1, 2, 3, \dots, n$. (d) is the representation of (e) in (P). Thus, only a small subset of the points in a property space are datum points. Every entity has exactly one datum point in a given property space.
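Definitions (4) and (5) can be sketched in Python as follows. The two properties, their value sets (including the draft classifications) and the function names are assumptions made for illustration; the strings "U" and "II" simply stand in for the two special values as printed above. Treating an unrecorded property as missing is also an assumption of this sketch.

```python
from itertools import product

UNDEFINED = "U"   # undefined, not relevant
MISSING = "II"    # missing, relevant, but not known

# Hypothetical property value sets V_1 and V_2 for a co-ordinate set Q.
value_sets = {
    "SEX": {"F", "M", UNDEFINED, MISSING},
    "DRAFT STATUS": {"1-A", "4-F", UNDEFINED, MISSING},
}
Q = tuple(value_sets)  # the ordering of properties is for convenience only


def property_space(q):
    """Cartesian product of the value sets of q, as a set of n-tuples."""
    return set(product(*(value_sets[prop] for prop in q)))


def datum_point(entity, q):
    """The single point of the property space that represents the entity."""
    # Assumption: a property with no recorded value is treated as MISSING.
    return tuple(entity.get(prop, MISSING) for prop in q)


P = property_space(Q)
d = datum_point({"SEX": "F", "DRAFT STATUS": UNDEFINED}, Q)
```

With four values in each of the two value sets, the space holds sixteen points, of which only those that actually represent an entity are datum points.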

Extract: Lines and functions of lines

Lines and functions of lines

A line (L) is an ordered set of points chosen from P.

The span (n) of the line is the number of points comprising the line. A line L of span n is written as: $L = (p_1, p_2, \dots, p_n)$. The term line is introduced to provide a generic term for a set of points which are related. In a payroll application the datum points might be individual records for each person for five working days of the week. These five records plotted in the property space would represent a line of span five.

A function of lines (FOL) is a mapping that assigns one and only one value to each line in P. The set of distinct values assigned by an FOL is the value set of the FOL. It is convenient to write an FOL in the functional form $f(X)$, where f is the FOL and X is the line. In the example of the five points representing work records for five days work, a FOL for such a line might be the weekly gross pay of an individual, which would be the hours worked times payrate summed over the five days of the week. An Ordinal FOL (OFOL) is an FOL whose value set is contained within some defining set for which there exists a relational operator R which is irreflexive, asymmetric, and transitive. Such an operator is "less than" on the set of real numbers.

Extract: Areas and functions of areas

Areas and functions of areas

An area is any subset of the property space P; thus the representation of a file in the property space is an area. A function of an area (FOA) is a mapping that assigns one and only one value to each area. The set of distinct values assigned by an FOA is defined to be the value set of the FOA. It is convenient to write an FOA in the functional form $f(X)$, where f is the FOA and X is the area.
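As a rough illustration of lines, FOLs and FOAs, the sketch below models the weekly gross pay example in Python. The record layout (day, hours worked, pay rate), the figures and the function names are all hypothetical.

```python
# Each point is (day, hours worked, pay rate); the figures are made up.
week = [
    ("MON", 8, 2.5),
    ("TUE", 8, 2.5),
    ("WED", 7, 2.5),
    ("THU", 8, 2.5),
    ("FRI", 6, 2.5),
]  # an ordered set of points: a line of span five


def span(line):
    """Number of points comprising the line."""
    return len(line)


def weekly_gross_pay(line):
    """An FOL: assigns exactly one value (gross pay) to a line."""
    return sum(hours * rate for _, hours, rate in line)


def record_count(area):
    """An FOA: assigns exactly one value (a count) to an area."""
    return len(area)


area = set(week)  # an area is an unordered subset of the property space
```

Because gross pay is a real number and "less than" orders the reals, `weekly_gross_pay` would also qualify as an Ordinal FOL in the sense defined above.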

Extract: Bundles and functions of bundles

Bundles and functions of bundles

In data-processing applications, related data from several sources must be grouped. Various types of functions can be applied to these data to define new information. For example, files are merged to create a new file. An area set /A of order n is an ordered n-tuple of areas $(A_1, A_2, \dots, A_n) = /A$. Area set is a generic term for a collection of areas in which the order is significant. It consists of one or more areas which are considered simultaneously; for example, a transaction file and master file form an area set of order 2.

Definition:

The Bundle $B = B(b, /A)$ of an area set /A for a selection OFOL b is the set of all lines L such that if

(a) $/A = (A_1, A_2, \dots, A_n)$ and

(b) $L = (p_1, p_2, \dots, p_n)$, where $p_i$ is a point of $A_i$ for $i = 1, 2, \dots, n$,

then $b(L) = \text{True}$.

A bundle thus consists of a set of lines each of order n where n is the order of the area set. Each line in the bundle contains one, and only one, point from each area. The concept of bundles gives us a method of conceptually linking points from different areas so that they may be considered jointly. As an example, consider two areas whose names are "Master File" and "Transaction File," each containing points with the property Part Number. The bundling function is the Part Number. The lines of the bundle are the pairs of points representing one Master File record and one Transaction File record with the same Part Number.

A function of a bundle (FOB) is a mapping that assigns an area to a bundle such that

(a) there is a many-to-one correspondence between the lines in the bundle and the points in the area;

(b) the value of each property of each point in the area is defined by an FOL of the corresponding line of the bundle;

(c) the value set of such an FOL must be a subset of the corresponding property value set.

The function of a bundle assigns a point to each line of the bundle; thus a new Master File record must be assigned to an old master file record and a matching transaction file record.
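The Master File and Transaction File example can be sketched in Python as below. The record layout (part number, quantity), the sample data and the function names are hypothetical, and the brute-force enumeration of every candidate line is chosen for clarity only; it is not how a tape-oriented compiler of the period would have matched sorted files.

```python
from itertools import product

# Hypothetical records: (part number, quantity).
master_file = [("P1", 100), ("P2", 40), ("P3", 7)]
transaction_file = [("P1", -30), ("P3", 5), ("P9", 1)]


def same_part_number(line):
    """Selection function b: true when every point shares one Part Number."""
    return len({point[0] for point in line}) == 1


def bundle(b, area_set):
    """All lines taking one point from each area, in order, for which b holds."""
    return [line for line in product(*area_set) if b(line)]


def new_master(line):
    """An FOB sketch: assigns one new Master File point to a line of the bundle."""
    (part, on_hand), (_, change) = line
    return (part, on_hand + change)


lines = bundle(same_part_number, (master_file, transaction_file))
new_master_file = [new_master(line) for line in lines]
```

Parts P2 (no transaction) and P9 (no master record) produce no line in the bundle, so a practical update would still need separate handling for unmatched items.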

Extract: Glumps and functions of glumps

Glumps and functions of glumps

If A is an area and g is an FOL defined over lines of span 1 in A, then a Glump $G = G(g, A)$ of an area A for an FOL g is a partition of A by g such that an element of this partition consists of all points of A that have identical values for g. The function g will be called the Glumping Function for G. The concept of a glump does not imply an ordering either of points within an element, or of elements within a glump. As an example, let a back order consist of points with the three non-null properties: Part Number, Quantity Ordered, and Date. Define a glumping function to be Part Number. Each element of this glump consists of all orders for the same part. Different glump elements may contain different numbers of points. A function of a glump (FOG) is a mapping that assigns an area to a glump such that

(a) there is a many-to-one correspondence between the elements of the glump and the points of the area;

(b) the value of each property of a point in the assigned area is defined by an FOA of the corresponding element in the glump;

(c) the value set of the FOA must be a subset of the corresponding property value set.
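The back-order example lends itself to a short Python sketch of a glump and a function of a glump. The sample orders, dates and function names are hypothetical; lists are used for convenience even though a glump implies no ordering.

```python
from collections import defaultdict

# Hypothetical back-order points: (part number, quantity ordered, date).
back_orders = [
    ("P1", 10, "1962-03-01"),
    ("P2", 4, "1962-03-01"),
    ("P1", 6, "1962-03-05"),
]


def part_number(point):
    """Glumping function g: the Part Number of a single point."""
    return point[0]


def glump(g, area):
    """Partition the area so that points with identical values of g share an element."""
    elements = defaultdict(list)
    for point in area:
        elements[g(point)].append(point)
    return list(elements.values())


def total_ordered(element):
    """An FOG sketch: one summary point (part number, total quantity) per element."""
    return (part_number(element[0]), sum(qty for _, qty, _ in element))


elements = glump(part_number, back_orders)
summary = [total_ordered(element) for element in elements]
```

Here the two orders for part P1 fall into one element and the single order for P2 into another, which shows that elements may hold different numbers of points.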

in The Computer Journal 5(3) October 1962

The properties of data

It is, of course, the available properties of the data which to a large extent determine the power of an automatic programming system, and distinguish commercial from mathematical languages.

Consider the function of moving data within the internal store. In a mathematical language the problem is trivial because the unit which may be moved is very restricted, often to the contents of a single machine word. But in a commercial language this limitation is not acceptable. There, data units will occur in a variety of shapes and sizes, for example:

i) Fixed Length Units (i.e. those which on each occurrence will always be of the same length) may vary widely in size and will tend not to fit comfortably into a given number of words or other physical unit of the machine. Generally the move will be performed by a simple loop, but there are some awkward points such as what to fill in the destination if the source is the smaller in size;

ii) Static Variable Length Units (i.e. those whose length may vary when they are individually created but will not change subsequently) are more difficult to handle. Essentially the loop will have two controlling variables whose value will be determined at the moment of execution. There are again awkward points such as the detection of overflow in the destination (and deciding what to do when it occurs, since this will only be discovered at run time);

iii) Dynamically Variable Length Units (i.e. those which expand and contract to fit the data placed in them) are even more difficult. They have all the problems of (ii), together with the need to find and allot space when they expand.

It is clear, therefore, that a simple MOVE is less innocuous than it might seem at first. Actually the above remarks assumed that it was not possible to move data between different classes of units. The absence of this restriction, and the performance of editing functions during the process, can make the whole thing very complicated for the compiler indeed.
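The three classes of unit can be contrasted in a small Python sketch. The function and class names are hypothetical, and the policies shown (space fill, truncation of an over-long source, raising an error on overflow) are assumptions made for illustration; the text leaves those choices open.

```python
def move_fixed(source: str, dest_len: int, fill: str = " ") -> str:
    """Fixed length unit: pad a short source with fill, truncate a long one."""
    return source[:dest_len].ljust(dest_len, fill)


def move_static_variable(source: str, dest_capacity: int) -> str:
    """Static variable length unit: overflow can only be detected at run time."""
    if len(source) > dest_capacity:
        raise OverflowError(
            f"source of {len(source)} characters exceeds capacity {dest_capacity}"
        )
    return source


class DynamicUnit:
    """Dynamically variable length unit: grows and shrinks to fit its data."""

    def __init__(self) -> None:
        self.data = ""

    def move(self, source: str) -> None:
        # Python finds and allots the space here; the compilers discussed
        # above had to generate code to do so themselves.
        self.data = source
```

For instance, `move_fixed("AB", 4)` pads the destination to four characters, while `move_static_variable("ABCDEF", 4)` fails only when the program actually runs.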

The properties of data will have a similar influence on most of the other operators or verbs in the language.

This has particular significance when the desired attribute is contrary to that pertaining on the actual machine. Thus arithmetic on decimal numbers having a fractional part is thoroughly unpleasant on fixed-word binary machines.
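The difficulty with decimal fractions on binary hardware can be seen even today. The sketch below uses Python's binary floating point as a stand-in for a binary machine, which is an assumption made for illustration (the text refers to fixed-word binary machines), and shows two common workarounds: a decimal type and scaled integers. The function name `add_scaled` is hypothetical.

```python
from decimal import Decimal

# Most decimal fractions have no exact binary representation.
binary_sum = 0.10 + 0.20

# A decimal type keeps the fractional part exact.
decimal_sum = Decimal("0.10") + Decimal("0.20")


def add_scaled(a_cents: int, b_cents: int) -> int:
    """Fixed-point alternative: hold amounts as whole cents in a binary integer."""
    return a_cents + b_cents
```

With scaled integers the program, or the compiler on its behalf, must keep track of where the decimal point lies, which is part of what makes such arithmetic unpleasant.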

Nevertheless, despite these difficulties considerable progress has been made toward giving the user the kind of data properties he requires. Unfortunately this progress has not been matched by an improvement in machine design so that few, if any, of the languages have achieved all of the following.

(a) The arbitrary grouping of different classes of unit, allowing their occurrence to be optional or for the repetition in variable-length lists.

(b) The input and output of arbitrary types of records, or other conglomerations of data units, having flexible formats, editing conventions and representations.

(c) The manipulation of individual characters, with the number and position of the characters being determined at run time.

(d) The dynamic renaming or grouping of data units.

Yet users do need these facilities. It is not always acknowledged that getting the main files on to the computer, before any processing is done, may constitute the largest single operation in many applications. Furthermore, these files will have a separate independent existence apart from the several programs which refer to them.

Progress has also been made in declaring the data properties in such a way as to imply a number of necessary procedures to the compiler. For example, if one declares both the layout of some record to be printed, and the printed record, the compiler may deduce the necessary conversion and editing processes. It is here, in the area of input and output, that some languages have approached the aim of being problem-orientated.

Extract: FACT

FACT

This is the language of Minneapolis-Honeywell and is currently working on their 800, newly installed at Moor House. It is an untidy language, poorly supported by both its general and reference manuals.

But the looks of FACT belie its power. For FACT is a real programming tool which is aimed at important practical problems of the user. It has tackled file processing in a big way, although the rules may be confusing at first, and it has probably the most powerful facilities for input and output of any existing language. Like COBOL, however, it lacks a suitable means of defining new functions.

In comparing FACT to languages developed in the U.K. it must be remembered that it involved an effort of at least an order of magnitude greater than was ever available on a home project of this kind. There are some 220,000 three-address instructions in the compiler, and the mind boggles at how such a program was ever organized and debugged. I believe this has been a problem, and that work still continues on completing final points.

in The Computer Journal 5(2) July 1962

Introduction

It is desirable to begin by defining what we mean by a compiler. In the broadest terms this might be described as a Programming System, running on some computer, which enables other programs to be written in some artificial source language. This result is obtained by the simulation of the artificial machine represented by the source language, and the conversion of such programs into a form in which they can be executed on one or more existing computers.

This definition, however inadequate, at least avoids any artificial distinction between 'Interpreters' and 'Compilers' or 'Translators'. For although these terms are still in widespread use it now appears that the distinction between them is only valid for particular facets of any modern programming language, and even then only as a rather inadequate indication of the moment in time that certain criteria are evaluated.

On the more positive side the definition does bring out a number of points which are sometimes forgotten.

1. The Compiler as a Program

A compiler is a program of a rather specialized nature. As such it takes a considerable time and effort to write and, more particularly, to get debugged. In addition it often takes a surprising amount of time to run. Nevertheless compilers are concerned with the generalized aspects of programming and have in consequence been the source of several important techniques. These could be applied to a wider field if a sufficient number of programmers would take an interest in such developments.

2. The Three Computers

There are three possible computers involved: the one on which the compiler will run, the artificial computer, and the one which will execute the program (although the first and last are not necessarily distinct). In general the more that the facilities in the computers involved diverge the harder is the task of the compiler. On the other hand the use of a large computer to compile programs for smaller computers can avoid storage problems during compilation, and any consequent restrictions in the language, and make the process more flexible and efficient. This can be readily appreciated from the fact that some COBOL compilers running on small computers take more than forty passes! But the full benefit of large computers used at a distance for this purpose is dependent on cheap and reliable data links, since at the moment they introduce delays in compilation and the reporting of errors.

3. Presentation to the User

Any user of the system must, to be effective, learn how to write programs in the artificial source language which will fit the capabilities of both the artificial and the actual object machine. This requirement has become obscured by the current myths of the so-called 'Natural' or 'English' languages, and the widespread claims that 'anyone can now write programs' are highly misleading. Indeed existing source languages are rather difficult to learn and show no particular indication that they are well adapted toward the task for which they are intended.

A more serious drawback of the 'Natural' language approach is that it hinders or prevents the new facilities being presented in terms of a machine. This is unfortunate because to do so would probably give a better mental image of the realities involved and a better understanding of the rules and restrictions of the language, which tend to be confusing until it is appreciated that they are compiler-, or computer-, oriented.

Extract: The Main Requirements of a Commercial Compiler

The Main Requirements of a Commercial Compiler

A major problem in commercial compilers is the diversity of the tasks which they are required to achieve, some of which often appear to have been specified without regard to the complexity they introduce into the compiler compared to the benefit they confer on users. This ambitiousness may be contrasted with that of the authors of ALGOL (Backus et al., 1960) who concentrated on the matters which were considered important and capable of standardization (e.g. general procedures), and largely ignored those considered of lesser importance (e.g. input/output). There is no doubt that this concentration is the chief reason why ALGOL compilers normally work on or about the date intended whilst commercial compilers normally do not. The main tasks which are commonly required are discussed here.

1. Training and Protecting the User

The System must enable intending users who have no previous experience of computers to obtain that experience. This means that the language must include both a 'child's guide to programming' and the facilities which the user will require when he actually comes to writing real and complex programs. In addition the compiler is expected to protect the user, as much as possible, from the results of his own folly. This implies extensive checking of both the source and object program and the production of suitable error reports. But the main difficulty is to provide error protection which is not unduly inefficient in terms of time or restrictive on the capabilities of the user.

2. Data Declarations

The declaration of the properties of the users' data should be separable from the procedures which constitute a particular program, because the data may have an independent existence that is quite distinct from the action of any one program. Thus for example a Customer Accounts File of a distributing company may be originally set up by one program and regularly updated by a number of others. In terms of the 'global' and 'local' concepts of ALGOL this introduces a 'universal' declaration which is valid for all, or a number, of programs.

This requirement means that the compiler should keep a master file of declarations which is accessible to all programs, and at the same time provide the means of extending, amending and reporting on the contents of this file.
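The master file of declarations described above can be pictured with a minimal sketch. It is written in Python purely for illustration; the names `FieldDecl`, `DeclarationLibrary`, `declare`, `amend`, `lookup` and `report` are hypothetical and do not come from any compiler discussed here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldDecl:
    name: str
    length: int  # length in characters
    kind: str    # "alpha" or "numeric" (illustrative categories)


class DeclarationLibrary:
    """Hypothetical master file of data declarations shared by many programs."""

    def __init__(self):
        self._files = {}

    def declare(self, file_name, fields):
        # A file is declared once; later changes go through amend().
        if file_name in self._files:
            raise KeyError(f"{file_name} already declared; use amend()")
        self._files[file_name] = list(fields)

    def amend(self, file_name, new_field):
        # Replace a field of the same name, or extend the declaration.
        fields = self._files[file_name]
        for i, existing in enumerate(fields):
            if existing.name == new_field.name:
                fields[i] = new_field
                return
        fields.append(new_field)

    def lookup(self, file_name):
        # Any program can read the shared declaration.
        return tuple(self._files[file_name])

    def report(self):
        # One line per declared field, files in alphabetical order.
        lines = []
        for name in sorted(self._files):
            for f in self._files[name]:
                lines.append(f"{name}.{f.name}: {f.kind}({f.length})")
        return lines
```

The point of the sketch is only that the declaration lives outside any one program: the program that sets up a file and the programs that update it all call `lookup` on the same entry.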

3. Input/Output Data

The input and output data must have a wide range of formats and representations because it is normally intended to allow users to continue with the same media, and the same codes and conventions, which they employ at present. And there is of course no assurance that these conventions were designed, or are particularly suitable, for a computer! This is the kind of requirement which is very hard on the compiler writer.

If the specification of format and any other information is to be made in the data description it must be done in a way that is understandable and not too complicated for the users, which means the compiler may not find it simple to pick up the relevant parameters. The actual process of implementation is a choice of one of the following.

(a) A very generalized routine is built to cover all possibilities. This is safe but inefficient in the average case.

(b) A fairly general routine is built to cover the anticipated range. This is less safe but only slightly more efficient.

(c) Individual routines are constructed for each particular variation. This is very efficient but also very expensive in terms of compiler effort.

(d) A combination is provided of both (a) and (c) which is economical only if a correct guess has been made of the most commonly occurring cases.

But this is of course the type of quandary which arises in several different areas of a compiler.
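Option (d) above, a generalized routine backed by individual routines for the cases guessed to be most common, might look roughly like the following Python sketch. The format description (a dictionary with `sign` and `separator` entries) and all function names are assumptions made for this illustration only.

```python
def read_general(raw, spec):
    """Option (a): one generalized routine driven entirely by the format description."""
    text = raw.strip()
    separator = spec.get("separator", "")
    if separator:
        text = text.replace(separator, "")
    # Move a trailing sign to the front so that int() accepts it.
    if spec.get("sign", "none") == "trailing" and text[-1:] in ("+", "-"):
        text = text[-1] + text[:-1]
    return int(text)


def read_plain_integer(raw):
    """Option (c): an individual routine for unsigned digits with no separator."""
    return int(raw)


# Formats guessed to be the most common get their own routine.
SPECIALIZED = {("none", ""): read_plain_integer}


def choose_reader(spec):
    """Option (d): use the individual routine if one exists, else the general one."""
    key = (spec.get("sign", "none"), spec.get("separator", ""))
    fast = SPECIALIZED.get(key)
    if fast is not None:
        return fast
    return lambda raw: read_general(raw, spec)
```

Whether the combination pays off depends, as the text says, on the guess: every format missing from `SPECIALIZED` still goes through the slower general routine.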

4. Properties and Manipulation of Data

It must be possible to form data structures during the running of the object program and to be able to manipulate data in a reasonably general way. The latter has been deliberately left indefinite because there is considerable disparity between the different commercial languages as to both the properties and the manipulation of data. The points of distinction are:

(a) Whether the most common unit of data (normally referred to as a Field) should be allowed to vary dynamically in length. The argument in favour of this property centres on such items as postal addresses: these usually vary from twenty to 100 or more characters, with an average of forty or less.

This property has a very marked effect on the compiler. Not only must the address determination of such fields be handled in a particular way but other fields may have to be moved round dynamically to accommodate their changes in length.

(b) Whether two or more Lists of fields (i.e. vectors) should be allowed in the unit of data handled sequentially on input and output (normally referred to as a Record). Some types of Record kept by conventional methods are claimed to have this property but it is very difficult for a compiler to handle more than one list economically. The problem is simply one of addressing when the lists grow unevenly.

(c) Whether characters within fields should be individually addressable.

This is the kind of facility which is very important when master files are being loaded on to magnetic tape for the first time. Such loading often constitutes the largest single task of an installation and may be very complex because of the variety of media in which parts of these files were previously held. The addressing of characters even on fixed word machines turns out to be quite simple using a variation of the normal representation of subscripts.
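The remark about addressing characters through a variation of ordinary subscripting can be illustrated as follows. The sketch assumes a word holding eight 6-bit characters and fields that begin on a word boundary; both are illustrative assumptions rather than details taken from the text, and the function names are hypothetical.

```python
CHARS_PER_WORD = 8   # assumed packing: eight characters per word
BITS_PER_CHAR = 6    # assumed 6-bit character codes


def pack(codes):
    """Pack character codes into words, leftmost character in the high-order bits."""
    words = []
    for start in range(0, len(codes), CHARS_PER_WORD):
        chunk = codes[start:start + CHARS_PER_WORD]
        chunk = chunk + [0] * (CHARS_PER_WORD - len(chunk))  # pad the last word
        word = 0
        for code in chunk:
            word = (word << BITS_PER_CHAR) | (code & 0o77)
        words.append(word)
    return words


def char_at(words, field_start_word, subscript):
    """Fetch character `subscript` (0-based) of a field starting at `field_start_word`.

    The subscript splits into a word offset and a position within the word,
    much as an array subscript splits into a base address and a displacement.
    """
    word_offset, position = divmod(subscript, CHARS_PER_WORD)
    word = words[field_start_word + word_offset]
    shift = (CHARS_PER_WORD - 1 - position) * BITS_PER_CHAR
    return (word >> shift) & 0o77
```

The only extra work compared with a word subscript is the division by the number of characters per word and the final shift and mask.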

There is a greater emphasis on the efficiency of object programs in Commercial as opposed to Scientific Languages due to the higher frequency of use expected of such programs. Unfortunately this emphasis usually takes the form of rather superficial comparisons in terms of time, whereas on most computers actually available in Europe space is the dominant factor. The distinction between the two is however as valid for a compiled program as any other: the production of open subroutines will take least time and most space, whilst closed subroutines, particularly if the calls and parameters are evaluated at object time, will take most time and least space.
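The trade-off between open and closed subroutines can be made concrete with a simple cost model. The model and every figure used with it are hypothetical: an open subroutine is copied in full at each point of use, while a closed subroutine is stored once and reached through a short calling sequence that costs a few words and some extra time on each use.

```python
def open_cost(body_words, body_time, call_sites):
    """Open subroutine: the body is repeated at every point of use."""
    return {"space": body_words * call_sites, "time_per_use": body_time}


def closed_cost(body_words, body_time, call_sites, link_words, link_time):
    """Closed subroutine: one copy of the body plus a calling sequence per use."""
    return {
        "space": body_words + link_words * call_sites,
        "time_per_use": body_time + link_time,
    }
```

With an assumed 40-word routine used at 10 places, a 3-word calling sequence and a linkage time of 12 units on top of a body time of 100, the open form occupies 400 words at 100 units per use, and the closed form 70 words at 112 units per use.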

6. Operating Characteristics

Little attention is normally given to the operating characteristics of either the Compiler or the Object Program until some user is actually running both. Yet both are important in practice. In regard to the former this involves inter alia the details of loading the compiler and source programs, the options available in the media of the Object Program and the production of reports thereon, and the actions to be taken on the detection of errors. They are all quite trivial tasks for the compiler and usually depend on the time available to add the necessary frills to make the compiler more convenient to the user. The operating characteristics of the Object Program are much more serious and largely dominated by the question of debugging and the action on errors detected through checks inserted by the compiler. There is no doubt that the proper solution to both lies in making all communication between the user and the machine in terms of the source language alone. At the same time the difficulty is obvious because there is no other reason why such terms should be present in the Object Program. In addition the dynamic tracing of the execution of the program in source language is hindered both by any optimization phase included in the compiler, and by the practice of compiling into some kind of Assembly Code.

No existing Commercial Compiler has succeeded in solving this problem. Instead it is customary to print out a full report of the Object Program which is related to the source language statements (e.g. by printing them side by side). Error messages will then refer to that report and special facilities may be provided to enable test data to be run on the program with similar messages as to the results obtained. It will be apparent that this is not very difficult for the compiler, since it is normally possible to discover what routine gave rise to an error jump, but more knowledge is required by the user in terms of the real machine than is otherwise necessary.

The other items which can be considered as part of the Operating Characteristics are greatly assisted by the hardware facilities available and include: (a) checks that the correct peripherals have been assigned, and the correct media loaded, for the relevant program; (b) changes in the assignments of input/output channels due to machine faults, and options in peripherals for the same reason; (c) returns to previous dump points particularly in regard to the positioning of input/output media.

in Wegner, Peter (ed.) "An Introduction to Systems Programming" proceedings of a Symposium held at the LSE 1962 (APIC Series No 2)

Segmentation

Segmentation is the process of dividing a single program into pieces. This is done to permit the operation of programs that are too large to completely fit into memory. The pieces of the program are loaded only when needed. By having these segments time share areas of memory, the program may be executed.

The importance of segmentation has grown with the size of programs being produced. This has been accentuated by the popularity of compiler usage. It has become easier and as a result practical to write larger programs attacking larger problems. Divorcing the compiler user from machine considerations tends to have him create larger programs. The compiler tends to generate code that is more general and requires more space than the human created codes. The result is that every major compiler must give careful consideration to segmentation provisions.
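The idea of segments time-sharing one area of memory can be sketched as below. This is a toy model in Python, not a description of the FACT loader: the class `OverlayArea`, its `call` method and the load counter are all hypothetical, and "loading" is reduced to recording which segment is resident.

```python
class OverlayArea:
    """One region of memory shared in turn by several program segments."""

    def __init__(self, size_words, segments):
        # segments maps a name to (size in words, routine).
        for name, (size, _) in segments.items():
            if size > size_words:
                raise ValueError(f"segment {name} does not fit in the overlay area")
        self.size_words = size_words
        self.segments = segments
        self.resident = None  # name of the segment currently in memory
        self.loads = 0        # how many times a segment had to be brought in

    def call(self, name, *args):
        # Load the segment only when it is needed and not already resident.
        if self.resident != name:
            self.resident = name  # stands in for reading the segment from tape
            self.loads += 1
        _, routine = self.segments[name]
        return routine(*args)
```

In this model the segments together may be larger than the area they share; the price is one load each time control passes to a segment that is not resident.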

in [AFIPS JCC 21] Proceedings of the 1962 Spring Joint Computer Conference in San Francisco, Ca. SJCC 1962

in Wegner, Peter (ed.) "An Introduction to Systems Programming" proceedings of a Symposium held at the LSE 1962 (APIC Series No 2)

Aims

What do we want from these Automatic Programming Languages? This is a more difficult question to answer than appears on the surface as more than one participant in the recent Conference of this title made clear. Two aims are paramount: to make the writing of computer programs easier and to bring about compatibility of use between the computers themselves. Towards the close of the Proceedings one speaker ventured that we were nowhere near achieving the second nor, indeed, if COBOL were to be extended any further, to achieving the first.

These aims can be amplified. Easier writing of programs implies that they will be written in less, perhaps in much less, time, that people unskilled in the use of machine language will still be able to write programs for computers after a minimum of training, that programs will be written in a language more easily read and followed, even by those completely unversed in the computer art, such as business administrators, that even the skilled in this field will be relieved of the tedium of writing involved machine language programs, time-consuming and prone to error as this process is. Compatibility of use will permit a ready exchange of programs and applications between installations and even of programmers themselves (if this is an advantage!), for the preparation of programs will tend to be more standardised as well as simplified. Ultimately, to be complete, this compatibility implies one universal language which can be implemented for all digital computers.

Extract: FACT

FACT

The advent of COBOL has not stopped the flow of production in other source languages, either here or in the USA, although there was very little experience of their actual use yet in either country. In USA experience in the mathematical field was the greater, with wide experience of FORTRAN although little as yet of ALGOL (in the development of which Europe was regarded as the leader), but as far as commercial languages were concerned, there has been little actual use of FLOW-MATIC or of COBOL, and while FACT (the Honeywell language for their 800 and 1800 computers, described in some detail to the Conference) has been in customers' hands for over a year, this was still experience of no great significance as yet.

FACT was started a month before COBOL and developed independently of it: it has many features not found in COBOL. The key to understanding FACT lies in its handling of files to which special attention has been paid. No other language has handled bulk files yet, and this has given FACT a significant role in the development of these commercial languages. In most file structures information is dealt with at various logical levels, groups pertaining to one subject coming under one group heading, and these related group headings being themselves collected under a larger group heading at a higher level. Thus an information hierarchy is arrived at and FACT is constructed to deal with file information on this basis.
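The information hierarchy described above, with groups collected under larger groups at higher levels, can be pictured with a small tree. The sketch is in Python and is not FACT notation: the `Group` class, the two-digit level numbers in `outline` and the sample names are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Group:
    name: str
    fields: dict = field(default_factory=dict)     # data held at this level
    subgroups: list = field(default_factory=list)  # groups one level down


def outline(group, level=1):
    """List every group with its level in the hierarchy, parents before children."""
    lines = [f"{level:02d} {group.name}"]
    for sub in group.subgroups:
        lines.extend(outline(sub, level + 1))
    return lines


def total(group, field_name):
    """Sum a numeric field over a group and everything beneath it."""
    own = group.fields.get(field_name, 0)
    return own + sum(total(sub, field_name) for sub in group.subgroups)
```

A file entry for a customer might then hold order groups, each holding item groups, and a procedure written against the customer level can reach all of them by walking the tree.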

In addition to this, FACT is loaded with convenient ways of doing things, including features not available in other languages: for example, handling of punched card input, with particular regard to ease of editing and checking (it is claimed to have the most powerful input/output facilities of any automatic programming language so far), sorting on magnetic tapes (40% of data processing is reckoned to be made up of sorting and FACT has influenced the later development of COBOL in this respect), and ease of description in reporting.

The FACT compiler has 220,000 3-address machine instructions. It is being improved all the time and will run on the 1800 three times as fast as it does on the 800. Cases were cited where FACT programs were already written and working satisfactorily: one of 30,000 words for payroll, for example, was written in three man/months and had completed eighteen runs since last January. Of the Honeywell 800 customers, five use nothing but FACT, twelve use a mixture of FACT and machine code, and one actually chose a machine to take FACT because it handles paper tape so easily. Experience has already shown that for a compiler of this size, more equipment is needed for the implementation, but it does nevertheless serve to replace unavailable manpower in programming.

in The Computer Bulletin September 1962

A problem of continuing concern to the computer programmer is that of file design: Given a collection of data to be processed, how should these data be organized and recorded so that the processing is feasible on a given computer, and so that the processing is as fast or as efficient as required? While it is customary to associate this problem exclusively with business applications of computers, it does in fact arise, under various guises, in a wide variety of applications: data reduction, simulation, language translation, information retrieval, and even to a certain extent in the classical scientific application. Whether the collections of data are called files, or whether they are called tables, arrays, or lists, the problem remains essentially the same.

The development and use of data processing compilers places increased emphasis on the problem of file design. Such compilers as FLOW-MATIC of Sperry Rand, Air Materiel Command's AIMACO, SURGE for the IBM 704, SHARE's 9PAC for the 709/7090, Minneapolis-Honeywell's FACT, and the various COBOL compilers each contain methods for describing, to the compiler, the structure and format of the data to be processed by compiled programs. These description methods in effect provide a framework within which the programmer must organize his data. Their value, therefore, is closely related to their ability to yield, for a wide variety of applications, a data organization which is both feasible and practical.

To achieve the generality required for widespread application, a number of compilers use the concept of the multilevel, multi-record type file. In contrast to the conventional file which contains records of only one type, the multi-level file may contain records of many types, each having a different format. Furthermore, each of these record types may be assigned a hierarchal relationship to the other types, so that a typical file entry may contain records with different levels of "significance." This article describes an approach to the design and processing of multi-level files. This approach, designated the property classification method, is a composite of ideas taken from the data description methods of existing compilers. The purpose in doing this is not so much to propose still another file design method as it is to emphasize the principles underlying the existing methods, so that their potential will be more widely appreciated.

in [ACM] CACM 5(08) August 1962

Q: Would you describe some of the major contracts which CSC has obtained?

A: One of our first projects included development of the FACT language, the design of its compiler, and implementation of most of the FACT processor.

Later, CSC developed the LARC Scientific Compiler, an upgraded variant of the FORTRAN II language, and designed the ALGOL/FORTRAN compiler for the RCA 601.

Recently, we have finished other complete systems for large scale computers such as the UNIVAC III and 1107. By complete systems, I mean the algebraic and business compilers, executive system, assembly program and other routines.

For the Philco 2000, we developed ALTAC IV, COBOL-61, and a very general report generator.

An assembly program for a Daystrom process control computer, a general sort-merge for another computer, several simulators, and many others at this level of effort have been accomplished.

In areas concerned with applications, we developed two PERT/COST systems for aircraft manufacturers and are responsible for the design and implementation of a command and control system at Jet Propulsion Lab for use by NASA in many of our space probes scheduled later this year and beyond.

In the scientific area we have worked on damage assessment models, data acquisition and reduction systems, re-entry and trajectory analysis, maneuver simulation, orbit prediction and determination, stress analysis, heat transfer, impact prediction, kill probabilities, war gaming, and video data analysis.

In the commercial applications area, we have produced systems for payroll and cost accounting, material inventory, production control, sales analysis and forecasting, insurance and banking problems, etc.

Abstract: Q: On the subject of compiler development, CSC is widely known for its work on the FACT language and processor. There has been considerable discussion on delays in meeting deadlines, delays in hardware availability, etc. Your experiences with FACT are often referred to as a basis for the establishment of new customer-manufacturer relationships such as a switch from time and material to fixed cost contracts. Would you explain CSC's position with regard to FACT?

A: A full statement of history would necessarily require far more time than we have now, due to the many cause and effect relationships inherent in a development of the kind under discussion. There are things which CSC, and I'm sure Honeywell, would do differently if the problem were encountered anew, but this is always the case, whether the name of the project is FACT, FORTRAN, AIMACO, SOS or any other of the many compilers which, at their initial issue, were "late."

Within the framework of absolute accomplishment, however, neither Honeywell nor CSC need assume a defensive posture; FACT is a pioneering project incorporating a high level of achievement: no other language or compiler yet has the breadth of scope and completeness of facility to be found in the FACT system. The Intermediate Range Committee, by far the most knowledgeable group within CODASYL, voted unanimously, despite later politically-oriented recantations, for the FACT language as the standard of excellence for its time.

That it was an ambitious project, perhaps too ambitious for the immediate needs of the ultimate users; that there was overoptimism during its development; that there was too much change after the design freeze date (much of which was demanded by the users who later bewailed its lateness), cannot be denied. Much more prosaic developments have suffered the same fate, however, and rare indeed is the systems programmer who can cast the first stone on these counts.

As a practical matter, however, regardless of good motives and the whys of lateness, it is certain that, to the ultimate user, a very substantial part of the value of a system is its availability on schedule. On this score, both Honeywell and CSC learned lessons from FACT, and from the H-800 development itself, which I'm sure will protect their customers from a reoccurrence in the future. Certainly we learned; CSC has delivered complete software systems, including assembly program, executive system, COBOL and FORTRAN compilers, etc., on a fixed price basis and in a much shorter time frame, since the FACT project.

As to the effect of FACT on contract type for this kind of work, I'm not sure that there's any direct relationship, except as the experience might apply to the thinking of Honeywell and CSC. We have, since FACT, virtually always proposed a fixed price contract because we feel it induces a certain needed discipline in the contracted relationship and because it tends to keep the competition honest and to weed out, through economic attrition, those who bid low but don't have the capability to produce economically. As far as I know, CSC was the first to promote the use of fixed pricing on work of this type and size.

Q: Would you estimate the total costs to Honeywell of the development and implementation of the FACT language and compiler?

A: I have no way of accurately estimating this cost, since so many factors are involved, although some comparisons might be made. From exposure to software budgets and projects within other manufacturers' shops, I can state a belief that FACT did not cost more than now might be anticipated for a development of such scope. Certainly our own level of FACT effort has been exceeded by others in the implementation of even state-of-the-art systems devoid of the FACT design problems and accomplishments. As compared with the original FORTRAN, in many ways analogous to FACT development in its time, I feel intuitively but strongly that FACT must have been much less expensive.

By far the biggest influence on "cost," however, is that connected with loss of revenue from those customers dependent upon the system in question. This is more a function of marketing policy than one of spent manpower. Some manufacturers experience little or no loss of revenue due to late deliveries, while others, dealing from a position of less strength in an attempt to attract customers, assume a posture of high vulnerability in these regards. When, in the latter case, there is a slippage, economic trauma results which can far overshadow other costs of development.

in [ACM] CACM 5(08) August 1962

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (3) 1963 Pergamon Press, Oxford

in [ACM] CACM 6(05) May 1963 "Proceedings of ACM Sort Symposium, November 29, 30, 1962"

The last little peak in the publicity noise level that denoted an item about FACT seems to have been some time ago. There have been several confident pronouncements from various implementers about little brother COBOL. However, where a working COBOL compiler is actually being used a similar quietness appears to prevail about how it is getting on.

For some time after a compiler has reached a state in which it is fit to be released errors continue to be found in it. The rate at which corrections are sent out to users drops steadily until eventually months pass without an error being found. The moment at which a compiler is, in that sense, error-free is not a clearly marked stage. It is in any case difficult for a manufacturer to announce six months after the release fanfare that his compiler is now actually fit to be used.

There is another reason for the prevailing quietness regarding experience in the use of commercial compilers. That is that the original excitement was too great. The reality continues to promise great things for the future. But in the meantime it is just a little drab. Why should this be so if the compilers work correctly to the original specification upon which the hopes were based?

Perhaps, because it was going to be so easy to write programs, it was naturally assumed that the programs written would be perfectly written. By and large they are correctly written but the relentlessness with which a computer explores every logical crevice in a program means that the old need for accurate machine code now becomes a need for precision in the plain language statements. And this precision must relate to the effect of each word, to the interpretation of symbols by the compiler and to all the combinations that occur in the program.

There is about a plain language for a computer a deceptive air of meaning what it says. Plain language in inter-man use fits the man context. To apply it to the computer context by direct analogy is fine so long as the analogy fits; generally it fits very badly. The best fit is in a statement like GO TO TOWN-HALL where there exists a paragraph in the program labelled TOWN-HALL. We know, with a smile, what it means. But the COBOL statement MOVE CORRESPONDING CUSTOMER-ACCOUNT OF MASTER TO MAIL-LABEL OF NEW-MASTER requires a fair deal of thinking about, and that thinking is neither about a computer nor about any clear human situation. The situation is a contrived one and its concepts are clear and hard only in terms of the compiling process and the object computer configuration. Each word adds its own to the total meaning of the sentence and the sentence has no meaning apart from that achieved in that synthetic way.

In human talk, a sentence is generally more than the sum of its parts, it is frequently a single symbol of which the component words do no more than enable it to be recognised. There are, virtually, such sentences in FACT. For example the statement SEE INPUT-EDITOR could equally well be replaced by the slightly less pompous GET-ON-WITH-READING-THE-CARDS-NOW, at the cost to the programmer of remembering it as a special case. It is obviously preferable that the SEE INPUT-EDITOR form be used because it is connected by the SEE, DO, PERFORM rules to the rest of the language. It is immaterial in this case whether the programmer writes SEE INPUT-EDITOR because he remembers that as the phrase to use or because he wishes to link in a generated INPUT-EDITOR procedure to the flow of his program.

But it is not necessarily immaterial which approach he uses with other statements in a programming language. If he is to write correct programs he must know the effect of each word that he sets down within the context of the surrounding words and descriptions. The programmer may rely on the conceptual coherence of the portion of the language with which he is concerned in order to know the effect of the statements which he writes, or he may refer to given rules on the correct use of each word and on its correct relationship with others.

This article does not attempt an analysis of either FACT or COBOL to establish the underlying ideas. FACT and COBOL are clearly different in their conceptual structure in spite of the seeming similarity of words, syntax and field of operation. What it does attempt is to account for the rather odd difficulties being encountered in this stage of development and use of commercial data-processing languages.

A powerful programming language such as FACT offers a means of manipulating business data flexibly and accurately. A beginner can write correct but straightforward programs to do an enormous amount of computing. Gradually he can incorporate in his programs all the facilities which FACT contains. But this is another way of saying that in order to use FACT facilities properly and fully, the programmer requires to learn it in detail.

FACT is well-founded in its file structure. The FACT file hierarchy is conceptually simple and at the same time appropriate to the ordinary complexity of business data processing. COBOL files are founded conceptually on blocks of information assembled in a core memory buffer (agreed that business data processing can be moulded to this without too much difficulty). What matters is that the programmer makes some of his errors in fitting the language concepts to the job and others in fitting the statements permitted in the language to the concepts.

Large fields of potential error are removed by the provision in FACT of generated routines for the input of whole files of punched cards and the output of whole series of printed documents in accordance with static descriptions instead of by program statements for sequential execution by the computer. The programmer's difficulty of using time-sequential steps to arrange and determine logical and physical relationships is removed. By such means FACT brings the programmer nearer to reality than even machine-code allows. But from within the given universe the particular has to be specified and accuracy is essential. While the FACT approach is different from and much easier than that which COBOL adopts it also requires a little more care than might at first sight seem necessary. The data and relationships must be correctly described.

Even the FACT statement "SORT file-name TO file-name, CONTROL ON Key-1, Key-2, ..., Key-n" which seems very simple can be wrongly used. While it will sort multi-reel files if correct, it will fail on half-a-dozen records if appropriate keys are not stated for the groups on the file. There is a certain minimum amount of information to be given before even a hand-sort can be performed and if less is given the sort cannot succeed.
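The dependence of a sort on its control keys can be shown with a short Python sketch. It is an in-memory stand-in, not a model of the multi-reel tape sort FACT generated; the function `sort_file`, the dictionary records and the key names are hypothetical.

```python
from operator import itemgetter


def sort_file(records, control_on):
    """Return the records ordered on the control keys, major key first."""
    if not control_on:
        raise ValueError("no control keys stated: the sort cannot succeed")
    for record in records:
        for key in control_on:
            if key not in record:
                raise KeyError(f"control key {key} is not present in every record")
    # itemgetter builds the comparison value from the keys in the order given.
    return sorted(records, key=itemgetter(*control_on))
```

As in the text, the statement is short but the information it needs is not optional: with no keys, or with a key that some records lack, the sketch refuses to sort rather than guess an order.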

No programmer ever knowingly writes a wrong statement: either another programmer or the computer makes plain the error. In the present stage when experience is being gained in the use of FACT and COBOL it is the computer which is the main agency in the inspection of programs. Much desk checking is certainly possible, but there is a limit to how much desk checking can reasonably be done. The computer will as easily reveal ten clerical errors in a program as the one error still remaining after a thorough check. In the case where one clerical error obscures another this applies with less force, but generally the computer will be used for the checking of clerical errors.

Honeywell use a short "diagnostic" version of the FACT compiler to produce a listing of each program input to it, together with explanatory comments on the errors found in it. On the H-800 computer it takes from 2 to 25 minutes running time to obtain a diagnostic listing of a FACT source program. The listing is sent to the programmer who makes any needed correction to his program. Theoretically this process is repeated once; in practice it is, at the moment, repeated several times before the listing is free from diagnostic comments.

Compilation of FACT programs on the H-800 requires from 15 to 50 minutes of machine running time. The compilation process produces another listing of the source program and from a few hundred up to many thousand words of program in ARGUS Assembly code. This is batched with ARGUS code programs from other sources and assembled on to a symbolic program magnetic tape where it is available for subsequent program testing and production scheduling and for alteration by hand-made changes if these should be wanted.

Program testing initially reveals, almost always, some logical error in the source program. With FACT the error is generally easily traced and a small change in the program is made. One then either recompiles or makes a correction to the ARGUS coding.

The choice made between recompilation and correction of the ARGUS coding partly reflects one's view of the programming language. One may see the language as a means of programming the computer or one may see it as a means of producing large amounts of lower-level program code for the computer. To recompile requires extra machine-time but keeps the documentation straight and is, if machine-time is available, quick and simple. To patch the ARGUS coding requires a knowledgeable ARGUS programmer and means that the program listing is no longer in line with the object program on tape, but saves machine-time.

In short, it is unexpected but true that plain language programmers make errors and these may necessitate several diagnostic runs and several compilations and assemblies. While the labour cost of writing such programs is a fraction of that for writing an equivalent amount of assembly code, the cost of machine-time is apparently very high. It is probably, however, no higher than would be required by the ARGUS programmers for assembling and testing the equivalent job within the lower level assembly language. The proportion of machine time to programming time changes enormously with the use of a compiler.

As experience is gained in writing plain language programs the frequency with which a complicated program works first time of trying will rise. The skill of the programmer and the complexity of the problem together determine the cost of programming and a small change in either might make a very large difference to the cost. Unskilled programmers will be able, with a little assistance, to write straightforward programs correctly.

The solution to the present problems lies in the skill of the programmer. The rewards of automatic coding are large and they are now available; they are those which have been the motive force behind the language design and implementation effort; however the hope that a complete layman would be able to use the full power of a language after three or four days instruction must be, for the moment, abandoned. When machine-time is severely limited the expert FACT programmer, and even more the COBOL programmer, is going to have to know the language intimately, the operation of the compiler fairly well, the intermediate assembly code and the machine. The next stage, starting now, is going to be one in which success in getting cheap and rapid production results will be normal.

Already with only two FACT programmers, three large applications have been programmed in under eight months. Early inexperience led to the loss of much time in repeated compilations, but even in those circumstances the use of FACT compares favourably with the use of assembly language. To produce by hand the coding of over 450,000 three-address instructions involved in the applications, even if somewhat more condensed, would require many man years of effort.

in [ACM] CACM 6(05) May 1963 "Proceedings of ACM Sort Symposium, November 29, 30, 1962"

The paper contains some detailed description of the problems involved in data handling in commercial translators with emphasis on the need for more flexibility than presently available. The author, as have many before him, again questions the value of attempting to create languages "any fool can use" at the cost of efficiency and flexibility, when even these languages will not prevent the fool from having to debug his programs.

On existing commercial translators, the author lists and compares briefly COBOL, FACT, COMTRAN and several English efforts which "are either not working, or not on a par with their American equivalents."

The paper concludes with some not so new but nevertheless appropriate recommendations to computer manufacturers and standards committees, and the expectation of the universal acceptance of COBOL as the commercial language.

in ACM Computing Reviews 4(01) January-February, 1963

The segmentation method described is general in scope and can be implemented on other computers. However, the need for dynamic allocation of segments is felt more on computers having multiprogramming capabilities, and the efficiency of dynamic segmentation depends on the availability of index registers, indirect addressing, and automatic unprogrammed transfer of control (automatic program interrupt).

in ACM Computing Reviews 4(01) January-February, 1963

This paper is a talk that was presented at a conference held in England. It gives a brief but adequate description of FACT, the commercial compiler produced for the Honeywell computers, and points out the differences between FACT and COBOL while indicating that the earlier design of FACT influenced that of COBOL. For a more complete description of FACT the author recommends his article in Automatic Programming, Volume 2, Pergamon Press, 1961 [CR 3, 5 (Sept.-Oct. 1962), Rev. 2602]. The author provides important statistics which reveal that the program consists of about 223,000 three-address instructions (of which 158,000 are the library of routines which are selected to form the object program) and took 60 man-years to produce. A typical compilation produces 7,000 words of object program in 20 minutes. With streamlining of FACT and a larger machine, the latter figure should be considerably less.

The article concludes with the discussion period which followed the FACT paper and one on COBOL. Of particular interest is the expression of the unfavorable attitude toward COBOL which seems to be prevalent in Britain. The reasons given are partly economic: British computers are small, and often with few or no magnetic tape drives. Additional reasons are that the cost of operating COBOL seems high, the language too verbose, and there is danger of standardizing the language and machine codes to avoid reprogramming the problems of COBOL itself for new machines.

J. Levenson, Bedford, Mass.

in ACM Computing Reviews 4(01) January-February, 1963

in ACM Computing Reviews 4(05) September-October 1963

in The Computer Journal 7(2) July 1964

in ACM Computing Reviews 5(05) September-October 1964

At the original Defense Department meeting there were two points of view. One group felt that the need was so urgent that it was necessary to work within the state of the art as it then existed and to specify a common language on that basis as soon as possible. The other group felt that a better understanding of the problems of Data-Processing programming was needed before a standard language could be proposed. They suggested that a longer range approach looking toward the specification of a language in the course of two or three years might produce better results. As a result two committees were set up, a short range committee, and an intermediate range committee. The original charter of the short range committee was to examine existing techniques and languages, and to report back to CODASYL with recommendations as to how these could be used to produce an acceptable language. The committee set to work with a great sense of urgency. A number of companies represented had commitments to produce Data-processing compilers, and representatives of some of these became part of the driving force behind the committee effort. The short range committee decided that the only way it could satisfy its obligations was to start immediately on the design of a new language. The committee became known as the COBOL committee, and their language was COBOL.

Preliminary specifications for the new language were released by the end of 1959, and several companies, Sylvania, RCA, and Univac started almost immediately on implementation on the MOBIDIC, 501, and Univac II respectively.

There then occurred the famous battle of the committees. The intermediate range committee had been meeting occasionally, and on one of these occasions they evaluated the early COBOL specifications and found them wanting. The preliminary specifications for Honeywell's FACT [30] compiler had become available and the intermediate range committee indicated their feeling that Fact would be a better basis for a Common Business Oriented Language than Cobol.

The COBOL committee had no intention of letting their work up to that time go to waste. With some interesting rhetoric about the course of history having made it impossible to consider any other action, and with the support of the Codasyl executive board, they affirmed Cobol as the Cobol. Of course it needed improvements but the basic structure would remain. The charter of the Cobol committee was revised to eliminate any reference to short term goals and its effort has continued at an almost unbelievable rate from that time to the present. Computer manufacturers assigned programming systems people to the committee essentially on a full time basis. Cobol 60, the first official description of the language, was followed by 61 [31] and more recently by 61 extended [32].

Some manufacturers dragged their feet with respect to Cobol implementation. Cobol was an incomplete and developing language, and some manufacturers, especially Honeywell and IBM, were implementing quite sophisticated data processing compilers of their own which would become obsolete if Cobol were really to achieve its goal. In 1960 the United States government put the full weight of its prestige and purchasing power behind Cobol, and all resistance disappeared. This was accomplished by a simple announcement that the United States government would not purchase or lease computing equipment from any manufacturer unless a Cobol language compiler was available, or unless the manufacturer could prove that the performance of his equipment would not be enhanced by the availability of such a compiler. No such proof was ever attempted for large scale electronic computers.

To evaluate Cobol in this short talk is out of the question. A number of quite good Cobol compilers have been written. The one on the 7090 with which I have had some experience may be typical. It implements only a fraction, less than half I would guess, of the language described in the manual for Cobol 61 extended. No announcement has been made as to whether or when the rest, some of which has only been published very recently, will be implemented. What is there is well done, and does many useful things, but the remaining features are important, as are some that have not yet been put into the manual and which may appear in Cobol 63.

The language is rather clumsy to use; for example, long words like synchronized and computational must be written out all too frequently, but many programmers are willing to put up with this clumsiness because within its range of applicability the compiler performs many important functions that would otherwise have to be spelled out in great detail. It is hard to believe that this is the last, or even very close to the last word in data processing languages.

Extract: FACT

Before leaving Data Processing compilers I wish to say a few words about the development of the FACT compiler.

In 1958 Honeywell, after almost leaving the computer business because of the failure of their Datamatic 1000 computer, decided to make an all out effort to capture part of the medium priced computer market with their Honeywell 800 computer. The computer itself is very interesting but that is part of another talk.

They started a trend, now well established, of contracting out their programming systems development, contracting with Computer Usage Co for a Fortran language compiler.

Most interesting from our point of view was their effort in the Data Processing field. On the basis of a contract with Honeywell, the Computer Sciences Corporation was organized. Their contract called for the design and production of a data processing compiler they called FACT.

Fact combined the ideas of data processing generators as developed by Univac, GE Hanford, Surge and 9PAC with the concepts of English language data processing compilers that had been developed in connection with Univac's Flow-Matic and IBM's commercial translator.

The result was a very powerful and very interesting compiler. When completed it contained over 250,000 three address instructions. Designed to work on configurations as small as 4096 words of storage and 4 tape units it was not as fast as some more recent compilers on larger machines.

The Fact design went far beyond the original COBOL specifications, and has had considerable influence on the later COBOL development.

Like all other manufacturers, Honeywell has been forced to go along with the COBOL language, and FACT will probably fall into disuse.

in [AFIPS JCC 25] Proceedings of the 1964 Spring Joint Computer Conference SJCC 1964

in ACM Computing Reviews 5(06) November-December 1964

in ACM Computing Reviews 5(06) November-December 1964

An important step in artificial language development centered around the idea that it is desirable to be able to exchange computer programs between different computer labs or at least between programmers on a universal level. In 1958, after much work, a committee representing an active European computer organization, GAMM, and a United States computer organization, ACM, published a report (updated two years later) on an algebraic language called ALGOL. The language was designed to be a vehicle for expressing the processes of scientific and engineering calculations of numerical analysis. Equal stress was placed on man-to-man and man-to-machine communication. It attempts to specify a language which included those features of algebraic languages on which it was reasonable to expect a wide range of agreement, and to obtain a language that is technically sound. In this respect, ALGOL set an important precedent in language definition by presenting a rigorous definition of its syntax. ALGOL compilers have also been written for many different computers. It is very popular among university and mathematically oriented computer people especially in Western Europe. For some time in the United States, it will remain second to FORTRAN, with FORTRAN becoming more and more like ALGOL.

The largest user of data-processing equipment is the United States Government. Prodded in part by a recognition of the tremendous programming investment and in part by the suggestion that a common language would result only if an active sponsor supported it, the Defense Department brought together representatives of the major manufacturers and users of data-processing equipment to discuss the problems associated with the lack of standard programming languages in the data processing area. This was the start of the Conference on Data Systems Languages that went on to produce COBOL, the common business-oriented language. COBOL is a subset of normal English suitable for expressing the solution to business data processing problems. The language is now implemented in various forms on every commercial computer.

In addition to popular languages like FORTRAN and ALGOL, we have some languages used perhaps by only one computing group such as FLOCO, IVY, MADCAP and COLASL; languages intended for student problems, a sophisticated one like MAD, others like BALGOL, CORC, PUFFT and various versions of university implemented ALGOL compilers; business languages in addition to COBOL like FACT, COMTRAN and UNICODE; assembly (machine) languages for every computer such as FAP, TAC, USE, COMPASS; languages to simplify problem solving in "artificial intelligence," such as the so-called list processing languages IPL V, LISP 1.5, SLIP and a more recent one NU SPEAK; string manipulation languages to simplify the manipulation of symbols rather