COBOL(ID:139/cob004)

COmmon Business Oriented Language

CODASYL Committee, Apr 1960.

Designed for simple computations on large amounts of data. At the time of writing, the most widely used programming language.

The much-quoted jibes about verbosity are misguided: only a very small subset of symbols, largely the simple arithmetic ones, was "Englished" (for example, MULTIPLY in place of *), and the remainder were already English words in, e.g., FORTRAN, LISP and ALGOL. The natural-language style was intended to make programs largely self-documenting (anticipating WEB); the use of divisions anticipated structured programming, and the use of the record structure anticipated Pascal.

Funnily enough, the same people who objected to the English in COBOL objected to the baroque elegance of APL, where all the English was replaced with special symbols, so one might conclude they simply liked the status quo. Mercifully, the COBOL wars died out when the people who hated COBOL turned out to hate ALGOL 68 even more.

"The question is, why is Cobol still in widespread use? The answer is, it does the job, it was conceived for business. Cobol is built around the concept of moving things around in storage. Most languages are built around a lower abstraction level and are more focused on algorithms."

John Bradley, CEO, Liant Software
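The point about "moving things around in storage" can be illustrated with the fixed-width fields of a COBOL record. The sketch below is a simplified Python model, not COBOL itself: it assumes only two kinds of field, alphanumeric `PIC X(n)` and unsigned integer `PIC 9(n)` held as display characters, and ignores signs, decimal points and the many other PICTURE options. The function names and the `PAYROLL` layout are hypothetical.

```python
from typing import Union


def move_alphanumeric(value: str, size: int) -> str:
    """Model MOVE into PIC X(size): left-justify, space-fill or truncate on the right."""
    return value[:size].ljust(size)


def move_numeric(value: int, size: int) -> str:
    """Model MOVE of an unsigned integer into PIC 9(size): right-justify,
    zero-fill on the left, and drop excess high-order digits."""
    if value < 0:
        raise ValueError("this sketch does not model signed fields")
    return str(value)[-size:].zfill(size)


# A record layout is a list of (field name, picture kind, size).
Layout = list[tuple[str, str, int]]


def build_record(layout: Layout, values: dict[str, Union[str, int]]) -> str:
    """Move each value into its field and concatenate the fields into one fixed-width record."""
    parts = []
    for name, kind, size in layout:
        if kind == "X":
            parts.append(move_alphanumeric(str(values[name]), size))
        elif kind == "9":
            parts.append(move_numeric(int(values[name]), size))
        else:
            raise ValueError(f"unsupported picture kind {kind!r}")
    return "".join(parts)


PAYROLL: Layout = [("NAME", "X", 6), ("AMOUNT", "9", 5)]
print(build_record(PAYROLL, {"NAME": "JONES", "AMOUNT": 125}))  # JONES 00125
```

Note that neither kind of MOVE signals an error when a value does not fit: text is cut off on the right and numbers lose their leading digits, which is the behaviour the sketch reproduces.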

Related languages

| From | | To | Relationship |
|---|---|---|---|
| AIMACO | => | COBOL | Evolution of |
| APG-1 | => | COBOL | Influence |
| AUTOCODER III | => | COBOL | Influence |
| COMTRAN | => | COBOL | Evolution of |
| DETOC | => | COBOL | Compiled to |
| FACT | => | COBOL | Evolution of |
| FLOW-MATIC | => | COBOL | Evolution of |
| Hanford Mark II | => | COBOL | Influence |
| SURGE | => | COBOL | Influence |
| COBOL | => | Basic COBOL | Subset |
| COBOL | => | COBOL Narrator | Implementation |
| COBOL | => | COBOL-61 | Evolution of |
| COBOL | => | COBRA | Preprocessor for |
| COBOL | => | Compact COBOL | Subset |
| COBOL | => | DETAB-X | Extension of |
| COBOL | => | DPL | Incorporated some features of |
| COBOL | => | GECOM | Evolution of |
| COBOL | => | ICT COBOL | Implementation |
| COBOL | => | IDS | Extension of |
| COBOL | => | LOBOC | Influence |
| COBOL | => | MCOBOL | Extension of |
| COBOL | => | METACOBOL | Implementation |
| COBOL | => | PL/I | Influence |
| COBOL | => | Required-COBOL | Subset |
| COBOL | => | SIMFO | Influence |
| COBOL | => | TABOL | Influence |
| COBOL | => | TALL | Target language for |

References:

in Proceedings of the 1959 Computer Applications Symposium, Armour Research Foundation, Illinois Institute of Technology, Chicago, Ill., Oct. 29, 1959

An important decision of the committee was to agree (Asch, 1959) "that the following language systems and programming aids would be reviewed by the committee: AIMACO, Comtran [sic], Flowmatic [sic], Autocoder III, SURGE, Fortran, RCA 501 Assembler, Report Generator (GE Hanford), APG-I (Dupont)"

in Proceedings of the 1959 Computer Applications Symposium, Armour Research Foundation, Illinois Institute of Technology, Chicago, Ill., Oct. 29, 1959

In the past few years the computer world has undergone a tremendous change, particularly in the area of programmed computer aids. Just five or six years ago the atmosphere was completely hostile to such things as compilers and synthetic languages. Today there is not only general acceptance of these devices but a definite demand for them. A prospective computer customer will not consider any machine that is not equipped with an assembly system, a full library of subroutines, diagnostic routines, sort and merge generators, an algebraic compiler, an English-language business compiler, etc.

The first automatic coding systems that were developed were the result of many man-years of experimentation and determination. They were written for machines that were rapidly approaching obsolescence. They had the many inefficiencies that are typical of prototype systems. In spite of these drawbacks, they met with tremendous success. As with any successful product, the band wagon got under way. Users demanded that compilers of all shapes, sizes, and descriptions be made available for all machines. Manufacturers built large staffs of inexperienced people to meet these demands. They produced "quick and dirty" systems that took three or four months to complete and three or four years to alter. Translators were indiscriminately built on top of existing assembly systems, and then other translators were built on top of these. The inefficiency of the products rose exponentially with the number of translators. Individuals at universities and installations throughout the country decided that they could build bigger and better compilers in a lot less time. As a result, the market was flooded with many similar systems for the same machines. Each of these systems had its own excellent features, and each had its inefficiencies. The source languages differed slightly from system to system, and no one seemed to take advantage of the experience of others in writing compilers. Each group was going to produce the ultimate system and propose its own "common natural language."

Early in 1957 the GAMM organization of Germany and Switzerland formed a committee to consider the possibility of devising a common algebraic language for the various computers that were ordered by several universities. Their primary interests were to devise a language for the teaching of numerical methods at the universities, and one that could be used to publish algorithms in a standard and precise notation. They reviewed the systems that had been produced in the United States and found them to be very similar to one another. Rather than add another variation to the saturated atmosphere, they dispatched an emissary to the United States to try to start a movement toward a single internationally accepted algebraic language. Professor Frederick Bauer of the University of Mainz was successful in this mission. A committee of about twenty people, representing computer manufacturers, universities, and industrial users, was formed under ACM sponsorship.

This committee held several meetings and surprisingly enough came through the maze of conflicting opinions with a proposed language structure. Concurrently, the GAMM committee produced a similar proposal. Four representatives from each committee met in Zurich, Switzerland, in June, 1958, with their two formal proposals and their eight individual opinions. The vote on most controversial points was four to four. But somehow or other each difficulty was overcome, and ALGOL, a basically sound and reasonable language, was produced. It had elements that were obviously the result of compromise, but on the whole it had a more consistent structure and a more powerful and comprehensive potential than any of the languages that had preceded it.

It was received in the computer community with varied reaction. Some felt that it was just impossible for eight people to do anything worthwhile in just one week; others declared that this accomplishment would settle all the problems of the computer world; still others made constructive and useful suggestions as to how the language could be improved. Users' organizations immediately formed committees to study the language and to make recommendations as to how it could be implemented on the machines with which they were concerned. Several manufacturers and several universities actually have started implementing ALGOL.

These people have been the chief source of the suggestions for improvement. A meeting of the full ACM committee is scheduled to be held in Washington, D.C., during the first week of November, 1959, to consider the various proposals that have been made; to incorporate the best of these into the language; and to set up the mechanism to publish an up-to-date description of ALGOL.

In all the excitement and discussion over the improvement of the language of ALGOL, there seems to have developed a tendency to overlook the reasons for its very existence. Let us therefore consider the advantages of ALGOL. To the user of many different machines, or to the installation that is replacing its present machine with a new one, the universality of programs coded in ALGOL presents an obvious advantage. For the communication of algorithms and effective computing techniques, ALGOL is a powerful tool. A program that is written in ALGOL can be published directly, without revision. The published program can then be used by any interested parties without expensive conversion or recoding.

The language of formal mathematics has long been established and universally accepted. The relatively new field of numerical methods, however, has no commonly accepted method of notation. It is entirely conceivable that ALGOL will become the basis of the symbology for this field, not only as the vehicle for written communication of algorithms, but also as the language of instruction. Various universities in Germany have already begun to use ALGOL in the numerical methods classrooms.

Extract: ALGOL and CODASYL

Partly as a result of the success of ALGOL, a movement has recently begun to define a common business language. The Committee for Data Systems Languages (CODASYL) was organized by the Defense Department in May of this year. Various working subcommittees were formed, and a proposal is forthcoming by the end of the year.

Extract: Conclusion

Let us now consider the impact that these movements toward standardization and the development of user-oriented languages might have on the computer world. Or, more precisely, let us consider the trends that seem to exist in the computer world that have manifested themselves in these movements and are being perpetuated by them.

The community of computer users has changed considerably in the past few years. During the Dark Ages of computing, the only way of communicating with a machine was through a highly intricate, sophisticated, and delicate piece of equipment known as a programmer. This programmer was highly specialized and intimately familiar with the computer to which it was attached. All problems for the computer had to pass through the programmer, and, although it sometimes produced works of art, it worked entirely too slowly and was much too expensive to operate.

The climate today is not favorable to the widespread operation of such a specialized piece of equipment, and there are relatively few of the old model still being produced.

The number of computers in use is increasing much more rapidly than the supply of new programmers. Many of the better members of the old school have deserted the art to enter the field of management. Personnel at installations that have more than one kind of computer, or at installations that change computers every two years, do not have the opportunity to gain the detailed knowledge of the machine that is essential to warrant the name "programmer."

I do not wish to imply that there are no good programmers today. There are a great many excellent ones. However, there is an ever-growing number of "nonprogrammer" users of computers. These are people who are experts in their own fields, who know the problems or systems for which they want results, and who want to use a computer, as a tool, to get these results in the quickest and most economical way possible. This is one of the major factors that have created the need for automatic coding systems et al. The use of synthetic languages has, in turn, pulled the users farther away from the details of the machine, thus creating the need for more sophisticated automatic systems: systems that make more decisions for the user; systems for automatic programming rather than just automatic coding; complete systems, that carry the use of the source language through the entire effort, even to the debugging of the program.

Another important effect that should result from the use of common languages is a lessening of duplication of effort. With the availability of scientific algorithms and of effective solutions to various business problems in convenient, understandable, and usable languages, it should no longer be necessary for every installation to resolve the same problems. This should permit a more rapid advancement, not only in the programming area, but in many of the fields that make use of computers.

New computers are becoming more complex and hence more difficult to use. Every known piece of peripheral equipment is being "hung" on the main frame. Each of these devices is buffered and operative simultaneously with the processor. Parallel processors will soon be available, so that branches of a program, or different programs, can be executed concurrently. Recently there has been a great deal of emphasis on simultaneous execution of unrelated programs. This has created such programming problems as priority assignments and protection of instructions.

To take advantage of these efficient concepts in programming a problem is near impossible. There is only one device that I know of that can make use of these complex features with any degree of efficiency, and that of course is a computer. One of the major objections that professional programmers have raised to the use of compilers in the past has been that programs produced automatically were not so efficient as those tailored by hand. This, of course, is dependent upon the tailor and, with the continuing development of better techniques, is becoming less true. As the machines become more difficult to program, it is my feeling that no individual will, within a reasonable amount of time, be able to match the efficiency of programs that are produced automatically. The time has certainly come to automate the programming field: to automate the production of programs, runs, systems of runs, and the production of automatic programming systems.

I firmly believe that, in the near future, computers will be released to the customer with automatic programming systems and synthetic languages. The basic machine code will not be made available. All training and programming will take place at the source-language level. The desire to go below this language level will pass, just as our desire to get at the basic components of "wired-in" instructions has passed. Machines will be built in a truly modular fashion, ranging from a simple inexpensive model, expandable to a degree of complexity that we cannot now imagine. Automatic programming systems will also be built modularly, thus allowing the use of the same source language on all models. Every hardware module will be available also as a program module. The smallest model of the machine will have all the optional features available as program packages. As hardware modules are added to the machine, in order to increase speed, capacity, etc., the corresponding package in the programming system will be removed. Thus any programs written for a small model can be run without modification on a larger model, and growth of an installation is possible with a minimum of effort and expense.

One word of caution is necessary. We cannot expect these transitions to take place overnight. The present frantic activity in the common-language area has its frivolous side. There seems to be a prevalent attitude that, as soon as these languages have been defined and implemented, all computing problems will be solved. This is, of course, absurd. The time when we may just state our problem to a computer and expect it to decide on a method of solution and deliver the results has not yet come.

in Proceedings of the 1959 Computer Applications Symposium, Armour Research Foundation, Illinois Institute of Technology, Chicago, Ill., Oct. 29, 1959

in Proceedings of the 1959 Computer Applications Symposium, Armour Research Foundation, Illinois Institute of Technology, Chicago, Ill., Oct. 29, 1959

The programming problems listed earlier caused another significant sequence of events to transpire. Roughly two and one-half years ago, AMC jointly with the Sperry Rand Corporation embarked on an automatic programming project which gained considerable interest. This was the AIMACO project, which was to develop a compiler to translate English language into machine code for the Univac 1105. This language and many of the techniques used were based upon the Flowmatic compiler developed for the Univac I by Dr. Grace Hopper.

The story of AIMACO, its trials, tribulations, and so on would in itself make a long story. Suffice it to say that

1. AIMACO was completed, and was used successfully by a large number of programmers in AMC.

2. It gained acceptance as a good approach and technique by virtually all of the skeptics in AMC.

3. It made at least some progress in demonstrating the five basic objectives of the project:

(a) that the communications problems between operating management and programming groups could be materially helped by the use of the English language as the programming language;

(b) that the AMC could more effectively control the development of systems by this technique;

(c) that the schedule for implementing systems could be reduced by such methods;

(d) that the problem of systems modification would be eased because of the better documentation through AIMACO; and

(e) that direct comparison of EDPS would be possible.

Probably one other result of the project was that it very likely hastened the creation of the COBOL effort. The AMC recognized that COBOL represented a logical sequel to the AIMACO project. While AIMACO was designed originally for the 1105, it was intended from the start to produce a similar compiler for the IBM 705. However, with the emergence of COBOL, these plans were changed, so that COBOL was planned to become a common language for computers. This was reasonable primarily because AIMACO and COBOL had the same basic concepts. COBOL had the obvious advantage of stemming from a considerably broader background, because of the time factor, as well as being a voluntary effort in which all the major manufacturers were involved.

COBOL is a more sophisticated language than AIMACO, particularly in the area of allowing program iteration with address modification. So when one of our major data systems was scheduled to be converted to the 1105, the question arose, "Should we use AIMACO or try to get a COBOL compiler?" For several reasons, it was decided that a crash effort would be made to prepare a COBOL compiler. The decision hinged not only on the sophistication of the language, but also upon the fact that most of the compiling for the 1105 in AIMACO was done on a Univac I.

The project got underway officially at a meeting of AMC personnel in April 1960. Involved were three people from our Sacramento installation, one person from our Rome, N.Y., installation, one person from the Dayton Air Force Depot installation, and three people from Headquarters, AMC. Although small in number, these eight were all experienced in the AIMACO project.

The purpose of this meeting was to analyze the COBOL report and select those elements of COBOL we thought made up a minimum required language, review the definition of these parts to be sure all concerned understood them, establish specifications for the compiler, and assign individual jobs to the personnel. Needless to say, considerable thought had gone on before this meeting. The group decided on the following approach:

1. The input-output generator and USE assembly system would remain the same as it was in AIMACO. These pieces were operational on the 1105, were operating very well, and represented a significant amount of effort.

2. To cut down compiling time, sorts would be used whenever possible to arrange the data for "straight-through" processing.

3. To cut down debugging time, the generative technique used to produce the end coding would be designed to localize the major part of the logical manipulation in one place commonly used by all other generators.

4. AIMACO would be used to the fullest extent possible to reduce programming and debugging time further.

One other reason for using AIMACO is also worth mentioning. When a routine is programmed in AIMACO, it is very nearly a subset of a COBOL routine. Therefore, we would be able to get our compiler into its own language with considerably less effort than if we had used machine code.
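The second point in the plan above, sorting so that data can be processed "straight through", is a general pattern of the tape era: once records are sorted on a key, everything belonging to one key is adjacent, so a single forward pass suffices and no random access is needed. The extract does not say which compiler tables were sorted, so the Python sketch below applies the pattern to hypothetical (key, amount) records; the function name `straight_through` is our own.

```python
from itertools import groupby
from typing import Iterable


def straight_through(records: Iterable[tuple[str, int]]) -> list[tuple[str, int]]:
    """Total the amounts for each key using one sort followed by one sequential pass."""
    # Sort step: bring all records with the same key next to each other.
    ordered = sorted(records, key=lambda r: r[0])
    totals = []
    # Straight-through step: read the sorted stream once, front to back.
    # groupby only compares adjacent items, which is exactly why the sort is needed.
    for key, group in groupby(ordered, key=lambda r: r[0]):
        totals.append((key, sum(amount for _, amount in group)))
    return totals


print(straight_through([("B", 2), ("A", 1), ("B", 3), ("C", 4)]))
# [('A', 1), ('B', 5), ('C', 4)]
```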

in Proceedings of the 1960 Computer Applications Symposium, Armour Research Foundation, Illinois Institute of Technology, Chicago, Illinois

employ new methods in many areas of research. Performance of 1 million multiplications on a desk calculator is estimated to require about five years and to cost $25,000. On an early scientific computer, a million multiplications required eight minutes and cost (exclusive of programming and input preparation) about $10. With the recent LARC computer, 1 million multiplications require eight seconds and cost about 50 cents (Householder, 1956). Obviously it is imperative that researchers examine their methods in light of the abilities of the computer.
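The figures quoted above can be compared directly. The short Python calculation below is our own, not the source's; it assumes a 365-day year when converting "about five years". Because every figure is for one million multiplications, seconds per million equals microseconds per multiplication, so the LARC rate works out to about 8 microseconds and 0.00005 cents per multiplication.

```python
# Figures quoted in the extract for one million multiplications: (seconds, dollars).
# Assumption: "about five years" is converted with a 365-day year.
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

MILLION_MULTIPLICATIONS = {
    "desk calculator": (5 * SECONDS_PER_YEAR, 25_000.00),
    "early scientific computer": (8 * 60, 10.00),
    "LARC": (8, 0.50),
}


def improvement(old: str, new: str) -> tuple[float, float]:
    """Return (speed-up factor, cost-reduction factor) of machine `new` over `old`."""
    old_seconds, old_dollars = MILLION_MULTIPLICATIONS[old]
    new_seconds, new_dollars = MILLION_MULTIPLICATIONS[new]
    return old_seconds / new_seconds, old_dollars / new_dollars


print(improvement("early scientific computer", "LARC"))  # (60.0, 20.0)
print(improvement("desk calculator", "LARC"))            # (19710000.0, 50000.0)
```

In other words, LARC was quoted as 60 times faster and 20 times cheaper than the early machine, while the cost saving over the desk calculator is a factor of 50,000.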

It should be noted that much of the information published on computers and their use has not appeared in educational or psychological literature but rather in publications specifically concerned with computers, mathematics, engineering, and business. The following selective survey is intended to guide the beginner into this broad and sometimes confusing area. It is not an exhaustive survey. It is presumed that the reader has access to the excellent Wrigley (1957) article, so the major purpose of this review is to note additions since 1957.

The following topics are discussed: equipment availability, knowledge needed to use computers, general references, programming the computer, numerical analysis, statistical techniques, operations research, and mechanization of thought processes.

Extract: Compiler Systems

A compiler is a translating program written for a particular computer which accepts a form of mathematical or logical statement as input and produces as output a machine-language program to obtain the results.

Since the translation must be made only once, the time required to repeatedly run a program is less for a compiler than for an interpretive system. And since the full power of the computer can be devoted to the translating process, the compiler can use a language that closely resembles mathematics or English, whereas the interpretive languages must resemble computer instructions. The first compiling program required about 20 man-years to create, but use of compilers is so widely accepted today that major computer manufacturers feel obligated to supply such a system with their new computers on installation.
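The argument above, that a compiler pays the translation cost once while an interpretive system pays it on every run, can be shown with a toy. In the hypothetical Python sketch below, "decoding" a statement stands in for translation; the statement names borrow COBOL verbs only for flavour, and the closures produced are Python functions, not machine code. The counters show that the interpreter's decoding work grows with the number of runs while the compiler's does not.

```python
from typing import Callable

# A toy program: a list of (verb, operand) statements applied to an accumulator.
Program = list[tuple[str, int]]

OPS: dict[str, Callable[[int, int], int]] = {
    "ADD": lambda acc, x: acc + x,
    "SUBTRACT": lambda acc, x: acc - x,
    "MULTIPLY": lambda acc, x: acc * x,
}


class Interpreter:
    """Decodes every statement each time the program is run."""

    def __init__(self) -> None:
        self.decodes = 0

    def run(self, program: Program, acc: int) -> int:
        for verb, x in program:
            self.decodes += 1  # decoding cost paid on every run
            acc = OPS[verb](acc, x)
        return acc


class Compiler:
    """Decodes every statement once and returns a reusable callable."""

    def __init__(self) -> None:
        self.decodes = 0

    def compile(self, program: Program) -> Callable[[int], int]:
        steps: list[Callable[[int], int]] = []
        for verb, x in program:
            self.decodes += 1  # decoding cost paid once, at translation time
            op = OPS[verb]
            steps.append(lambda acc, op=op, x=x: op(acc, x))

        def compiled(acc: int) -> int:
            for step in steps:
                acc = step(acc)
            return acc

        return compiled


program = [("ADD", 5), ("MULTIPLY", 3), ("SUBTRACT", 2)]
interp, comp = Interpreter(), Compiler()
run_compiled = comp.compile(program)
for _ in range(100):
    assert interp.run(program, 0) == run_compiled(0) == 13
print(interp.decodes, comp.decodes)  # 300 3
```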

Compilers, like the interpretive systems, reflect the needs of various types of users. For example, the IBM computers use "FORTRAN" for scientific programming and "9 PAC" and "ComTran" for commercial data processing; the Sperry Rand computers use "Math-Matic" for scientific programming and "Flow-Matic" for commercial data processing; Burroughs provides "FORTOCOM" for scientific programming and "BLESSED 220" for commercial data processing.

There is some interest in the use of "COBOL" as a translation system common to all computers.

in Proceedings of the 1960 Computer Applications Symposium, Armour Research Foundation, Illinois Institute of Technology, Chicago, Illinois

in The Computer Journal 4(3) October 1961

Powerful New Programming Aids Announced for IBM 1401 Computer

International Business Machines Corp.

Data Processing Division

White Plains, N.Y.

Users of one of the widely-accepted data processing systems, the IBM 1401, will be able to get their computers "on the air" faster at far lower cost with three new programming systems announced in April by this company.

The three aids (COBOL, Autocoder and an Input/Output Control System) are powerful additions to a broad selection of advanced programming systems and routines already available to 1401 users. The new additions make the programming package for the 1401 a very comprehensive set of programming tools.

COBOL

The IBM 1401 COBOL programming system will enable the 1401 to generate its own internal "machine language" instructions from programs whose statements closely follow written English usage and syntax. By permitting 1401 programmers to communicate with the computer in familiar terms related to business operations, the 1401 COBOL system will free them from the use of detailed machine language codes. This can mean significant savings in programming time and cost for 1401 users.

COBOL (Common Business Oriented Language) is the result of work by the Conference on Data Systems Languages (CODASYL), a voluntary cooperative effort of various computer manufacturers and users under the sponsorship of the U.S. Department of Defense. COBOL programming systems are also being developed by IBM for other computers, including the IBM 705, 705 III, 709, 1410, 7070, 7080 and 7090.

Autocoder

Autocoder is a symbolic language of easily remembered operation codes and names or symbols. Data and instructions can be referred to by meaningful labels, such as "WHTX" for withholding tax in a payroll operation, rather than by specific 1401 core storage locations.

The IBM 1401 Autocoder programming system will automatically assemble a program written in Autocoder language, translate the coded symbols into instructions in 1401 machine language and assign locations within the computer's memory for both data and instructions. Autocoder includes a number of single commands, each of which can generate sequences of machine language instructions. Like other programming systems, it relieves the 1401 programmer of much time-consuming clerical work.
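The two jobs described above, turning symbolic names such as "WHTX" into storage addresses and assigning locations to data and instructions, are classically done in two passes, because an instruction may refer to a label that is only defined later in the program. The Python sketch below is a hypothetical, much simplified model, not 1401 Autocoder: the mnemonics (`LOAD`, `SUB`, `STORE`, `DC`), the fixed instruction length, the field sizes and the origin address are all invented for illustration, and real 1401 addressing conventions are ignored.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Line:
    label: Optional[str]    # symbolic name defined on this line, e.g. "WHTX"
    op: str                 # hypothetical mnemonic; "DC" reserves a data area here
    operand: Optional[str]  # symbolic name referred to, if any
    length: int             # storage positions occupied by this line


def assemble(lines: list[Line], origin: int = 0):
    """Two-pass assembly: pass 1 builds the symbol table, pass 2 resolves operands."""
    # Pass 1: walk the program with a location counter, recording each label's address.
    symbols: dict[str, int] = {}
    loc = origin
    for line in lines:
        if line.label is not None:
            if line.label in symbols:
                raise ValueError(f"duplicate label {line.label}")
            symbols[line.label] = loc
        loc += line.length

    # Pass 2: emit (address, op, resolved operand address) for every line.
    output: list[tuple[int, str, Optional[int]]] = []
    loc = origin
    for line in lines:
        if line.operand is not None and line.operand not in symbols:
            raise ValueError(f"undefined symbol {line.operand}")
        target = symbols[line.operand] if line.operand is not None else None
        output.append((loc, line.op, target))
        loc += line.length
    return symbols, output


program = [
    Line(None, "LOAD", "GROSS", 7),
    Line(None, "SUB", "WHTX", 7),  # subtract withholding tax
    Line(None, "STORE", "NET", 7),
    Line("GROSS", "DC", None, 6),
    Line("WHTX", "DC", None, 6),
    Line("NET", "DC", None, 6),
]
symbols, code = assemble(program, origin=100)
print(symbols)  # {'GROSS': 121, 'WHTX': 127, 'NET': 133}
```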

Input/Output Control System

The Input/Output Control System (IOCS) for the 1401 will provide programmers with instructions and generalized routines that automatically perform the various input/output operations of the computer. With IOCS, the 1401's input/output units can be programmed with only four commands: "GET," "PUT," "OPEN" and "CLOSE". Since about forty per cent of the instructions in a typical machine-coded computer program are related to the machine's input/output operations, the IOCS can save considerable 1401 programming time and effort by efficiently scheduling these computer functions.
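The appeal of IOCS is that the program sees only four operations while the control system handles details such as grouping records into blocks on tape. The Python class below is a hypothetical model of that division of labour, not IBM's IOCS: the method names mirror the four commands, the "tape" is an in-memory list of blocks, and the `blocking_factor` parameter and the `"input"`/`"output"` modes are invented for illustration.

```python
from typing import Optional


class RecordFile:
    """Hypothetical record file: callers use only open/get/put/close,
    while grouping records into blocks is handled internally."""

    def __init__(self, blocking_factor: int, blocks: Optional[list[list[str]]] = None) -> None:
        if blocking_factor < 1:
            raise ValueError("blocking factor must be at least 1")
        self.blocking_factor = blocking_factor
        self.blocks: list[list[str]] = blocks if blocks is not None else []  # simulated tape
        self._buffer: list[str] = []
        self._next_block = 0
        self._mode: Optional[str] = None

    def open(self, mode: str) -> None:
        if mode not in ("input", "output"):
            raise ValueError("mode must be 'input' or 'output'")
        self._mode, self._buffer, self._next_block = mode, [], 0
        if mode == "output":
            self.blocks = []  # writing starts a fresh tape

    def get(self) -> Optional[str]:
        """Return the next record, reading another block when needed; None at end of file."""
        self._require("input")
        while not self._buffer:
            if self._next_block >= len(self.blocks):
                return None
            self._buffer = list(self.blocks[self._next_block])
            self._next_block += 1
        return self._buffer.pop(0)

    def put(self, record: str) -> None:
        """Add a record, writing a full block to tape whenever the buffer fills."""
        self._require("output")
        self._buffer.append(record)
        if len(self._buffer) == self.blocking_factor:
            self.blocks.append(self._buffer)
            self._buffer = []

    def close(self) -> None:
        if self._mode == "output" and self._buffer:
            self.blocks.append(self._buffer)  # flush a final, partly filled block
        self._mode, self._buffer = None, []

    def _require(self, mode: str) -> None:
        if self._mode != mode:
            raise RuntimeError(f"file is not open for {mode}")


tape_in = RecordFile(3, [["A", "B", "C"], ["D", "E"]])
tape_out = RecordFile(2)
tape_in.open("input")
tape_out.open("output")
while (record := tape_in.get()) is not None:
    tape_out.put(record.lower())
tape_in.close()
tape_out.close()
print(tape_out.blocks)  # [['a', 'b'], ['c', 'd'], ['e']]
```

The copy loop never mentions blocks: the input file is blocked in threes and the output in twos, yet the program logic is the same four calls, which is the kind of saving the announcement describes.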

Availability

The 1401 COBOL system will be initially available in the first quarter of 1962 for 1401s with core storage capacities of 8,000, 12,000 or 16,000 characters. A version for 1401s with 4,000 positions of core memory will become available during the second quarter of 1962. A 1401 will also require certain other standard units, such as magnetic tape drives, and special features to be able to handle COBOL.

The 1401 Autocoder is now available.

The 1401 IOCS will be made available in the third quarter of 1961.

COBOL, other 1401 programming aids, Autocoder, and IOCS have been designed to match the capabilities of specific configurations of the 1401.

This package of programming aids for the 1401 represents many man-years of preparation and testing by IBM. Besides the three new systems, the aids range from pre-written routines, which perform many of the everyday operations of the 1401, to other highly-refined programming systems that provide various simplified languages to speed program writing. Most of the programs developed for the 1401 can be used without revision for the larger, more powerful IBM 1410 data processing system. This programming compatibility permits ready systems expansion by firms with growing volumes and expanding computer needs.

The IBM 1401 is a small-to-medium-size computer available in a wide variety of configurations which can be tailored to individual user requirements. It has four basic models: punched card, magnetic tape, RAMAC and RAMAC/tape. With a monthly rental that starts below $2,500, the 1401 is bringing advanced data processing methods and numerous operating features previously found only in larger, more costly computers within the reach of many smaller firms.

in Computers and Automation May 1961

in [ACM] CACM 4(01) (Jan 1961)

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (2) 1961 Pergamon Press, Oxford

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (2) 1961 Pergamon Press, Oxford

Univac LARC is designed for large-scale business data processing as well as scientific computing. This includes any problems requiring large amounts of input/output and extremely fast computing, such as data retrieval, linear programming, language translation, atomic codes, equipment design, large-scale customer accounting and billing, etc.

University of California

Lawrence Radiation Laboratory

Located at Livermore, California, the system is used for the solution of differential equations.


Outstanding features are ultra high computing speeds and the input-output control completely independent of computing. Due to the Univac LARC's unusual design features, it is possible to adapt any source of input/output to the Univac LARC. It combines the advantages of Solid State components, modular construction, overlapping operations, automatic error correction and a very fast and a very large memory system.


Outstanding features include a two computer system (arithmetic, input-output processor); decimal fixed or floating point with provisions for double precision arithmetic; single bit error detection of information in transmission and arithmetic operation; and balanced ratio of high speed auxiliary storage with core storage.

Unique system advantages include a two computer system, which allows versatility and flexibility for handling input-output equipment, and program interrupt on programmer contingency and machine error, which allows greater ease in programming.

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (2) 1961 Pergamon Press, Oxford

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (2) 1961 Pergamon Press, Oxford

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (2) 1961 Pergamon Press, Oxford

The "commercial" papers are mostly devoted to COBOL. There is a detailed description, by Jean E. Sammet, and a paper of general views on COBOL, by the same author, also "A critical discussion of COBOL," by several members of the British Computer Society. A long paper by R. F. Clippinger describes FACT, a commercial language developed for the HONEYWELL 800, and compares it in considerable detail with COBOL and IBM Commercial Translator, aiming to show the superiority of FACT over these languages. "The growth of a commercial programming language" by H. D. Baeker describes SEAL, a language developed for the Stantec Data Processing System, and again aims to demonstrate its superiority over COBOL.

in The Computer Bulletin June 1962

COBOL was developed by the COBOL Committee, which is a subcommittee of a larger committee called the "Conference on Data Systems Languages." That conference has an Executive Committee and, in addition to the COBOL Committee, a Development Committee. Within the Development Committee are two working groups: the Language Structure Group (whose members are Roy Goldfinger of IBM, Robert Bosak of SDC, Carey Dobbs of Sperry Rand, Renee Jasper of Navy Management Office, William Keating of National Cash Register, George Kendrick of General Electric, and Jack Porter of Mitre Corporation) and the Systems Group. Both of these groups are interested in going beyond COBOL to further simplify the work of problem preparation. The Systems Group has concentrated on an approach which represents a generalization of TABSOL in GE's GECOM. The driving force behind this effort is Burt Grad.

The Language Structure Group has taken a different approach. It notes that FACT, COBOL, and other current data-processing compilers are "procedure-oriented." That is, they require a solution to the data-processing problem to be worked out in detail, they are geared to the magnetic-tape computer, and they assume that information is available in files on magnetic tape, sorted according to some keys. The Language Structure Group felt that an information algebra could be defined which would permit the definition of a data-processing problem without postulating a solution. It felt that if this point were reached, then certain classes of problems could be handled by compilers that would, in effect, invent the necessary procedures for the problem solution.

One sees a trend in this direction in FLOW-MATIC, COBOL, Commercial Translator, and FACT if one notes that a sort can be specified in terms of the input file and the output file, with no discussion of the technique required to create strings of ordered items and merge these strings. Similarly, a report writer specifies where the information is to come from and how it is to be arranged on the printed page, and the compiler generates a program which accomplishes the purpose without the programmer having to supply the details. The FACT update verb specifies a couple of input files and criteria for matching. FACT then generates a program which does the housekeeping of reading records from both files, matching them, and going to appropriate routines depending on whether there is a match, an extra item, a missing item, etc. A careful study of existing compilers will reveal many areas where the compiler supplies a substantial program as a result of having been given some information about data and interrelationships between the data. The Language Structure Group has by no means provided a technique of solving problems once they are stated in abstract form. Indeed, it is clear that this problem is not soluble in this much generality. All the Language Structure Group claims is to provide an information algebra which may serve as a stimulus to the development of compilers with somewhat larger abilities to invent solutions in restricted cases. The work of the Language Structure Group will be described in detail in a paper which will appear shortly in the Communications of the ACM.
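The FACT update verb described above amounts to a match-merge over two files sorted on a common key. The following is a minimal Python sketch, not FACT's generated code. It assumes each file is a list of dict records already sorted ascending on `key`, with at most one transaction per master record. The function name `match_files` is hypothetical, and so is the mapping of "extra" and "missing" onto the two unmatched cases.

```python
from typing import Any, Dict, Iterator, List, Optional, Tuple

Record = Dict[str, Any]

def match_files(
    master: List[Record], trans: List[Record], key: str
) -> Iterator[Tuple[str, Optional[Record], Optional[Record]]]:
    """Pair records of two files, each sorted ascending on `key`.

    Assumed labelling: ("match", m, t) when keys agree, ("missing", m, None)
    when a master record has no transaction, ("extra", None, t) when a
    transaction has no master record. At most one transaction per key.
    """
    i = j = 0
    while i < len(master) or j < len(trans):
        if j == len(trans) or (i < len(master) and master[i][key] < trans[j][key]):
            yield ("missing", master[i], None)   # master key not in transactions
            i += 1
        elif i == len(master) or trans[j][key] < master[i][key]:
            yield ("extra", None, trans[j])      # transaction key not in master
            j += 1
        else:
            yield ("match", master[i], trans[j])  # same key in both files
            i += 1
            j += 1

master_file = [{"part": 1}, {"part": 3}, {"part": 5}]
transaction_file = [{"part": 1}, {"part": 2}, {"part": 5}]
cases = [case for case, _, _ in match_files(master_file, transaction_file, "part")]
```

The programmer then supplies only the routine to run for each of the three cases. The reading, comparing and advancing of both files is the housekeeping the compiler takes over.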

Basic concepts

The algebra is built on three undefined concepts: ENTITY, PROPERTY and VALUE. These concepts are related by the following two rules which are used as the basis of two specific postulates:

(a) Each PROPERTY has a set of VALUES associated with it.

(b) There is one and only one VALUE associated with each PROPERTY of each ENTITY.

The data of data processing are collections of VALUES of certain selected PROPERTIES of certain selected ENTITIES. For example, in a payroll application, one class of ENTITIES is the employees. Some of the PROPERTIES which may be selected for inclusion are EMPLOYEE NUMBER, NAME, SEX and PAY RATE. A payroll file would then contain a set of VALUES of these PROPERTIES for each ENTITY.
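The two rules can be mirrored directly in a small Python illustration, which does not come from the paper. Here an ENTITY's data is a dict from PROPERTY name to VALUE, so a second VALUE for the same PROPERTY cannot even be stored. The value sets below cover only a few payroll PROPERTIES and are hypothetical.

```python
from typing import Dict, Hashable, Set

# Rule (a): each PROPERTY has a set of VALUES. These value sets are hypothetical.
PROPERTY_VALUE_SETS: Dict[str, Set[Hashable]] = {
    "EMPLOYEE NUMBER": set(range(1000, 10000)),
    "SEX": {"M", "F"},
    "PAY RATE": {1.50, 1.75, 2.00},
}

def is_valid_entity(values: Dict[str, Hashable]) -> bool:
    """Rule (b): one and only one VALUE for each selected PROPERTY.

    A dict holds one VALUE per PROPERTY key, so the remaining checks are that
    exactly the selected PROPERTIES are present and each VALUE is in its set.
    """
    return values.keys() == PROPERTY_VALUE_SETS.keys() and all(
        v in PROPERTY_VALUE_SETS[p] for p, v in values.items()
    )

# A payroll file: the VALUES of the selected PROPERTIES for each ENTITY.
payroll_file = [
    {"EMPLOYEE NUMBER": 1001, "SEX": "F", "PAY RATE": 2.00},
    {"EMPLOYEE NUMBER": 1002, "SEX": "M", "PAY RATE": 1.75},
]
```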


Property spaces

Following the practice of modern algebra, the Information Algebra deals with sets of points in a space. The set of VALUES assigned to a PROPERTY is called its PROPERTY VALUE SET. Each PROPERTY VALUE SET contains at least two VALUES: U (undefined, not relevant) and II (missing, relevant but not known). For example, the draft status of a female employee would be undefined and not relevant, whereas if its value were missing for a male employee it would be relevant but not known. Several definitions are introduced:

(1) A co-ordinate set (Q) is a finite set of distinct properties.

(2) The null co-ordinate set contains no properties.

(3) Two co-ordinate sets are equivalent if they contain exactly the same properties.

(4) The property space (P) of a co-ordinate set (Q) is the cartesian product $P = V_1 \times V_2 \times V_3 \times \cdots \times V_n$, where $V_i$ is the property value set assigned to the $i$th property of Q. Each point (p) of the property space will be represented by an n-tuple $p = (a_1, a_2, a_3, \ldots, a_n)$, where $a_i$ is some value from $V_i$. The ordering of properties in the set is for convenience only. If $n = 1$, then $P = V_1$. If $n = 0$, then P is the Null Space.

(5) The datum point (d) of an entity (e) in a property space (P) is a point of P such that if $d = (a_1, a_2, a_3, \ldots, a_n)$, then $a_i$ is the value assigned to e from the $i$th property value set of P for $i = 1, 2, 3, \ldots, n$. (d) is the representation of (e) in (P). Thus, only a small subset of the points in a property space are datum points. Every entity has exactly one datum point in a given property space.
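The definitions of property space and datum point translate into a cartesian product and a tuple lookup. This is a Python sketch under stated assumptions. The strings "undefined" and "missing" stand in for the two special VALUES. The DRAFT STATUS codes are hypothetical. Modelling the Null Space as the single empty tuple is a choice of this sketch, not the paper's.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

# Stand-ins for the two special VALUES every property value set contains.
UNDEFINED = "undefined"  # not relevant, e.g. draft status of a female employee
MISSING = "missing"      # relevant but not known

def value_set(*values: str) -> Tuple[str, ...]:
    """Build a property value set that always includes the two special VALUES."""
    return (UNDEFINED, MISSING) + values

# Hypothetical property value sets V_i, keyed by property name.
V: Dict[str, Tuple[str, ...]] = {
    "SEX": value_set("M", "F"),
    "DRAFT STATUS": value_set("1-A", "4-F"),
}

def property_space(q: Sequence[str]) -> List[Tuple[str, ...]]:
    """P = V_1 x V_2 x ... x V_n for the co-ordinate set q.

    With n == 0, product() yields one empty tuple (this sketch's Null Space).
    """
    return list(product(*(V[p] for p in q)))

def datum_point(entity: Dict[str, str], q: Sequence[str]) -> Tuple[str, ...]:
    """The n-tuple (a_1, ..., a_n) of the entity's VALUES, in the order of q."""
    return tuple(entity[p] for p in q)

Q = ("SEX", "DRAFT STATUS")
employee = {"SEX": "F", "DRAFT STATUS": UNDEFINED}
```

Listing the whole space is only practical for toy value sets like these. As the extract notes, the datum points of a real file are a small subset of the space.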


Lines and functions of lines

A line (L) is an ordered set of points chosen from P.

The span (n) of the line is the number of points comprising the line. A line L of span n is written as $L = (p_1, p_2, \ldots, p_n)$. The term line is introduced to provide a generic term for a set of points which are related. In a payroll application the datum points might be individual records for each person for five working days of the week. These five records plotted in the property space would represent a line of span five.

A function of lines (FOL) is a mapping that assigns one and only one value to each line in P. The set of distinct values assigned by an FOL is the value set of the FOL. It is convenient to write an FOL in the functional form f(X), where f is the FOL and X is the line. In the example of the five points representing work records for five days' work, an FOL for such a line might be the weekly gross pay of an individual, which would be the hours worked times pay rate summed over the five days of the week. An Ordinal FOL (OFOL) is an FOL whose value set is contained within some defining set for which there exists a relational operator R which is irreflexive, asymmetric, and transitive. An example of such an operator is "less than" on the set of real numbers.
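The weekly gross pay example is easy to state as code. In this Python sketch a line is a list of per-day points and an FOL is any function returning one value per line. The `DayRecord` fields are hypothetical. Because gross pay is a real number and "less than" orders real numbers, the same function also acts as an OFOL, which the sketch uses as a sort key.

```python
from typing import List, NamedTuple

class DayRecord(NamedTuple):
    """One datum point: a single working day for one employee (hypothetical fields)."""
    employee: int
    hours: float
    pay_rate: float

Line = List[DayRecord]  # an ordered set of points; its span is len(line)

def weekly_gross_pay(line: Line) -> float:
    """An FOL: one value per line, hours worked times pay rate summed over the line."""
    return sum(p.hours * p.pay_rate for p in line)

# Gross pay values are real numbers ordered by "less than", so this FOL is
# also an OFOL and can order lines.
week_a: Line = [DayRecord(1, 8.0, 2.00)] * 5
week_b: Line = [DayRecord(2, 7.5, 1.75)] * 5
by_pay = sorted([week_a, week_b], key=weekly_gross_pay)
```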

Areas and functions of areas

An area is any subset of the property space P; thus the representation of a file in the property space is an area. A function of an area (FOA) is a mapping that assigns one and only one value to each area. The set of distinct values assigned by an FOA is defined to be the value set of the FOA. It is convenient to write an FOA in the functional form f(X), where f is the FOA and X is the area.
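Since an area is just a set of points, an FOA is any function that maps such a set to a single value. The following short Python sketch uses hypothetical (EMPLOYEE NUMBER, PAY RATE) points. Summing PAY RATE is only one possible FOA, chosen for illustration.

```python
from typing import Callable, FrozenSet, Tuple

Point = Tuple[int, float]    # hypothetical (EMPLOYEE NUMBER, PAY RATE) point
Area = FrozenSet[Point]      # any subset of the property space, e.g. a file

def total_pay_rate(area: Area) -> float:
    """An FOA: one value per area, here the sum of PAY RATE over its points."""
    return sum(rate for _, rate in area)

def select(area: Area, keep: Callable[[Point], bool]) -> Area:
    """Any subset of an area is itself an area."""
    return frozenset(p for p in area if keep(p))

payroll: Area = frozenset({(1001, 2.00), (1002, 1.50), (1003, 1.75)})
```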


Bundles and functions of bundles

In data-processing applications, related data from several sources must be grouped. Various types of functions can be applied to these data to define new information. For example, files are merged to create a new file. An area set $\mathcal{A}$ of order n is an ordered n-tuple of areas, $\mathcal{A} = (A_1, A_2, \ldots, A_n)$. Area set is a generic term for a collection of areas in which the order is significant. It consists of one or more areas which are considered simultaneously; for example, a transaction file and a master file form an area set of order 2.

Definition:

The Bundle $B = B(b, \mathcal{A})$ of an area set $\mathcal{A}$ for a selection OFOL b is the set of all lines L such that if

(a) $\mathcal{A} = (A_1, A_2, \ldots, A_n)$ and

(b) $L = (p_1, p_2, \ldots, p_n)$, where $p_i$ is a point of $A_i$ for $i = 1, 2, \ldots, n$,

then $b(L) = \text{True}$.

A bundle thus consists of a set of lines each of order n, where n is the order of the area set. Each line in the bundle contains one, and only one, point from each area. The concept of bundles gives us a method of conceptually linking points from different areas so that they may be considered jointly. As an example, consider two areas whose names are "Master File" and "Transaction File," each containing points with the property Part Number. The bundling function is the Part Number. The lines of the bundle are the pairs of points representing one Master File record and one Transaction File record with the same part number.

A function of a bundle (FOB) is a mapping that assigns an area to a bundle such that

(a) there is a many-to-one correspondence between the lines in the bundle and the points in the area;

(b) the value of each property of each point in the area is defined by an FOL of the corresponding line of the bundle;

(c) the value set of such an FOL must be a subset of the corresponding property value set.

The function of a bundle assigns a point to each line of the bundle; thus a new Master File record is assigned to each pair made up of an old Master File record and a matching Transaction File record.
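The Master File / Transaction File example can be restated directly from the definitions. The following Python sketch is a literal reading rather than an efficient method. It enumerates every n-tuple with `itertools.product` and keeps the lines the selection function accepts, so its cost grows with the product of the area sizes. The field names and the on-hand update rule in `new_master` are hypothetical.

```python
from itertools import product
from typing import Callable, Dict, List, Sequence, Tuple

Point = Dict[str, int]
Line = Tuple[Point, ...]

def bundle(areas: Sequence[List[Point]], b: Callable[[Line], bool]) -> List[Line]:
    """B(b, A): every line (p_1, ..., p_n), p_i taken from A_i, for which b is true."""
    return [line for line in product(*areas) if b(line)]

def same_part(line: Line) -> bool:
    """Hypothetical selection function: all points carry the same Part Number."""
    return len({p["part"] for p in line}) == 1

def new_master(line: Line) -> Point:
    """Hypothetical FOB rule: one new Master File point per line, each property
    given by an FOL of that line (old quantity on hand plus quantity received)."""
    master, trans = line
    return {"part": master["part"], "on_hand": master["on_hand"] + trans["received"]}

master_file = [{"part": 1, "on_hand": 10}, {"part": 2, "on_hand": 5}]
transaction_file = [{"part": 2, "received": 3}]
B = bundle([master_file, transaction_file], same_part)
new_master_file = [new_master(line) for line in B]
```

In this sketch part 1, which has no transaction, does not appear in the bundle, so it does not appear in the new area either.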


Glumps and functions of glumps

If A is an area and g is an FOL defined over lines of span 1 in A, then a Glump $G = G(g, A)$ of an area A for an FOL g is a partition of A by g such that an element of this partition consists of all points of A that have identical values for g. The function g will be called the Glumping Function for G. The concept of a glump does not imply an ordering either of points within an element, or of elements within a glump. As an example, let a back order consist of points with the three non-null properties: Part Number, Quantity Ordered, and Date. Define a glumping function to be Part Number. Each element of this glump consists of all orders for the same part. Different glump elements may contain different numbers of points. A function of a glump (FOG) is a mapping that assigns an area to a glump such that

(a) there is a many-to-one correspondence between the elements of the glump and the points of the area;

(b) the value of each property of a point in the assigned area is defined by an FOA of the corresponding element in the glump;

(c) the value set of the FOA must be a subset of the corresponding property value set.
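A glump is a partition by the value of a function of single points, much like grouping records by key. The following Python sketch uses hypothetical back-order points. The glumping function is Part Number, as in the example. The FOG, named here as a hypothetical `back_order_summary`, yields one point per glump element, and each property of that point is an FOA of the element.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, Hashable, List

Point = Dict[str, Any]

def glump(area: List[Point], g: Callable[[Point], Hashable]) -> Dict[Hashable, List[Point]]:
    """G(g, A): partition A so each element holds every point with the same value
    of g. The dict keys only label the elements; no ordering is implied."""
    elements: Dict[Hashable, List[Point]] = defaultdict(list)
    for p in area:
        elements[g(p)].append(p)
    return dict(elements)

def back_order_summary(glumped: Dict[Hashable, List[Point]]) -> List[Point]:
    """Hypothetical FOG: one point per glump element, each property an FOA of it."""
    return [
        {"part": part, "quantity": sum(p["qty"] for p in element), "orders": len(element)}
        for part, element in glumped.items()
    ]

back_orders = [
    {"part": 7, "qty": 2, "date": "1962-01-05"},
    {"part": 9, "qty": 1, "date": "1962-01-06"},
    {"part": 7, "qty": 4, "date": "1962-01-09"},
]
G = glump(back_orders, lambda p: p["part"])
```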

in The Computer Journal 5(3) October 1962

Aims

What do we want from these Automatic Programming Languages? This is a more difficult question to answer than appears on the surface as more than one participant in the recent Conference of this title made clear. Two aims are paramount: to make the writing of computer programs easier and to bring about compatibility of use between the computers themselves. Towards the close of the Proceedings one speaker ventured that we were nowhere near achieving the second nor, indeed, if COBOL were to be extended any further, to achieving the first.

These aims can be amplified. Easier writing of programs implies that they will be written in less, perhaps in much less, time, that people unskilled in the use of machine language will still be able to write programs for computers after a minimum of training, that programs will be written in a language more easily read and followed, even by those completely unversed in the computer art, such as business administrators, that even the skilled in this field will be relieved of the tedium of writing involved machine language programs, time-consuming and prone to error as this process is. Compatibility of use will permit a ready exchange of programs and applications between installations and even of programmers themselves (if this is an advantage!), for the preparation of programs will tend to be more standardised as well as simplified. Ultimately, to be complete, this compatibility implies one universal language which can be implemented for all digital computers.


COBOL

While there has been probably even more controversy over COBOL than over ALGOL, it has ultimately gained acceptance quicker, at any rate in the USA. This acceptance was certainly aided by the attitude of the US Dept. of Defence. The body responsible for constructing COBOL (CODASYL, the Conference on Data Systems Languages) had been set up under its sponsorship, and it attempted to enforce COBOL's use by insisting that all the computers which it purchased (and it is by far the largest owner of computers in the USA) should be able to implement the COBOL language.

In the UK, however, the attitude to COBOL was lukewarm and its acceptance slower for many reasons. The distance between the potential implementers and the source of the COBOL reports led to a lack of drive in obtaining acceptance, as did a certain apparent vagueness and lack of definition about the language itself. Furthermore, the descriptions of COBOL were tape-orientated, and in this country the use of tape had been pared to a minimum, so that the equipment to implement COBOL was not available and, contrariwise, there was vast capital investment in the present equipment. And this is a conservative country anyway.

A significant point in the history of this acceptance of COBOL in the UK came about 18 months earlier, when a Working Committee of the British Computer Society, set up to study COBOL in detail, advised that they could not recommend the language as it then was, and that experience could as well be gained from the manufacturers' own languages. ICT was the only manufacturer to ignore this advice; the others mainly pursued their own developments until recently.

in The Computer Bulletin September 1962

A problem of continuing concern to the computer programmer is that of file design: Given a collection of data to be processed, how should these data be organized and recorded so that the processing is feasible on a given computer, and so that the processing is as fast or as efficient as required? While it is customary to associate this problem exclusively with business applications of computers, it does in fact arise, under various guises, in a wide variety of applications: data reduction, simulation, language translation, information retrieval, and even to a certain extent in the classical scientific application. Whether the collections of data are called files, or whether they are called tables, arrays, or lists, the problem remains essentially the same.

The development and use of data processing compilers places increased emphasis on the problem of file design. Such compilers as FLOW-MATIC of Sperry Rand, Air Materiel Command's AIMACO, SURGE for the IBM 704, SHARE's 9PAC for the 709/7090, Minneapolis-Honeywell's FACT, and the various COBOL compilers each contain methods for describing, to the compiler, the structure and format of the data to be processed by compiled programs. These description methods in effect provide a framework within which the programmer must organize his data. Their value, therefore, is closely related to their ability to yield, for a wide variety of applications, a data organization which is both feasible and practical.

To achieve the generality required for widespread application, a number of compilers use the concept of the multi-level, multi-record-type file. In contrast to the conventional file which contains records of only one type, the multi-level file may contain records of many types, each having a different format. Furthermore, each of these record types may be assigned a hierarchical relationship to the other types, so that a typical file entry may contain records with different levels of "significance." This article describes an approach to the design and processing of multi-level files. This approach, designated the property classification method, is a composite of ideas taken from the data description methods of existing compilers. The purpose in doing this is not so much to propose still another file design method as it is to emphasize the principles underlying the existing methods, so that their potential will be more widely appreciated.
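The article's own property classification method is not reproduced in this extract. The following Python sketch is only a generic illustration of a multi-level, multi-record-type file. It assumes each record carries a level number, with 1 as the most significant, and it nests every record under the nearest preceding record of a lower level. The record types CUSTOMER, ORDER and ITEM are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List

@dataclass
class Record:
    level: int                       # 1 = most significant record type in an entry
    rtype: str                       # hypothetical record-type name
    fields: Dict[str, Any] = field(default_factory=dict)
    children: List["Record"] = field(default_factory=list)

def build_entries(flat: List[Record]) -> List[Record]:
    """Nest a flat record sequence into file entries by level number.

    Each record becomes a child of the nearest preceding record with a smaller
    level number; a record with no such predecessor starts a new entry.
    """
    entries: List[Record] = []
    open_path: List[Record] = []     # records the next one may still nest under
    for rec in flat:
        while open_path and open_path[-1].level >= rec.level:
            open_path.pop()
        (open_path[-1].children if open_path else entries).append(rec)
        open_path.append(rec)
    return entries

flat_file = [
    Record(1, "CUSTOMER", {"id": 17}),
    Record(2, "ORDER", {"no": 1}),
    Record(3, "ITEM", {"part": 7}),
    Record(3, "ITEM", {"part": 9}),
    Record(2, "ORDER", {"no": 2}),
    Record(1, "CUSTOMER", {"id": 18}),
]
entries = build_entries(flat_file)
```

Each record type here keeps its own field layout, which is the point of a multi-record-type file. The level number alone carries the hierarchical "significance."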

in [ACM] CACM 5(08) August 1962

in [ACM] CACM 5(05) May 1962

Q: Would you describe some of the major contracts which CSC has obtained?

A: One of our first projects included development of the FACT language, the design of its compiler, and implementation of most of the FACT processor.

Later, CSC developed the LARC Scientific Compiler, an upgraded variant of the FORTRAN II language, and designed the ALGOL/ FORTRAN compiler for the RCA 601.

Recently, we have finished other complete systems for large scale computers such as the UNIVAC III and 1107. By complete systems, I mean the algebraic and business compilers, executive system, assembly program and other routines.

For the Philco 2000, we developed ALTAC IV, COBOL-61, and a very general report generator.

An assembly program for a Daystrom process control computer, a general sort-merge for another computer, several simulators, and many others at this level of effort have been accomplished.

In areas concerned with applications, we developed two PERT/COST systems for aircraft manufacturers and are responsible for the design and implementation of a command and control system at Jet Propulsion Lab for use by NASA in many of our space probes scheduled later this year and beyond.

In the scientific area we have worked on damage assessment models, data acquisition and reduction systems, re-entry and trajectory analysis, maneuver simulation, orbit prediction and determination, stress analysis, heat transfer, impact prediction, kill probabilities, war gaming, and video data analysis.

In the commercial applications area, we have produced systems for payroll and cost accounting, material inventory, production control, sales analysis and forecasting, insurance and banking problems, etc.

Extract: On Cobol and possible commercial Algols

Q: Since you've raised the subject of "other" compilers, what is your opinion of the COBOL compiler as far as compatibility, efficiency and ease of use are concerned?

A: As to the first part of the question, I can't believe now, and I haven't believed since the first meeting of CODASYL, in which I participated, that the language stands a ghost of a chance of becoming common within the definition that a common language may be taken from one computer library and used immediately in another without a measurable change.

Those who felt that this could be done were not entirely familiar with some of the problems involved, and consequently overlooked several important and basic reasons why effective compatibility cannot be achieved with a language such as COBOL.

With respect to the efficiency of the language in problem statement, COBOL suffers the fate that any narrative-based language would: there must be a better way, and I personally believe that there is, namely through the use of a strong symbolic language form. As far as concerns the permissible efficiency allowed the compiler by the COBOL language, this is doubtless much lower than need be.

Insofar as ease of use is concerned, COBOL is without doubt easier to use than machine language, but also doubtless harder to use than a language should be. Most to whom I've spoken on the subject say they think it ranks fourth in ease of use and power in a list which includes COBOL, FACT, COMTRAN, and JOVIAL.

All of this isn't to state that COBOL should not have been done. Contrarily, I believe it was and is a worthwhile development when considered in proper perspective. I don't believe, however, that its development has attained any of the major goals which its promoters asserted were cinches; I don't believe it is or will be "common," or that it is qualified to be; I don't believe that it's a good communication language, even though its promoters said that "vice-presidents could read it and understand what's intended after an afternoon's instruction," a laughable assertion now, as it was then; I lastly don't believe it's easy to teach: there are too many exceptions, too many disconformities, too many singularities.

All of these points of criticism would, in general, be true of any language developed at the time, particularly if narrative-based, including, though it is often believed to a lesser extent, FACT, COMTRAN, and others. The pity, then, is not that COBOL was developed, but rather that naivete and heavy-handedness forced it upon the field to the point that other developments are stifled for whatever period of time is required for the field to purge itself of the notion that COBOL is the panacea for language problems; then, parallel efforts using different approaches may resume and continue to a healthier conclusion. In the meantime, computer manufacturers, the principal source for new language development, are naturally inclined to produce only that which is in demand, not engaging in much parallel language development, in an effort to keep costs low.

As I stated before, I believe the field could eventually rely on a strong symbolic language for the statement of business problems. This form would provide conciseness and clarity, would be much more easily and efficiently processed than narrative forms, and would stand a much greater chance of becoming common. I'm convinced that relatively few programmers using a strong symbolic language could get a lot more done than hordes using a narrative-based form.

Q: Assuming that COBOL has many disadvantages, wouldn't the use of a symbolic language be a reversal in efforts toward facilitating communication?

A: No, I feel it would be a definite advance. Symbolic notation has proven to be vastly superior to narrative as a communication medium. To illustrate, I believe we can point to the experience of those who've done more research in problem statement language than anyone else: mathematicians. Logicians and mathematicians have spent 3,000 years in language development, and you won't find one defining, solving, or even communicating on a problem in English. This is one reason I find it nearly unbelievable that some of the strongest supporters of narrative-based language, and COBOL in particular, are allegedly well-grounded in mathematics and its principles.

As to the ease of teaching symbolic form, the countless people who've learned and used FORTRAN, as an example, attest to the fact that symbolic is no harder to teach than narrative; contrarily, most I've heard who've been exposed to both, feel that FORTRAN is vastly easier to learn than COBOL.

Q: Is there any correlation between COBOL development and the lack of commercial implementation of ALGOL?

A: I don't see a direct correlation, except perhaps one having to do with the fact that, if ALGOL had been well specified and subsequently expanded in scope to include a facility for commercial problem statement, COBOL might never have entered the picture; however, this is true of other languages as well. Unfortunately, this was not the case. There are many, by the way, who believe that either ALGOL or FORTRAN is a good base for expansion to handle business problems, and some good students of this area even believe that one or both are now better than COBOL for this purpose.

In most directions, the two cases are quite different. COBOL entered the picture in a near vacuum, where hardly anyone was committed to the use of a given data processing language. ALGOL, on the other hand, faced the de facto standard, FORTRAN, and the pragmatics of the situation were and are such that popularity is not in the cards for ALGOL: no computer user who has a large library of FORTRAN programs, or who has access to the huge collective FORTRAN library, can justify the cost of conversion to a system which most are not even sure is better. Retraining of programmers is a significant factor, too. And, since user demand isn't present, most manufacturers think of ALGOL as a luxury or nice experiment, while they must regard FORTRAN as the sine qua non in the marketplace. The effect is cyclic and cumulative.

Also, COBOL had the benefit, if it is such, of sponsorship by government edict, while ALGOL didn't.

in [ACM] CACM 5(05) May 1962

in Goodman, Richard (ed) "Annual Review in Automatic Programming" (3) 1963 Pergamon Press, Oxford

in The Computer Journal 7(2) July 1964

The first large scale electronic computer available commercially was the Univac I (1951). The first Automatic Programming group associated with a commercial computer effort was the group set up by Dr. Grace Hopper in what was then the Eckert-Mauchly Computer Corp., and which later became the Univac Division of Sperry Rand. The Univac had been designed so as to be relatively easy to program in its own code. It was a decimal, alphanumeric machine, with mnemonic instructions that were easy to remember and use. The 12 character word made scaling of many fixed-point calculations fairly easy. It was not always easy to see the advantage of assembly systems and compilers that were often slow and clumsy on a machine with only 12,000 characters of high speed storage (200 microseconds average access time per 12 character word). In spite of occasional setbacks, Dr. Hopper persevered in her belief that programming should and would be done in problem-oriented languages. Her group embarked on the development of a whole series of languages, of which the most used was probably A2, a compiler that provided a three address floating point system by compiling calls on floating point subroutines stored in main memory. The Algebraic Translator AT3 (Math-Matic) contributed a number of ideas to Algol and other compiler efforts, but its own usefulness was very much limited by the fact that Univac had become obsolete as a scientific computer before AT3 was finished. The B0 (Flow-Matic) compiler was one of the major influences on the COBOL language development which will be discussed at greater length later. The first sort generators were produced by the Univac programming group in 1951. They also produced what was probably the first large scale symbol manipulation program, a program that performed differentiation of formulas submitted to it in symbolic form.

in [AFIPS JCC 25] Proceedings of the 1964 Spring Joint Computer Conference SJCC 1964

in ACM Computing Reviews 5(06) November-December 1964

An important step in artificial language development centered around the idea that it is desirable to be able to exchange computer programs between different computer labs, or at least between programmers, on a universal level. In 1958, after much work, a committee representing an active European computer organization, GAMM, and a United States computer organization, ACM, published a report (updated two years later) on an algebraic language called ALGOL. The language was designed to be a vehicle for expressing the processes of scientific and engineering calculations of numerical analysis. Equal stress was placed on man-to-man and man-to-machine communication. It attempted to specify a language which included those features of algebraic languages on which it was reasonable to expect a wide range of agreement, and to obtain a language that was technically sound. In this respect, ALGOL set an important precedent in language definition by presenting a rigorous definition of its syntax.

ALGOL compilers have also been written for many different computers. It is very popular among university and mathematically oriented computer people, especially in Western Europe. For some time in the United States, it will remain second to FORTRAN, with FORTRAN becoming more and more like ALGOL.

The largest user of data-processing equipment is the United States Government. Prodded in part by a recognition of the tremendous programming investment, and in part by the suggestion that a common language would result only if an active sponsor supported it, the Defense Department brought together representatives of the major manufacturers and users of data-processing equipment to discuss the problems associated with the lack of standard programming languages in the data processing area. This was the start of the Conference on Data Systems Languages that went on to produce COBOL, the common business-oriented language. COBOL is a subset of normal English suitable for expressing the solution to business data processing problems. The language is now implemented in various forms on every commercial computer.

In addition to popular languages like FORTRAN and ALGOL, we have

some languages used perhaps by only one computing group such as FLOCO,

IVY, MADCAP and COLASL; languages intended for student problems, a

sophisticated one like MAD, others like BALGOL, CORC, PUFFT and various

versions of university implemented ALGOL compilers; business languages in addition

to COBOL like FACT, COMTRAN and UNICODE; assembly (machine)

languages for every computer such as FAP, TAC, USE, COMPASS; languages to simplify problem solving in "artificial intelligence," such as the so-called list

processing languages IPL V, LISP 1.5, SLIP and a more recent one NU SPEAK;

string manipulation languages to simplify the manipulation of symbols rather

than numeric data like COMIT, SHADOW and SNOBOL; languages for

command and control problems like JOVIAL and NELIAC; languages to simplify

doing symbolic algebra by computer such as ALPAK and FORMAC;

a proposed new programming language tentatively titled NPL; and many,

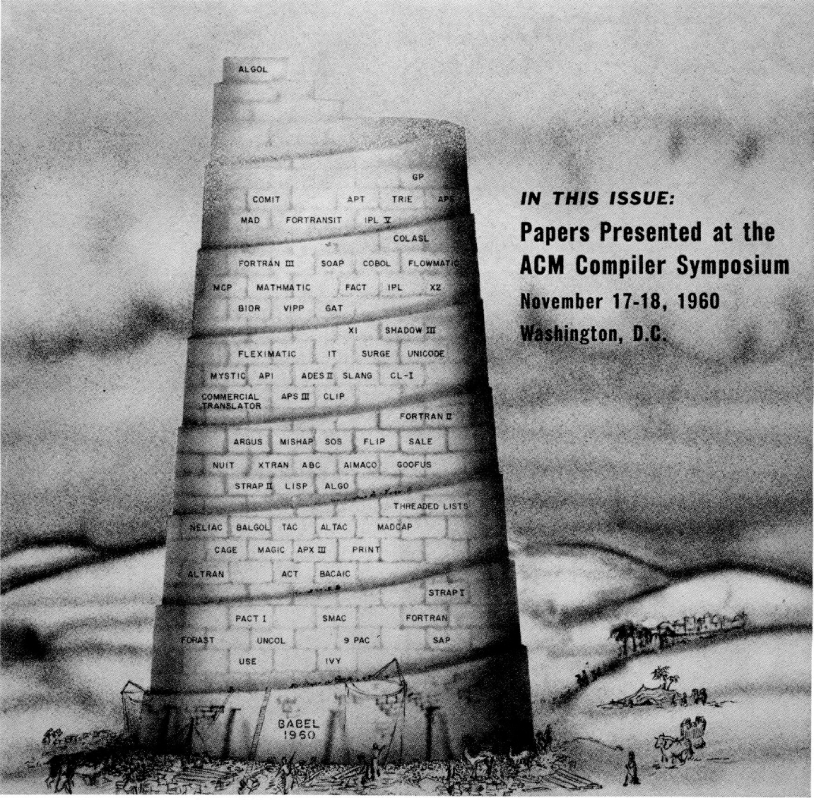

many, more. A veritable tower of BABEL!

in ACM Computing Reviews 5(06) November-December 1964

in Computers & Automation 16(6) June 1967

The major data processing languages (e.g. COBOL, NEBULA) recognise the importance of data definition by having a distinct data division. Recent language development lays even greater emphasis on data structure specification and BCL (Hendry, 1966; Hendry and Mohan, 1968) could be described as 'structure oriented'.

The systems methodology proposals discussed in the foregoing all present their data dictionary as an alphabetic list of data element names together with field requirements and miscellaneous information. They do not include group or structure names in the dictionary nor provide formal facilities for group naming and specification. The data divisions of COBOL and NEBULA, on the other hand, emphasise structure and display it clearly. BCL does not attempt to display structure but specifies it precisely in a very simple way. Notation of the BCL type is adopted in the suggestions in this paper. Information conveyed in this notation is processed to provide data dictionary documentation, the self-consistency or otherwise of the information being determined during processing. Amending and correcting facilities are provided to alter and improve the documentation. It is suggested that the processing involved should be a computer function.

Attributes of a data dictionary: programming requirements

As stated above, a data dictionary provides working information for implementation. What does this requirement involve?

First, it must specify all data elements involved giving a useful and meaningful name to each for human communication purposes. A field specification for each element or adequate information to determine one is required, together with allowable ranges for numerical items and sets of allowable values for non-numerical items. Secondly, it should provide information on the grouping and structuring of the data, since this is often a natural way of defining records within a programming scheme. As with elements, sensible and meaningful names are required for groups and structures. A third requirement is information on variable and optional occurrence of data. For example, in an input structure groups or elements occurring optionally or a variable number of times must be so specified. With variable occurrence, information is required on variability likely to be expected in practice, since decisions may be necessary on whether to use fixed or variable length records.

Data names which are clearly meaningful frequently prove rather cumbersome for programming. A fairly common technique of avoiding this is to assign simple codes as alternatives to names to give the required brevity. We suggest that a data dictionary should define such codes.
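The requirements listed above (named elements with field specifications and permissible values, named groups, optional or variable occurrence, and short codes) can be pictured as a pair of record types. The following is a minimal Python sketch only; the class names, the COBOL-style picture strings such as `9(5)`, and the duplicate-code check are illustrative assumptions, not a representation proposed in the paper.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List, Optional, Tuple, Union

@dataclass
class ElementEntry:
    """A data element: meaningful name, short code and field specification (hypothetical layout)."""
    name: str                                        # meaningful name for human communication
    code: str                                        # brief alternative to the name, for programming
    picture: str                                     # field specification, e.g. "9(5)" (assumed COBOL-style)
    value_range: Optional[Tuple[int, int]] = None    # allowable range for numerical items
    allowed_values: Optional[FrozenSet[str]] = None  # allowable values for non-numerical items

    def accepts(self, value: Union[int, str]) -> bool:
        """Check a value against whichever constraint has been recorded."""
        if self.value_range is not None:
            low, high = self.value_range
            return isinstance(value, int) and low <= value <= high
        if self.allowed_values is not None:
            return value in self.allowed_values
        return True  # no constraint recorded yet

@dataclass
class GroupEntry:
    """A group or structure, its members, and how often it may occur."""
    name: str
    code: str
    members: List[str]   # names of sub-groups or elements
    min_occurs: int = 1  # 0 means the group is optional
    max_occurs: int = 1  # larger than min_occurs means variable occurrence

    @property
    def is_variable(self) -> bool:
        return self.min_occurs != self.max_occurs

def code_index(entries: List[Union[ElementEntry, GroupEntry]]) -> Dict[str, str]:
    """Map each short code back to its full name, rejecting a code used twice."""
    index: Dict[str, str] = {}
    for entry in entries:
        if entry.code in index:
            raise ValueError(f"code {entry.code} used for both {index[entry.code]} and {entry.name}")
        index[entry.code] = entry.name
    return index
```

For example, `GroupEntry("ORDER-LINE", "OL", ["ORDER-QUANTITY"], max_occurs=20)` would describe a group that may repeat up to twenty times, which is the kind of variability that bears on the choice between fixed and variable length records.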

Data dictionary construction during analysis and design

Data definition in programming requires individual data elements to be identified and defined before groups and structures; there is definition 'upwards' from the elements. This is so even where the notation (e.g. COBOL) is apparently 'downwards'. It is necessary for program generation but implies that formalised documentation and use of the computer's checking capacity must wait on all detail being specified.
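One way to see why program generation forces definition upwards is to compute the storage length of a record: a group's length is the sum of its members' lengths, so it cannot be known until every element beneath it has a field specification. A minimal Python sketch, with hypothetical names (`record_length`, `groups`, `sizes`) and field sizes treated as plain character counts:

```python
from typing import Dict, List

def record_length(name: str, groups: Dict[str, List[str]], sizes: Dict[str, int]) -> int:
    """Compute a record's length bottom-up from its elements.

    Raises KeyError for the first name that has neither a group definition
    nor a field size, i.e. detail still missing before a program can be generated.
    """
    if name in groups:
        return sum(record_length(member, groups, sizes) for member in groups[name])
    if name in sizes:
        return sizes[name]
    raise KeyError(f"{name} has no field specification yet")
```

With `groups = {"A": ["B", "C", "D"], "B": ["X", "Y"]}` and sizes of 5, 3, 10 and 2 for X, Y, C and D, the length of A is 20; remove any one of those sizes and the calculation for A cannot be completed.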

Data specification is 'from the top down', that is broad groups and structures are defined first and given names, then sub-groups and sub-structures and so on down to the individual elements; this is the natural approach of systems analysis. BCL has a simple notation for such a method of working and groups and structures are defined by statements of the type

A is (B, C, D)

B is (X, Y), etc.

This notation corresponds to the natural approach of systems analysis. In BCL the above definition of A is only valid if B, C and D are already defined. Use of this type of notation for analysis and design requires this restriction to be relaxed, since introduction of data names without a precise definition must be allowed. There will, of course, be a general idea of what such a name signifies but there must be freedom to leave precise specification to later.

In the example above, A and B are defined as group names but C, D, X and Y remain undefined. Subsequently they may be defined as group names or specified as data elements by giving a field definition, information on permissible values, uniqueness, etc. There is an advantage in not requiring field specifications at the time names are introduced, as is usually required in programming languages. Names not defined as groups or given a field definition represent data about which further information must be provided.
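The relaxed rule can be sketched in a few lines: accept group statements in any order, and report every name that is neither a group nor an element with a field definition as outstanding work. This is a minimal Python sketch; the statement syntax is simplified from the `A is (B, C, D)` examples (actual BCL notation is richer), and `parse_groups` and `outstanding` are hypothetical names.

```python
import re
from typing import Dict, List, Set

# Simplified form of statements such as "A is (B, C, D)"; names are upper-case words with digits or hyphens.
STATEMENT = re.compile(r"^\s*([A-Z][A-Z0-9-]*)\s+is\s+\((.*)\)\s*$")

def parse_groups(lines: List[str]) -> Dict[str, List[str]]:
    """Parse group statements; members need not be defined yet."""
    groups: Dict[str, List[str]] = {}
    for line in lines:
        match = STATEMENT.match(line)
        if not match:
            raise ValueError(f"cannot parse: {line!r}")
        name, body = match.groups()
        groups[name] = [part.strip() for part in body.split(",") if part.strip()]
    return groups

def outstanding(groups: Dict[str, List[str]], elements: Set[str]) -> Set[str]:
    """Names used in some group but neither defined as a group nor given a field definition."""
    referenced = {member for members in groups.values() for member in members}
    return referenced - set(groups) - elements
```

Applied to the two statements in the text, `outstanding` reports C, D, X and Y; once X and Y receive field definitions, only C and D remain.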

A large number of definitions of the type discussed, together with field specifications, information on variability, optionality, etc., would not be very readable even though precise in information content. In addition to proposing formalised ways of giving these definitions, it is suggested they should be processed by computer, vetted for errors and used to create a data dictionary file. From this the data dictionary documentation will be obtained. Thus it is explicitly recognised that part of systems analysis and design is itself data processing and that a computer can be used advantageously in this. An important feature is that the dictionary file is created at an early stage and information is added continuously as the work proceeds. A display is always available of the 'state of the work so far' with processing and clarification as it proceeds. Much of the formal documentation chore thus becomes a computer function. Work outstanding is indicated in the dictionary documentation and, where a team is involved, there is automatic amalgamation of different members' work, each receiving up-to-date documentation of the whole project in standard form.
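The automatic amalgamation of different members' work can be sketched as a merge that vets for one kind of inconsistency: the same name given two different definitions. A minimal Python sketch under that narrow assumption; `merge_work` and its conflict rule (the first definition is kept and later conflicting ones are reported) are illustrative choices, not the paper's procedure.

```python
from typing import Dict, List, Tuple

Definitions = Dict[str, List[str]]  # group name -> member names

def merge_work(*members_work: Definitions) -> Tuple[Definitions, List[str]]:
    """Amalgamate several analysts' group definitions into one dictionary.

    A name defined identically by two people is accepted once; a name
    defined differently is reported as an error for the team to resolve.
    """
    merged: Definitions = {}
    errors: List[str] = []
    for work in members_work:
        for name, members in work.items():
            if name in merged and merged[name] != members:
                errors.append(f"{name}: {merged[name]} vs {members}")
            else:
                merged.setdefault(name, members)
    return merged, errors
```

Keeping the first definition and listing the conflict, rather than silently overwriting it, mirrors the idea that errors are vetted and returned to the team instead of being resolved by the machine.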

in The Computer Journal 12(1) 1969

[A list of 321 programming languages, indicating the computer manufacturers on whose machines each language is known to be used. Register of the 74 computer companies; ranking of the programming languages by the number of manufacturers on whose machines the language is implemented; ranking of the manufacturers by the number of programming languages used.]

in The Computer Journal 12(1) 1969

in [ACM] CACM 15(06) (June 1972)

in Computers & Automation 21(6B), 30 Aug 1972

in ACM Computing Reviews 15(04) April 1974

The exact number of all the programming languages still in use, and those which are no longer used, is unknown. Zemanek calls the abundance of programming languages and their many dialects a "language Babel". When a new programming language is developed, only its name is known at first and it takes a while before publications about it appear. For some languages, the only relevant literature stays inside the individual companies; some are reported on in papers and magazines; and only a few, such as ALGOL, BASIC, COBOL, FORTRAN, and PL/1, become known to a wider public through various text- and handbooks. The situation surrounding the application of these languages in many computer centers is a similar one.

There are differing opinions on the concept "programming languages". What is called a programming language by some may be termed a program, a processor, or a generator by others. Since there are no sharp borderlines in the field of programming languages, works were considered here which deal with machine languages, assemblers, autocoders, syntax and compilers, processors and generators, as well as with general higher programming languages.

The bibliography contains some 2,700 titles of books, magazines and essays for around 300 programming languages. However, as shown by the "Overview of Existing Programming Languages", there are more than 300 such languages. The "Overview" lists a total of 676 programming languages, but this is certainly incomplete. One author has already announced the "next 700 programming languages"; it is to be hoped the many users may be spared such a great variety for reasons of compatibility. The graphic representations (illustrations 1 & 2) show the development and proportion of the most widely-used programming languages, as measured by the number of publications listed here and by the number of computer manufacturers and software firms who have implemented the language in question. The illustrations show FORTRAN to be in the lead at the present time. PL/1 is advancing rapidly, although PL/1 compilers are not yet seen very often outside of IBM.

Some experts believe PL/1 will replace even the widely-used languages such as FORTRAN, COBOL, and ALGOL.(4) If this does occur, it will surely take some time, as shown by the chronological diagram (illustration 2).

It would be desirable from the user's point of view to reduce this language confusion down to the most advantageous languages. Those languages still maintained should incorporate the special facets and advantages of the otherwise superfluous languages. Obviously such demands are not in the interests of computer production firms, especially when one considers that a FORTRAN program can be executed on nearly all third-generation computers.

The titles in this bibliography are organized alphabetically according to programming language, and within a language chronologically and again alphabetically within a given year. Preceding the first programming language in the alphabet, literature is listed on several languages, as are general papers on programming languages and on the theory of formal languages (AAA).
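The arrangement described above amounts to a compound sort key. A minimal Python sketch, assuming a hypothetical `Title` record; it places the general section (AAA) first explicitly rather than relying on alphabetical order, since a language name such as A-0 would otherwise sort ahead of "AAA" in plain character order.

```python
from typing import List, NamedTuple

class Title(NamedTuple):
    language: str  # e.g. "COBOL"; "AAA" marks general and multi-language works
    year: int
    title: str

def bibliography_order(titles: List[Title]) -> List[Title]:
    """General section first, then by language, chronologically, then alphabetically within a year."""
    return sorted(titles, key=lambda t: (t.language != "AAA", t.language, t.year, t.title))
```

Because Python compares tuples element by element, the boolean in the first position (False for AAA) is enough to move the general section to the front without disturbing the ordering of the other languages.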

As far as possible, most of the titles are based on autopsy (personal inspection of the item). However, the bibliographical description of some titles will not satisfy bibliographic documentation standards, since they are based on inaccurate information in various sources. Translated titles whose original titles could not be found through bibliographical research were not included. In view of the fact that many libraries do not have the quoted papers, all magazine essays should have been listed with the volume, the year, issue number and the complete number of pages (e.g. pp. 721-783), so that interlibrary loans could take place with fast reader service. Unfortunately, these data were not always found.

It is hoped that this bibliography will help the electronic data processing expert, and those who wish to select the appropriate programming language from the many available, to find a way through the language Babel.

We wish to offer special thanks to Mr. Klaus G. Saur and the staff of Verlag Dokumentation for their publishing work.

Graz / Austria, May, 1973

in ACM Computing Reviews 15(04) April 1974

in Computer Languages 2(4)

in SIGPLAN Notices 13(11) Nov 1978

in SIGPLAN Notices 14(04) April 1979 including The first ACM SIGPLAN conference on History of programming languages (HOPL) Los Angeles, CA, June 1-3, 1978

The author's abstract succinctly describes the paper's contents:

This paper discusses the early history of COBOL, starting with the May 1959 meeting in the Pentagon which established the Short Range Committee which defined the initial version of COBOL, and continuing through the creation of COBOL 61. The paper gives a detailed description of the committee activities leading to the publication of the first official version, namely COBOL 60. The major inputs to COBOL are discussed, and there is also a description of how and why some of the technical decisions in COBOL were made. Finally, there is a brief "after the fact" evaluation, and some indication of the implication of COBOL on current and future languages.

Jean Sammet has done her usual careful, painstaking, and thorough job in putting together this incisive narration of the events, the resulting conflicts, and their resolution before, during, and after the development of the original COBOL. This reviewer cannot add anything to her article, and would like to conclude by extracting the following from the end of the article:

The main lesson that I think should be learned from the early COBOL development is a nontechnical one--namely that experience with real users and real compilers is desperately needed before "freezing" a set of language specifications. Had we known from the beginning that COBOL would have such a long life, I think many of us would have refused to operate under the time scale that was handed to us. On the other hand the "early freezing" prevented the establishment of dialects, as occurred with FORTRAN; that made the development of the COBOL standard much easier. Another lesson that I think should be learned for languages which are meant to have wide practical usage (as distinguished from theoretical and experimental usage) is that the users must be more involved from the beginning.

I think that the long-range future of COBOL is clearly set, and for very similar reasons to those of FORTRAN--namely the enormous investment in programs and training that already exists. I find it impossible to envision a situation in which most of the data processing users are weaned away from COBOL to another language, regardless of how much better the other language might be.

in ACM Computing Reviews 21(04) April 1980

in IBM Journal of Research and Development, 25(5), September 1981 25th anniversary issue